Artificial Eyes with Emotion and Light Responsive Pupils for Realistic Humanoid Robots

Abstract

1. Introduction

2. The Importance of Eye Contact Interfacing in HRI

3. Human Eye Dilation to Light and Emotion

4. Previous Prototype Robotic Eyes with Dilating Pupils

5. Building on the State-of-the-Art in Robotic Eyes Design

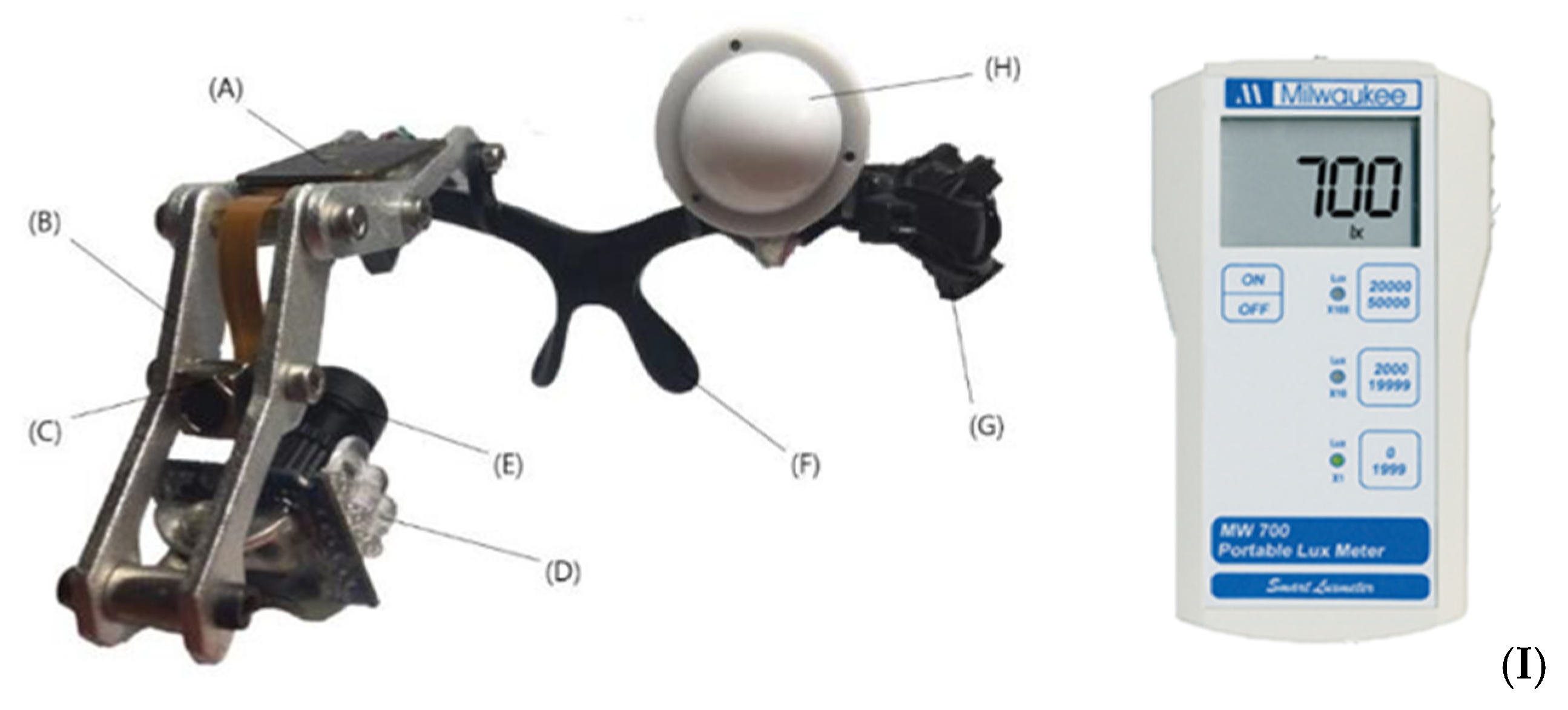

6. Robotic Eye Design

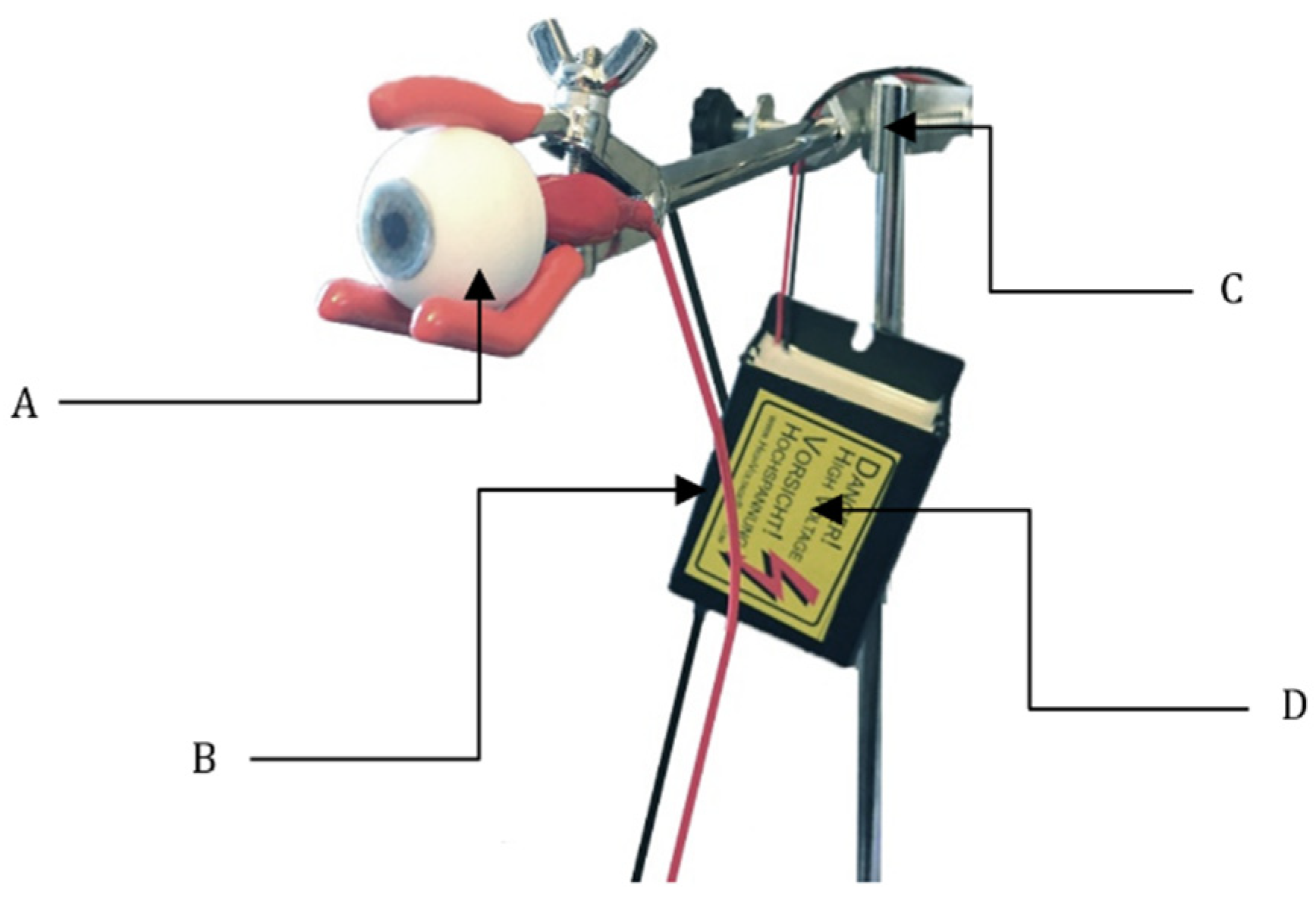

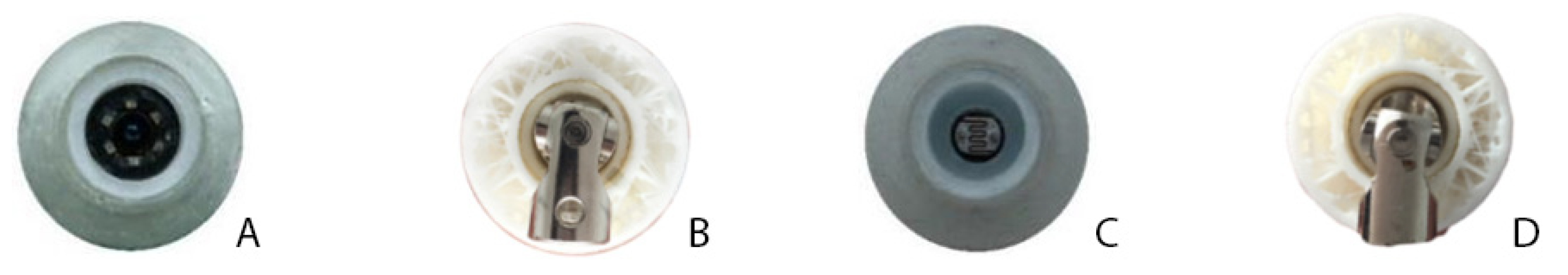

6.1. Integrating Gelatin Iris with Graphene Dielectric Elastomer Actuator

6.2. CAD-Designed 3D Printed Thermoplastic Polyurethane Sclera

6.3. Camera and Photo-Resistor Integration into Robotic Eye

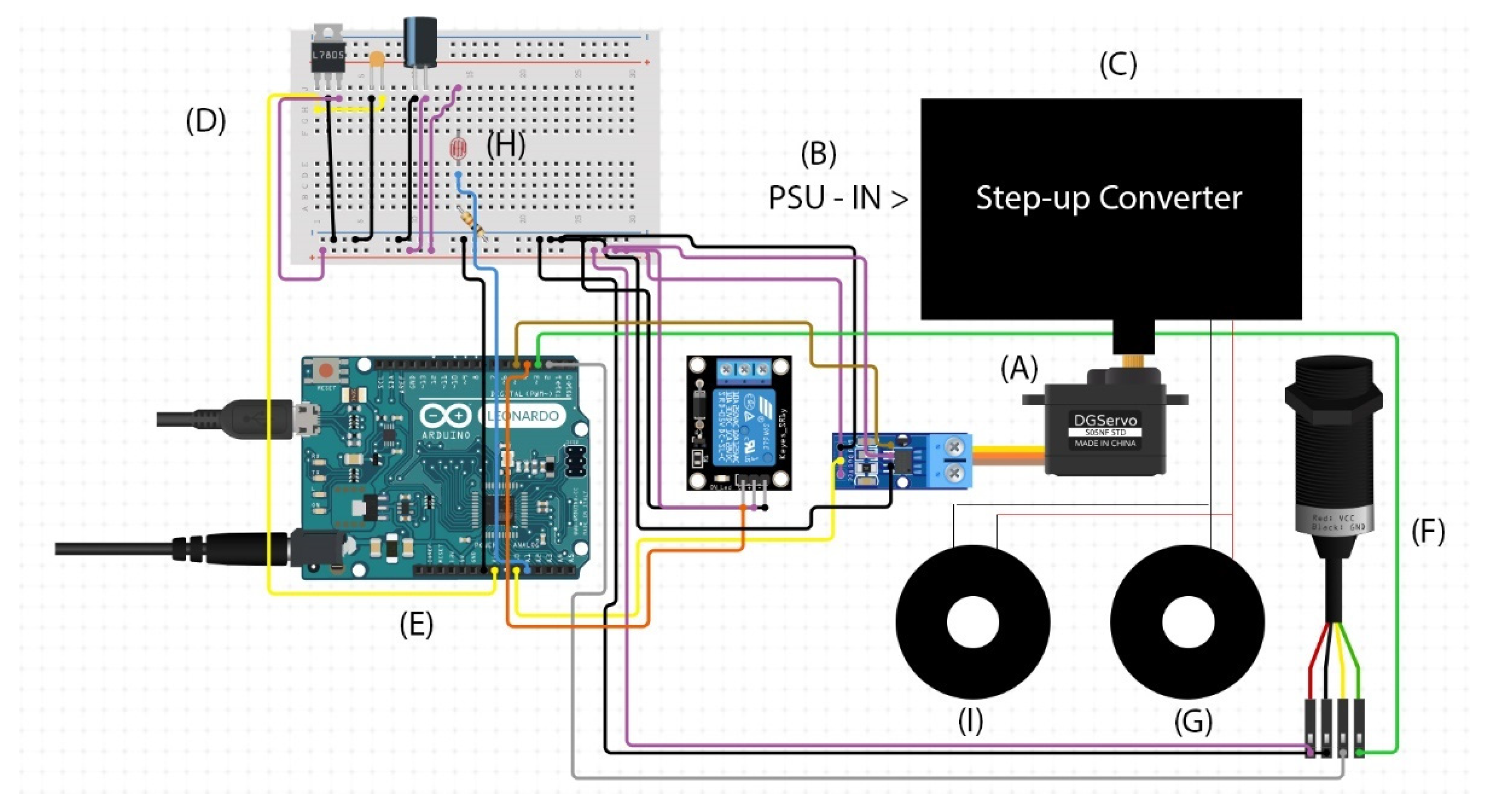

6.4. Control System Hardware and Software Design

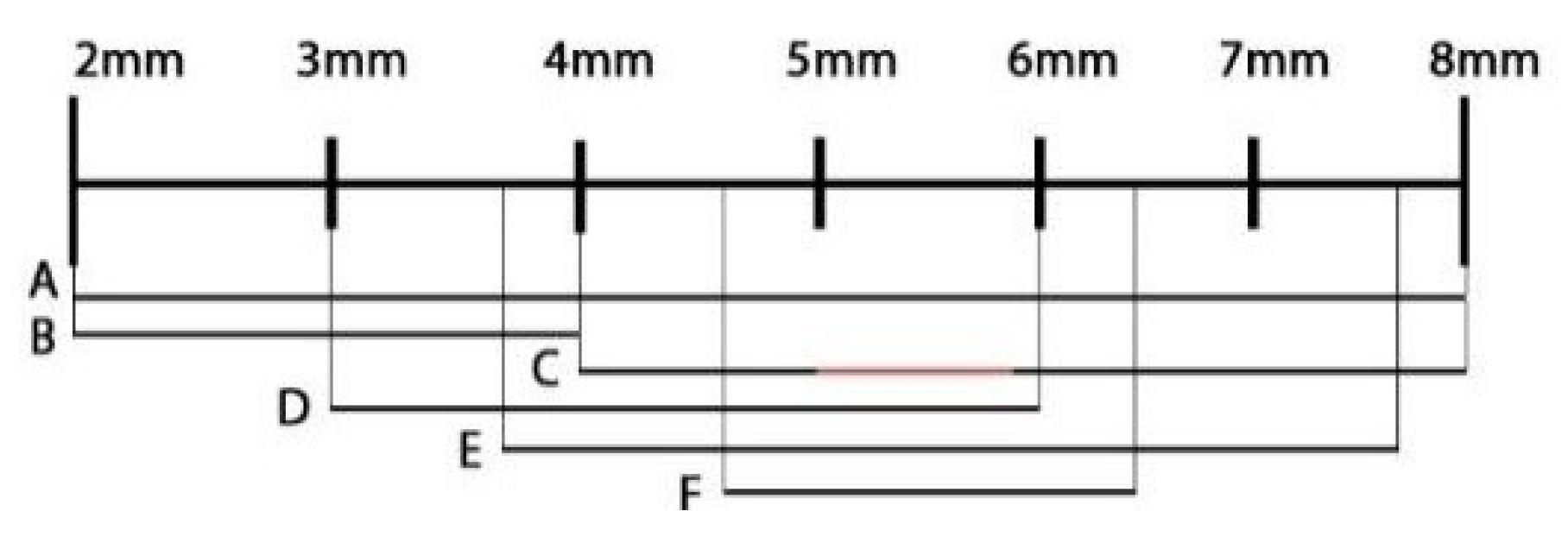

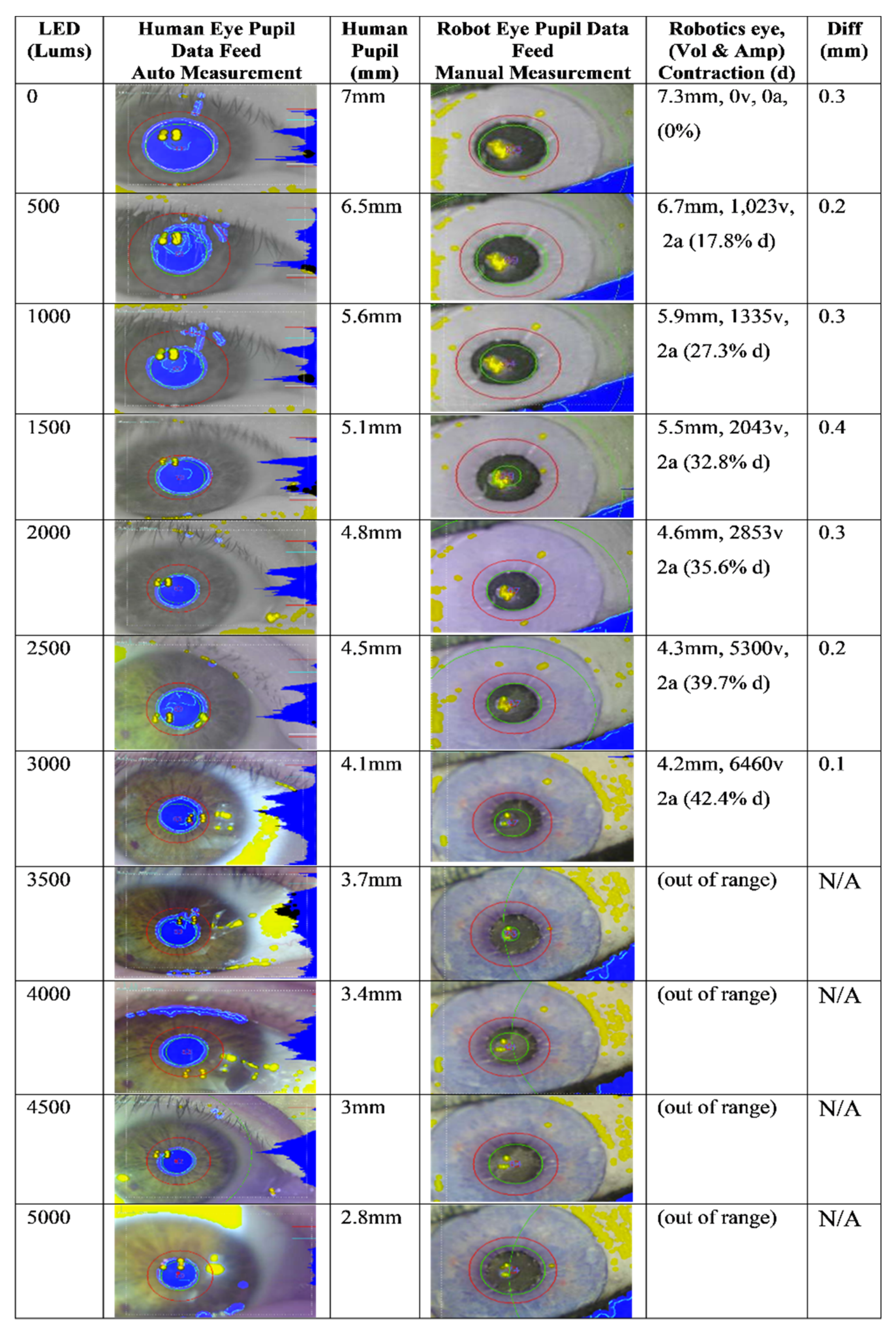

7. Robotic Eye Testing and Calibration

8. Robotic Eye Test Results

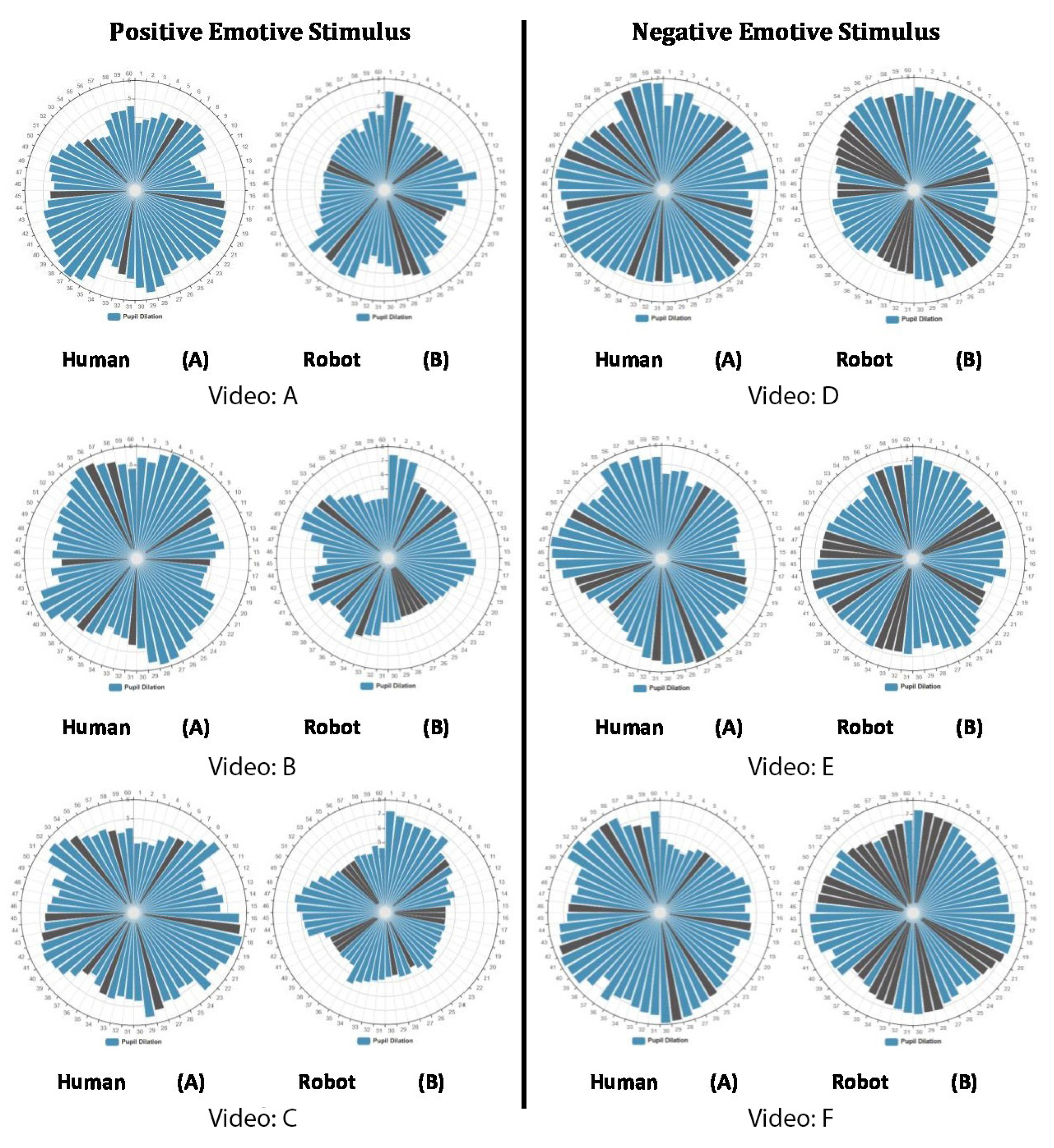

8.1. Results of Positive Video Stimulus Experiment

8.2. Results of Negative Video Stimulus Experiment

9. Analysis of the Light and Emotion Test Results

10. Human-Robot Interaction Experiment

HRI Test Results and Analysis

11. Conclusions

12. Future Work

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Strathearn, C. Artificial Eyes with Emotion and Light Responsive Pupils for Realistic Humanoid Robots. Informatics 2021, 8, 64. https://0-doi-org.brum.beds.ac.uk/10.3390/informatics8040064
