OFCOD: On the Fly Clustering Based Outlier Detection Framework †

Abstract

1. Introduction

2. Proposed Framework

2.1. The Offline Stage

2.1.1. Data Preprocessing and Parameters Initialization

2.1.2. Launching Clustering

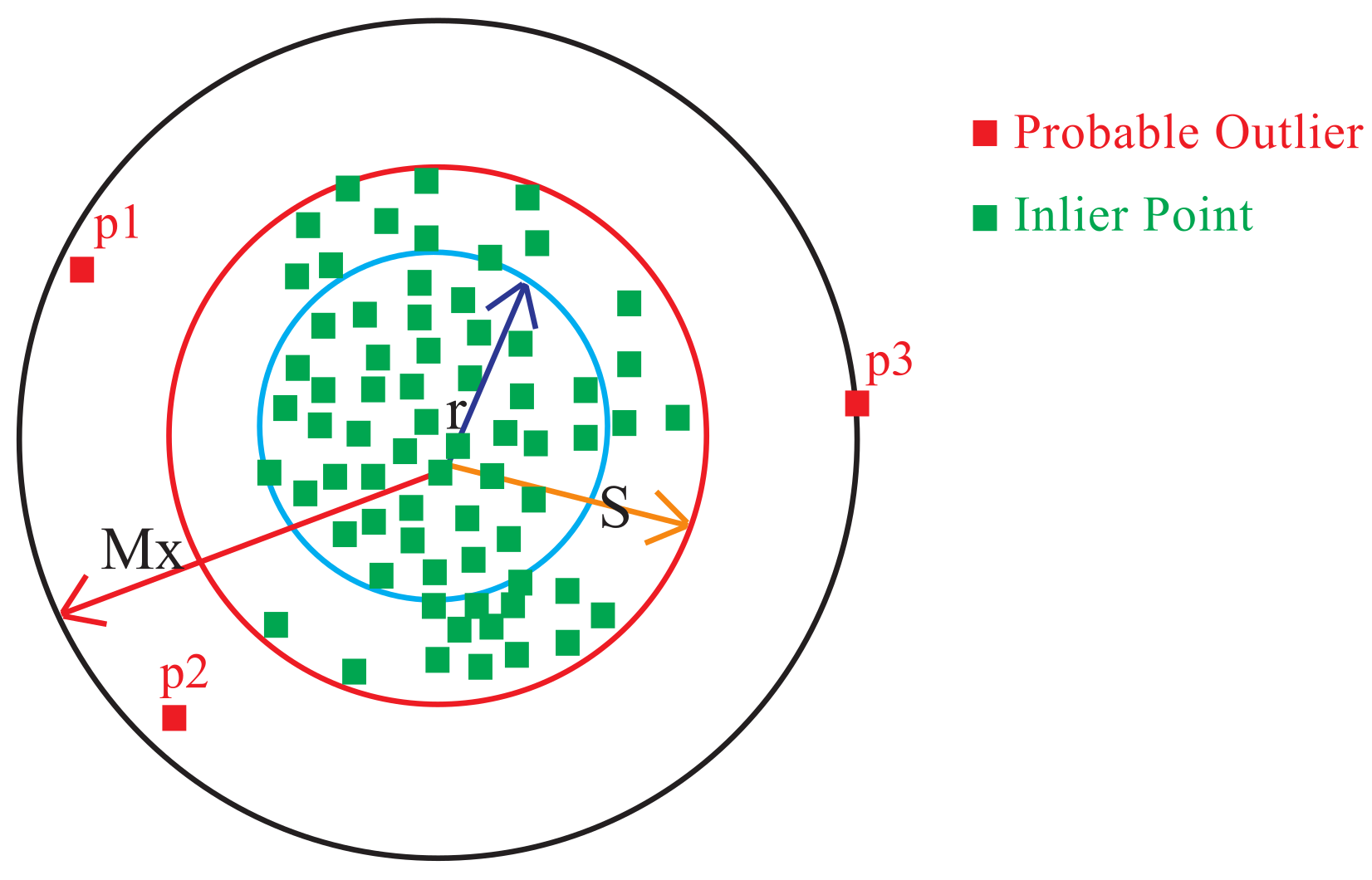

2.1.3. Finding Outlier Clusters

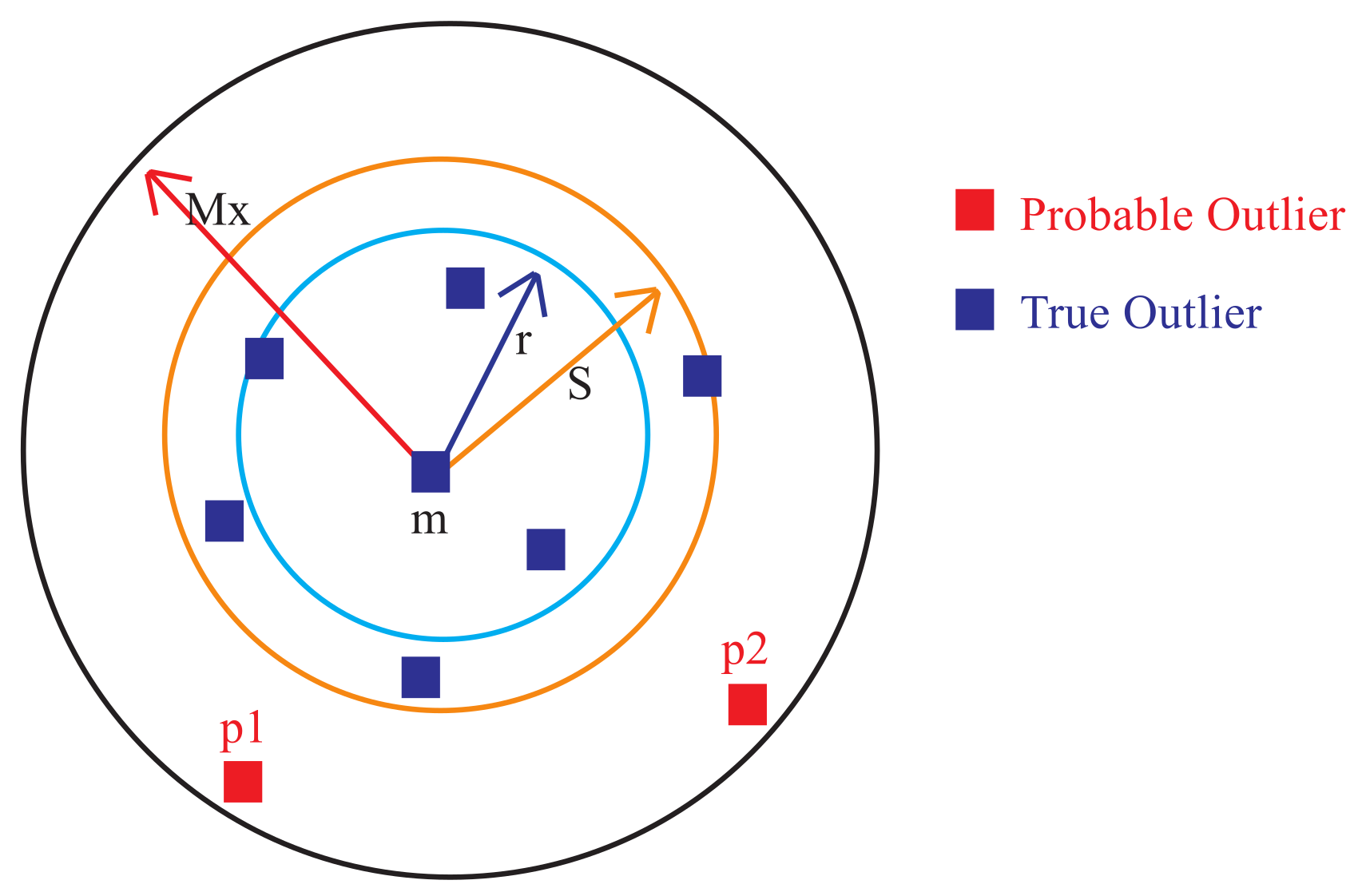

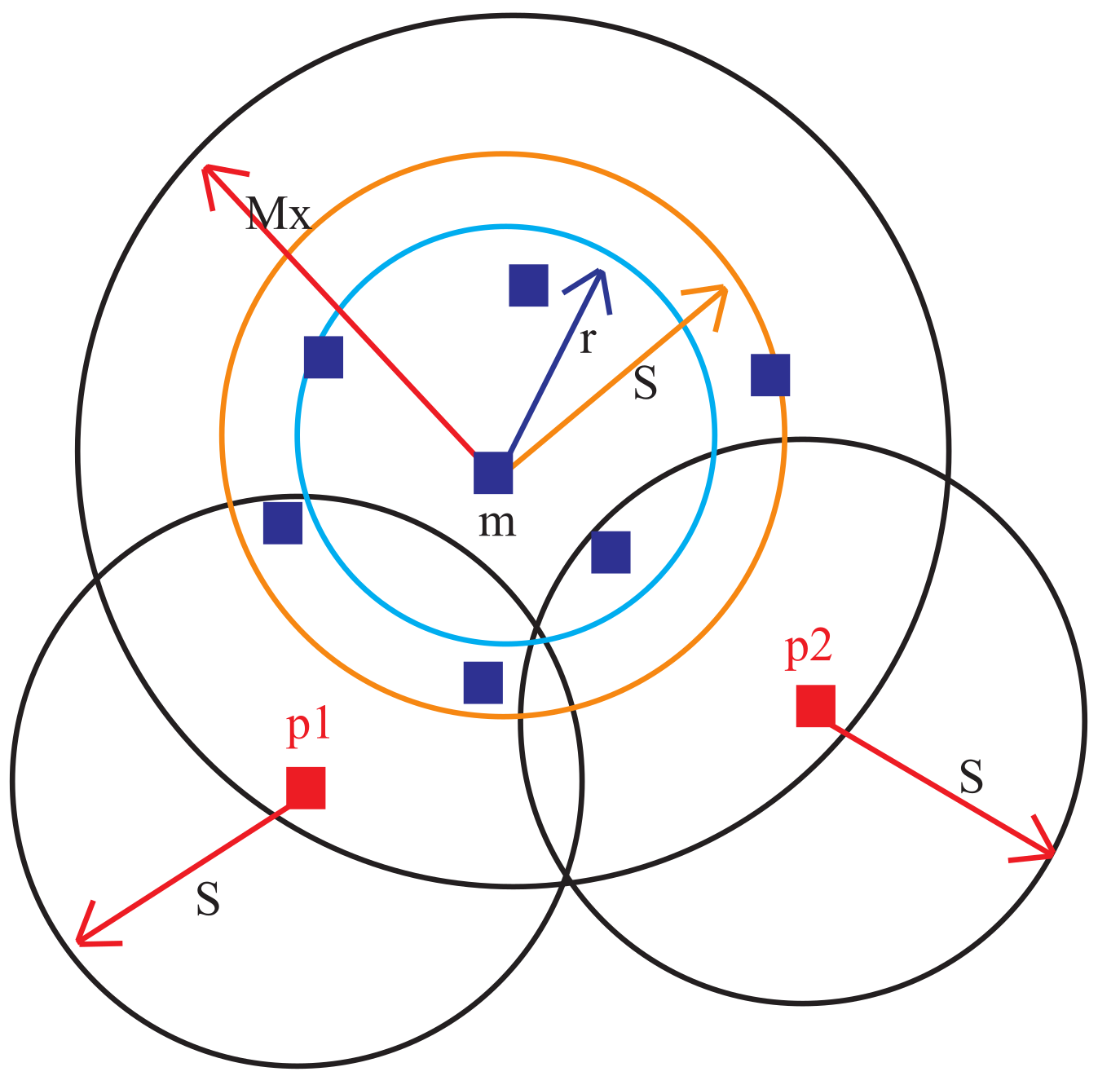

2.1.4. Identify Probable Outliers inside Cluster

- Slice the distance from the medoid M of the cluster to the border of the cluster into a set of regions of equal width.

- Calculate the average number of points per slice over all slices inside the cluster radius region, measured from the medoid; that is, the total number of points in those slices divided by the number of slices inside the radius region.

- Find the number of points in each slicing region located outside the cluster radius.

- Compare this number with the average and continue incrementing b until the stopping condition is met.

- Finally, .
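The slicing and counting steps above can be illustrated with a minimal sketch. It makes several assumptions that the text does not state: Euclidean distance is used, the border distance and the cluster radius are supplied by the caller, and all function names are hypothetical. The stopping condition is not modeled because it is not recoverable from the text.

```python
import math


def slice_counts(points, medoid, border_distance, n_slices):
    """Count the points that fall in each equal-width distance slice around the medoid."""
    width = border_distance / n_slices
    counts = [0] * n_slices
    for p in points:
        d = math.dist(p, medoid)  # assumption: Euclidean distance
        # Points lying on (or beyond) the border are clamped into the last slice.
        idx = min(int(d / width), n_slices - 1)
        counts[idx] += 1
    return counts, width


def average_inside_radius(counts, width, radius):
    """Average number of points per slice over the slices inside the radius."""
    n_inside = min(max(1, int(radius / width)), len(counts))
    return sum(counts[:n_inside]) / n_inside, n_inside


def counts_outside_radius(counts, n_inside):
    """Per-slice point counts for the slices located outside the radius."""
    return counts[n_inside:]


if __name__ == "__main__":
    pts = [(0.2, 0.0), (0.4, 0.0), (0.6, 0.0), (1.5, 0.0), (2.5, 0.0), (3.5, 0.0)]
    counts, width = slice_counts(pts, (0.0, 0.0), border_distance=4.0, n_slices=4)
    avg, n_inside = average_inside_radius(counts, width, radius=2.0)
    print(counts, avg, counts_outside_radius(counts, n_inside))
```

Each outer slice count can then be compared with the inner average, which is the comparison the fourth step describes.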

2.1.5. Assigning Outlier Factor

2.1.6. Removing Outliers from the Dataset

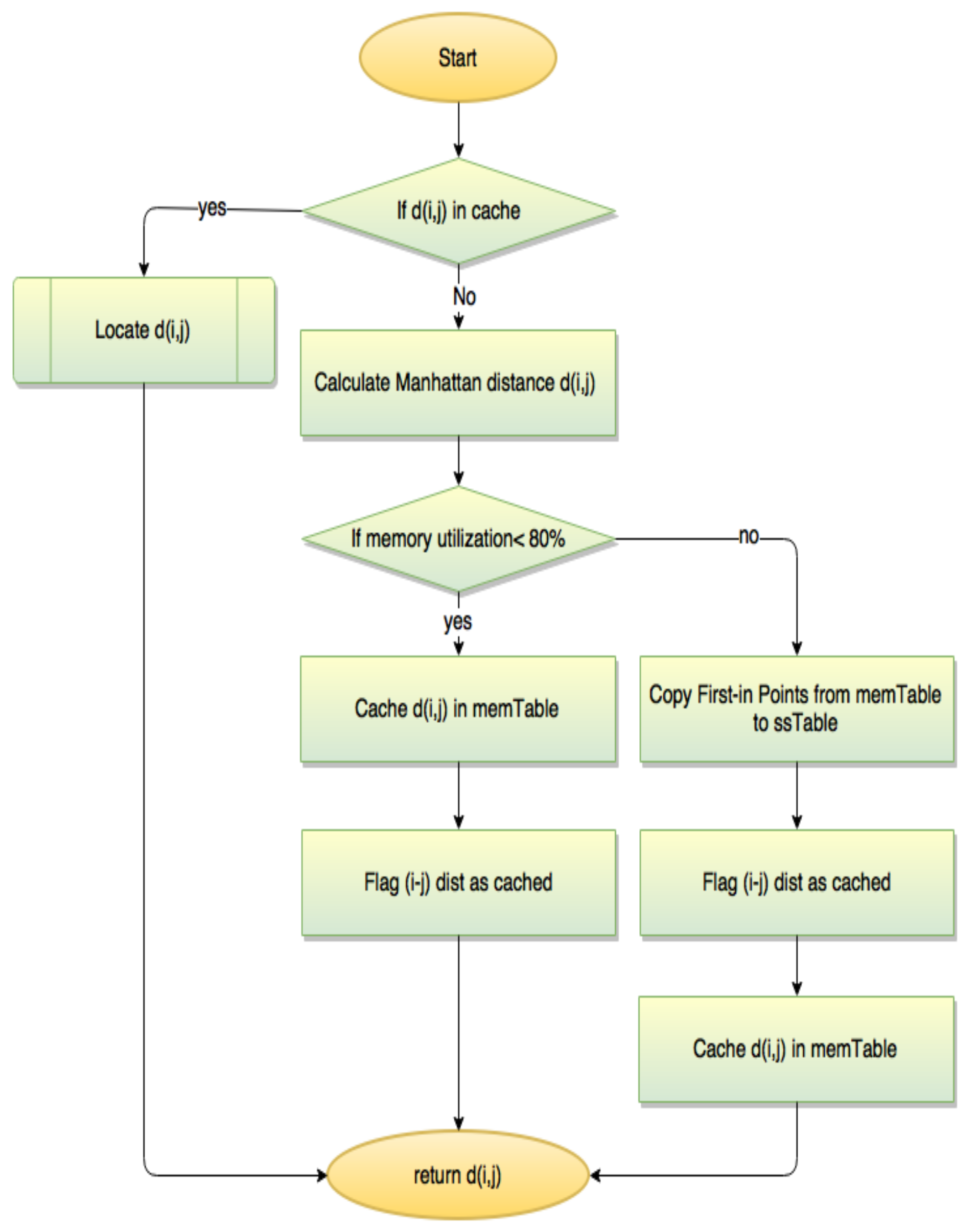

2.2. The Online Stage

- Receiving the query: In this step, the online framework receives the query from the user. The query can be a single point to be checked for outlierness, or a batch of points to be labeled as outliers or inliers. Before the data are passed to the next step, they are preprocessed by a function similar to the one used in the offline stage.

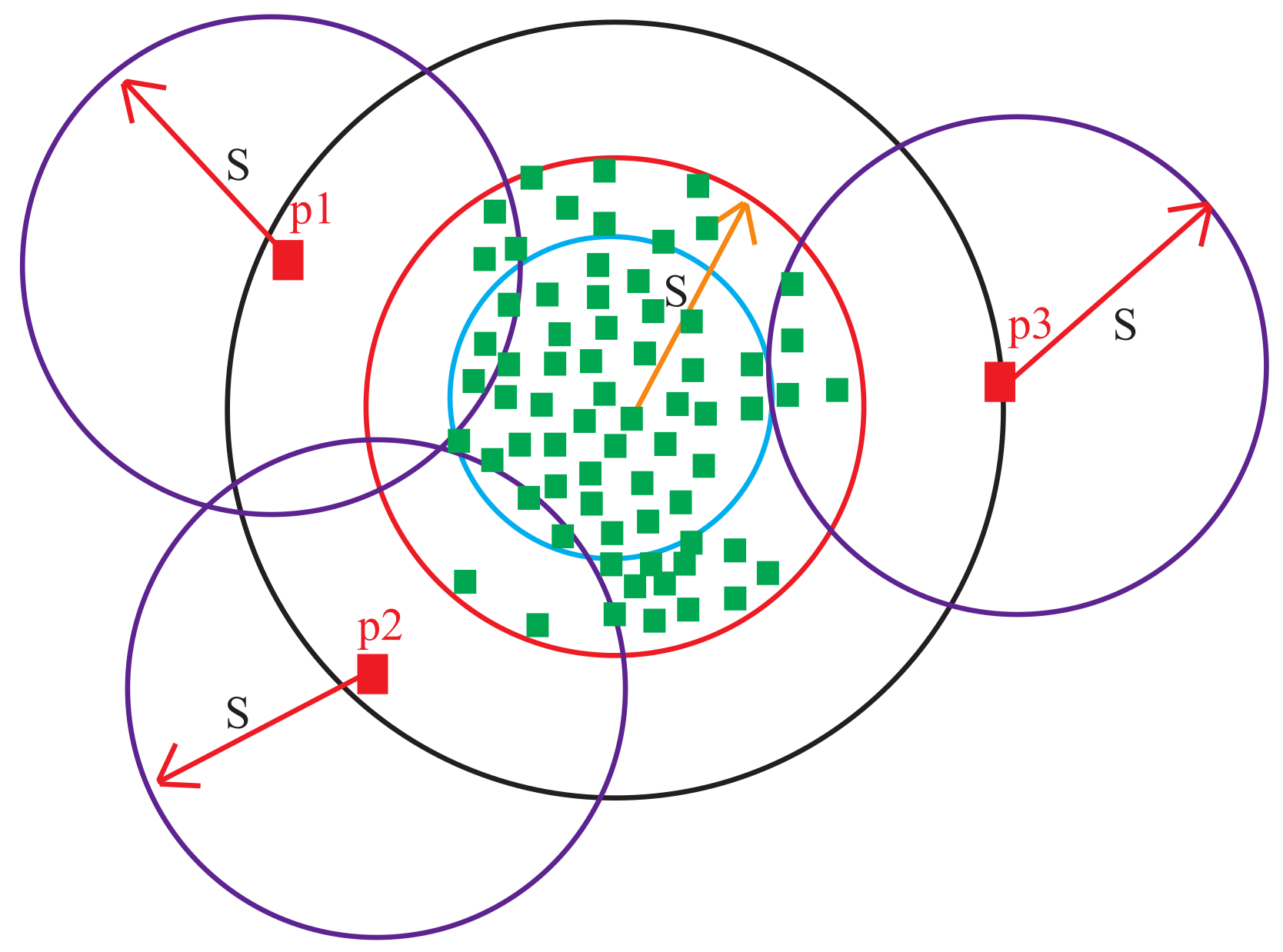

- Similarity calculation: This step uses the set of clusters obtained from the offline stage. The output of the offline stage is a clustered, outlier-free dataset in which each cluster has a medoid that represents the whole cluster. The online stage exploits this relationship (i.e., a medoid fully represents its cluster) and measures the similarity between the test point and every cluster through its medoid. The cosine similarity measure [35] is chosen to calculate the similarity according to the following equation:

  $$\mathrm{sim}(P, M) = \frac{\sum_{i} P_i M_i}{\sqrt{\sum_{i} P_i^{2}}\,\sqrt{\sum_{i} M_i^{2}}}$$

  where P is the point given by the user to check its outlierness, M is the medoid of cluster C, and i is the ith dimension of the point. The resulting similarity ranges from −1 to 1: the value −1 means the two vectors point in exactly opposite directions, 1 means they point in exactly the same direction, and 0 indicates orthogonality. Any value in between indicates intermediate similarity or dissimilarity.

- Classification and assignment: After the similarity between the test point and the clusters has been calculated, the test point is assigned to the cluster that has the highest measured similarity to it and that satisfies Equation (15). The similarity is evaluated for outlier clusters as well as normal clusters, so the test point joins the closest cluster in the feature space. The size of that cluster increases by one point and its medoid must be relocated, which keeps the cluster up to date with new points. The similarity calculation and the assignment greatly reduce the computation time compared with traditional clustering approaches, which must regenerate all clusters to include the new data point.

- Medoids and cluster relocation: Finally, to keep the clusters up to date after the new point is inserted, this step runs in the background to determine the correct center point of the cluster affected by the newly added point. A new medoid is calculated for the cluster to which the test point was assigned by averaging the values of all its points, including the test point. Only the cluster affected by the addition requires recalculation, which reduces the computational time. Alternatively, to avoid repeating the full average calculation for each newly assigned point, the centroid is computed directly from its previous value, so calculating the new medoid is independent of the cluster size. Equation (16) is used for that.

- Outlier alarm system: This system presents the results to the user according to the requested mode: single point mode, batch mode, or test mode. In single point mode, only one point is introduced to the framework and the result is an alert indicating whether the point is an outlier. In batch mode, the framework runs on a set of points and the response is a file that lists every input point with its class (outlier or inlier). Test mode generates four files containing the false positive points, the true positive points, the true negative points, and the false negative points of the test set [36]. These data are used to verify the performance of the framework when both the input set and the original set are class labeled. At the end of the online stage, the tested point is classified as an outlier or inlier, it is assigned to a specific outlier or inlier cluster, and the clusters are updated to be ready for further use. The complexity of the online stage of the proposed framework is O(K), where K is the number of clusters; because K is much smaller than the number of points in the dataset, the framework responds on the fly.
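The similarity, assignment, and relocation steps of the online stage can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the cluster representation (a dictionary with `center`, `size`, and `is_outlier` keys) and the function names are hypothetical, the additional condition of Equation (15) is not modeled, and the incremental update is assumed to be the standard running-mean formula, which may differ from Equation (16).

```python
import math


def cosine_similarity(p, m):
    """Cosine similarity between a test point p and a cluster representative m."""
    dot = sum(a * b for a, b in zip(p, m))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_m = math.sqrt(sum(b * b for b in m))
    if norm_p == 0 or norm_m == 0:
        return 0.0  # assumption: a zero vector is treated as orthogonal
    return dot / (norm_p * norm_m)


def assign_and_update(point, clusters):
    """Assign the point to the most similar cluster and update that cluster only.

    The loop over clusters is the O(K) part of the online stage.
    """
    best = max(
        range(len(clusters)),
        key=lambda k: cosine_similarity(point, clusters[k]["center"]),
    )
    cluster = clusters[best]
    n = cluster["size"]
    # Assumed running-mean update: new = (n * old + point) / (n + 1),
    # so the cost does not depend on the number of points in the cluster.
    cluster["center"] = [
        (n * c + x) / (n + 1) for c, x in zip(cluster["center"], point)
    ]
    cluster["size"] = n + 1
    return best, cluster["is_outlier"]


if __name__ == "__main__":
    clusters = [
        {"center": [1.0, 0.0], "size": 3, "is_outlier": False},
        {"center": [0.0, 1.0], "size": 1, "is_outlier": True},
    ]
    index, is_outlier = assign_and_update([0.0, 3.0], clusters)
    print(index, is_outlier, clusters[index])
```

Only the assigned cluster is modified, which matches the observation that no other cluster needs recalculation when a point is added.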

3. Results and Discussion

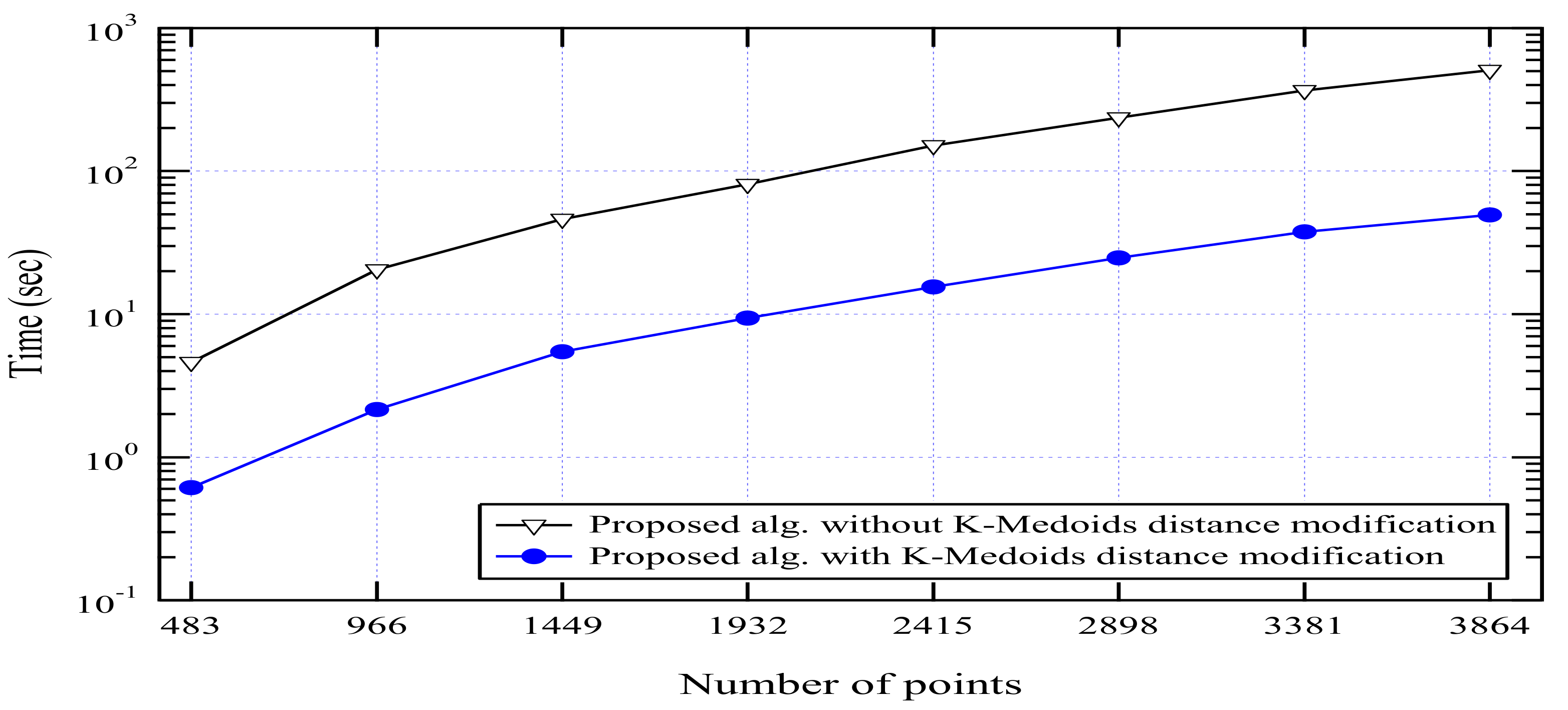

3.1. The Offline Stage of the Proposed Framework

3.2. The Online Stage of the Proposed Framework

3.2.1. The Effect of the Number of Clusters k

3.2.2. The Effect of the Number of Top Outliers Queried

3.2.3. The OFCOD Performance When Varying the Test Set

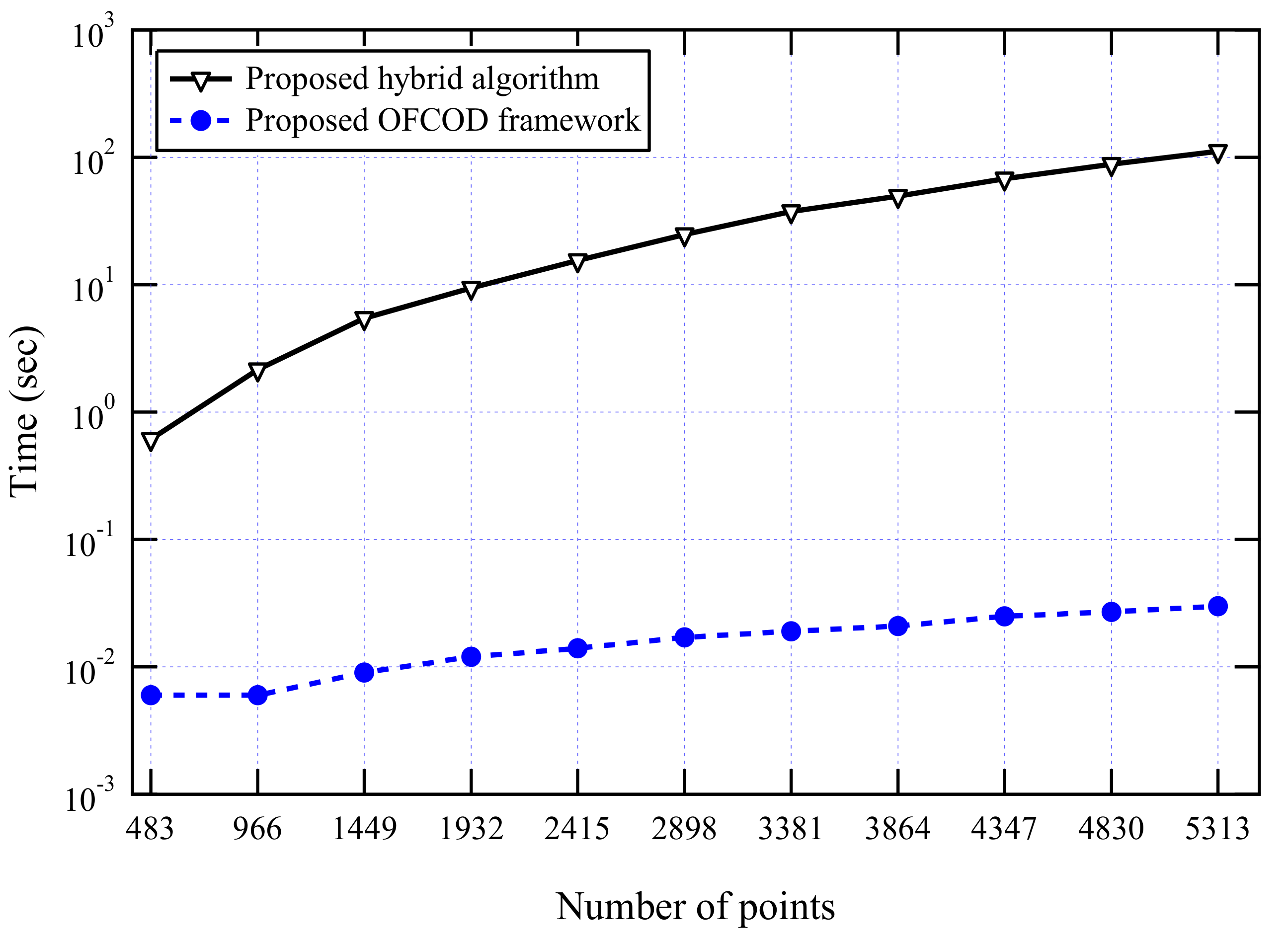

3.2.4. The OFCOD Response Time

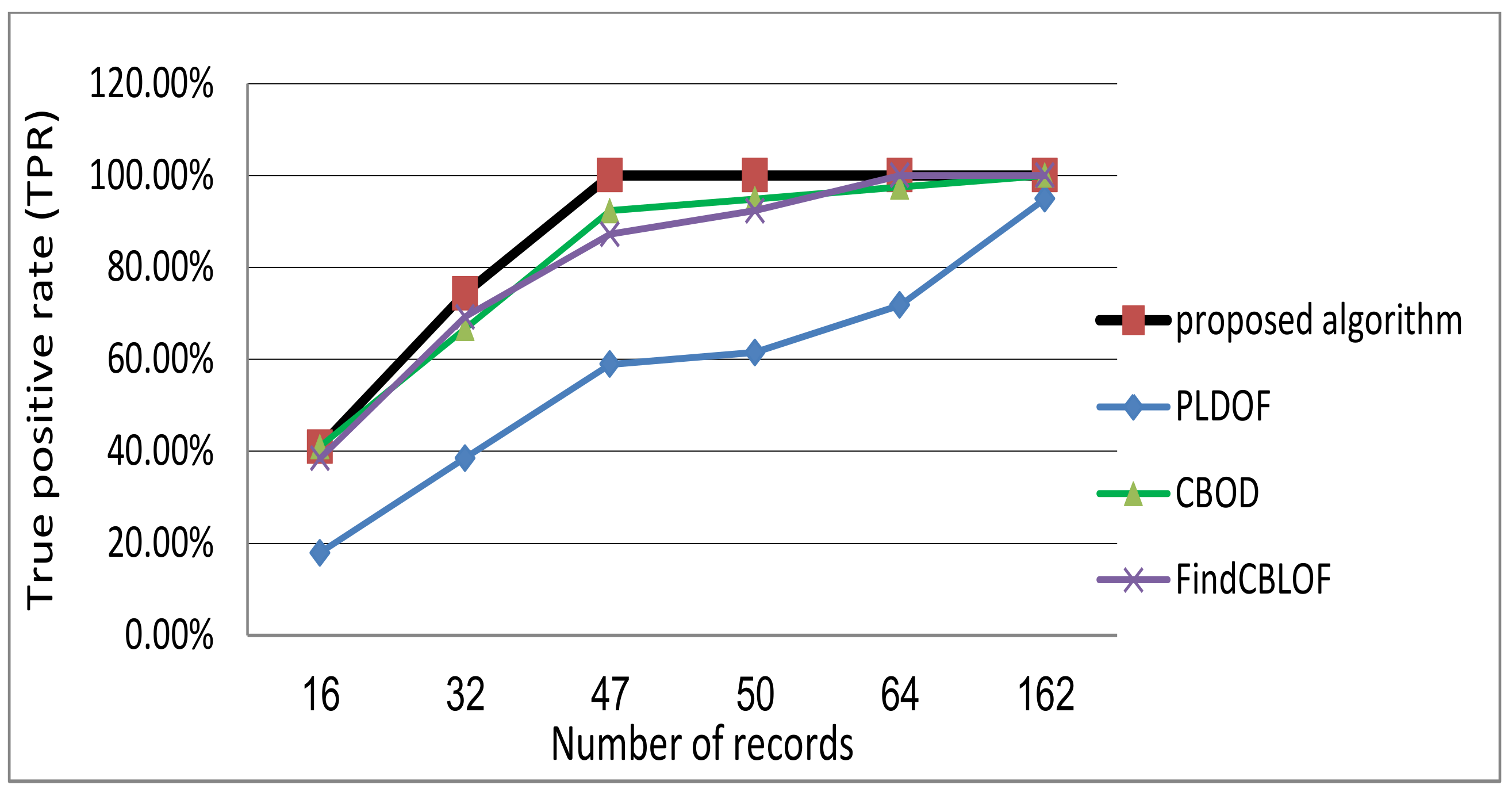

3.2.5. Comparative Evaluation

3.2.6. Scalability Evaluation

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Simon, H.; Hongxing, H.; Graham, W.; Rohan, B. Outlier Detection Using Replicator Neural Networks. In Data Warehousing and Knowledge Discovery; Springer: Berlin/Heidelberg, Germany, 2002; pp. 170–180.

2. Gagniuc, P.; Ionescu-Tirgoviste, C.; Radulescu, C.H. Automatic Growth Detection of Cell Cultures through Outlier Techniques using 2D Images. Int. J. Comput. Commun. 2013, 8, 407–415.

3. Han, J.; Kamber, M.; Pei, J. Data Mining: Concepts and Techniques; Elsevier: Amsterdam, The Netherlands, 2012; Volume 3.

4. Markus, B.; Hans-Peter, K.; Raymond, N.; Jörg, S. LOF: Identifying Density-based Local Outliers. SIGMOD Rec. 2000, 29, 93–104.

5. Lei, C.; Mingrui, W.; Di, Y.; Elke, R. Online Outlier Exploration Over Large Datasets. In Proceedings of the KDD ’15, 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Sydney, Australia, 10–13 August 2015; ACM: New York, NY, USA, 2015; pp. 89–98.

6. Howsalya, D.; Devi, I. Outlier Detection Algorithm Combined with Decision Tree Classifier for Early Diagnosis of Breast Cancer. Int. J. Adv. Eng. Technol. 2009, 7, 93–98.

7. Jianhua, G.; Wei, H.; Billy, W. Real time traffic flow outlier detection using short-term traffic conditional variance prediction. Transp. Res. Part C Emerg. Technol. 2014, 50, 160–172.

8. Xiaodan, X.; Huawen, L.; Minghai, Y. Recent Progress of Anomaly Detection. Complexity 2019, 2019, 1–11.

9. Jatindra, P.; Sukumar, N. An Outlier Detection Method Based on Clustering. In Proceedings of the 2011 Second International Conference on Emerging Applications of Information Technology, Kolkata, India, 19–20 February 2011; pp. 253–256.

10. Chawla, S.; Gionis, A. k-means-: A Unified Approach to Clustering and Outlier Detection; SDM: Bethesda, MD, USA, 2013.

11. Kanishka, B.; Bryan, M.; Chris, G. Algorithms for Speeding Up Distance-based Outlier Detection. In Proceedings of the KDD ’11, 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Diego, CA, USA, 21–24 August 2011; pp. 859–867.

12. Xu, X.; Li, H.L.; Yao, M. A Comparison of Outlier Detection Techniques for High-Dimensional Data. Int. J. Comput. Intell. Syst. 2018, 11, 652–662.

13. Bendechache, M.; Tari, A.K.; Kechadi, M. Parallel and distributed clustering framework for big spatial data mining. Int. J. Parallel Emergent Distrib. Syst. 2019, 34, 671–689.

14. Santhi, P.; Bhaskaran, V.M. Improving the Efficiency of Image Clustering using Modified Non Euclidean Distance Measures in Data Mining. Int. Comput. Commun. 2014, 9, 56–61.

15. Kamal, M.; Harsh, S.; Gursharanjeet, K. Comparative Analysis of Outlier Detection Techniques. Int. J. Comput. Appl. 2014, 97, 12–21.

16. Ricardo, C.; Davoud, M.; Arthur, Z.; Jörg, S. Hierarchical Density Estimates for Data Clustering, Visualization, and Outlier Detection. ACM Trans. Knowl. Discov. Data 2015, 10, 5:1–5:51.

17. Edwin, K.; Raymond, N. Finding Intensional Knowledge of Distance-Based Outliers. In Proceedings of the VLDB ’99, 25th International Conference on Very Large Data Bases, Edinburgh, UK, 7–10 September 1999; Morgan Kaufmann Publishers Inc.: San Mateo, CA, USA, 1999; pp. 211–222.

18. Amol, G.; Srinivasan, P.; Eric, O. Fast mining of distance-based outliers in high-dimensional datasets. Data Min. Knowl. Discov. 2008, 16, 349–364.

19. Tang, B.; He, H. A Local Density-Based Approach for Local Outlier Detection. arXiv 2016, arXiv:1606.08538.

20. Su, S.; Xiao, L.; Zhang, Z.; Gu, F.; Ruan, L.; Li, S.; He, Z.; Huo, Z.; Yan, B.; Wang, H.; et al. N2DLOF: A New Local Density-Based Outlier Detection Approach for Scattered Data. In Proceedings of the 2017 IEEE 19th International Conference on High Performance Computing and Communications, Bangkok, Thailand, 18–20 December 2017; pp. 458–465.

21. He, Z.; Xu, X.; Deng, S. Discovering Cluster Based Local Outliers. Pattern Recognit. Lett. 2003, 2003, 9–10.

22. Jiang, S.; An, Q. Clustering-Based Outlier Detection Method. In Proceedings of the 2008 Fifth International Conference on Fuzzy Systems and Knowledge Discovery, Shandong, China, 18–20 October 2008; Volume 2, pp. 429–433.

23. Rizk, H.; Elgokhy, S.; Sarhan, A. A hybrid outlier detection algorithm based on partitioning clustering and density measures. In Proceedings of the Tenth International Conference on Computer Engineering & Systems (ICCES), Cairo, Egypt, 23–24 December 2015; pp. 175–181.

24. Elbasiony, R.M.; Sallam, E.A.; Eltobely, T.E.; Fahmy, M.M. A hybrid network intrusion detection framework based on random forests and weighted k-means. Ain Shams Eng. J. 2013, 4, 753–762.

25. Edwin, K.; Raymond, N.; Vladimir, T. Distance-based Outliers: Algorithms and Applications. Vldb J. 2000, 8, 237–253.

26. Maria, K.; Anastasios, G.; Apostolos, P.; Kostas, T.; Yannis, M. Efficient and Flexible Algorithms for Monitoring Distance-based Outliers over Data Streams. Inf. Syst. 2016, 55, 37–53.

27. Justin, Z. Privacy preserving K-medoids clustering. In Proceedings of the 2007 IEEE International Conference on Systems, Man and Cybernetics, Montreal, QC, Canada, 7–10 October 2007.

28. Shaukat, K.; Masood, N.; Shafaat, A.; Jabbar, K.; Shabbir, H.; Shabbir, S. Dengue Fever in Perspective of Clustering Algorithms. J. Data Min. Genom. Proteom. 2015, 6, 3.

29. Jaing, M.F.; Tseng, S.S.; Su, C.M. Two-phase Clustering Process for Outliers Detection. Pattern Recogn. Lett. 2001, 22, 691–700.

30. Nirmal, S. Comparative Study between K-Means and K-Medoids Clustering Algorithms. J. Classif. 2019, 6, 839–844.

31. Budiaji, W.; Leisch, F. Simple K-Medoids Partitioning Algorithm for Mixed Variable Data. Algorithms 2019, 12, 1–15.

32. Archana, S.; Yadav, A.; Rana, A. K-means with Three different Distance Metrics. Int. J. Comput. Appl. 2013, 67, 13–17.

33. Victoria, H.; Jim, A. A Survey of Outlier Detection Methodologies. Artif. Intell. Rev. 2004, 22, 85–126.

34. Park, H.S.; Jun, C.H. A simple and fast algorithm for K-medoids clustering. Expert Syst. Appl. 2009, 36, 3336–3341.

35. Anna, K.; Michael, G. A fast outlier detection strategy for distributed high-dimensional data sets with mixed attributes. Data Min. Knowl. Discov. 2010, 20, 259–289.

36. Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

37. Wolberg, W.; Street, W.; Mangasarian, O. UCI Repository of Machine Learning Databases: Breast Cancer Wisconsin (Diagnostic) Data Set; UCI: Irvine, CA, USA, 1998.

38. KDD’99: The KDD Intrusion Detection Dataset. 1999. Available online: http://kdd.ics.uci.edu/databases/kddcup99/kddcup99.html (accessed on 20 December 2020).

39. Zheng, B.; Yoon, S.; Lam, S. Breast cancer diagnosis based on feature extraction using a hybrid of K-means and support vector machine algorithms. Expert Syst. Appl. 2014, 41, 1476–1482.

40. Shanxiong, C.; Maoling, P.; Hailing, X.; Sheng, W. An anomaly detection method based on Lasso. Clust. Comput. 2017, 22.

| Number of Points (Top Ratio) | Proposed Algorithm TPR | Proposed Algorithm FPR | PLDOF [9] TPR | PLDOF [9] FPR | CBOD [22] TPR | CBOD [22] FPR | FindCBLOF [21] TPR | FindCBLOF [21] FPR |
|---|---|---|---|---|---|---|---|---|
| 16 | 41.03% | 0% | 17.8% | 2.03% | 41.03% | 0% | 38.46% | 0.23% |
| 32 | 74.36% | 0.67% | 38.5% | 3.82% | 66.67% | 1.35% | 69.23% | 1.13% |
| 47 | 94.87% | 1.8% | 58.9% | 5.4% | 92.31% | 2.48% | 87.18% | 2.93% |
| 50 | 100% | 1.8% | 61.5% | 5.85% | 94.87% | 2.93% | 92.3% | 3.15% |
| 64 | 100% | 1.8% | 71.79% | 8.11% | 97.44% | 5.8% | 100% | 5.63% |
| 162 | 100% | 1.8% | 94.87% | 27.7% | 100% | 27.7% | 100% | 27.7% |
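The TPR and FPR values reported in the table above follow the standard definitions, TPR = TP / (TP + FN) and FPR = FP / (FP + TN), computed over the four groups that the test mode produces. A minimal sketch of that partitioning is shown here, assuming boolean labels in which `True` marks an outlier; the function names and the in-memory grouping (instead of four files) are hypothetical.

```python
def confusion_groups(points, predicted, actual):
    """Split points into TP, FP, TN, and FN groups; True marks an outlier."""
    groups = {"TP": [], "FP": [], "TN": [], "FN": []}
    for point, pred, act in zip(points, predicted, actual):
        if pred and act:
            key = "TP"
        elif pred and not act:
            key = "FP"
        elif not pred and not act:
            key = "TN"
        else:
            key = "FN"
        groups[key].append(point)
    return groups


def tpr_fpr(groups):
    """True positive rate and false positive rate from the four groups."""
    tp, fp, tn, fn = (len(groups[k]) for k in ("TP", "FP", "TN", "FN"))
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return tpr, fpr


if __name__ == "__main__":
    groups = confusion_groups(
        ["a", "b", "c", "d", "e"],
        predicted=[True, True, False, False, False],
        actual=[True, False, True, False, False],
    )
    print(groups, tpr_fpr(groups))
```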

Percent of Points to Check (columns) for each number of clusters K (rows):

| K | 5% | 15% | 20% | 25% | 31% |
|---|---|---|---|---|---|
| 2 | 100% | 100% | 100% | 99.39% | 99.5% |
| 4 | 100% | 97.93% | 100% | 95.7% | 99.5% |
| 6 | 96.77% | 100% | 100% | 99.39% | 99% |
| 8 | 100% | 100% | 100% | 99.39% | 100% |
| 9 | 96.77% | 94.84% | 93.89% | 92.64% | 92.57% |
| 10 | 96.77% | 95.88% | 93.89% | 91.41% | 92.1% |

Percent of Points to Check (columns) for each number of clusters K (rows):

| K | 5% | 15% | 20% | 25% | 31% |
|---|---|---|---|---|---|
| 2 | 33.33% | 12.5% | 22.22% | 9.1% | 14.82% |
| 4 | 33.33% | 12.5% | 11.11% | 0% | 7.14% |
| 6 | 33.33% | 12.5% | 22.22% | 9.1% | 7.14% |
| 8 | 33.33% | 12.5% | 11.11% | 0% | 7.14% |
| 9 | 33.33% | 12.5% | 11.11% | 0% | 7.14% |
| 10 | 33.33% | 12.5% | 22.22% | 0% | 7.14% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Elmogy, A.; Rizk, H.; Sarhan, A.M. OFCOD: On the Fly Clustering Based Outlier Detection Framework. Data 2021, 6, 1. https://0-doi-org.brum.beds.ac.uk/10.3390/data6010001
