Information Quality Assessment for Data Fusion Systems

Abstract

1. Introduction

2. Literature Review Process

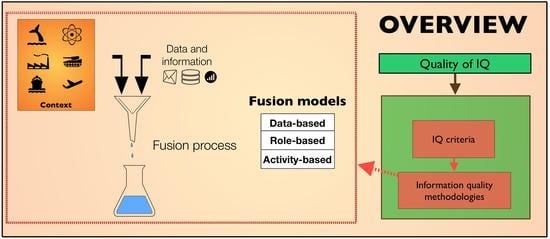

3. Data Fusion

3.1. Data Fusion Classification

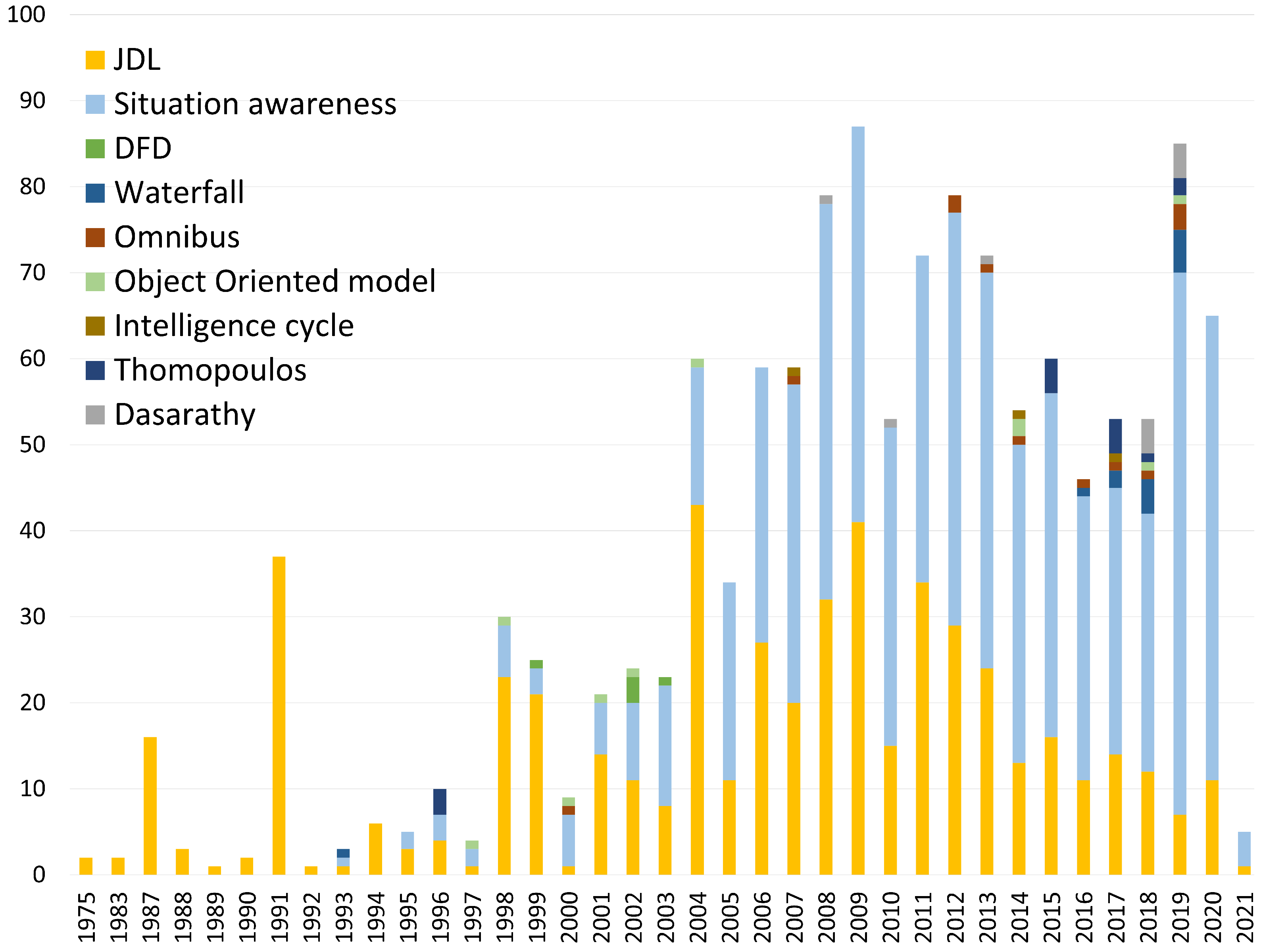

3.2. Data Fusion Models

3.3. Data Fusion Techniques

4. Information Quality

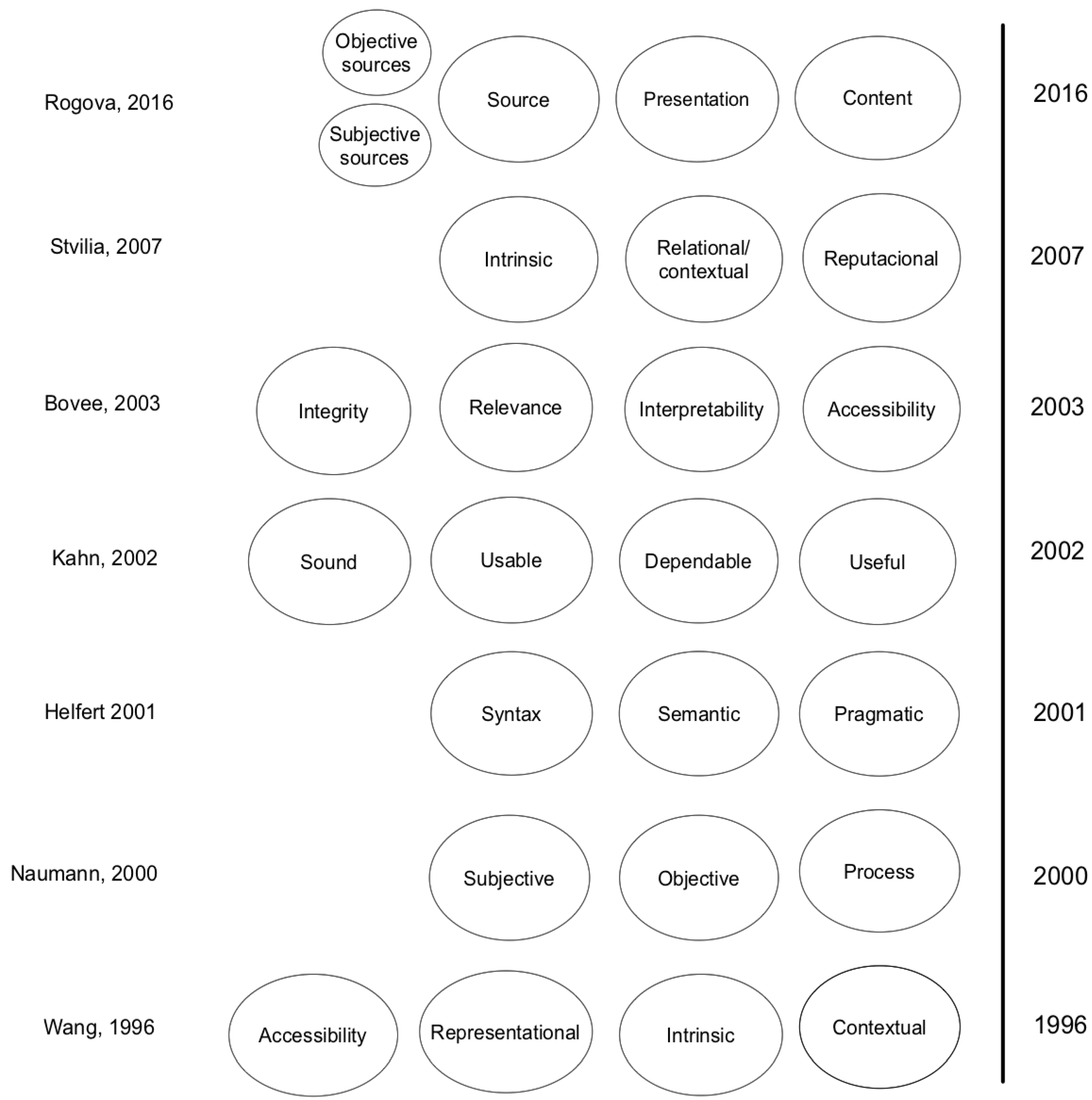

4.1. Information Quality Criteria

4.2. Information Quality Assessment Frameworks and Methodologies

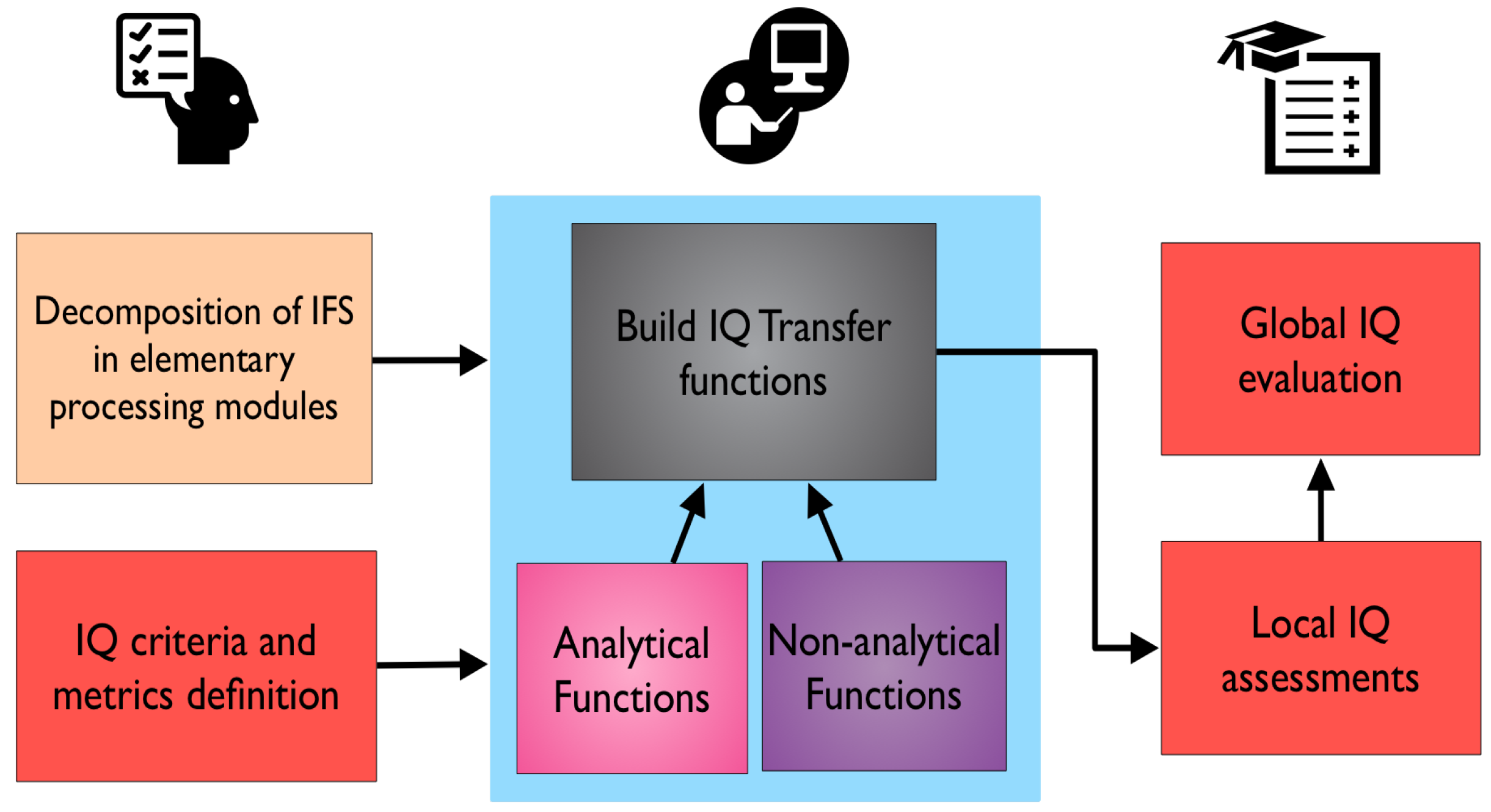

4.2.1. Methodology Introduced by Todoran et al.

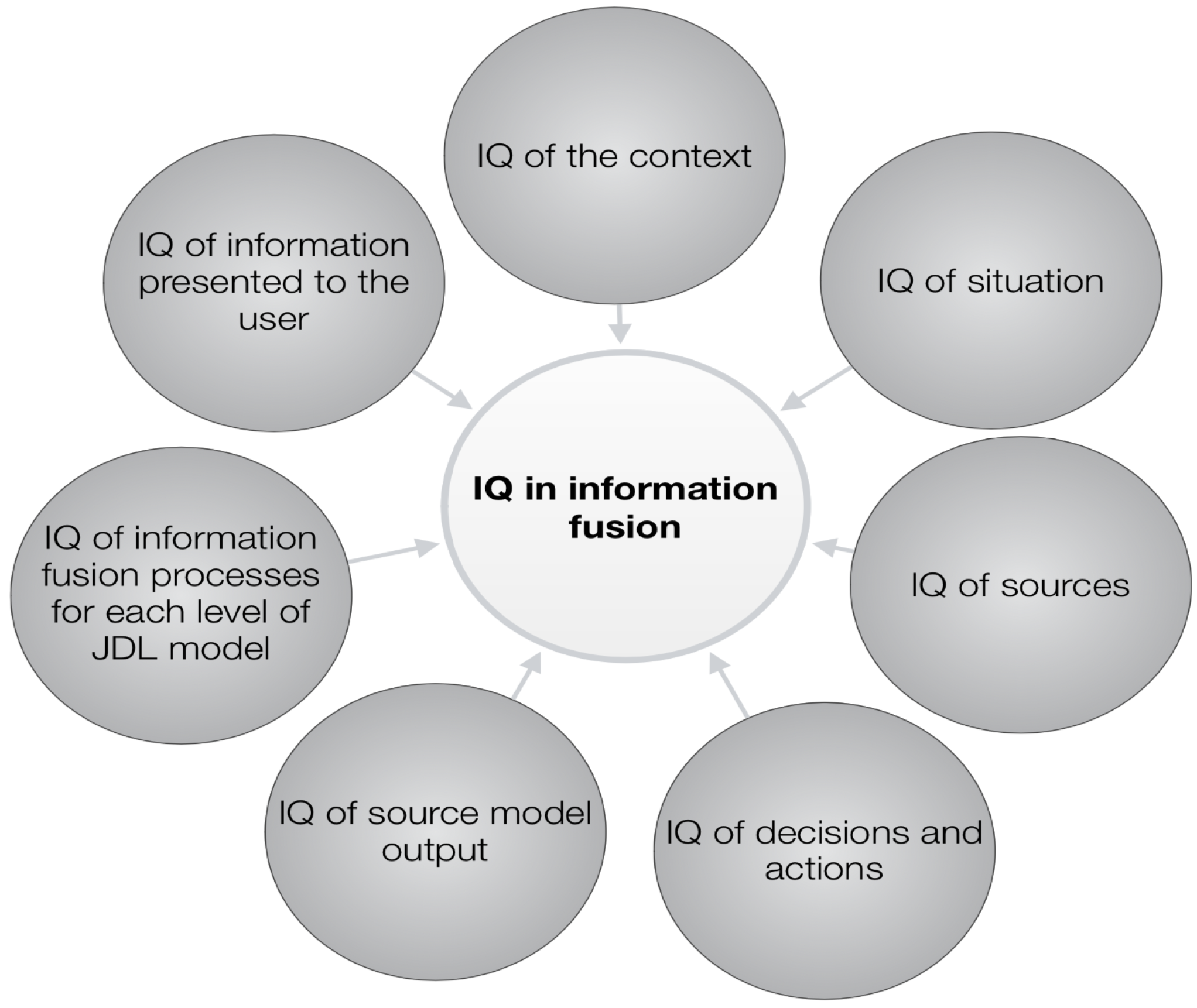

4.2.2. Methodology Proposed by Rogova (2016)

4.2.3. Methodology Introduced by Blasch Based on Measures for High-Level Fusion

4.3. Data Fusion System Performance

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Xuan, L. Data Fusion in Managing Crowdsourcing Data Analytics Systems. Ph.D. Thesis, National University of Singapore, Singapore, 2013. [Google Scholar]

- Wickramarathne, T.L.; Premaratne, K.; Murthi, M.N.; Scheutz, M.; Kubler, S.; Pravia, M. Belief theoretic methods for soft and hard data fusion. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011; pp. 2388–2391. [Google Scholar] [CrossRef]

- Lahat, D.; Adalı, T.; Jutten, C. Challenges in multimodal data fusion. In Proceedings of the 2014 22nd European Signal Processing Conference (EUSIPCO), Lisbon, Portugal, 1–5 September 2014; pp. 101–105. [Google Scholar]

- Rogova, G.L.; Snidaro, L. Considerations of Context and Quality in Information Fusion. In Proceedings of the 2018 21st International Conference on Information Fusion (FUSION), Cambridge, UK, 10–13 July 2018; pp. 1925–1932. [Google Scholar] [CrossRef]

- Todoran, I.G.; Lecornu, L.; Khenchaf, A.; Le Caillec, J.M. A Methodology to Evaluate Important Dimensions of Information Quality in Systems. ACM J. Data Inf. Qual. 2015, 6, 23. [Google Scholar] [CrossRef]

- Blasch, E.P.; Salerno, J.J.; Tadda, G.P. Measuring the worthiness of situation assessment. In Proceedings of the 2011 IEEE National Aerospace and Electronics Conference (NAECON), Dayton, OH, USA, 20–22 July 2011; pp. 87–94. [Google Scholar] [CrossRef]

- van Laere, J. Challenges for IF performance evaluation in practice. In Proceedings of the 12th International Conference on Information Fusion, 2009, FUSION ’09, Seattle, WA, USA, 6–9 July 2009; pp. 866–873. [Google Scholar]

- Cheng, C.T.; Leung, H.; Maupin, P. A Delay-Aware Network Structure for Wireless Sensor Networks With In-Network Data Fusion. IEEE Sens. J. 2013, 13, 1622–1631. [Google Scholar] [CrossRef]

- Shao, H.; Lin, J.; Zhang, L.; Galar, D.; Kumar, U. A novel approach of multisensory fusion to collaborative fault diagnosis in maintenance. Inf. Fusion 2021, 74, 65–76. [Google Scholar] [CrossRef]

- Jan, M.A.; Zakarya, M.; Khan, M.; Mastorakis, S.; Menon, V.G.; Balasubramanian, V.; Rehman, A.U. An AI-enabled lightweight data fusion and load optimization approach for Internet of Things. Future Gener. Comput. Syst. 2021, 122, 40–51. [Google Scholar] [CrossRef]

- Dong, W.; Yang, L.; Gravina, R.; Fortino, G. ANFIS fusion algorithm for eye movement recognition via soft multi-functional electronic skin. Inf. Fusion 2021, 71, 99–108. [Google Scholar] [CrossRef]

- Li, M.; Wang, F.; Jia, X.; Li, W.; Li, T.; Rui, G. Multi-source data fusion for economic data analysis. Neural Comput. Appl. 2021, 33, 4729–4739. [Google Scholar] [CrossRef]

- Xiong, X.; Youngman, B.D.; Economou, T. Data fusion with Gaussian processes for estimation of environmental hazard events. Environmetrics 2021, 32. [Google Scholar] [CrossRef]

- Afifi, H.; Ramaswamy, A.; Karl, H. A Reinforcement Learning QoI/QoS-Aware Approach in Acoustic Sensor Networks. In Proceedings of the 2021 IEEE 18th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 9–12 January 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Smith, D.; Singh, S. Approaches to Multisensor Data Fusion in Target Tracking: A Survey. IEEE Trans. Knowl. Data Eng. 2006, 18, 1696–1710. [Google Scholar] [CrossRef]

- Li, H.; Nashashibi, F.; Yang, M. Split Covariance Intersection Filter: Theory and Its Application to Vehicle Localization. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1860–1871. [Google Scholar] [CrossRef]

- Ardeshir Goshtasby, A.; Nikolov, S. Image fusion: Advances in the state of the art. Inf. Fusion 2007, 8, 114–118. [Google Scholar] [CrossRef]

- Uribe, Y.F.; Alvarez-Uribe, K.C.; Peluffo-Ordoñez, D.H.; Becerra, M.A. Physiological Signals Fusion Oriented to Diagnosis—A Review; Springer: Cham, Switzerland, 2018; pp. 1–15. [Google Scholar] [CrossRef]

- Zapata, J.C.; Duque, C.M.; Rojas-Idarraga, Y.; Gonzalez, M.E.; Guzmán, J.A.; Becerra Botero, M.A. Data fusion applied to biometric identification—A review. In Proceedings of the Colombian Conference on Computing, Cali, Colombia, 19–22 September 2017; Springer: Cham, Switzerland, 2017. [Google Scholar] [CrossRef]

- Arsalaan, A.S.; Nguyen, H.; Coyle, A.; Fida, M. Quality of information with minimum requirements for emergency communications. Ad Hoc Netw. 2021, 111, 102331. [Google Scholar] [CrossRef]

- Londoño-Montoya, E.; Gomez-Bayona, L.; Moreno-López, G.; Duarte, C.; Marín, L.; Becerra, M. Regression fusion framework: An approach for human capital evaluation. In Proceedings of the European Conference on Knowledge Management, ECKM, Barcelona, Spain, 7–8 September 2017; Volume 1. [Google Scholar]

- Abdelgawad, A.; Bayoumi, M. Data Fusion in WSN; Springer: Boston, MA, USA, 2012; pp. 17–35. [Google Scholar] [CrossRef]

- Liu, X.; Dong, X.L.; Ooi, B.C.; Srivastava, D. Online Data Fusion. Proc. VLDB Endow. 2011, 4, 932–943. [Google Scholar] [CrossRef]

- Khaleghi, B.; Khamis, A.; Karray, F.O.; Razavi, S.N. Multisensor data fusion: A review of the state-of-the-art. Inf. Fusion 2013, 14, 28–44. [Google Scholar] [CrossRef]

- Yokoya, N.; Grohnfeldt, C.; Chanussot, J. Hyperspectral and Multispectral Data Fusion: A comparative review of the recent literature. IEEE Geosci. Remote Sens. Mag. 2017, 5, 29–56. [Google Scholar] [CrossRef]

- Modak, S.K.S.; Jha, V.K. Multibiometric fusion strategy and its applications: A review. Inf. Fusion 2019, 49, 174–204. [Google Scholar] [CrossRef]

- Olabarrieta, P.; Del, S. Método y Dispositivo de Estimación de la Probabilidad de Error de Medida Para Sistemas Distribuidos de Sensores. Google Patents, No. 073,458, 23 June 2011. [Google Scholar]

- Weller, W.T.; Pepus, G.B. Portable Apparatus and Method for Decision Support for Real Time Automated Multisensor Data Fusion and Analysis. United States Patent Application No. 10,346,725, 9 July 2019. [Google Scholar]

- Hershey, P.C.; Dehnert, R.E.; Williams, J.J.; Wisniewski, D.J. System and Method for Asymmetric Missile Defense. U.S. Patent No. 9,726,460, 8 August 2017. [Google Scholar]

- Rein, K.; Biermann, J. Your high-level information is my low-level data—A new look at terminology for multi-level fusion. In Proceedings of the 2013 16th International Conference on Information Fusion (FUSION), Istanbul, Turkey, 9–12 July 2013; pp. 412–417. [Google Scholar]

- Forzieri, G.; Tanteri, L.; Moser, G.; Catani, F. Mapping natural and urban environments using airborne multi-sensor ADS40-MIVIS-LiDAR synergies. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 313–323. [Google Scholar] [CrossRef]

- Xiao, S.; Li, B.; Yuan, X. Maximizing precision for energy-efficient data aggregation in wireless sensor networks with lossy links. Ad Hoc Netw. 2015, 26, 103–113. [Google Scholar] [CrossRef]

- Li, Y. Optimal multisensor integrated navigation through information space approach. Phys. Commun. 2014, 13, 44–53. [Google Scholar] [CrossRef]

- Safari, S.; Shabani, F.; Simon, D. Multirate multisensor data fusion for linear systems using Kalman filters and a neural network. Aerosp. Sci. Technol. 2014, 39, 465–471. [Google Scholar] [CrossRef]

- Rodríguez, S.; De Paz, J.; Villarrubia, G.; Zato, C.; Bajo, J.; Corchado, J. Multi-agent information fusion system to manage data from a WSN in a residential home. Inf. Fusion 2015, 23, 43–57. [Google Scholar] [CrossRef] [Green Version]

- Boström, H.; Andler, S.F.; Brohede, M.; Johansson, R.; Karlsson, E.; Laere, J.V.; Niklasson, L.; Nilsson, M.; Persson, A.; Ziemke, T. On the Definition of Information Fusion as a Field of Research. IKI Technical Reports, 2007. Available online: https://www.diva-portal.org/smash/record.jsf?pid=diva2%3A2391&dswid=8841 (accessed on 4 June 2021).

- Castanedo, F. A Review of Data Fusion Techniques. Sci. World J. 2013. [Google Scholar] [CrossRef]

- Steinberg, A.N.; Bowman, C.L.; White, F.E. Revisions to the JDL Data Fusion Model. In SPIE Digital Library; SPIE: Orlando, FL, USA, 1999. [Google Scholar] [CrossRef]

- White, F. Data Fusion Lexicon; Data Fusion Subpanel of the Joint Directors of Laboratories: Washington, DC, USA, 1991. [Google Scholar] [CrossRef] [Green Version]

- Dragos, V.; Rein, K. Integration of soft data for information fusion: Pitfalls, challenges and trends. In Proceedings of the 2014 17th International Conference on Information Fusion (FUSION), Salamanca, Spain, 7–10 July 2014; pp. 1–8. [Google Scholar]

- Sidek, O.; Quadri, S. A review of data fusion models and systems. Int. J. Image Data Fusion 2012, 3, 3–21. [Google Scholar] [CrossRef]

- Todoran, I.G.; Lecornu, L.; Khenchaf, A.; Le Caillec, J.M. Information quality evaluation in fusion systems. In Proceedings of the 16th International Conference on Information Fusion, Istanbul, Turkey, 9–12 July 2013. [Google Scholar]

- Clifford, G.; Lopez, D.; Li, Q.; Rezek, I. Signal quality indices and data fusion for determining acceptability of electrocardiograms collected in noisy ambulatory environments. Comput. Cardiol. 2011, 2011, 285–288. [Google Scholar]

- Li, Q.; Mark, R.G.; Clifford, G.D. Robust heart rate estimation from multiple asynchronous noisy sources using signal quality indices and a Kalman filter. Physiol. Meas. 2008, 29, 15. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Rogova, G.L. Information Quality in Information Fusion and Decision Making with Applications to Crisis Management. In Fusion Methodologies in Crisis Management; Springer International Publishing: Cham, Switzerland, 2016; pp. 65–86. [Google Scholar] [CrossRef]

- Rogova, G.; Hadzagic, M.; St-Hilaire, M.; Florea, M.C.; Valin, P. Context-based information quality for sequential decision making. In Proceedings of the 2013 IEEE International Multi-Disciplinary Conference on Cognitive Methods in Situation Awareness and Decision Support (CogSIMA), San Diego, CA, USA, 25–28 February 2013; pp. 16–21. [Google Scholar] [CrossRef]

- Blasch, E.; Valin, P.; Bosse, E. Measures of effectiveness for high-level fusion. In Proceedings of the 2010 13th International Conference on Information Fusion, Edinburgh, UK, 26–29 July 2010. [Google Scholar]

- Becerra, M.A.; Alvarez-Uribe, K.C.; Peluffo-Ordoñez, D.H. Low Data Fusion Framework Oriented to Information Quality for BCI Systems; Springer: Cham, Switzerland, 2018; pp. 289–300. [Google Scholar] [CrossRef]

- Jesus, G.; Casimiro, A.; Oliveira, A. A Survey on Data Quality for Dependable Monitoring in Wireless Sensor Networks. Sensors 2017, 17, 2010. [Google Scholar] [CrossRef]

- Abedjan, Z.; Golab, L.; Naumann, F. Profiling relational data: A survey. VLDB J. 2015, 24, 557–581. [Google Scholar] [CrossRef] [Green Version]

- Caruccio, L.; Deufemia, V.; Polese, G. Mining relaxed functional dependencies from data. Data Min. Knowl. Discov. 2020, 34, 443–477. [Google Scholar] [CrossRef]

- Caruccio, L.; Deufemia, V.; Naumann, F.; Polese, G. Discovering Relaxed Functional Dependencies based on Multi-attribute Dominance. IEEE Trans. Knowl. Data Eng. 2020, 1. [Google Scholar] [CrossRef]

- Berti-Équille, L.; Harmouch, H.; Naumann, F.; Novelli, N.; Thirumuruganathan, S. Discovery of genuine functional dependencies from relational data with missing values. Proc. VLDB Endow. 2018, 11, 880–892. [Google Scholar] [CrossRef] [Green Version]

- Mitchell, H.B. Introduction. In Data Fusion: Concepts and Ideas; Springer: Berlin/Heidelberg, Germany, 2012; pp. 1–14. [Google Scholar] [CrossRef]

- Dasarathy, B. Sensor fusion potential exploitation-innovative architectures and illustrative applications. Proc. IEEE 1997, 85, 24–38. [Google Scholar] [CrossRef]

- Esteban, J.; Starr, A.; Willetts, R.; Hannah, P.; Bryanston-Cross, P. A Review of data fusion models and architectures: Towards engineering guidelines. Neural Comput. Appl. 2005, 14, 273–281. [Google Scholar] [CrossRef] [Green Version]

- Luo, R.; Yih, C.C.; Su, K.L. Multisensor fusion and integration: Approaches, applications, and future research directions. IEEE Sens. J. 2002, 2, 107–119. [Google Scholar] [CrossRef]

- Durrant-Whyte, H.F. Sensor Models and Multisensor Integration. Int. J. Rob. Res. 1988, 7, 97–113. [Google Scholar] [CrossRef]

- Luo, R.; Kay, M. Multisensor integration and fusion in intelligent systems. IEEE Trans. Syst. Man Cybern. 1989, 19, 901–931. [Google Scholar] [CrossRef]

- Foo, P.H.; Ng, G.W. High-level Information Fusion: An Overview. J. Adv. Inf. Fusion 2013, 8, 33–72. [Google Scholar]

- Bossé, E.; Roy, J.; Wark, S. Concepts, Models, and Tools for Information Fusion; Artech House: Norwood, MA, USA, 2007; p. 376. [Google Scholar]

- Elmenreich, W. A Review on System Architectures for Sensor Fusion Applications. In Software Technologies for Embedded and Ubiquitous Systems; Obermaisser, R., Nah, Y., Puschner, P., Rammig, F.J., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2007; pp. 547–559. [Google Scholar]

- Das, S.K. High-Level Data Fusion; Artech House: Norwood, MA, USA, 2008; p. 373. [Google Scholar]

- Schoess, J.; Castore, G. A Distributed Sensor Architecture For Advanced Aerospace Systems. Int. Soc. Opt. Photonics 1988, 0931, 74. [Google Scholar] [CrossRef]

- Rasmussen, J. Skills, rules, and knowledge; signals, signs, and symbols, and other distinctions in human performance models. IEEE Trans. Syst. Man Cybern. 1983, SMC-13, 257–266. [Google Scholar] [CrossRef]

- Pau, L.F. Sensor data fusion. J. Intell. Robot. Syst. 1988, 1, 103–116. [Google Scholar] [CrossRef]

- Harris, C.J.; Bailey, A.; Dodd, T.J. Multi-Sensor Data Fusion in Defence and Aerospace. Aeronaut. J. 1998, 102, 229–244. [Google Scholar]

- White, F.E. A model for data fusion. In Proceedings of the 1st National Symposium on Sensor Fusion, Naval Training Station, Orlando, FL, USA, 5–8 April 1988; Volume 2. [Google Scholar]

- Bedworth, M.; O’Brien, J. The Omnibus model: A new model of data fusion? IEEE Aerosp. Electron. Syst. Mag. 2000, 15, 30–36. [Google Scholar] [CrossRef] [Green Version]

- Shahbazian, E. Introduction to DF: Models and Processes, Architectures, Techniques and Applications. In Multisensor Fusion; Hyder, A.K., Shahbazian, E., Waltz, E., Eds.; NATO Science Series; Springer: Dordrecht, The Netherlands, 2002; pp. 71–97. [Google Scholar]

- Thomopoulos, S.C.A. Sensor integration and data fusion. J. Robot. Syst. 1990, 7, 337–372. [Google Scholar] [CrossRef]

- Carvalho, H.; Heinzelman, W.; Murphy, A.; Coelho, C. A general data fusion architecture. In Proceedings of the Sixth International Conference of Information Fusion, Cairns, QLD, Australia, 8–11 July 2003. [Google Scholar] [CrossRef] [Green Version]

- Endsley, M.R. Toward a Theory of Situation Awareness in Dynamic Systems. Hum. Factors J. Hum. Factors Ergon. Soc. 1995, 37, 32–64. [Google Scholar] [CrossRef]

- Nassar, M.; Kanaan, G.; Awad, H. Framework for analysis and improvement of data-fusion algorithms. In Proceedings of the 2010 2nd IEEE International Conference on Information Management and Engineering (ICIME), Chengdu, China, 16–18 April 2010; pp. 379–382. [Google Scholar] [CrossRef]

- Salerno, J. Information fusion: A high-level architecture overview. In Proceedings of the Fifth International Conference on Information Fusion, FUSION 2002, (IEEE Cat.No.02EX5997), Annapolis, MD, USA, 8–11 July 2002; Volume 1, pp. 680–686. [Google Scholar] [CrossRef]

- Blasch, E.; Plano, S. JDL Level 5 Fusion Model “User Refinement” Issues and Applications in Group Tracking; International Society for Optics and Photonics: Bellingham, WA, USA, 2002; Volume 4729, pp. 270–279. [Google Scholar] [CrossRef]

- Synnergren, J.; Gamalielsson, J.; Olsson, B. Mapping of the JDL data fusion model to bioinformatics. In Proceedings of the 2007 IEEE International Conference on Systems, Man and Cybernetics, Montreal, QC, Canada, 7–10 October 2007; pp. 1506–1511. [Google Scholar] [CrossRef]

- Schreiber-Ehle, S.; Koch, W. The JDL model of data fusion applied to cyber-defence—A review paper. In Proceedings of the 2012 Workshop on Sensor Data Fusion: Trends, Solutions, Applications (SDF), Bonn, Germany, 4–6 September 2012; pp. 116–119. [Google Scholar] [CrossRef]

- Timonen, J.; Laaperi, L.; Rummukainen, L.; Puuska, S.; Vankka, J. Situational awareness and information collection from critical infrastructure. In Proceedings of the 2014 6th International Conference On Cyber Conflict (CyCon 2014), Tallinn, Estonia, 3–6 June 2014; pp. 157–173. [Google Scholar] [CrossRef]

- Polychronopoulos, A.; Amditis, A.; Scheunert, U.; Tatschke, T. Revisiting JDL model for automotive safety applications: The PF2 functional model. In Proceedings of the 2006 9th International Conference on Information Fusion, Florence, Italy, 10–13 July 2006. [Google Scholar] [CrossRef]

- Zhang, Y.; Yang, H.L.; Prasad, S.; Pasolli, E.; Jung, J.; Crawford, M. Ensemble Multiple Kernel Active Learning For Classification of Multisource Remote Sensing Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 8, 845–858. [Google Scholar] [CrossRef]

- Das, S.; Grecu, D. COGENT: Cognitive Agent to Amplify Human Perception and Cognition. In Proceedings of the Fourth International Conference on Autonomous Agents, Barcelona, Spain, 3–7 June 2000; AGENTS ’00. ACM: New York, NY, USA, 2000; pp. 443–450. [Google Scholar] [CrossRef]

- Cinar, G.; Principe, J. Adaptive background estimation using an information theoretic cost for hidden state estimation. In Proceedings of the 2011 International Joint Conference on Neural Networks (IJCNN), San Jose, CA, USA, 31 July–5 August 2011; pp. 489–494. [Google Scholar] [CrossRef] [Green Version]

- Sung, W.T.; Tsai, M.H. Data fusion of multi-sensor for IOT precise measurement based on improved PSO algorithms. Comput. Math. Appl. 2012, 64, 1450–1461. [Google Scholar] [CrossRef] [Green Version]

- Madnick, S.E.; Wang, R.Y.; Lee, Y.W.; Zhu, H. Overview and Framework for Data and Information Quality Research. J. Data Inf. Qual. 2009, 1, 1–22. [Google Scholar] [CrossRef]

- Stvilia, B.; Gasser, L.; Twidale, M.B.; Smith, L.C. A framework for information quality assessment. J. Am. Soc. Inf. Sci. Technol. 2007, 58, 1720–1733. [Google Scholar] [CrossRef]

- Gu, Y.; Shen, H.; Bai, G.; Wang, T.; Liu, X. QoI-aware incentive for multimedia crowdsensing enabled learning system. Multimed. Syst. 2020, 26, 3–16. [Google Scholar] [CrossRef]

- Demoulin, N.T.M.; Coussement, K. Acceptance of text-mining systems: The signaling role of information quality. Inf. Manag. 2020, 57, 103120. [Google Scholar] [CrossRef]

- Torres, R.; Sidorova, A. Reconceptualizing information quality as effective use in the context of business intelligence and analytics. Int. J. Inf. Manag. 2019, 49, 316–329. [Google Scholar] [CrossRef]

- Juran, J.M. Juran on Quality by Design: The New Steps for Planning Quality into Goods and Services; Free Press: New York, NY, USA, 1992; p. 538. [Google Scholar]

- Wang, R.Y.; Strong, D.M. Beyond Accuracy: What Data Quality Means to Data Consumers. J. Manag. Inf. Syst. 1996, 12, 5–33. [Google Scholar] [CrossRef]

- Evans, J.R.; Lindsay, W.M. The Management and Control of Quality; Thomson/South-Western: Nashville, TN, USA, 2005. [Google Scholar]

- O’Brien, J.A.; Marakas, G.M. Introduction to Information Systems; McGraw-Hill/Irwin: New York, NY, USA, 2005; p. 543. [Google Scholar]

- Vaziri, R.; Mohsenzadeh, M.; Habibi, J. TBDQ: A Pragmatic Task-Based Method to Data Quality Assessment and Improvement. PLoS ONE 2016, 11, e0154508. [Google Scholar] [CrossRef] [Green Version]

- Bovee, M.; Srivastava, R.P.; Mak, B. A conceptual framework and belief-function approach to assessing overall information quality. Int. J. Intell. Syst. 2003, 18, 51–74. [Google Scholar] [CrossRef] [Green Version]

- Kahn, B.K.; Strong, D.; Wang, R. Information quality benchmarks: Product and service performance. Commun. ACM 2002, 45, 184–192. [Google Scholar] [CrossRef]

- Helfert, M. Managing and Measuring Data Quality in Data Warehousing. In Proceedings of the World Multiconference on Systemics, Cybernetics and Informatics, Orlando, FL, USA, 22–25 July 2001. [Google Scholar]

- Naumann, F. Quality-Driven Query Answering for Integrated Information Systems; Springer: Berlin/Heidelberg, Germany, 2002. [Google Scholar]

- Ge, M.; Helfert, M.; Jannach, D. Information Quality Assessment: Validating Measurement. In Proceedings of the 19th European Conference on Information Systems—ICT and Sustainable Service Development, ECIS 2011, Helsinki, Finland, 9–11 June 2011. [Google Scholar]

- Moges, H.T.; Dejaeger, K.; Lemahieu, W.; Baesens, B. A multidimensional analysis of data quality for credit risk management: New insights and challenges. Inf. Manag. 2013, 50, 43–58. [Google Scholar] [CrossRef]

- ISO. International Standard ISO/IEC 25012:2008 Software Engineering—Software Product Quality Requirements and Evaluation (SQuaRE); Technical Report; International Organization for Standardization: Geneva, Switzerland, 2008. [Google Scholar]

- Kenett, R.S.; Shmueli, G. Information Quality, 1st ed.; John Wiley & Sons, Ltd.: Chichester, UK, 2016. [Google Scholar] [CrossRef]

- Botega, L.C.; de Souza, J.O.; Jorge, F.R.; Coneglian, C.S.; de Campos, M.R.; de Almeida Neris, V.P.; de Araújo, R.B. Methodology for Data and Information Quality Assessment in the Context of Emergency Situational Awareness. Univers. Access Inf. Soc. 2017, 16, 889–902. [Google Scholar] [CrossRef]

- Wang, R.Y. A Product Perspective on Total Data Quality Management. Commun. ACM 1998, 41, 58–65. [Google Scholar] [CrossRef]

- Jeusfeld, M.A.; Quix, C.; Jarke, M. Design and analysis of quality information for data warehouses. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 1998; pp. 349–362. [Google Scholar]

- English, L.P. Improving Data Warehouse and Business Information Quality: Methods for Reducing Costs and Increasing Profits; John Wiley & Sons, Inc.: New York, NY, USA, 1999. [Google Scholar]

- Lee, Y.W.; Strong, D.M.; Kahn, B.K.; Wang, R.Y. AIMQ: A methodology for information quality assessment. Inf. Manag. 2002, 40, 133–146. [Google Scholar] [CrossRef]

- Pipino, L.L.; Lee, Y.W.; Wang, R.Y. Data Quality Assessment. Commun. ACM 2002, 45, 211–218. [Google Scholar] [CrossRef]

- Eppler, M.; Muenzenmayer, P. Measuring information quality in the web context: A survey of state-of-the-art instruments and an application methodology. In Proceedings of the 7th International Conference on Information Quality, MIT Sloan School of Management, Cambridge, MA, USA, 8–10 November 2002; pp. 187–196. [Google Scholar]

- van Solingen, R.; Basili, V.; Caldiera, G.; Rombach, H.D. Goal Question Metric (GQM) Approach. In Encyclopedia of Software Engineering; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2002. [Google Scholar] [CrossRef]

- Falorsi, P.; Pallara, S.; Pavone, A.; Alessandroni, A.; Massella, E.; Scannapieco, M. Improving the quality of toponymic data in the Italian public administration. In Proceedings of the ICDT Workshop on Data Quality in Cooperative Information Systems (DQCIS), Siena, Italy, 10–11 January 2003. [Google Scholar]

- Su, Y.; Jin, Z. A Methodology for Information Quality Assessment in the Designing and Manufacturing Processes of Mechanical Products. In Proceedings of the 9th International Conference on Information Quality, Cambridge, MA, USA, 5–7 November 2004; pp. 447–465. [Google Scholar]

- Monograph, R.; Wang, E.; Pierce, S.; Madnick, S.; Fisher, C.W.; Loshin, D. Enterprise Knowledge Management—The Data Quality Approach; Series in Data Management Systems; Morgan Kaufmann: Burlington, MA, USA, 2001. [Google Scholar]

- Redman, T.C.; Godfrey, A.B. Data Quality for the Information Age, 1st ed.; Artech House, Inc.: Norwood, MA, USA, 1997. [Google Scholar]

- Scannapieco, M.; Virgillito, A.; Marchetti, C.; Mecella, M.; Baldoni, R. The DaQuinCIS architecture: A platform for exchanging and improving data quality in cooperative information systems. Inf. Syst. 2004, 29, 551–582. [Google Scholar] [CrossRef]

- De Amicis, F.; Batini, C. A methodology for data quality assessment on financial data. Stud. Commun. Sci. SCKM 2004, 4, 115–137. [Google Scholar]

- Long, J.; Seko, C. A cyclic-hierarchical method for database data-quality evaluation and improvement. In Advances in Management Information Systems-Information Quality Monograph (AMISIQ); Routledge: New York, NY, USA, 2005. [Google Scholar]

- Cappiello, C.; Ficiaro, P.; Pernici, B. HIQM: A Methodology for Information Quality Monitoring, Measurement, and Improvement; Springer: Berlin/Heidelberg, Germany, 2006; pp. 339–351. [Google Scholar] [CrossRef]

- Batini, C.; Cabitza, F.; Cappiello, C.; Francalanci, C. A Comprehensive Data Quality Methodology for Web and Structured Data. In Proceedings of the 2006 1st International Conference on Digital Information Management, Bangalore, India, 6–8 December 2006; pp. 448–456. [Google Scholar] [CrossRef]

- Alkhattabi, M.; Neagu, D.; Cullen, A. Information quality framework for e-learning systems. Knowl. Manag. E-Learn. 2010, 2, 340–362. [Google Scholar]

- Batini, C.; Barone, D.; Cabitza, F.; Grega, S. A Data Quality Methodology for Heterogeneous Data. Int. J. Database Manag. Syst. 2011, 3, 60–79. [Google Scholar] [CrossRef]

- Heidari, F.; Loucopoulos, P. Quality evaluation framework (QEF): Modeling and evaluating quality of business processes. Int. J. Account. Inf. Syst. 2014, 15, 193–223. [Google Scholar] [CrossRef]

- Chan, K.; Marcus, K.; Scott, L.; Hardy, R. Quality of information approach to improving source selection in tactical networks. In Proceedings of the 2015 18th International Conference on Information Fusion (Fusion), Washington, DC, USA, 6–9 July 2015; pp. 566–573. [Google Scholar]

- Belen Sağlam, R.; Taskaya Temizel, T. A framework for automatic information quality ranking of diabetes websites. Inform. Health Soc. Care 2015, 40, 45–66. [Google Scholar] [CrossRef] [PubMed]

- Vetrò, A.; Canova, L.; Torchiano, M.; Minotas, C.O.; Iemma, R.; Morando, F. Open data quality measurement framework: Definition and application to Open Government Data. Gov. Inf. Q. 2016, 33, 325–337. [Google Scholar] [CrossRef] [Green Version]

- Woodall, P.; Borek, A.; Parlikad, A.K. Evaluation criteria for information quality research. Int. J. Inf. Qual. 2016, 4, 124. [Google Scholar] [CrossRef]

- Kim, S.; Lee, J.G.; Yi, M.Y. Developing information quality assessment framework of presentation slides. J. Inf. Sci. 2017, 43, 742–768. [Google Scholar] [CrossRef]

- Li, Y.; Jha, D.K.; Ray, A.; Wettergren, T.A. Information Fusion of Passive Sensors for Detection of Moving Targets in Dynamic Environments. IEEE Trans. Cybern. 2017, 47, 93–104. [Google Scholar] [CrossRef]

- Stawowy, M.; Olchowik, W.; Rosiński, A.; Dąbrowski, T. The Analysis and Modelling of the Quality of Information Acquired from Weather Station Sensors. Remote Sens. 2021, 13, 693. [Google Scholar] [CrossRef]

- Bouhamed, S.A.; Kallel, I.K.; Yager, R.R.; Bossé, É.; Solaiman, B. An intelligent quality-based approach to fusing multi-source possibilistic information. Inf. Fusion 2020, 55, 68–90. [Google Scholar] [CrossRef]

- Snidaro, L.; García, J.; Llinas, J. Context-based Information Fusion: A survey and discussion. Inf. Fusion 2015, 25, 16–31. [Google Scholar] [CrossRef] [Green Version]

- Krause, M.; Hochstatter, I. Challenges in Modelling and Using Quality of Context (QoC); Springer: Berlin/Heidelberg, Germany, 2005; pp. 324–333. [Google Scholar] [CrossRef]

- Schilit, B.; Adams, N.; Want, R. Context-Aware Computing Applications. In Proceedings of the 1994 First Workshop on Mobile Computing Systems and Applications, Santa Cruz, CA, USA, 8–9 December 1994; pp. 85–90. [Google Scholar] [CrossRef]

- Gómez-Romero, J.; Serrano, M.A.; García, J.; Molina, J.M.; Rogova, G. Context-based multi-level information fusion for harbor surveillance. Inf. Fusion 2015, 21, 173–186. [Google Scholar] [CrossRef] [Green Version]

- Akman, V.; Surav, M. The Use of Situation Theory in Context Modeling. Comput. Intell. 1997, 13, 427–438. [Google Scholar] [CrossRef]

- Rogova, G.; Bosse, E. Information quality in information fusion. In Proceedings of the 2010 13th Conference on Information Fusion (FUSION), Edinburgh, UK, 26–29 July 2010; pp. 1–8. [Google Scholar] [CrossRef]

- Vetrella, A.R.; Fasano, G.; Accardo, D.; Moccia, A. Differential GNSS and Vision-Based Tracking to Improve Navigation Performance in Cooperative Multi-UAV Systems. Sensors 2016, 16, 2164. [Google Scholar] [CrossRef] [PubMed]

- Wu, F.; Huang, Y.; Yuan, Z. Domain-specific sentiment classification via fusing sentiment knowledge from multiple sources. Inf. Fusion 2017, 35, 26–37. [Google Scholar] [CrossRef]

- Oh, S.I.; Kang, H.B. Object Detection and Classification by Decision-Level Fusion for Intelligent Vehicle Systems. Sensors 2017, 17, 207. [Google Scholar] [CrossRef] [Green Version]

- Nakamura, E.F.; Pazzi, R.W. Target Tracking for Sensor Networks: A Survey. ACM Comput. Surv. 2016, 49, 1–31. [Google Scholar]

- Benziane, L.; Hadri, A.E.; Seba, A.; Benallegue, A.; Chitour, Y. Attitude Estimation and Control Using Linearlike Complementary Filters: Theory and Experiment. IEEE Trans. Control Syst. Technol. 2016, 24, 2133–2140. [Google Scholar] [CrossRef] [Green Version]

- Lassoued, K.; Bonnifait, P.; Fantoni, I. Cooperative Localization with Reliable Confidence Domains Between Vehicles Sharing GNSS Pseudoranges Errors with No Base Station. IEEE Intell. Transp. Syst. Mag. 2017, 9, 22–34. [Google Scholar] [CrossRef] [Green Version]

- Farsoni, S.; Bonfè, M.; Astolfi, L. A low-cost high-fidelity ultrasound simulator with the inertial tracking of the probe pose. Control Eng. Pract. 2017, 59, 183–193. [Google Scholar] [CrossRef]

- Cao, N.; Choi, S.; Masazade, E. Sensor Selection for Target Tracking in Wireless Sensor Networks With Uncertainty. IEEE Trans. Signal Process. 2016, 64, 5191–5204. [Google Scholar] [CrossRef] [Green Version]

- El-shenawy, A.K.; Elsaharty, M.A.; Eldin, E. Neuro-Analogical Gate Tuning of Trajectory Data Fusion for a Mecanum-Wheeled Special Needs Chair. PLoS ONE 2017, 12, e0169036. [Google Scholar] [CrossRef]

- Kreibich, O.; Neuzil, J.; Smid, R. Quality-Based Multiple-Sensor Fusion in an Industrial Wireless Sensor Network for MCM. IEEE Trans. Ind. Electron. 2014, 61, 4903–4911. [Google Scholar] [CrossRef]

- Masehian, E.; Jannati, M.; Hekmatfar, T. Cooperative mapping of unknown environments by multiple heterogeneous mobile robots with limited sensing. Robot. Auton. Syst. 2017, 87, 188–218. [Google Scholar] [CrossRef]

- García, J.; Luis, Á.; Molina, J.M. Quality-of-service metrics for evaluating sensor fusion systems without ground truth. In Proceedings of the 2016 19th International Conference on Information Fusion (FUSION), Heidelberg, Germany, 5–8 July 2016. [Google Scholar]

- Shaban, M.; Mahmood, A.; Al-Maadeed, S.A.; Rajpoot, N. An information fusion framework for person localization via body pose in spectator crowds. Inf. Fusion 2019, 51, 178–188. [Google Scholar] [CrossRef]

- Ahmed, K.T.; Ummesafi, S.; Iqbal, A. Content based image retrieval using image features information fusion. Inf. Fusion 2018, 51, 76–99. [Google Scholar] [CrossRef]

- Sun, Y.X.; Song, L.; Liu, Z.G. Belief-based system for fusing multiple classification results with local weights. Opt. Eng. 2018, 58, 1. [Google Scholar] [CrossRef]

- Hartling, S.; Sagan, V.; Sidike, P.; Maimaitijiang, M.; Carron, J. Urban Tree Species Classification Using a WorldView-2/3 and LiDAR Data Fusion Approach and Deep Learning. Sensors 2019, 19, 1284.

- Vivone, G.; Addesso, P.; Chanussot, J. A Combiner-Based Full Resolution Quality Assessment Index for Pansharpening. IEEE Geosci. Remote Sens. Lett. 2019, 16, 437–441.

- Koyuncu, M.; Yazici, A.; Civelek, M.; Cosar, A.; Sert, M. Visual and Auditory Data Fusion for Energy-Efficient and Improved Object Recognition in Wireless Multimedia Sensor Networks. IEEE Sens. J. 2019, 19, 1839–1849.

- Klatt, E. The human interface of biomedical informatics. J. Pathol. Inform. 2018, 9, 30.

- Becerra, M.A.; Londoño-Delgado, E.; Pelaez-Becerra, S.M.; Castro-Ospina, A.E.; Mejia-Arboleda, C.; Durango, J.; Peluffo-Ordóñez, D.H. Electroencephalographic Signals and Emotional States for Tactile Pleasantness Classification; Springer: Cham, Switzerland, 2018; pp. 309–316.

- Becerra, M.A.; Londoño-Delgado, E.; Pelaez-Becerra, S.M.; Serna-Guarín, L.; Castro-Ospina, A.E.; Marin-Castrillón, D.; Peluffo-Ordóñez, D.H. Odor Pleasantness Classification from Electroencephalographic Signals and Emotional States; Springer: Cham, Switzerland, 2018; pp. 128–138.

| Model | Description | Level 0 | Level 1 | Level 2 | Level 3 | Level 4 | Level 5 | No JDL equivalent |
|---|---|---|---|---|---|---|---|---|
| JDL/JDL/User JDL [60] | This is the most popular functional model. It is used in nonmilitary applications. The User JDL model corresponds to level 5. | Level 0 | Level 1 | Level 2 | Level 3 | Level 4 | Level 5 | - |
| VDFM [61] | It is derived from the JDL model and focuses on fusing human information inputs. | Data fusion processes | Data fusion processes/Visual fusion | Concept of interest | Context of concept | Control/Human | Human | - |
| DFD [55] | This functional model describes the abstraction of data. | DAI-DAO, DAI-FEO, FEI-FEO | FEI-DEO, DEI-DEO | N/A | N/A | N/A | N/A | - |
| DIKW [62,63] | This model is represented by a four-level knowledge pyramid. | N/A | Data | Information | Knowledge | N/A | N/A | Wisdom |
| DBDFA [64] | It focuses on confidence to merge sensors. | N/A | Data fusion | N/A | N/A | N/A | N/A | - |
| SAWAR [62,65] | It focuses on data fusion from a human perspective and considers the context. | State of environment | Perception of elements in current situation | Awareness of current situation | Projection of future states | Performance of actions | - | Decision |
| PAU [66] | It is based on behavioural knowledge. | Sensing | FE, association, DF, analysis and aggregation | Representation | N/A | N/A | N/A | - |
| WF [56,67,68,69] | This model includes three levels, each with two processes. | Sensing and signal processing | FE and pattern processing | Situation assessment and decision making | N/A | Controls | N/A | - |
| MSIFM [59] | It applies two processes: multi-sensor integration and fusion, the latter being addressed in hierarchical fusion (HF) centers. | Sensors | HF | N/A | N/A | N/A | N/A | - |
| RIPH [62,63,70] | In this model, the inputs correspond to perceptions (stimulus processing) and the outputs to actions (motor processing). | N/A | Skill-based processing behavior | Rule-based processing | Knowledge-based processing | N/A | N/A | - |
| Thomopoulos [68,71] | It consists of three modules that fuse data. | N/A | Signal-level fusion | Evidence-level fusion | Dynamics-level fusion | N/A | N/A | - |
| TRIP [60] | This model consists of six levels. In addition, its data processing is hierarchical and bidirectional to meet human information requirements. | Collection evaluation and collection stage | Object state and information stage | Situation stage | Objective stage | N/A | N/A | - |
| DFA-UML [72] | It is based on three functional levels expressed in UML and is oriented to data and features. | Data oriented | Task oriented (variable) | Mixture of data and variable | Decide | N/A | N/A | - |
| OODA Loop [22]/E-OODA/D-OODA [60] | It is represented using a four-step feedback system. The E-OODA model proposes a decomposition of each level to give more details. The D-OODA model provides some details to decompose each stage. | Observe | Observe | Orient | Decide | Decide | Act | - |
| OmniB [69] | It attempts to combine the JDL and I-cycle models. | Observe: sensing and signal processing | Orient: feature extraction and pattern recognition | Decide: context processing/decision making | Decision fusion | N/A | Act | - |
| I-cycle [30,73] | It follows four sequential stages for information fusion in order to disseminate information demanded by users. | Planning and direction | Collection | Collation | Evaluation and dissemination | N/A | N/A | - |
| OTO [30] | It has four sequential stages. | Actor | Perceiver | Director | Manager | N/A | N/A | - |
| OCIFM [60] | This model covers the characteristics of the JDL and OmniB models and considers psychological aspects. It is a guide to design IF systems. | Stimuli | Detection and standardization | Behavior/Pragmatic analysis | Pragmatic analysis | Global goal setting | Global goal setting | Psychological considerations |
| FBedw [22] | It is considered to be an adaptive control system that includes six main stages (sense, perceive, direct, manage, effect and control) applied to local and global processes. | Sense | Perceive | Manage | Effect | Direct | N/A | Control |

| Model | Advantages | Disadvantages/Limitations/Gaps |
|---|---|---|
| JDL/JDL/User JDL [60] | It is a common and general functional model. | It is difficult to assign data and applications to a specific level of this model [81] and to reuse it. Also, it is not based on a human perspective. |
| VDFM [61] | It is complementary to the JDL model and focuses on human interaction. | It is only oriented towards high-level fusion and follows a sequence in some processes. |
| DFD [55] | It describes the input and output of data and combines them at any level. However, the level of the output must be higher than or equal to that of the input. In addition, it is considered to be a well-structured model that serves to characterize sensor-oriented data fusion processes. | It loses its fusion power as the processes advance into each level. Additionally, noise spreads at each level. Besides being sensor-oriented, this model is completely hierarchical. |
| DIKW [62,63] | It is similar to the JDL model. | It is hierarchical. |
| DBDFA [64] | It is a very simple model. | It is only oriented towards low-level fusion and limited to numerical data obtained from sensors. |
| SAWAR [62,65] | It expands level 3 of the JDL model and addresses situation awareness within a volume of time and space. | It is only oriented towards high-level fusion and follows a sequence in some processes. |
| PAU [66] | It is a general model that is easily interpreted and easy to use. It includes low-level data fusion stages and situation assessment. | It has no feedback to improve the data processing chain. |
| WF [56,67,68,69] | It lacks feedback among levels. | It lacks feedback among levels. |
| MSIFM [59] | It is a very simple model that considers the task of integrating the sensors. | It is limited to sensor fusion. |
| RIPH [62,63,70] | It handles three levels of processing skills and cognitive rules, where there are no regulations and routines to deal with situation levels. The Generic Error Modeling System is an improved version of this model [24,82]. | It does not include a clear definition of each stage and a management technique for the top layer. |
| Thomopoulos [68,71] | It is a very simple model. | It does not generally include a Human–Computer Interaction (HCI) stage and can be developed in several ways. |
| TRIP [60] | It is a general model that is easily interpreted and easy to use. Moreover, it analyzes the human loop. | It may present limitations due to hierarchy matters. |
| DFA-UML [72] | It can be configured based on the measuring environment. The availability of data sources gradually degrades from the point of view of the environment and resources change. Moreover, this model has been tested in different domains. | It is only oriented towards high-level fusion and follows a sequence in some processes. |
| OODA [22]/E-OODA/D-OODA [60] | It can clearly separate the system’s tasks and provide feedback. In addition, it offers a clear view of the system’s tasks. | It does not show the effect of the action stage on the other stages. Additionally, it is not structured in such a way that it can separately identify tasks in the sensor fusion system. |
| OmniB [69] | It shows the processing stages in closed loop and includes a control stage (Act). | It is difficult to define the treatment of the data for each level. |
| I-cycle [30,73] | It is a general model. | It does not divide the system’s tasks. Moreover, it is considered an incomplete data fusion model because it lacks some required specific aspects. |
| OTO [30] | It details the system’s different roles. | It does not divide the system’s tasks. |
| OCIFM [60] | It is based on a human perspective and can be a good guide to design dynamic data fusion systems, as it combines the best of the JDL and OmniB models. | It can be a complex model, which may lead to confusion in terms of its application. |
| FBedw [22] | It features a coupling between the local and global processes, considering the objectives and standards that should be achieved. | It lacks formality. |

| Criteria | Definition |
|---|---|
| Accuracy | It indicates the distance from a reference or ground truth. |
| Precision | It refers to “The degree to which data has attributes that are exact or that provide discrimination in a specific context of use” [101]. |
| Compliance | It corresponds to the extent to which data adhere to standards, conventions, or regulations [101]. |
| Confidentiality | It denotes the level of security of data to be accessed and interpreted only by authorized users [101]. |
| Portability | It concerns “The degree to which data has attributes that enable it to be installed, replaced or moved from one system to another preserving the existing quality in a specific context of use” [101]. |
| Recoverability | It refers to “The degree to which data has attributes that enable it to maintain and preserve a specified level of operations and quality, even in the event of failure, in a specific context of use” [101]. |
| Believability | It assesses the reliability of the information object which is linked to its truthfulness and credibility. |
| Objectivity | It evaluates the bias, prejudice, and impartiality of the information object. |
| Reputation | It corresponds to an assessment given by users to the information and its source, based on different properties suitable for the task. |
| Value-Added | It denotes the extent to which information is suitable and advantageous for the task. |
| Efficiency | It assesses the capability of data to quickly provide useful information for the task. |
| Relevancy | It concerns the importance and utility of the information for the task. |
| Timeliness | It is also known as currency and is defined as the age of the information or the degree to which it is up-to-date to execute the task required by the application. |
| Completeness | It evaluates the absence of information and depends on the precision of value and inclusion of its granularity. |
| Data amount | It refers to the data volume suitable for the task. |
| Interpretability | It defines the level of clear presentation of the information object to fulfill structures of language and symbols specifically required by users or applications. |
| Consistency | It establishes the level of logical and consistent relationships between multiple attributes, values, and elements of an information object to form coherent concepts and meanings, without contradiction, concerning an information object. |
| Conciseness | It determines the level of compactness (abbreviated and complete) of the information. |
| Manipulability | It defines the ease of adaptability of the information object to be aggregated or combined. |
| Accessibility | It refers to the capability to access available information. It can be measured based on how fast and easy information is obtained. |
| Security | It corresponds to the level of protection of the information object against damage or fraudulent access. |
| Actionable | It indicates whether data are ready for use. |
| Traceability | It establishes the degree to which the information object is traced to the source. |
| Verifiability | It concerns the level of opportunity to test the correctness of the information object within the context of a particular activity. |
| Cohesiveness | It denotes the level of concentration of the information with respect to a theme. |
| Complexity | It determines the relationship between the connectivity and diversity of an information object. |
| Redundancy | It refers to the repetition of attributes obtained from different sources or processes in a specific context, which serves to define an information object. |
| Naturalness | It corresponds to the extent to which the attributes of the information object approximate to its context. |
| Volatility | It denotes the amount of time during which the information is valid and available for a specific task. |
| Authority | It refers to the level of reputation of the information within the context of a community or culture. |
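Some of the criteria defined above lend themselves to simple numerical scores. The following minimal Python sketch, which is not taken from any of the reviewed methodologies, illustrates how accuracy, completeness, and timeliness might be quantified for a batch of readings. The function names, the choice of mean absolute error as the distance to the ground truth, and the linear decay of timeliness over a hypothetical `max_age` window are all illustrative assumptions.

```python
from typing import Optional, Sequence


def accuracy_error(values: Sequence[float], reference: Sequence[float]) -> float:
    """Mean absolute distance from the reference (ground truth); 0 is best.

    Assumption: mean absolute error stands in for the generic "distance".
    """
    if len(values) != len(reference) or len(values) == 0:
        raise ValueError("values and reference must be non-empty and equal in length")
    return sum(abs(v - r) for v, r in zip(values, reference)) / len(values)


def completeness(values: Sequence[Optional[float]]) -> float:
    """Fraction of entries that are present (not None), in [0, 1]."""
    if len(values) == 0:
        raise ValueError("values must be non-empty")
    return sum(v is not None for v in values) / len(values)


def timeliness(age: float, max_age: float) -> float:
    """Freshness score: 1 for brand-new data, 0 once age reaches max_age.

    Assumption: freshness decays linearly with the age of the information.
    """
    if max_age <= 0:
        raise ValueError("max_age must be positive")
    return max(0.0, 1.0 - age / max_age)
```

For instance, `completeness([20.0, None, 19.0, 21.0])` scores a batch with one missing reading out of four, and `timeliness(30.0, 120.0)` scores a reading that is 30 time units old against a 120-unit validity window.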

| Intrinsic | Contextual | Representational | Accessibility |
|---|---|---|---|
| Accuracy | Value-added | Interpretability | Access Security |
| Believability | Relevancy | Ease of understanding | |
| Objectivity | Timeliness | Representational consistency | |
| Reputation | Completeness | Representational conciseness | |
| | Data amount | Manipulability | |

| Content | Technical | Intellectual | Instantiation |
|---|---|---|---|
| Accuracy | Availability | Believability | Data amount |
| Completeness | Latency | Objectivity | Representational conciseness |
| Customer support | Price | Reputation | Representational consistency |
| Documentation | Quality of Service (QoS) | Understandability | |
| Interpretability | Response | Verifiability | |
| Relevancy | Security | | |
| Value-added | Timeliness | | |

| Category | Criteria |
|---|---|
| Intrinsic | Accuracy/validity, cohesiveness, complexity, semantic consistency, structural consistency, informativeness/redundancy, naturalness, precision/completeness. |
| Relational/contextual | Accuracy, accessibility, complexity, naturalness, informativeness/redundancy, relevance (aboutness), precision/completeness, security, semantic consistency, structural consistency, verifiability, volatility. |
| Reputational | Authority. |

| Methodology/Framework | Application | NC-P1 | NC-P2 | NC-P3 | Year |
|---|---|---|---|---|---|
| Total Data Quality Management (TDQM) [104] | Marketing: data consumers | 12 | 225 | 341 | 1998 |
| Data Warehouse Quality (DWQ) methodology [105] | Data warehousing | 3 | 15 | 21 | 1998 |
| Total Information Quality Management (TIQM) [106] | Data warehouse and business | 1 | 43 | 23 | 1999 |
| A Methodology for Information Quality Assessment (AIMQ) [107] | Benchmarking in organizations | 0 | 267 | 673 | 2002 |
| Information Quality Assessment for Web Information Systems (IQAWIS) [98] | Web information systems | 0 | 21 | 65 | 2002 |
| Data Quality Assessment (DQA) [108] | Organizational field | 0 | 273 | 468 | 2002 |
| Information Quality Measurement (IQM) [109] | Web context | 0 | 39 | 93 | 2002 |
| Goal Question Metric (GQM) methodology [110] | Adaptable to different environments | 0 | 0 | 29 | 2002 |
| ISTAT methodology [111] | IQ of Census | 0 | 0 | 0 | 2003 |
| Activity-based Measuring and Evaluating of Product Information Quality (AMEQ) methodology [112] | Manufacturing | 0 | 6 | 7 | 2004 |
| Loshin’s methodology or Cost-effect Of Low Data Quality (COLDQ) [113,114] | Cost–benefit of IQ | – | – | – | 2004 |
| Data Quality in Cooperative Information Systems (DaQuinCIS) [115] | Cooperative information systems | 0 | 51 | 34 | 2004 |
| Methodology for the Quality Assessment of Financial Data (QAFD) [116] | Finance applications | – | – | – | 2004 |
| Canadian Institute for Health Information (CIHI) methodology [117] | IQ of health information | – | – | – | 2005 |
| Hybrid Information Quality Management (HIQM) [118] | General purpose for detecting and correcting errors | 0 | 3 | 9 | 2006 |
| Comprehensive Methodology for Data Quality Management (CDQ) [119] | General purpose | – | – | – | 2007 |
| Information Quality Assessment Framework (IQAF) [86] | General purpose | 0 | 33 | 234 | 2007 |
| IQ assessment framework for e-learning systems [120] | E-learning systems | 0 | 1 | 42 | 2010 |
| Heterogeneous Data Quality Methodology (HDQM) [121] | General purpose | 0 | 0 | 38 | 2011 |
| Quality evaluation framework [122] | Business processes | 0 | 0 | 34 | 2014 |
| Analytic hierarchy process (AHP) QoI [123] | Mobile ad hoc networks: wireless sensor networks | 0 | 0 | 6 | 2015 |
| Methodology to evaluate important dimensions of information quality in systems [5] | General purpose | 0 | 0 | 14 | 2015 |
| A framework for automatic information quality ranking [124] | Health: diabetes websites | 0 | 0 | 3 | 2015 |
| Open data quality measurement framework [125] | Government data | 0 | 0 | 119 | 2016 |
| Evaluation criteria for IQ Research [126] | Models, frameworks, and methodologies | 0 | 0 | 0 | 2016 |
| Pragmatic Task-Based Method to Data Quality Assessment and Improvement (TBDQ) [94] | IQ assessment and improvement | 0 | 0 | 4 | 2016 |
| Information Quality Assessment Methodology in the Context of Emergency Situational Awareness (IQESA) [103] | Context of emergency situational awareness | 0 | 0 | 7 | 2017 |
| Framework for information quality assessment of presentation slides [127] | IQ assessment of presentation slides | 0 | 0 | 1 | 2017 |
| An approach to quality evaluation [128] | Accounting information: linguistic environment | 0 | 0 | 26 | 2017 |
| Methodology for quality evaluation [129] | Weather station sensors | 0 | 0 | 0 | 2021 |

| Methodology/Framework | Application | NC-P1 | NC-P2 | NC-P3 | Year |
|---|---|---|---|---|---|
| Quality evaluation in Information Fusion Systems (IFS) [47] | Defense | 0 | 0 | 92 | 2010 |
| Information Quality Evaluation in Fusion Systems (IQEFS) [42] | General purpose | 0 | 0 | 10 | 2013 |
| Context-Based Information Quality Conceptual Framework (CIQCF) [46] | Sequential decision making | 0 | 0 | 14 | 2013 |
| Context-Based Information Quality Conceptual Framework (CIQCF) [45] | Decision making in crisis management and human systems | 0 | 0 | 8 | 2016 |
| Low Data Fusion Framework (LDFF) [48] | Brain-computer interface | 0 | 0 | 4 | 2018 |
| Intelligent quality-based approach [130] | Fusing multi-source possibilistic information | 0 | 0 | 3 | 2020 |
| Dynamic weight allocation method [12] | Multisource information fusion | 0 | 0 | 0 | 2021 |

| Metric | NC | Metric | NC | Metric | NC |
|---|---|---|---|---|---|
| Receiver Operating Characteristic (ROC)/AUC curve/Correctness/False positives, false negatives | 4 | Signal-to-noise ratio | 2 | Spatio-temporal information/Preservation | 1 |
| Accuracy | 5 | Fusion/Relative gain | 2 | Entropy | 2 |
| Response/Execution/Time | 9 | Credibility | 1 | Relevance | 1 |
| Detection rate/False alarm/Detection probability | 7 | Reliability | 1 | Distance/Position/Performance/Probability error | 10 |
| Purity | 1 | Mutual information | 1 | Computation cost | 3 |
| Confidence | 1 | | | | |

| Metric | NC | Metric | NC | Metric | NC |
|---|---|---|---|---|---|
| Accuracy | 8 | Time complexity | 1 | Full resolution | 1 |
| Delay/Communication cost/Energy consumption | | Standard deviation/Attitude error | | Confidence | |
| Computational complexity | 1 | Readiness | 1 | Correct detection probability/ROC | 1 |
| Mean Square Error (MSE) | 4 | F1 score | 2 | False alarm | 2 |
| Sensitivity, false positive, false negative, scenario parameters (length of redundant lines, length of ignored lines, number of ignored lines, number of concave vertices, number of convex vertices, number of disjoint obstacles, length of walls) | 1 | Rate correctly detected. Measures of association and estimation: residual, residual covariance, Kalman gain, updated utility state, updated utility uncertainty | 1 | Fusion break rate, rate of fusion tracks, track recombination rate | 1 |
| Intersection over Union (IoU) | 1 | Recall | 3 | Precision | 6 |
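Several of the metrics counted above have standard closed-form definitions. As an illustration only, the Python sketch below computes precision, recall, and the F1 score from detection counts, the mean square error (MSE) between estimates and ground truth, and the Intersection over Union (IoU) of two axis-aligned boxes. The function names, the `(x_min, y_min, x_max, y_max)` box convention, and the choice to return 0 when a denominator is zero are assumptions made for this example; the surveyed papers may define or compute these metrics differently.

```python
from typing import Sequence, Tuple

# Assumed box convention: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom > 0 else 0.0
    return precision, recall, f1


def mean_square_error(estimates: Sequence[float], truth: Sequence[float]) -> float:
    """Average squared difference between estimates and ground truth."""
    if len(estimates) != len(truth) or len(estimates) == 0:
        raise ValueError("inputs must be non-empty and equal in length")
    return sum((e - t) ** 2 for e, t in zip(estimates, truth)) / len(estimates)


def intersection_over_union(a: Box, b: Box) -> float:
    """Area of overlap divided by area of union for two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

For example, `intersection_over_union((0, 0, 2, 2), (1, 1, 3, 3))` compares two 2 × 2 boxes that overlap in a unit square, so the overlap area is 1 and the union area is 7.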

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Becerra, M.A.; Tobón, C.; Castro-Ospina, A.E.; Peluffo-Ordóñez, D.H. Information Quality Assessment for Data Fusion Systems. Data 2021, 6, 60. https://doi.org/10.3390/data6060060
