Object Recognition in Aerial Images Using Convolutional Neural Networks

Abstract

1. Introduction

1.1. Motivation and Objectives

1.2. Background

2. Methods

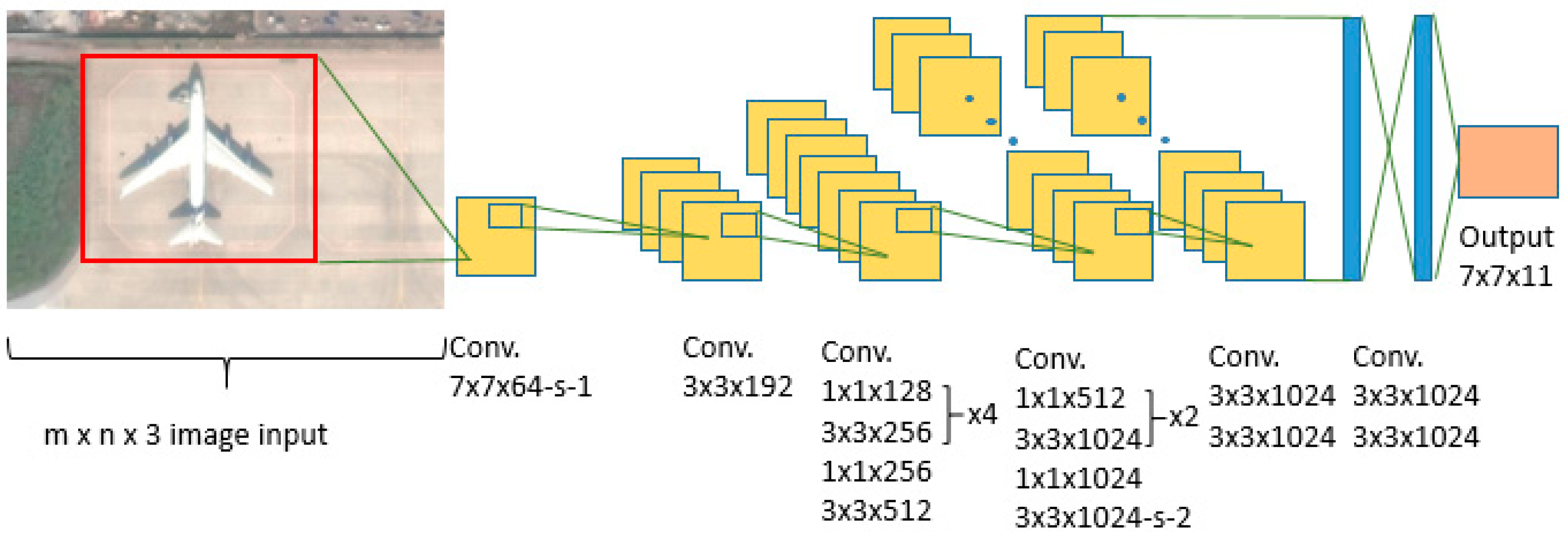

2.1. Network Architecture

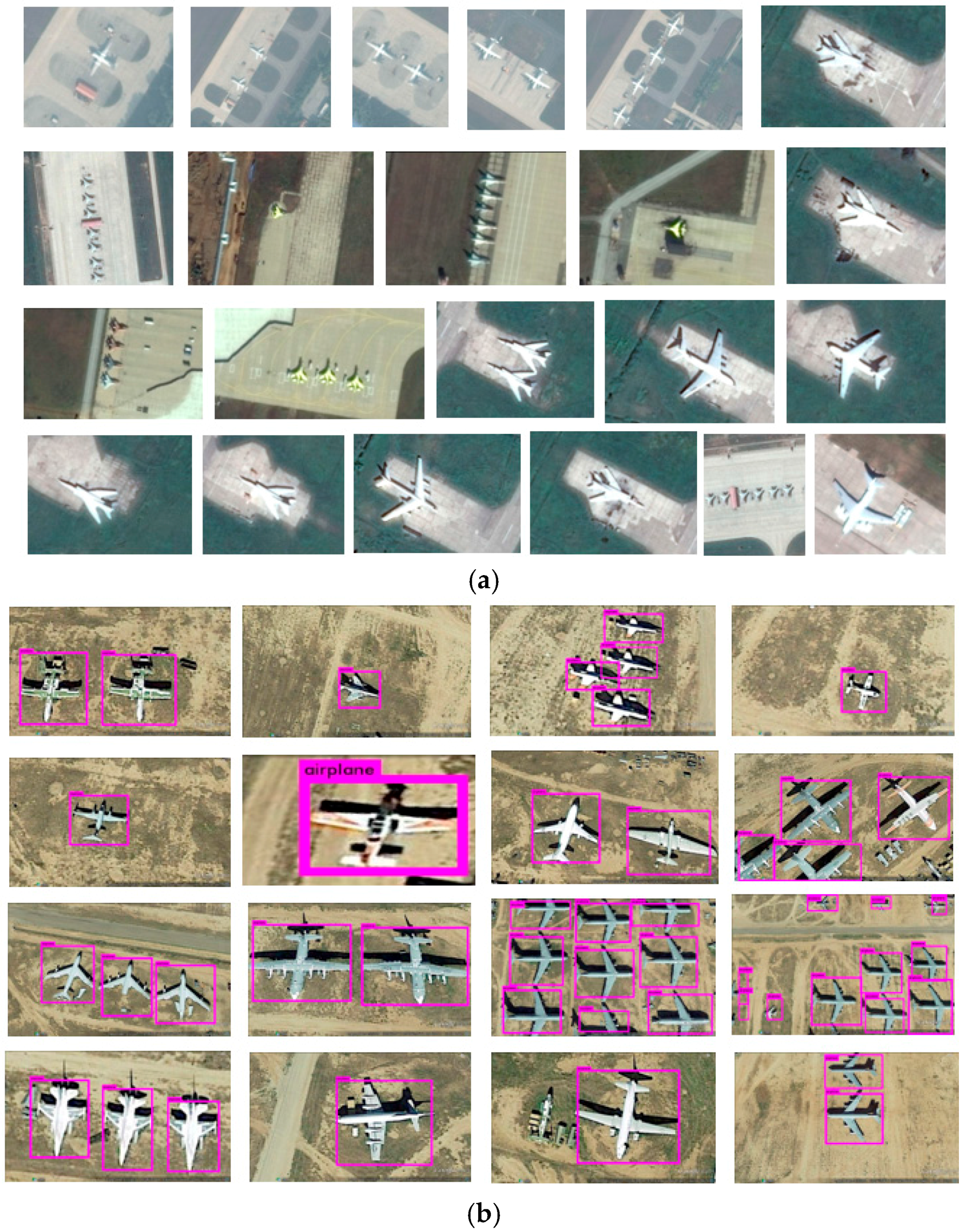

2.2. Network Training

3. Results

3.1. Neural Network Validation

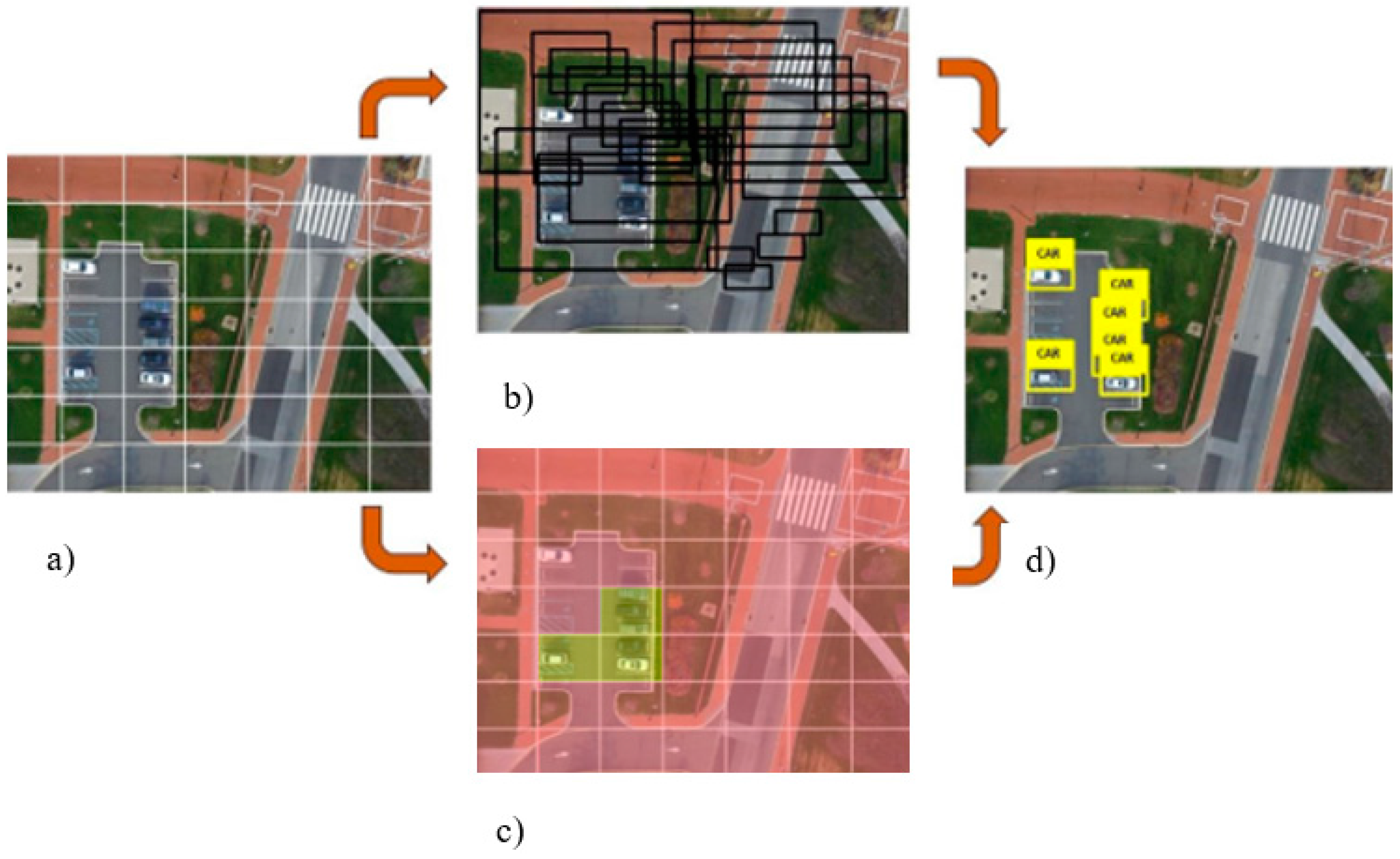

3.2. Real-Time Object Recognition from UAV Video Feed

4. Conclusions

Potential Applications in the Transportation and Civil Engineering Field

Author Contributions

Conflicts of Interest


| Classification | Class | Detected: Airplane | Detected: Not Airplane |
|---|---|---|---|
| Actual | Airplane | 526 | 14 |
| Actual | Not Airplane | 2 | NA |
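The counts in the table can be turned into summary metrics. The sketch below assumes the table is read as a confusion matrix with 526 correctly detected airplanes, 14 missed airplanes, and 2 false detections. The variable and function names are illustrative, and the resulting figures are derived here from the table rather than quoted from the authors. Because the true-negative cell is NA, only recall and precision are computed.

```python
# Counts read from the confusion matrix (assumed interpretation of the table).
true_positives = 526   # actual airplane, detected as airplane
false_negatives = 14   # actual airplane, detected as not airplane
false_positives = 2    # actual not airplane, detected as airplane
# The true-negative cell is NA, so accuracy and specificity are left out.


def recall(tp: int, fn: int) -> float:
    """Fraction of actual airplanes that were detected."""
    return tp / (tp + fn)


def precision(tp: int, fp: int) -> float:
    """Fraction of airplane detections that were actual airplanes."""
    return tp / (tp + fp)


airplane_recall = recall(true_positives, false_negatives)
airplane_precision = precision(true_positives, false_positives)

print(f"recall    = {airplane_recall:.4f}")     # recall    = 0.9741
print(f"precision = {airplane_precision:.4f}")  # precision = 0.9962
```

Under these assumptions, about 97.4% of the airplanes in the validation set were detected, and about 99.6% of the detections were correct.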

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Radovic, M.; Adarkwa, O.; Wang, Q. Object Recognition in Aerial Images Using Convolutional Neural Networks. J. Imaging 2017, 3, 21. https://0-doi-org.brum.beds.ac.uk/10.3390/jimaging3020021
