1. Introduction

The production of speech sounds consists of the co-ordinated and synchronised movements of an estimated 160 muscles operating the articulators, such as the lips and the tongue, to change the overall shape of the vocal tract (i.e., the mouth–throat channel). These changes in configuration alter the resonant properties of the vocal tract and hence its filter characteristics, which in turn modify, e.g., the periodic sound generated by the vibration of the vocal folds in the larynx [

1]. This process enables human beings to produce an immense range of different speech sounds (i.e., vowels and consonants), which are referred to as

phonemes. In a recent survey of the speech–sound inventories of 1672 languages of the world, it was found that these languages use a total of 2155 different speech sounds [

2]. This indicates that the sound production capability of mankind is truly immense considering that languages typically only have 35 speech sounds in their inventory. The production of speech sounds by human beings is studied in the science of (articulatory) phonetics.

In a phonetic perspective, speech sounds are typically described in terms of their manner and place of articulation [

3]. Manner of articulation refers to the degree to which the vocal channel is constricted by the articulators. In

plosives, for instance, the airflow through the vocal tract is fully blocked (e.g., by placing the tip and sides of the tongue against the roof of the mouth), which gives rise to sounds like [p, t, k] and [b, d, g]. In

fricatives, the tongue is raised and approximates the palate to such a degree that a very small opening remains for the airflow to escape with a clear hissing sound quality. Examples of fricatives are [f, s, v, z]. In

resonants, the tongue is also raised from its neutral position towards the palate but the gap remains relatively big so that the airstream does not become turbulent and the sound remains without hiss. In English, [j] and [w] are examples of resonants, but this constriction type is also found in most of the English vowels.

Place of articulation refers to the area in the vocal tract where the constriction occurs. In sounds like [t] and [d], for example, the constriction occurs in the alveolar area (i.e., close to the superior alveolar ridge, a small protuberance just behind the upper front teeth). In sounds like [k] and [g], the occlusion occurs in the velar area, with the tongue making contact with the back part of the roof of the mouth. In this perspective, the tongue is a very productive articulator, covering articulations in the dental area (anterior) to those in the pharyngeal area (posterior). In terms of place of articulation, 52% of the speech sounds in languages of the world have an articulation involving the tongue [

4].

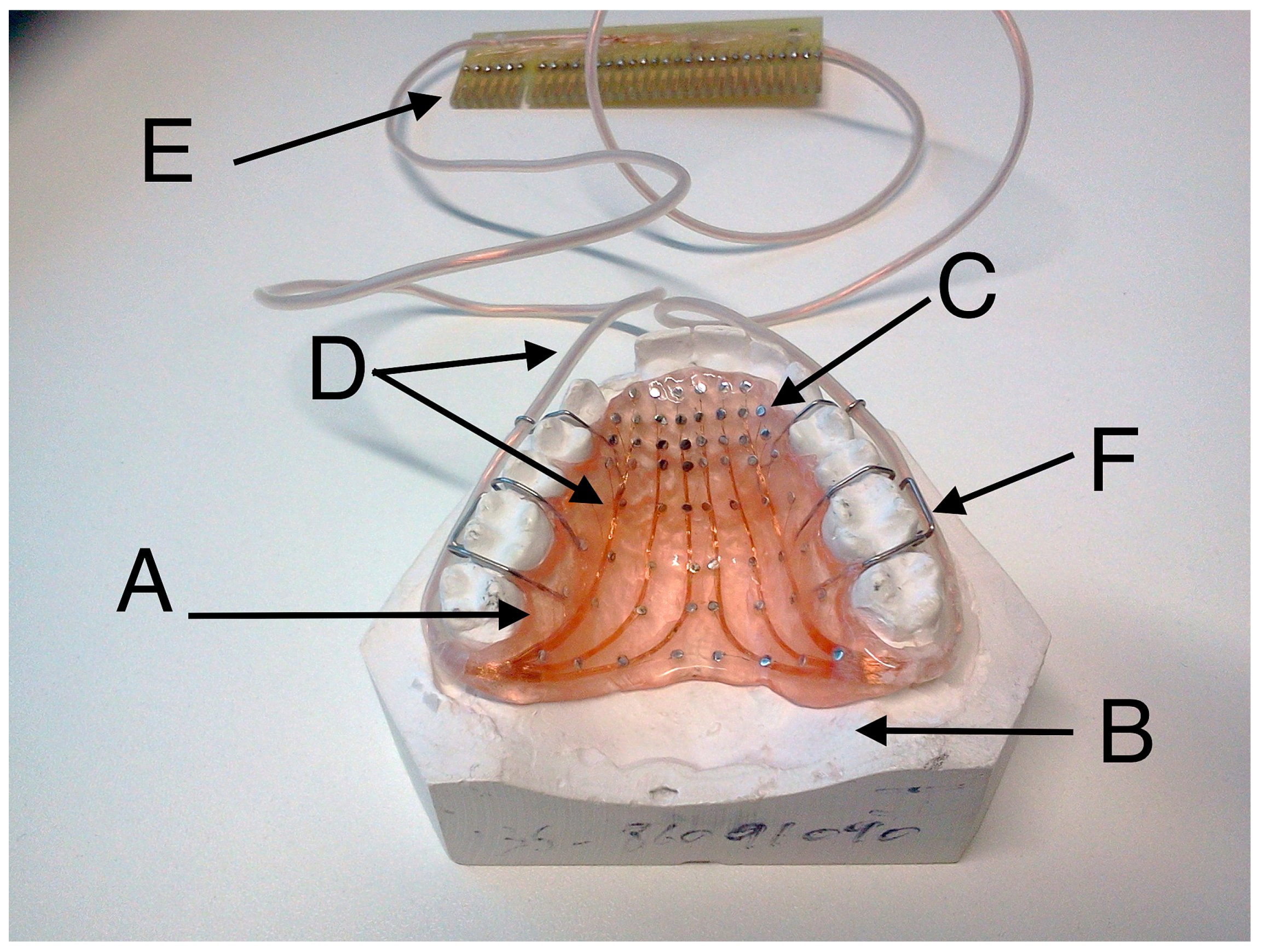

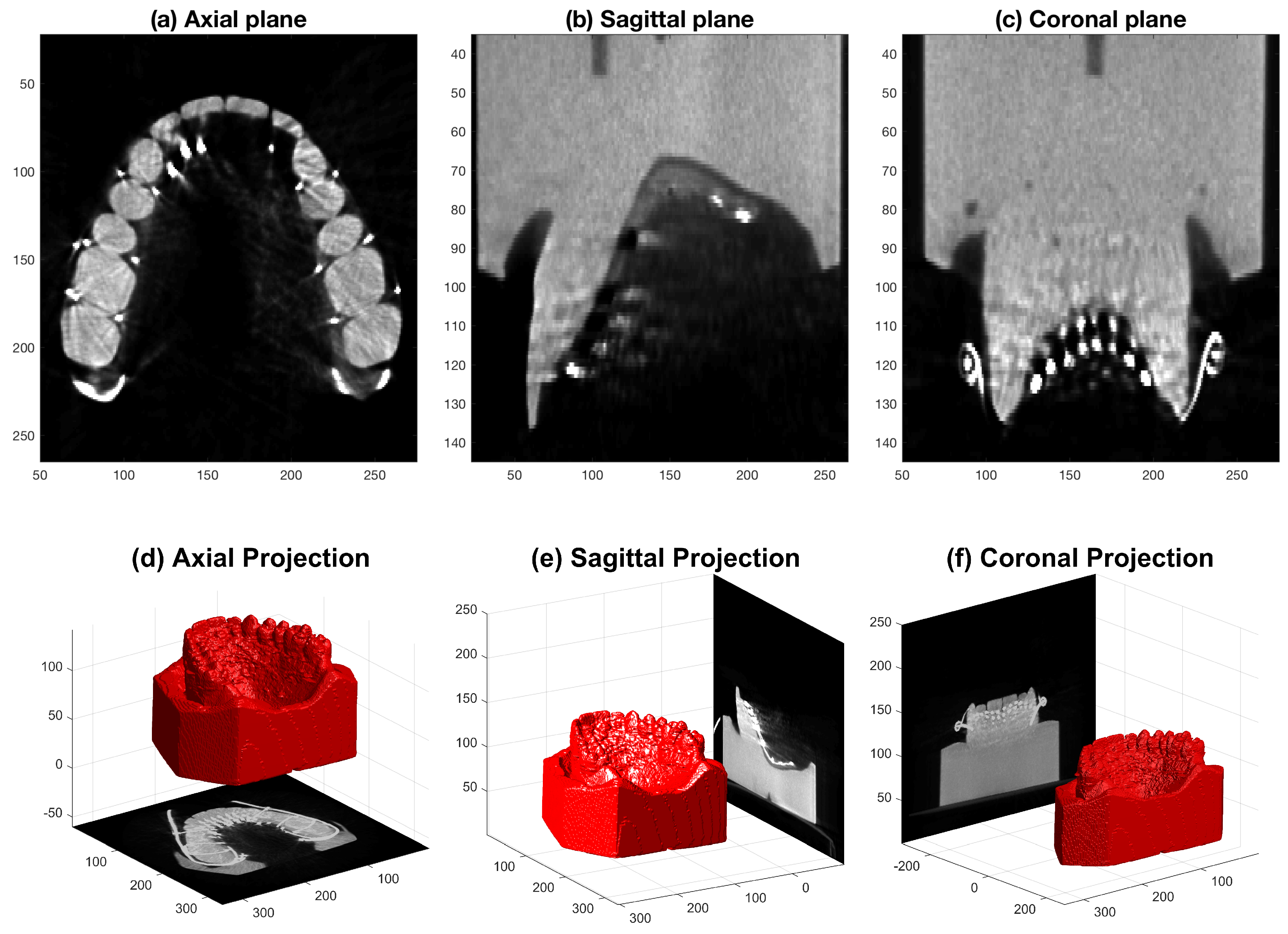

The place of consonant articulation and the timing of tongue contact with the hard palate can be visualised by means of the technique of electropalatography (EPG) [

5,

6]. In this instrumental technique, tongue–palate contact is recorded by means of a grid of electrodes placed on the surface of a thin artificial acrylic palate. The artificial palate is custom made to fit against a speaker’s hard palate by obtaining a plaster dental impression of the upper jaw (

Figure 1) [

7].

In electropalatographic investigations of speech, a participant’s speech is recorded while wearing the artificial palate. Tongue contact with the palate activates the electrodes in the contact area, since these react electrically to the humidity of the saliva on the surface of the tongue. The speech recordings can consist of free speech, or more likely, a set of pre-selected sentences that are designed to investigate particular combinations of speech sounds. The temporal sequence of tongue–palate contact patterns is recorded in temporal registration with the acoustic signal. In the present study, the synchronised EPG and audio data were obtained from a freely available web database known as MOCHA (MultiCHannel Articulatory database: English) TIMIT [

8], which consists of 460 English sentences that include the main connected speech processes and are read by female and male speakers.

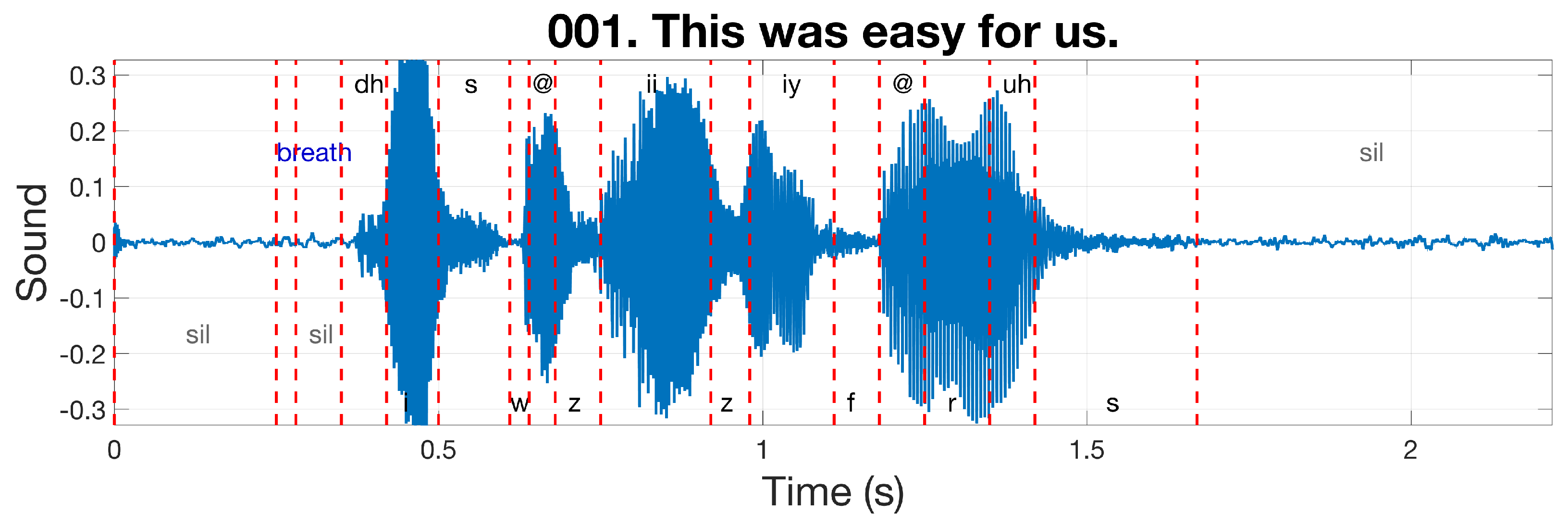

Figure 2 illustrates one sentence of the MOCHA TIMIT database; the soundwave (recorded at a 16 kHz sampling rate) is displayed with the corresponding phonemes separated by vertical dashed lines.

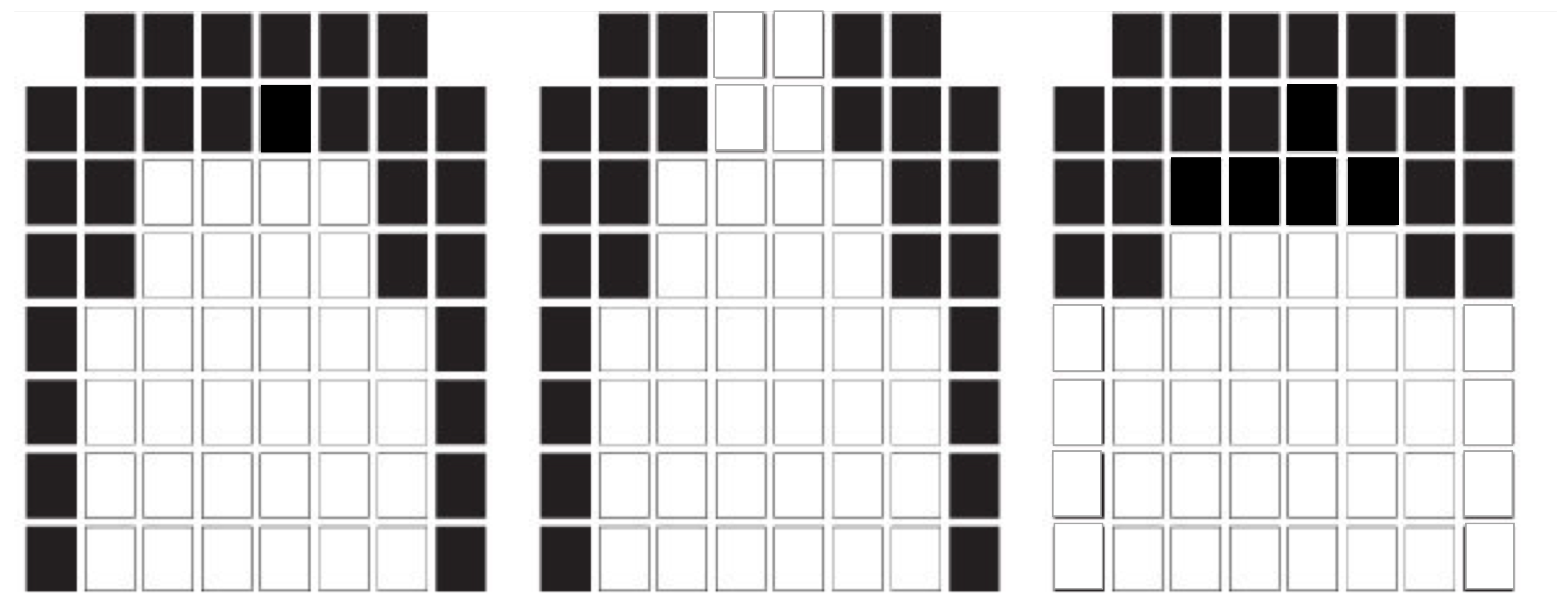

The synchronised EPG data are recorded in a separate file. Once a contact is registered by an electrode, an electrical signal is sent to an external processing unit [

9], and a graphical display of the pattern of electrode excitation is shown either printed on paper or on a screen (

Figure 3). When palatograms are shown on a screen, they can provide dynamic real-time visual feedback on the location and timing of tongue contacts with the hard palate. This direct articulatory information can be used during therapy to monitor and improve articulation patterns, especially in children [

10,

11,

12]. Visual feedback is particularly important in rehabilitating children with hearing impairment. EPG has the potential to be useful in the assessment and remediation of a variety of speech disorders [

13], including those due to hearing impairment [

14], cleft palate [

15], and Down’s syndrome [

12]. The technique has also been used to study tongue–palate contact patterns for different inventories of vowels and consonants [

16,

17].

In phonetic studies of speech production, it has been implicitly assumed that the different postures for speech are symmetrical in the left–right plane of the vocal tract, i.e., that the contact between the tongue and the palate on the right-hand side is equally extensive as the contact on the left. Characterisation of articulation asymmetry in native speakers would contribute to a better understanding of the speech production process and its relationship with both neural organisation and the anatomy of speech organs. From a practical viewpoint, it could provide a reference for Speech and Language Therapists when treating speech deficiencies in which asymmetry plays a role (e.g., dysarthria). For example, if normative data show that a particular speech sound is often produced in a highly symmetrical way, then asymmetrical articulation of this speech sound resulting from weakness or paralysis of the muscles on one side of the mouth (which is very common in speech disorders due to stroke) could result in reduced intelligibility for the sound in question.

Asymmetries in tongue posture during the articulation of speech sounds have only been systematically investigated in a very small number of electropalatography studies (e.g., [

18,

19]). The general conclusion was that, irrespective of the language involved, the vast majority of palatograms show some degree of asymmetrical tongue–palate contact (i.e., there is at least one active electrode for which the electrode at the mirror position is inactive). There was, however, little consistency in the direction and amount of asymmetry, which seemed to differ depending on the individual speaker and/or the speech sound. Furthermore, these conclusions were based on the data of a very small number of speakers (i.e., a grand total of 15). A recent meta-analysis provides evidence from a much larger sample [

20]. This study reviewed 1500 previously published palatograms representing a total of 225 speakers in 10 different languages. It was found that 83% of these palatograms showed some degree of asymmetrical contact. Palatograms with more tongue–palate contact on the left (45%) outnumbered those with more contact on the right (38%). The direction and amount of asymmetry depended on the place and manner of articulation. The results of this extensive review are being used to design an empirical EPG study with 20 speakers in which the direction and amount of asymmetry in tongue–palate contact will be studied as a function of (a) the type of speech sound, (b) anatomical asymmetries in speakers’ hard palates, and (c) speaker handedness. However, there may be a confounding factor due to the fact that the electropalate has been hand-made (a factor referred to herein as “palate manufacture”). Thus, even if there were no anatomical asymmetry, it could still be the case that electrodes in corresponding positions on the left and right side of the palate may not be positioned at equal vertical distances from the tongue. This could occur, for example, if the electropalate is not of perfectly uniform thickness such that electrodes placed on its surface do not exactly mimic the contours of the speaker’s hard palate. It could also occur if two corresponding electrodes (one on the left and one on the right) are not positioned at equal distances from the mid-sagittal plane. In summary, asymmetry in the palatogram for a given speech sound may arise from three sources: the shape of the speaker’s hard palate (i.e., anatomy), the positioning of the electrodes (i.e., palate manufacture) and the movement of the tongue.

The present paper describes a series of tools for (i) the analysis of asymmetry in palatograms and (ii) the distinction between asymmetry that arises from anatomical or manufacturing causes and asymmetry that is due to the movement of the tongue. Firstly, it introduces processing algorithms that allow the analysis of asymmetry in palatograms and that enable asymmetry metrics to be calculated for different speech sounds. Secondly, it describes an image processing framework that segments the main elements of an EPG palate to analyse the locations of the electrodes with respect to the sagittal plane. This provides information about asymmetry that arises due to a combination of speaker anatomy and palate manufacture.

A preliminary version of this work, presented at the 22nd Medical Image Understanding and Analysis (MIUA) Conference [

21], focused solely on the imaging and segmentation of the artificial palate. The present study also describes the analysis and visualisation of speech signals, and the derivation of asymmetry metrics from palatograms. Furthermore, the algorithms have been made publicly available through GitHub (see

Supplementary Materials).

2. Display and Analysis of Audio Signals and Palatograms

Whilst there are useful software packages to visualise and analyse speech (e.g.,

Praat [

22]), researchers sometimes require more flexibility to analyse data of interest. Thus, this section describes a series of MATLAB

® (The Mathworks™, Natick, MA, USA) routines that are available open-source in the

GitHub software development platform [

23]. The most important functions are contained in the main folder (

https://github.com/reyesaldasoro/ElectroPalatography). The extension

.m indicates a file in which a MATLAB

® function is saved, e.g.,

interpretLabelledPhonemes.m. Alternatively, the functions can be presented as they are used with input and output parameters, e.g.,

output = function(input); for example:

TextGrid = Lab_to_TextGrid(dataIn);.

2.1. Conversion between Data Formats

The MOCHA TIMIT database, in addition to containing synchronised EPG and acoustic data, has a

.lab (‘label’) file saved for each sentence produced by each speaker. In this file, each phoneme is represented by a row, which contains the start and finish times of the phoneme followed by a code to identify the phoneme. An example of the first 8 lines of the

.lab file for the sentence ”This was easy for us” is shown in the

Appendix A.

In phonetics research, a common means of recording the start and finish times of phonemes is via a

TextGrid file, a proprietary format of the popular software Praat. These files have a more complicated format than the MOCHA TIMIT

.lab files, with markup fields that allow words and phonemes to be identified. The files use the extension .

TextGrid and an example of the first part of a file is shown in the

Appendix A.

Conversion from .lab to .TextGrid could be a valuable tool for researchers who wish to use the MOCHA TIMIT database. It is performed with the function TextGrid = Lab_to_TextGrid(dataIn);. The input parameter dataIn can be either a file name (which is read, converted to .TextGrid and saved in the folder where the .lab file is located) or a folder, in which case all the .lab files in the folder are converted to .TextGrid.

The code requires one intermediate function to convert the .lab file to a MATLAB® cell. This function can be used separately to analyse, for example, acoustic or EPG data at the level of the phoneme in MATLAB®. There is a parallel function to read a .TextGrid file and convert it to a MATLAB® cell. Both of these functions are called from the function interpretLabelledPhonemes.m, which reads a file name as the input and, as the output, produces a MATLAB® cell containing the words (if available) and the phonemes along with their corresponding start and finish times. The function automatically detects the name of the file (it can end in ”d” for .TextGrid or ”b” for .lab), and calls the correct function, either convert_LAB_to_Phonemes.m or convert_TextGrid_to_Phonemes.m.

2.2. Sound Waves and Palatograms

In the MOCHA TIMIT database, the sound waves are saved as audio files, with the extension .wav, and the palatograms are saved in a file with the extension .epg.

The function readAudioFile.m reads the audio file and automatically calculates some important parameters that will be used later on: sample rate, number of samples, maximum and minimum amplitudes.

The process of reading the electropalatography data from an .epg file requires several files: readPalatogram.m, EPG_to_Palatogram.m, asymmetry_projection.m and EPG_Boxes.mat. These files are necessary as many parameters are calculated in this step, including the whole time sequence of palatograms and their asymmetry characteristics (to be described below). It is recommended to read the audio file prior to reading the EPG data, as the audio sample rate is necessary for some calculations.

2.3. Spectrum Through a Short-Time Fourier Transform

The Fourier Transform [

24], which is often calculated using the popular Fast Fourier Algorithm [

25], is a well-known mathematical operation that translates a signal from the spatial or time domain into the Fourier or frequency domain. The transformation of sound signals into the Fourier domain is widely used in phonetics, as it provides information about how the energy contained in each phoneme is distributed along the frequency spectrum. Different calculation methods are possible, which lend themselves to different display formats (see

Figure 4). For example, if the transformation is applied to a time window corresponding to an entire phoneme, the frequency components of the phoneme can be visualised by showing the amplitude of the Fourier signal on the vertical axis as a function of the frequency on the horizontal axis. Alternatively, an audio signal can be partitioned into smaller time windows, each of which is transformed separately. The amplitude is then converted to brightness and arranged vertically, where the vertical axis represents frequency. Columns of brightness corresponding to consecutive time-points (or window numbers) are then arranged sequentially (from left to right) to form an image known as a spectrogram. Thus, the level of brightness at any point in the image represents the amplitude of the Fourier signal at a particular frequency and time. The short-time Fourier Transform of the audio signal is calculated with the function

shortTimeFourierAnalysis.m, which is used in the following way:

EPG_parameters=shortTimeFourierAnalysis(EPG_parameters);.

2.4. Asymmetry Visualisation

Asymmetry in an EPG investigation refers to the outcome where electrodes are activated on one side of the palatogram, whilst those in the mirror position are not. The software described here allows the analysis of asymmetry in several ways. First of all, each palatogram is analysed for asymmetry; electrodes are recorded as either active in pairs (symmetric) or active on the left/right side only (

Figure 5). The information per palatogram is saved within one of the fields of

EPG_parameters, which is a structure. Specifically, it is saved in

EPG_parameters.PalatogramAsym(:,:,:,1), where

1 indicates that the first palatogram in the sequence is the palatogram of interest. Thus, asymmetry maps for individual palatograms can be displayed with the following command:

imagesc(EPG_parameters.PalatogramAsym(:,:,:,1)). Montages are a useful means of visualising asymmetry maps for a sequence of palatograms simultaneously, which can be achieved using the following command:

montage(EPG_parameters.PalatogramAsym(:,:,:,1:130)). An example of a montage is shown in

Figure 6a.

Two types of cumulative image can be generated to display the pattern of asymmetry across a sequence of images. First, the activation of each electrode is accumulated to provide a map that shows the total activation of the electrodes. Such an image can be used to observe whether the activation is symmetrical on the left and right side of the palate and can be displayed in the following way:

displayPalatogram(EPG_parameters,-1) (

Figure 6b). Second, the activation of the electrode is accumulated only if it was activated asymmetrically, i.e., only if the corresponding electrode in the mirror position was not activated. This can be displayed in the following way:

displayPalatogram(EPG_parameters,-2) (

Figure 6c). Both of these display formats allow the pattern of asymmetry across a sentence to be observed in a single image.

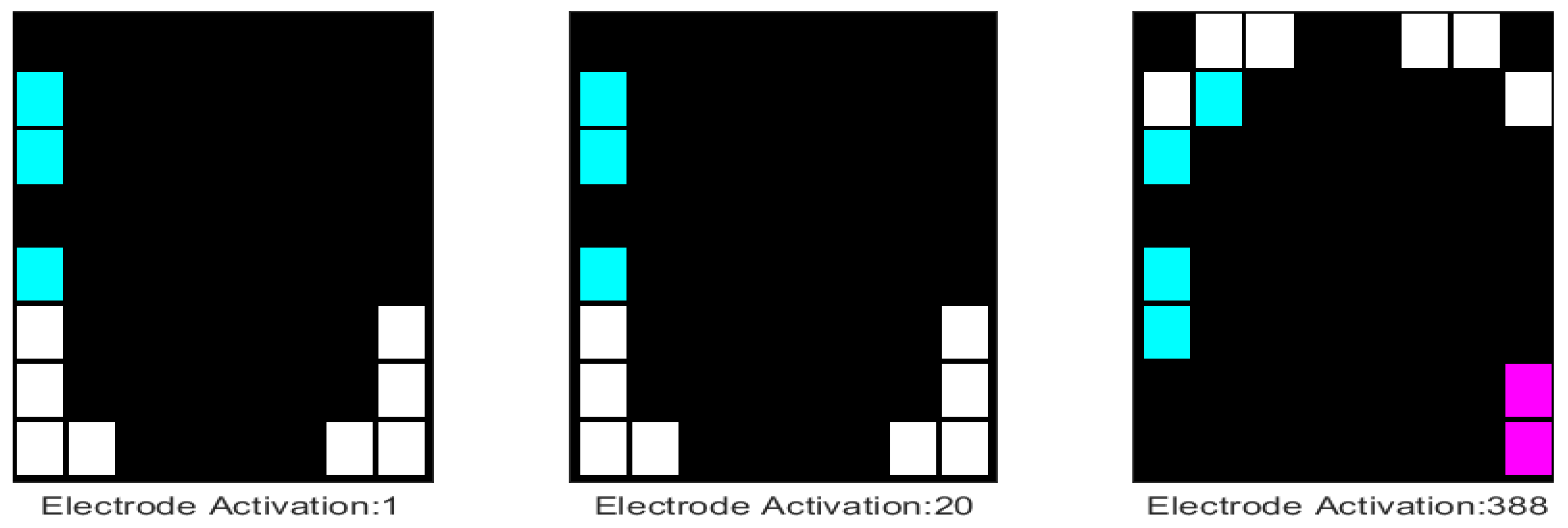

The previous visualisations are useful as an indication of the average asymmetry for a given sentence or speaker. However, as mentioned in

Section 1, the direction and amount of asymmetry are likely to depend on the type of speech sound. Thus, it could be of interest to analyse the pattern of asymmetry as a function of individual phonemes. The specific tools for a per-phoneme approach are described in the folder

ElectroPalatography/extractPhonemes/, and can be invoked by running the file

ComparePhonemes.m. The user selects a set of phonemes of interest, for instance:

listPhonemes = {’d’,’s’,’ng’}; (where ’ng’ is the phoneme that occurs at the end of the word ’sing’, for example), as well as a folder containing the set of sentences to be analysed. For each occurrence of each phoneme in this set of sentences, the programme extracts a cumulative palatogram that is obtained by summing over all the palatograms corresponding to the duration of the phoneme. These palatograms are stored as a 3D matrix in column 2 of the variable

avPhoneme_tot. The following illustrations were obtained from 69 different sentences of the MOCHA TIMIT database (chosen at random), uttered by 2 different speakers called

fsew and

msak. The first letter

f/m corresponds to female/male and the remaining three letters correspond to the initials of the speaker. The asymmetry pattern for these two speakers for the three phonemes mentioned above is shown in

Figure 7. Note that these palatograms indicate the accumulation of the individual electrode activations over time across (a) all occurrences of the phoneme within the set of 69 sentences and (b) the total duration of each occurrence of each phoneme. It can be seen that speaker

fsew exhibits greater asymmetry than speaker

msak.

In addition to the visualisation, metrics representing the amount and direction of asymmetry in the palatogram are calculated in two different ways: (a) The number of activations on the left/right side of the palatogram is divided by the total number of activations. Thus, a perfectly symmetrical case would have a result 0.5/0.5, whilst a perfectly asymmetrical case would correspond to either 1/0 or 0/1 (note that the sum of the two values is always equal to 1). The two values are stored in columns 3 and 4, respectively, of the variable

avPhoneme_tot. The asymmetry values reported in

Figure 7 use this notation. (b) A single asymmetry metric is defined as: (number of right activations - number of left activations) / (number of right activations + number of left activations). Thus, a perfectly symmetrical case would have a result 0, whilst a perfectly asymmetrical case would correspond to either +1 or −1. These values are stored for each phoneme occurrence in column 9 of

avPhoneme_tot.

It is also possible to visualise how the asymmetry metric varies across different realisations of the same phoneme. This is illustrated in

Figure 8. Thus, for the phoneme [s], for example, there were approximately 80 occurrences of the phoneme across the 69 sentences. The asymmetry index shown on the y-axis was obtained according to method (a) described above, where only the first value is shown (column 3 of the variable

avPhoneme_tot). In phonetics, the term ”coarticulation” is used to refer to the fact that in continuous speech, the articulatory features of an individual speech sound may be influenced by the phonetic context, i.e., the preceding and/or subsequent speech sound. The MOCHA TIMIT dataset was designed to test a wide variety of the phoneme sequences that occur in English. Thus, the variation in asymmetry across different realisations of the same phoneme in

Figure 8 is mainly due to variation in the adjacent speech sounds. In [

26], we use the MOCHA TIMIT data to show that [r] and [l] exhibit greater variability in asymmetry than other phonemes, which is in line with literature suggesting that these sounds are particularly prone to coarticulatory effects. Future work will also examine the change in the pattern of asymmetry across the duration of individual phonemes, for different phonemes produced in a variety of phonetic contexts.

4. Discussion

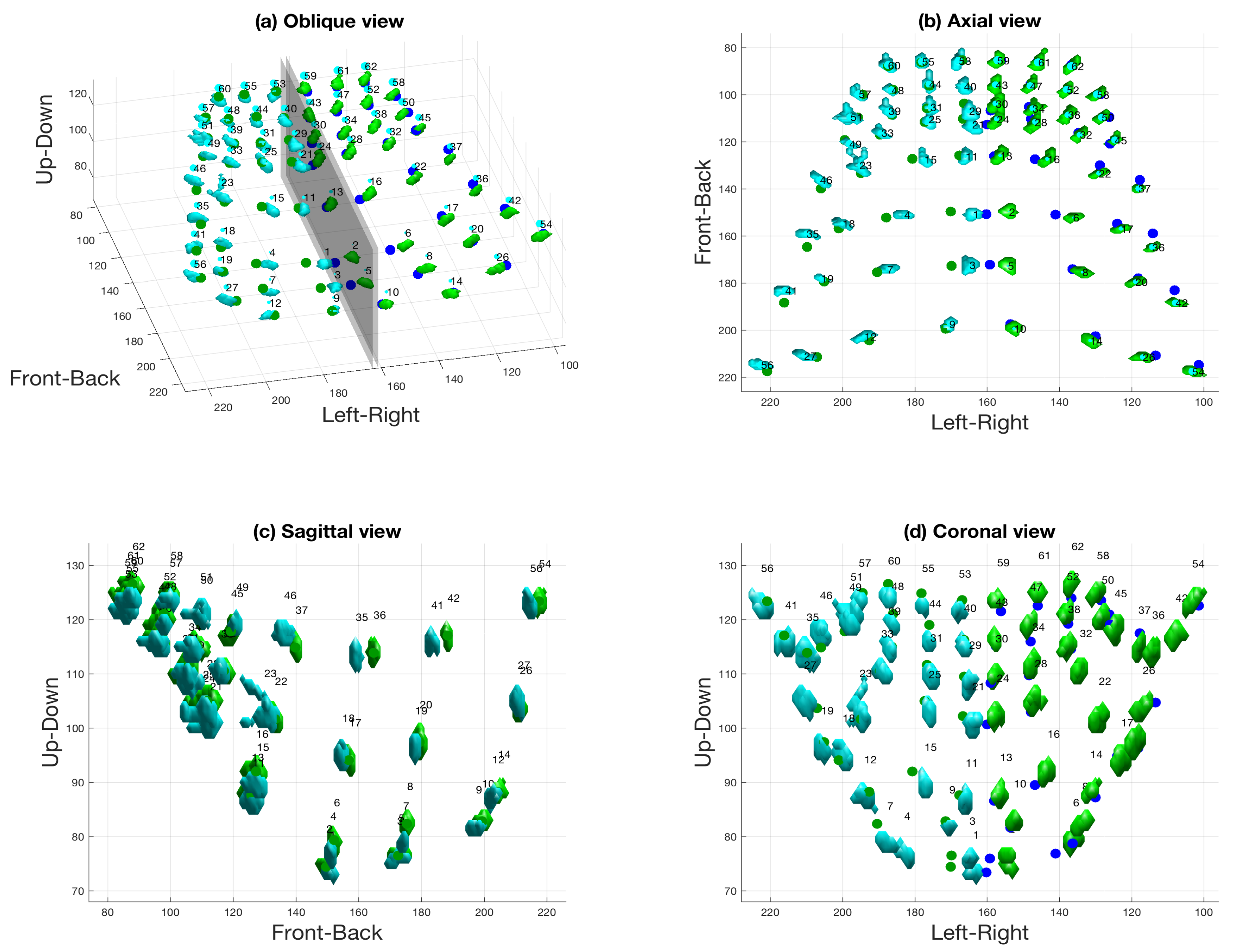

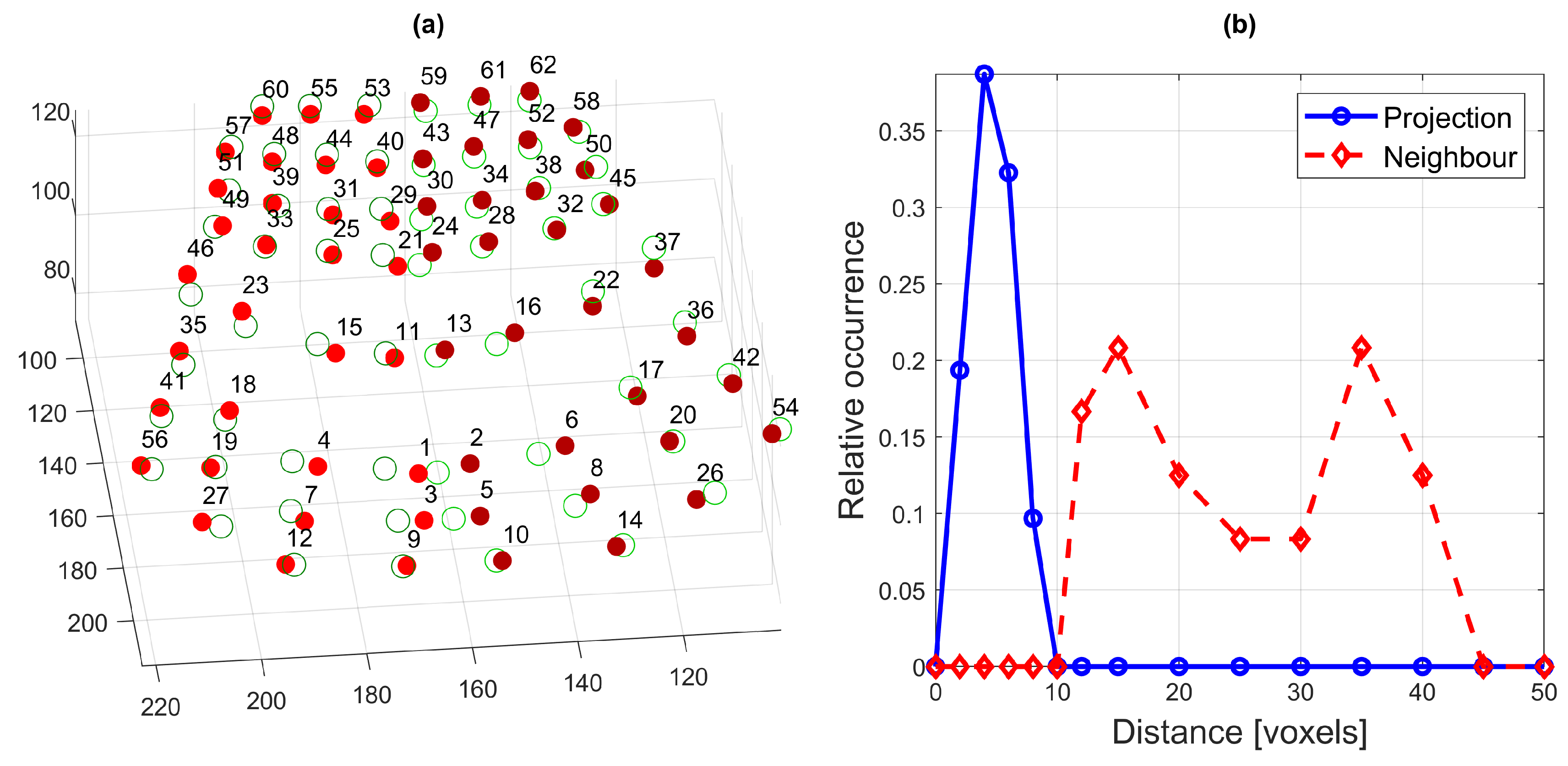

The segmentation steps described above provided a successful segmentation of the electrodes as single elements that were uniquely located in the three-dimensional space. In the case of this particular palate, any asymmetry observed in a palatogram is due only to the positioning of the tongue and not due to the shape of the hard palate of the speaker and/or irregularities in the palate manufacture.

From

Figure 12b, it can be seen that the majority of the electrodes corresponded to their mirror position, especially in the anterior section and the bottom row of the palate; that is, the dots are barely visible, as they coincide with the positions of the electrodes. A few electrodes seem to be displaced in the front-back direction (electrodes 35, 36, 41, 42), while others are displaced in the left–right direction (electrodes 1, 2, 3, 5). The displacement in the up-down direction, which is the main direction of interest, can be appreciated in

Figure 12c,d. It can be observed that the displacement is very small, as there are no dots to be seen in the sagittal view, and in the coronal view, the visible dots are mainly due to the left–right displacement seen in the axial view. In the case of this particular palate, any asymmetry observed in a palatogram is due only to the positioning of the tongue and not due to the shape of the speaker’s hard palate and/or irregularities in the palate manufacture.

This work provides a set of tools that can be used in the analysis of electropalatography, and especially in the analysis of asymmetry – both asymmetry in the pattern of activation recorded by the electropalate, and asymmetry in electrode positioning due to the speaker’s anatomy and/or palate manufacture. There is considerable interest in the asymmetry of the mandible [

29,

30] and the shape and morphometry of the palatal rugae [

31,

32]. As mentioned in

Section 1, future work will aim to determine whether speaker handedness influences left–right asymmetry in tongue movement. To this end, individuals will be selected in two groups, each with different hand-dominance.

Another future direction will be the creation of three-dimensional visualisation outputs, which better reflect the anatomy of the phenomenon than the palatograms previously shown. This may be particularly useful in therapy where the provision of real-time visual feedback of tongue movement can be effective in the remediation of certain types of intractable speech problem.

Finally, this paper has established the methodology for producing master calibration palatograms for different speech sounds. These palatograms could capture the most prototypical realisation of the speech sounds of English provided that a large enough sample would be available. The processing tools presented in this paper allow determination of the variability in the pattern of tongue–palate contact due to individual variation and varying phonetic context. Such information could be used to objectively assess speech accuracy. It should be kept in mind, however, that the relationship between speech production and speech perception may be more complex than suggested here, especially in clinical populations.