Spectrum Correction Using Modeled Panchromatic Image for Pansharpening

Abstract

1. Introduction

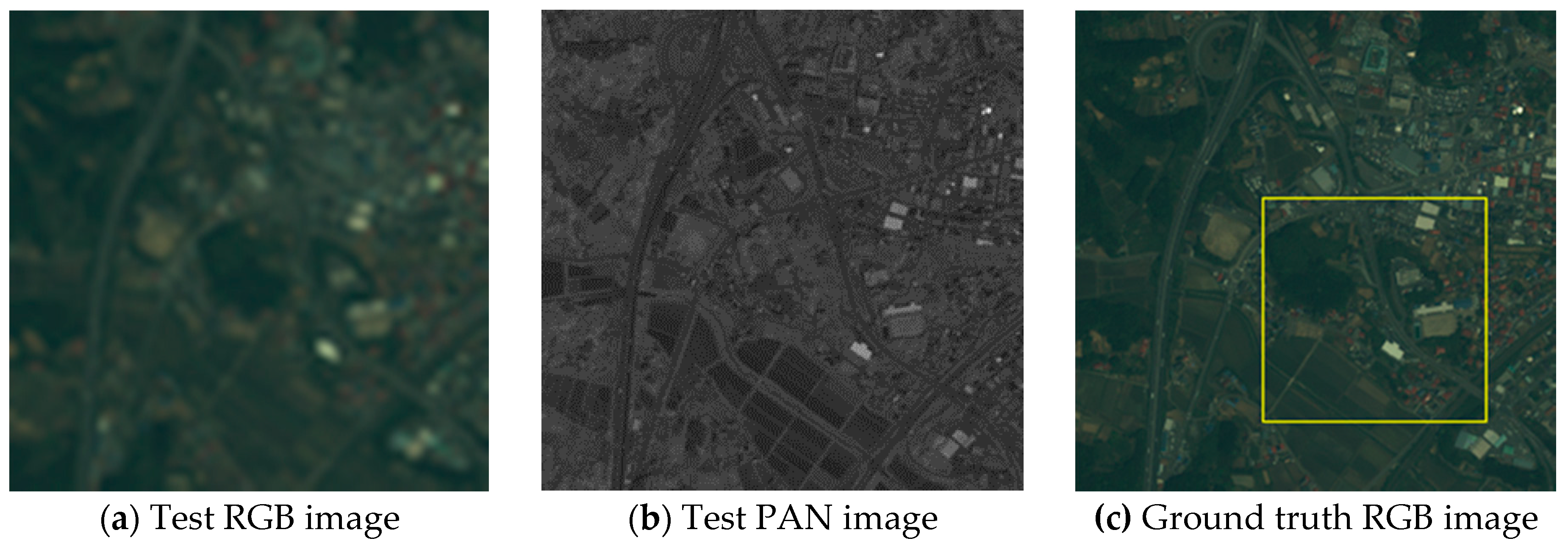

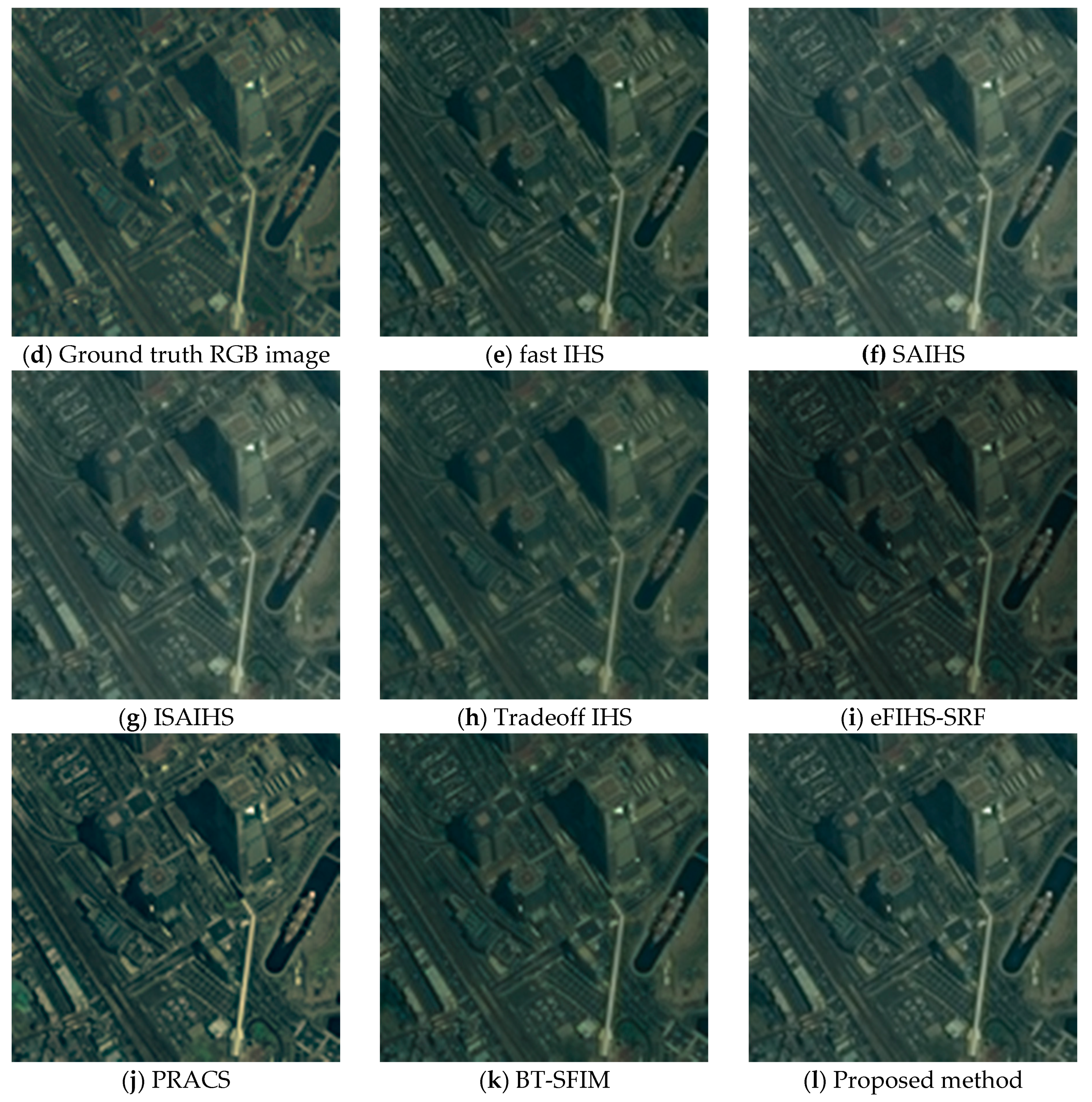

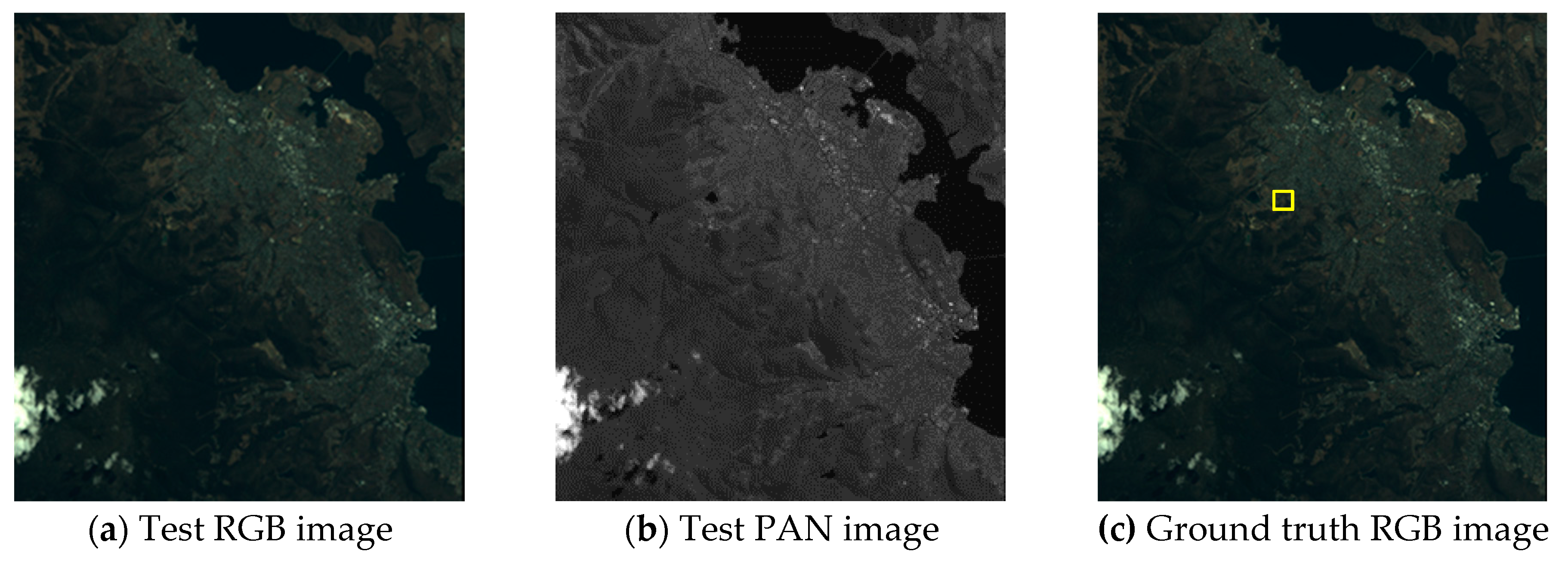

2. Materials and Methods

2.1. Image Datasets

2.2. Proposed Method

2.2.1. Notation

2.2.2. High-Spatial-Resolution Intensity of the RGB Image

2.2.3. Low-Spatial-Resolution PAN Image Model

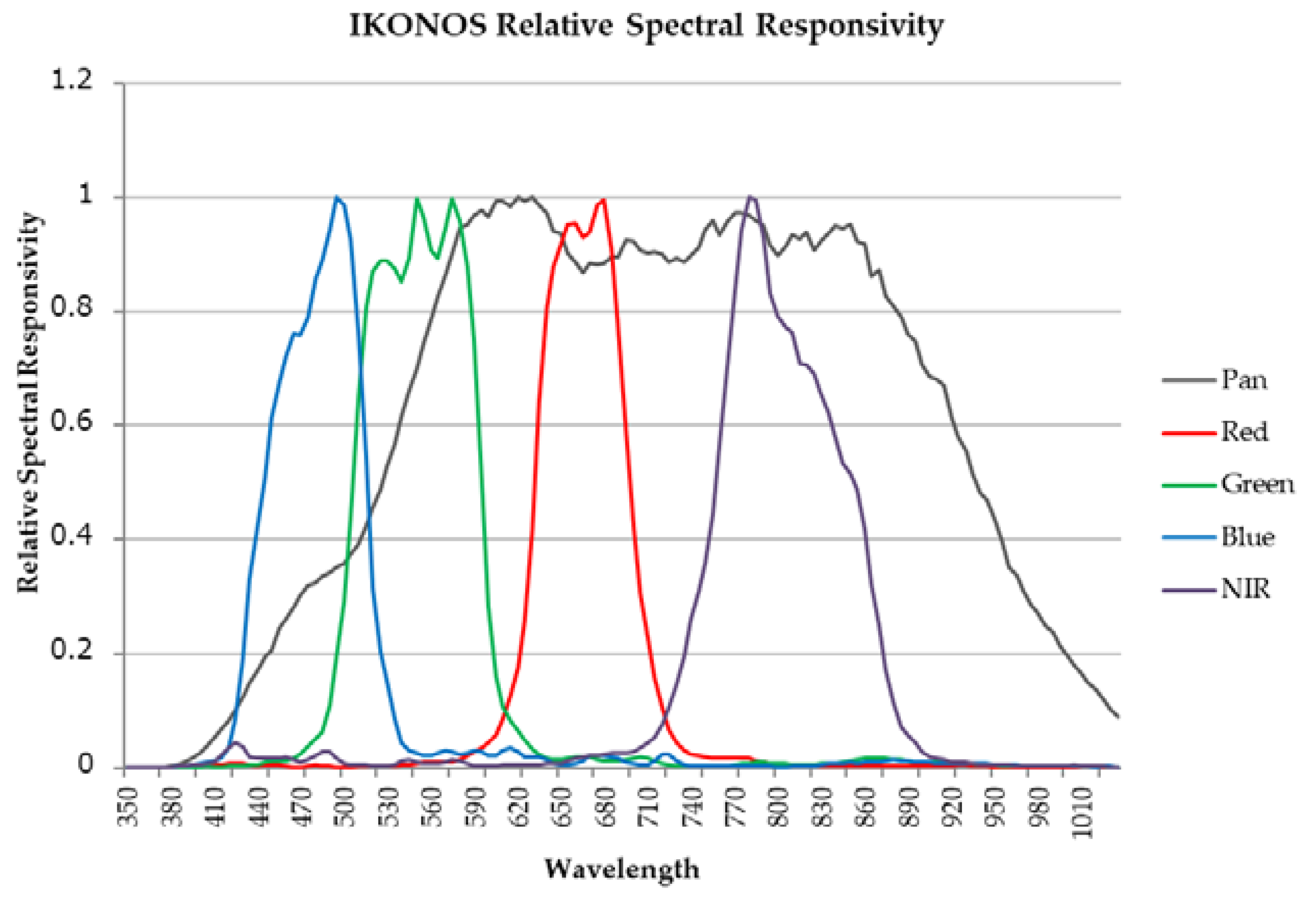

- The relative spectral response of the PAN band shows low sensitivity from the blue to green bands [27].

- The relative spectral response of the PAN band shows low sensitivity in some regions of the red and NIR bands.

- The PAN band includes the NIR band.

2.2.4. Estimation of the Intensity Correction Coefficient

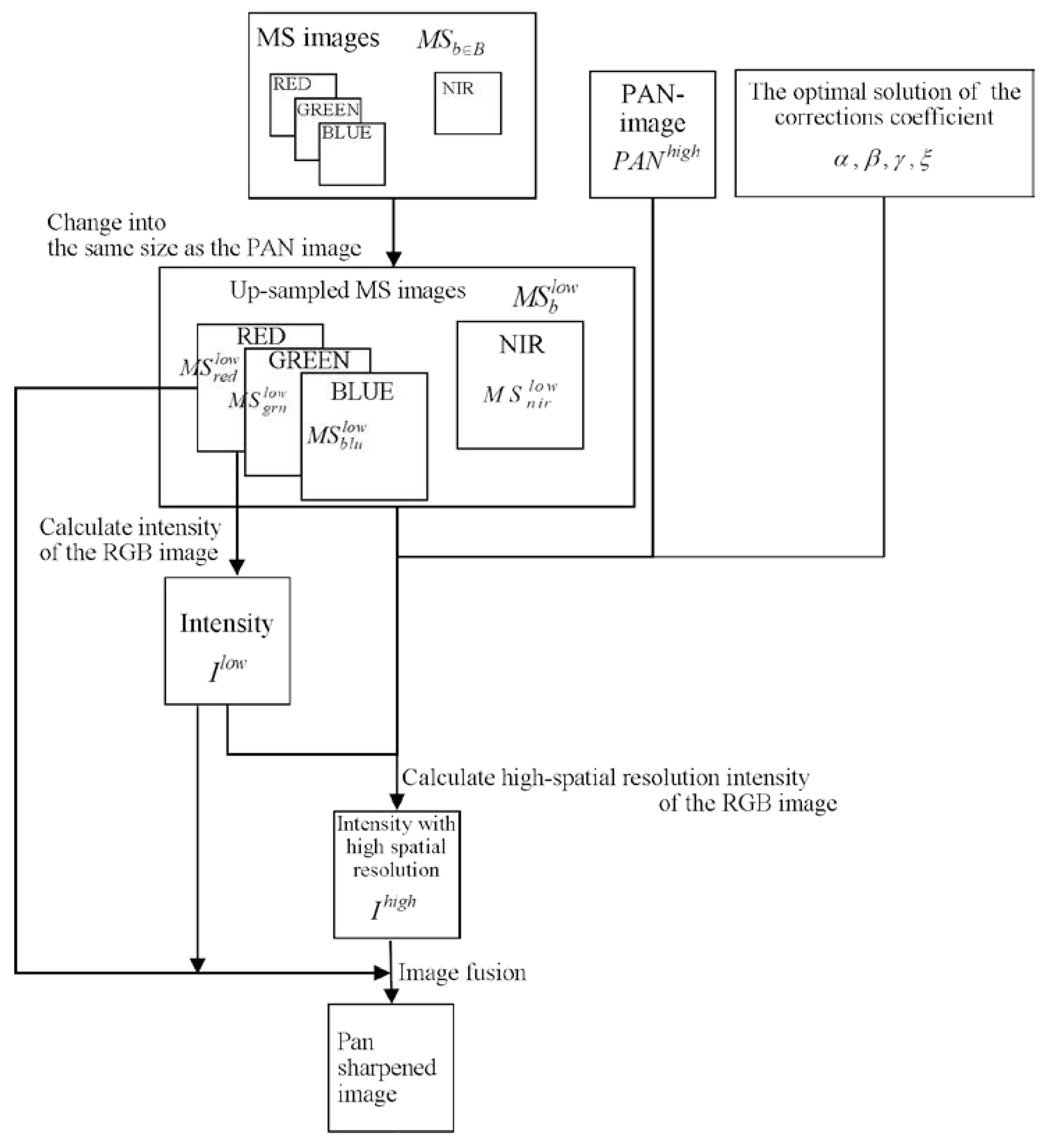

2.2.5. Fusion Process

- Resize the MS images to the size of the PAN image using bicubic interpolation to produce enlarged MS images.

- Calculate the enlarged low-spatial-resolution intensity from the enlarged MS images, as expressed by Equation (1).

- Calculate the high-spatial-resolution intensity using the estimated correction coefficients, as expressed by Equations (3) and (4).

- Synthesize the pansharpened (PS) image from the enlarged MS images and the low- and high-spatial-resolution intensities with GIHS, as expressed by Equation (7).
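
The final step above can be illustrated with a minimal sketch of the standard generalized IHS (GIHS) injection, in which the same intensity difference is added to every enlarged MS band. This is not the authors' implementation: the function names are hypothetical, the low-resolution intensity is assumed to be the plain mean of the RGB bands (Equation (1) is not reproduced here), pixel replication stands in for bicubic interpolation, and the high-spatial-resolution intensity is taken as a given input rather than computed from Equations (3) and (4).

```python
import numpy as np


def upsample_nearest(ms, factor):
    """Enlarge a (bands, H, W) stack by pixel replication.

    Stand-in for the bicubic interpolation named in the text; swap in a
    bicubic resampler from an image library for real use.
    """
    return ms.repeat(factor, axis=1).repeat(factor, axis=2)


def gihs_fuse(ms_up, intensity_high, rgb_index=(0, 1, 2)):
    """Generalized IHS injection: add one intensity detail map to all bands.

    ms_up          : (bands, H, W) enlarged MS stack
    intensity_high : (H, W) high-spatial-resolution intensity (taken as given)
    rgb_index      : bands averaged to form the low-resolution intensity
                     (assumption: unweighted mean)
    """
    intensity_low = ms_up[list(rgb_index)].mean(axis=0)
    detail = intensity_high - intensity_low
    return ms_up + detail[np.newaxis, :, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ms = rng.random((3, 4, 4))            # toy RGB image at MS resolution
    ms_up = upsample_nearest(ms, 4)       # enlarged to PAN resolution
    intensity_high = rng.random((16, 16)) # placeholder for the corrected intensity
    fused = gihs_fuse(ms_up, intensity_high)
    print(fused.shape)
```

With the unweighted-mean assumption, the mean of the fused RGB bands equals the supplied high-resolution intensity, which is the defining property of this kind of component substitution.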

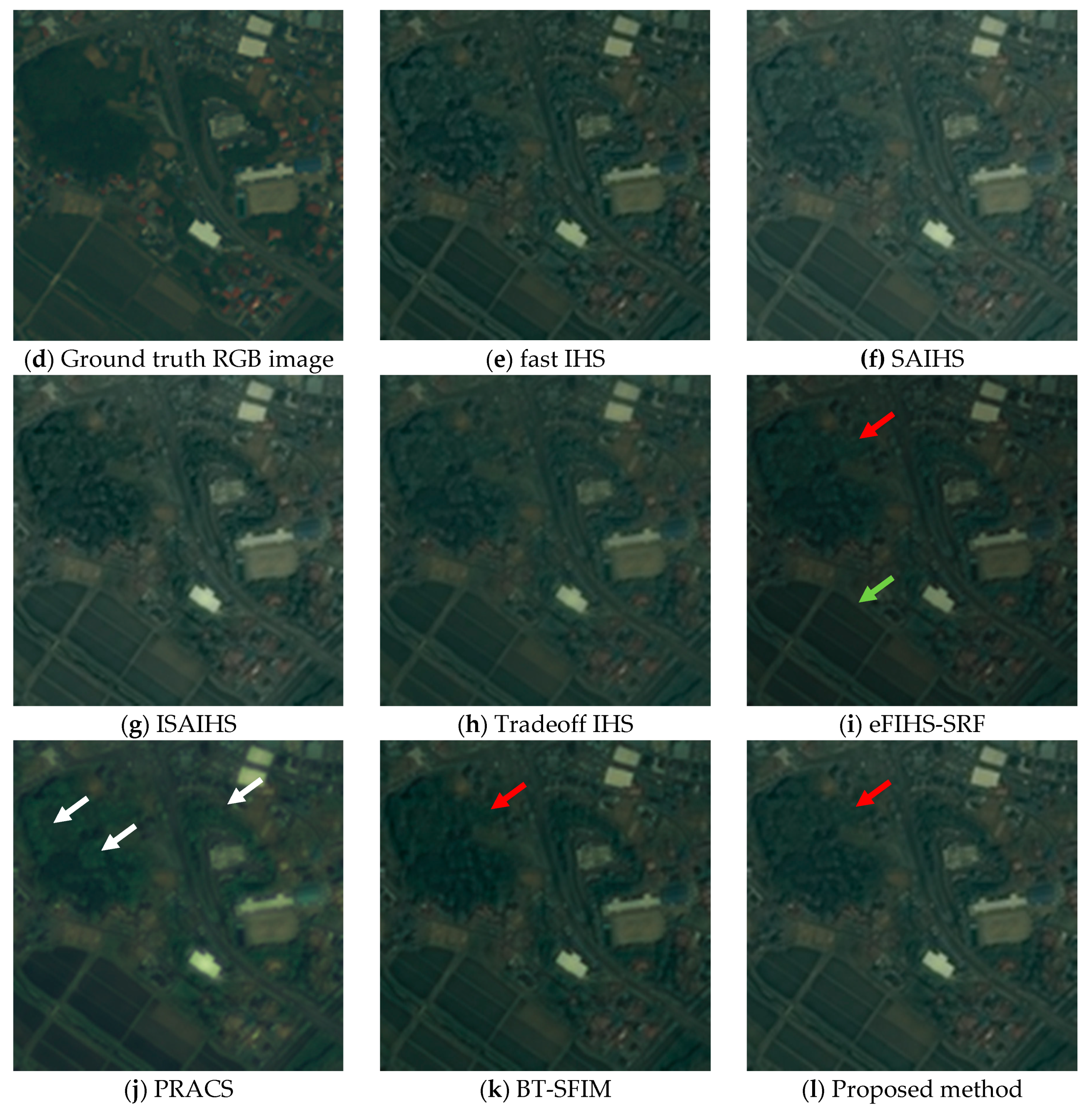

3. Results

3.1. Experimental Setup

3.2. Image Quality Metric

3.2.1. Notation

3.2.2. Numerical Quality Metrics

3.3. Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Pohl, C.; Van Genderen, J.L. Review article Multisensor image fusion in remote sensing: Concepts, methods and applications. Int. J. Remote Sens. 1998, 19, 823–854.

2. Zhou, J.; Civco, D.L.; Silander, J.A. A wavelet transform method to merge Landsat TM and SPOT panchromatic data. Int. J. Remote Sens. 1998, 19, 743–757.

3. Laben, C.A.; Brower, B.V. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6,011,875, 4 January 2000.

4. Aiazzi, B.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of MS+Pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

5. Mallat, S.G. A theory for multiresolution signal decomposition: The wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 674–693.

6. Nason, G.P.; Silverman, B.W. The Stationary Wavelet Transform and some Statistical Applications; Springer: New York, NY, USA, 1995; ISBN 9780387945644.

7. Shensa, M.J. The Discrete Wavelet Transform: Wedding the À Trous and Mallat Algorithms. IEEE Trans. Signal Process. 1992, 40, 2464–2482.

8. Burt, P.J.; Adelson, E.H. The Laplacian Pyramid as a Compact Image Code. IEEE Trans. Commun. 1983, 31, 532–540.

9. Starck, J.L.; Candès, E.J.; Donoho, D.L. The curvelet transform for image denoising. IEEE Trans. Image Process. 2002, 11, 670–684.

10. Do, M.N.; Vetterli, M. The contourlet transform: An efficient directional multiresolution image representation. IEEE Trans. Image Process. 2005, 14, 2091–2106.

11. Zhang, Q.; Guo, B. Multifocus image fusion using the nonsubsampled contourlet transform. Signal Process. 2009, 89, 1334–1346.

12. Guo, K.; Labate, D. Optimally sparse multidimensional representation using shearlets. SIAM J. Math. Anal. 2007, 39, 298–318.

13. Luo, X.; Zhang, Z.; Wu, X. A novel algorithm of remote sensing image fusion based on shift-invariant Shearlet transform and regional selection. AEU Int. J. Electron. Commun. 2016, 70, 186–197.

14. Vicinanza, M.R.; Restaino, R.; Vivone, G.; Dalla Mura, M.; Chanussot, J. A pansharpening method based on the sparse representation of injected details. IEEE Geosci. Remote Sens. Lett. 2015, 12, 180–184.

15. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

16. Fasbender, D.; Radoux, J.; Bogaert, P. Bayesian data fusion for adaptable image pansharpening. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1847–1857.

17. Wang, X.; Bai, S.; Li, Z.; Song, R.; Tao, J. The PAN and MS image pansharpening algorithm based on adaptive neural network and sparse representation in the NSST domain. IEEE Access 2019, 7, 52508–52521.

18. Fei, R.; Zhang, J.; Liu, J.; Du, F.; Chang, P.; Hu, J. Convolutional Sparse Representation of Injected Details for Pansharpening. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1595–1599.

19. Yin, H. PAN-Guided Cross-Resolution Projection for Local Adaptive Sparse Representation-Based Pansharpening. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4938–4950.

20. Imani, M. Band dependent spatial details injection based on collaborative representation for pansharpening. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4994–5004.

21. Garzelli, A.; Nencini, F.; Capobianco, L. Optimal MMSE pan sharpening of very high resolution multispectral images. IEEE Trans. Geosci. Remote Sens. 2008, 46, 228–236.

22. Thomas, C.; Ranchin, T.; Wald, L.; Chanussot, J. Synthesis of multispectral images to high spatial resolution: A critical review of fusion methods based on remote sensing physics. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1301–1312.

23. Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2565–2586.

24. Ghamisi, P.; Rasti, B.; Yokoya, N.; Wang, Q.; Hofle, B.; Bruzzone, L.; Bovolo, F.; Chi, M.; Anders, K.; Gloaguen, R.; et al. Multisource and multitemporal data fusion in remote sensing: A comprehensive review of the state of the art. IEEE Geosci. Remote Sens. Mag. 2019, 7, 6–39.

25. Qiu, R.; Zhang, X.; Wei, B. An Improved Spatial/Spectral-Resolution Image Fusion Method Based on IHS-MC for IKONOS Imagery. In Proceedings of the 2009 2nd International Congress on Image and Signal Processing, Tianjin, China, 17–19 October 2009; pp. 1–4.

26. Tu, T.M.; Su, S.C.; Shyu, H.C.; Huang, P.S. A new look at IHS-like image fusion methods. Inf. Fusion 2001, 2, 177–186.

27. Tu, T.M.; Huang, P.S.; Hung, C.L.; Chang, C.P. A fast intensity-hue-saturation fusion technique with spectral adjustment for IKONOS imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 309–312.

28. Choi, M. A new intensity-hue-saturation fusion approach to image fusion with a tradeoff parameter. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1672–1682.

29. Choi, M.; Kim, H.H.; Cho, N. An improved intensity-hue-saturation method for IKONOS image fusion. Int. J. Remote Sens. 2006, 13.

30. González-Audícana, M.; Otazu, X.; Fors, O.; Alvarez-Mozos, J. A low computational-cost method to fuse IKONOS images using the spectral response function of its sensors. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1683–1690.

31. Kay, S.M. Fundamentals of Statistical Signal Processing: Estimation Theory; PTR Prentice Hall: Upper Saddle River, NJ, USA, 1993; ISBN 9780133457117.

32. Choi, J.; Yu, K.; Kim, Y. A new adaptive component-substitution-based satellite image fusion by using partial replacement. IEEE Trans. Geosci. Remote Sens. 2011, 49, 295–309.

33. Tu, T.M.; Hsu, C.L.; Tu, P.Y.; Lee, C.H. An adjustable pan-sharpening approach for IKONOS/QuickBird/GeoEye-1/WorldView-2 imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 125–134.

34. Vivone, G.; Simoes, M.; Dalla Mura, M.; Restaino, R.; Bioucas-Dias, J.M.; Licciardi, G.A.; Chanussot, J. Pansharpening based on semiblind deconvolution. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1997–2010.

35. Otazu, X.; González-Audícana, M.; Fors, O.; Núñez, J. Introduction of Sensor Spectral Response into Image Fusion Methods. Application to Wavelet-Based Methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2376–2385.

36. Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

37. IKONOS Relative Spectral Response. Available online: http://www.geoeye.com/CorpSite/assets/docs/technical-papers/2008/IKONOS_Relative_Spectral_Response.xls (accessed on 4 April 2010).

38. Wang, Z.; Bovik, A.C. A Universal Image Quality Index. IEEE Signal Process. Lett. 2002, 9, 81–84.

39. Wald, L. Quality of high resolution synthesised images: Is there a simple criterion? In Proceedings of the Third Conference “Fusion of Earth Data: Merging Point Measurements, Raster Maps and Remotely Sensed Images”, Sophia Antipolis, France, 26–28 January 2000; Ranchin, T., Wald, L., Eds.; SEE/URISCA: Nice, France, 2000; pp. 99–103.

40. Kruse, F.A.; Lefkoff, A.B.; Boardman, J.W.; Heidebrecht, K.B.; Shapiro, A.T.; Barloon, P.J.; Goetz, A.F.H. The spectral image processing system (SIPS)-interactive visualization and analysis of imaging spectrometer data. Remote Sens. Environ. 1993, 44, 145–163.

| Image | | Nihonmatsu | Yokohama | Tasmania |
|---|---|---|---|---|
| Original image | PAN image | 1024 × 1024 | 1792 × 1792 | 12,112 × 13,136 |
| | MS image | 256 × 256 | 448 × 448 | 3028 × 3284 |
| Test image | PAN image | 256 × 256 | 448 × 448 | 3028 × 3284 |
| | MS image | 64 × 64 | 112 × 112 | 757 × 821 |

| Image | Estimation of Intensity Correction Coefficients | Image Fusion |
|---|---|---|
| PAN image | Down-sampled test PAN image | Test PAN image |
| MS images | Test MS images | Up-sampled test MS images |
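
The image sizes and roles listed in the two tables above correspond to a reduced-resolution setup with a 4:1 ratio between PAN and MS sizes at every level. The sketch below shows one way to derive such inputs. It assumes block averaging as the down-sampling filter, which the text does not specify, and the function name is hypothetical.

```python
import numpy as np


def block_mean_downsample(img, factor=4):
    """Shrink the last two axes by averaging factor x factor blocks.

    Block averaging is an assumption; the source does not state which
    down-sampling filter was used.
    """
    h, w = img.shape[-2:]
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by the factor")
    lead = img.shape[:-2]
    blocks = img.reshape(*lead, h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(-3, -1))


if __name__ == "__main__":
    # Placeholder arrays with the Nihonmatsu sizes from the table.
    pan = np.zeros((1024, 1024))
    ms = np.zeros((4, 256, 256))

    test_pan = block_mean_downsample(pan)      # 256 x 256
    test_ms = block_mean_downsample(ms)        # 4 bands, 64 x 64

    # Coefficient estimation pairs the down-sampled test PAN with the test MS.
    pan_for_estimation = block_mean_downsample(test_pan)  # 64 x 64
    print(test_pan.shape, test_ms.shape, pan_for_estimation.shape)
```

Under this setup the original MS image has the same size as the fused result and can serve as the reference when quality metrics are computed.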

| Correction Coefficient | Nihonmatsu | Yokohama | Tasmania |
|---|---|---|---|
| (NIR) | 0.3857 | 0.3789 | 0.5734 |
| (Blue) | 0.2199 | 0.2549 | 0.2310 |
| (Green) | 0.1980 | 0.1123 | 0.0000 |
| (Red) | 0.0486 | 0.1099 | 0.1039 |
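
All coefficients in the table above are nonnegative and one of them is exactly 0.0000, a pattern that a nonnegativity-constrained fit can produce. The following sketch shows one way such coefficients could be estimated. It rests on assumptions that are not confirmed by the text: that the low-resolution PAN image is modeled as a nonnegative weighted sum of the MS bands, and that the weights are fitted by least squares. The solver (projected gradient descent) and the function name are illustrative choices, not the authors' procedure.

```python
import numpy as np


def fit_nonnegative_weights(ms, pan_low, iters=5000):
    """Fit pan_low ~ sum_k w[k] * ms[k] subject to w >= 0.

    ms      : (bands, H, W) MS stack
    pan_low : (H, W) PAN image at the same resolution as ms
    Uses projected gradient descent on the least-squares objective.
    """
    A = ms.reshape(ms.shape[0], -1).T           # (pixels, bands)
    b = pan_low.ravel()
    gram = A.T @ A
    rhs = A.T @ b
    step = 1.0 / np.linalg.eigvalsh(gram)[-1]   # 1 / largest eigenvalue
    w = np.zeros(A.shape[1])
    for _ in range(iters):
        # Gradient step, then projection onto the nonnegative orthant.
        w = np.maximum(0.0, w - step * (gram @ w - rhs))
    return w


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ms = rng.random((4, 64, 64))                 # synthetic 4-band image
    true_w = np.array([0.4, 0.2, 0.0, 0.1])      # made-up weights for the demo
    pan_low = np.tensordot(true_w, ms, axes=1)   # synthetic PAN from the model
    print(np.round(fit_nonnegative_weights(ms, pan_low), 4))
```

A dedicated nonnegative least-squares solver would normally replace the hand-written loop; the loop is used here only to keep the example dependent on NumPy alone.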

| Method | CC | UIQI | ||||

|---|---|---|---|---|---|---|

| Nihonmatsu | Yokohama | Tasmania | Nihonmatsu | Yokohama | Tasmania | |

| Ideal value | 1.0 | 1.0 | ||||

| fast IHS | 0.783 | 0.914 | 0.934 | 0.717 | 0.901 | 0.686 |

| SAIHS | 0.830 | 0.936 | 0.743 | 0.905 | 0.638 | |

| ISAIHS | 0.887 | 0.939 | 0.949 | 0.708 | ||

| Tradeoff IHS | 0.843 | 0.954 | 0.804 | 0.909 | ||

| eFIHS-SRF | 0.818 | 0.914 | 0.949 | 0.733 | 0.825 | 0.821 |

| PRACS | 0.867 | 0.865 | 0.862 | 0.808 | 0.791 | 0.508 |

| BT-SFIM | 0.930 | 0.851 | 0.760 | |||

| Proposed method | 0.883 | 0.966 | 0.864 | 0.910 | 0.935 | |
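
The CC and UIQI scores in the table above both have an ideal value of 1.0. A minimal sketch of how the two metrics are commonly computed is given below, using the Pearson correlation coefficient for CC and the Wang and Bovik definition for UIQI. The function names are hypothetical, and the UIQI here uses a single global window per band, whereas the index is usually averaged over sliding windows; the exact settings used for the reported numbers are not stated in this text.

```python
import numpy as np


def correlation_coefficient(ref, fused):
    """Mean per-band Pearson correlation between (bands, H, W) stacks."""
    scores = []
    for r, f in zip(ref, fused):
        scores.append(np.corrcoef(r.ravel(), f.ravel())[0, 1])
    return float(np.mean(scores))


def uiqi_global(ref, fused):
    """Mean per-band Universal Image Quality Index over the whole image.

    Q = 4 * cov * mean_x * mean_y / ((var_x + var_y) * (mean_x^2 + mean_y^2))
    A single global window is an assumption made to keep the sketch short.
    """
    scores = []
    for r, f in zip(ref, fused):
        x = r.ravel().astype(float)
        y = f.ravel().astype(float)
        mx, my = x.mean(), y.mean()
        vx, vy = x.var(), y.var()
        cov = ((x - mx) * (y - my)).mean()
        scores.append(4 * cov * mx * my / ((vx + vy) * (mx**2 + my**2)))
    return float(np.mean(scores))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.random((3, 32, 32))
    noisy = ref + 0.05 * rng.standard_normal(ref.shape)
    print(correlation_coefficient(ref, noisy), uiqi_global(ref, noisy))
```

CC is insensitive to a gain or offset applied to the fused image, while UIQI also penalizes differences in mean and contrast, which is why the two columns can rank methods differently.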

| Method | ERGAS | SAM | ||||

|---|---|---|---|---|---|---|

| Nihonmatsu | Yokohama | Tasmania | Nihonmatsu | Yokohama | Tasmania | |

| Ideal value | 0.0 | 0.0 | ||||

| fast IHS | 3.471 | 11.440 | 1.898 | 2.367 | 2.912 | |

| SAIHS | 6.653 | 4.772 | 17.775 | 2.198 | 2.339 | 3.932 |

| ISAIHS | 4.258 | 4.470 | 15.612 | 1.861 | 2.297 | 3.643 |

| Tradeoff IHS | 3.175 | |||||

| eFIHS-SRF | 6.407 | 7.647 | 4.578 | 1.638 | ||

| PRACS | 2.870 | 4.758 | 8.932 | 1.993 | 3.192 | 5.246 |

| BT-SFIM | 4.193 | 4.138 | 9.222 | 2.004 | 2.408 | 2.712 |

| Proposed method | 2.673 | 2.697 | 3.625 | 1.753 | 2.176 | 1.984 |
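
ERGAS and SAM in the table above both have an ideal value of 0.0. The sketch below follows the commonly used definitions: ERGAS scales the per-band relative RMSE by the ratio of PAN to MS pixel size, and SAM averages the angle between reference and fused spectra over all pixels. The function names are hypothetical, the ratio of 1/4 is inferred from the image sizes listed earlier, and reporting SAM in degrees is an assumption.

```python
import numpy as np


def ergas(ref, fused, ratio=0.25):
    """ERGAS for (bands, H, W) stacks.

    ratio is the PAN pixel size divided by the MS pixel size
    (assumed 1/4 here, matching a 4:1 size ratio).
    """
    ref = ref.astype(float)
    fused = fused.astype(float)
    rmse = np.sqrt(((ref - fused) ** 2).mean(axis=(1, 2)))
    means = ref.mean(axis=(1, 2))
    return float(100.0 * ratio * np.sqrt(np.mean((rmse / means) ** 2)))


def sam_degrees(ref, fused, eps=1e-12):
    """Mean spectral angle, in degrees, between per-pixel spectra."""
    ref = ref.astype(float)
    fused = fused.astype(float)
    dot = (ref * fused).sum(axis=0)
    norms = np.linalg.norm(ref, axis=0) * np.linalg.norm(fused, axis=0)
    # eps guards against division by zero for all-zero spectra.
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.degrees(np.arccos(cos)).mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.random((4, 32, 32)) + 0.5
    noisy = ref + 0.02 * rng.standard_normal(ref.shape)
    print(ergas(ref, noisy), sam_degrees(ref, noisy))
```

SAM ignores a uniform brightness scaling of the spectra, so it isolates spectral distortion, whereas ERGAS reflects the overall radiometric error across bands.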

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tsukamoto, N.; Sugaya, Y.; Omachi, S. Spectrum Correction Using Modeled Panchromatic Image for Pansharpening. J. Imaging 2020, 6, 20. https://0-doi-org.brum.beds.ac.uk/10.3390/jimaging6040020
