Hand Gesture Recognition Based on Computer Vision: A Review of Techniques

Abstract

1. Introduction

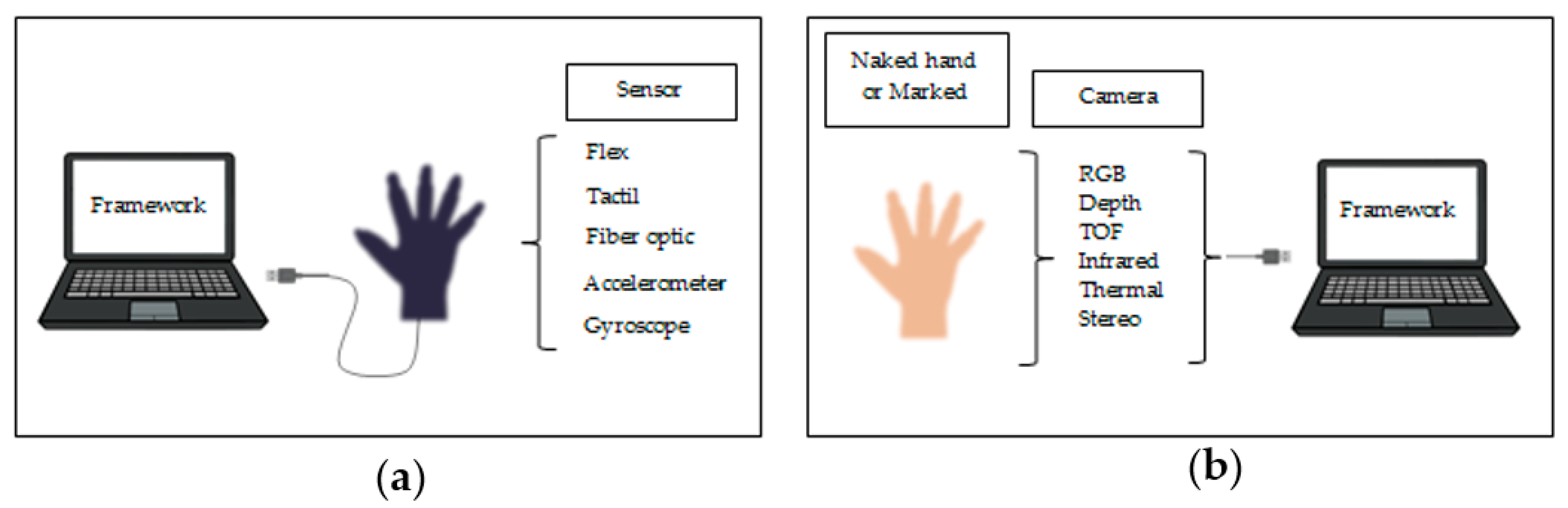

2. Hand Gesture Methods

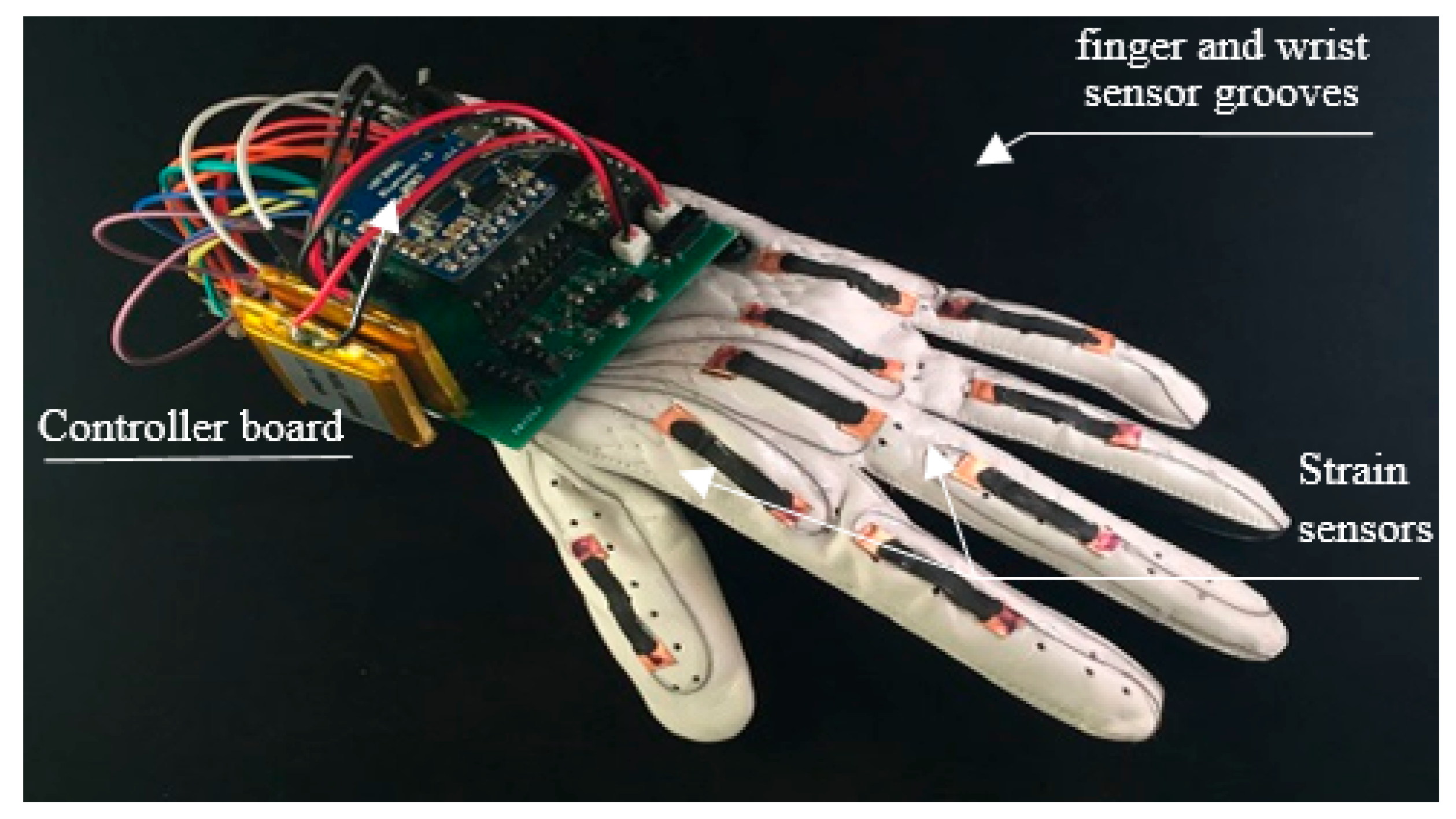

2.1. Hand Gestures Based on Instrumented Glove Approach

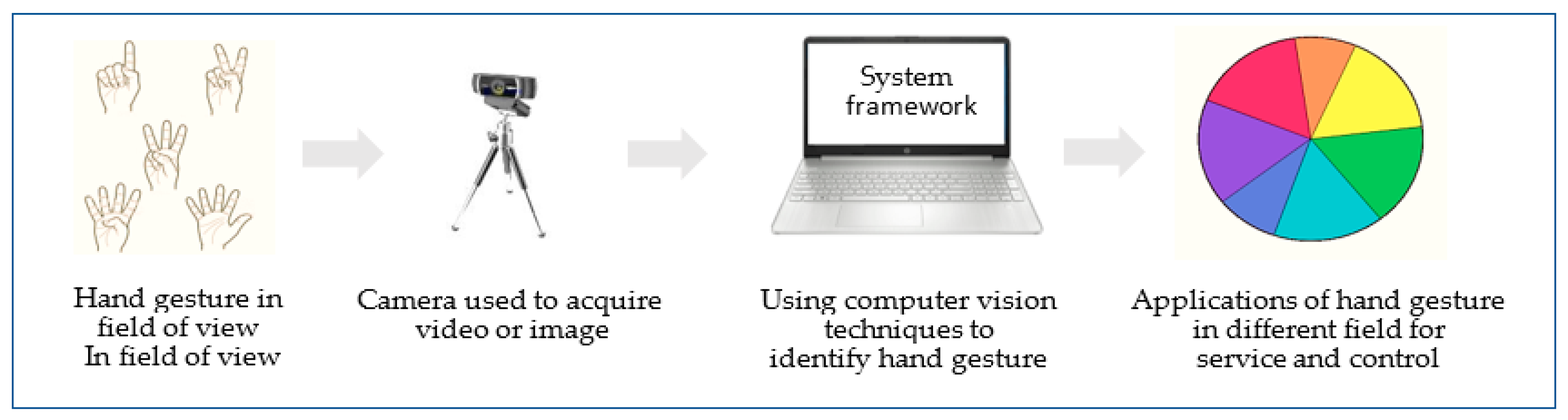

2.2. Hand Gestures Based on Computer Vision Approach

2.2.1. Color-Based Recognition

Color-Based Recognition Using Glove Marker

Color-Based Recognition of Skin Color

- red, green, blue (R–G–B and RGB-normalized);

- hue and saturation (H–S–V, H–S–I and H–S–L);

- luminance (YIQ, Y–Cb–Cr and YUV).
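
As a minimal sketch of skin-color segmentation in one of the spaces listed above, the following Python fragment converts 8-bit RGB pixels to full-range Y–Cb–Cr (using the ITU-R BT.601 coefficients, as in JFIF) and keeps the pixels whose chrominance falls inside a fixed box. The function names (`rgb_to_ycbcr`, `is_skin`, `skin_mask`) and the Cb/Cr bounds are illustrative assumptions, not values taken from the studies reviewed here; practical systems tune such bounds to their camera and lighting.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to full-range Y-Cb-Cr (BT.601 / JFIF coefficients)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


# Hypothetical chrominance bounds, chosen for illustration only.
CB_RANGE = (77.0, 127.0)
CR_RANGE = (133.0, 173.0)


def is_skin(r, g, b, cb_range=CB_RANGE, cr_range=CR_RANGE):
    """Return True if the pixel's Cb and Cr both fall inside the given bounds.

    The luminance Y is deliberately ignored, so only chrominance decides.
    """
    _, cb, cr = rgb_to_ycbcr(r, g, b)
    return cb_range[0] <= cb <= cb_range[1] and cr_range[0] <= cr <= cr_range[1]


def skin_mask(image):
    """Build a boolean mask for an image given as rows of (r, g, b) tuples."""
    return [[is_skin(*pixel) for pixel in row] for row in image]


if __name__ == "__main__":
    # Tiny 1 x 3 example image: a tan pixel, pure green, and white.
    example = [[(224, 172, 105), (0, 255, 0), (255, 255, 255)]]
    print(skin_mask(example))
```

Thresholding only Cb and Cr while ignoring Y is the usual motivation for choosing a luminance-chrominance space over direct RGB thresholds: the rule tends to be less sensitive to brightness changes, although it can still misclassify skin-colored background objects.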

2.2.2. Appearance-Based Recognition

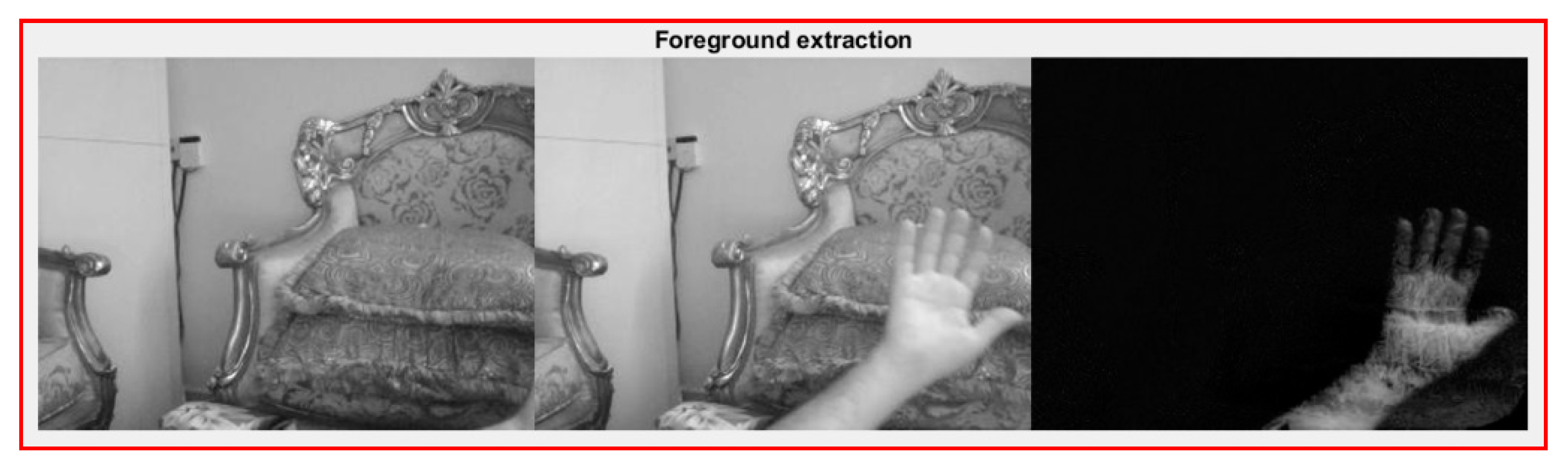

2.2.3. Motion-Based Recognition

2.2.4. Skeleton-Based Recognition

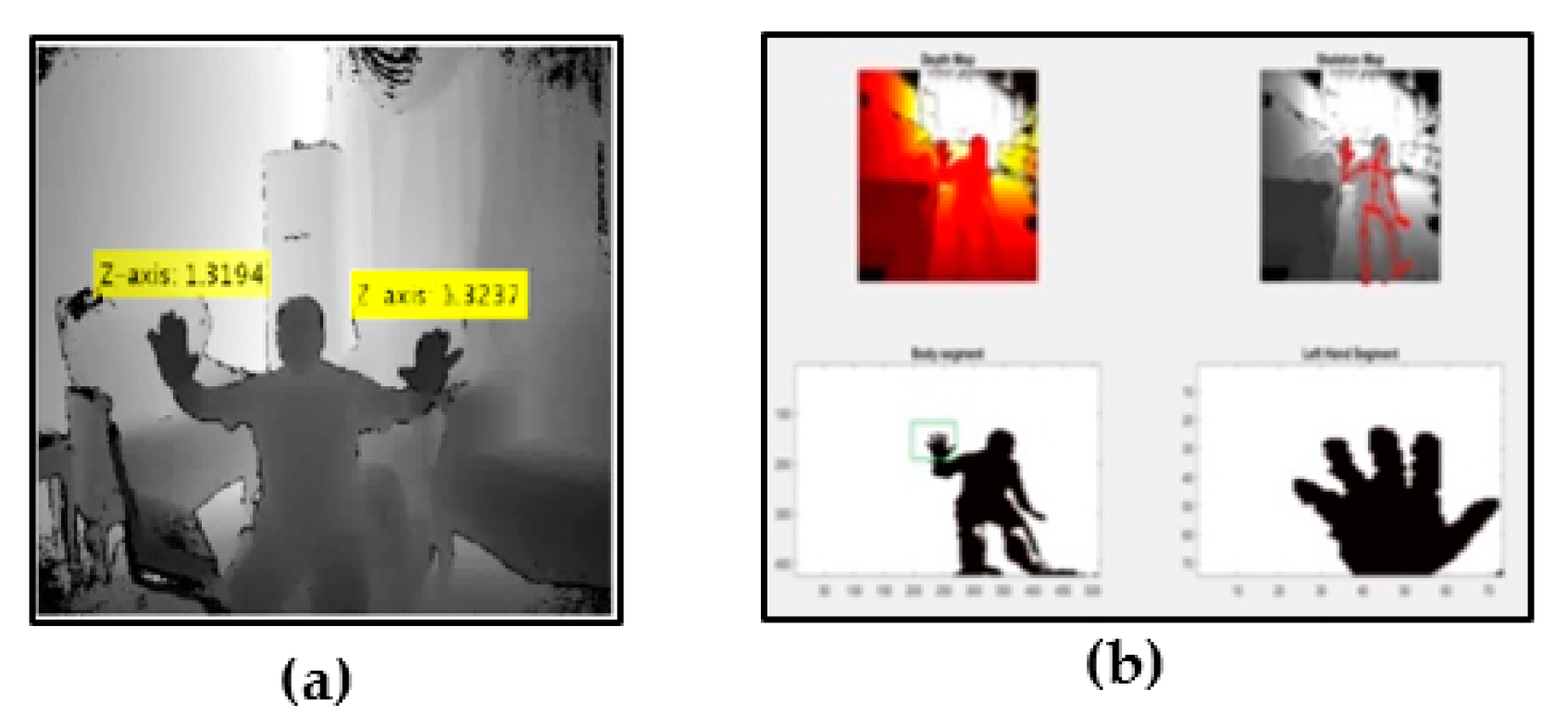

2.2.5. Depth-Based Recognition

2.2.6. 3D Model-Based Recognition

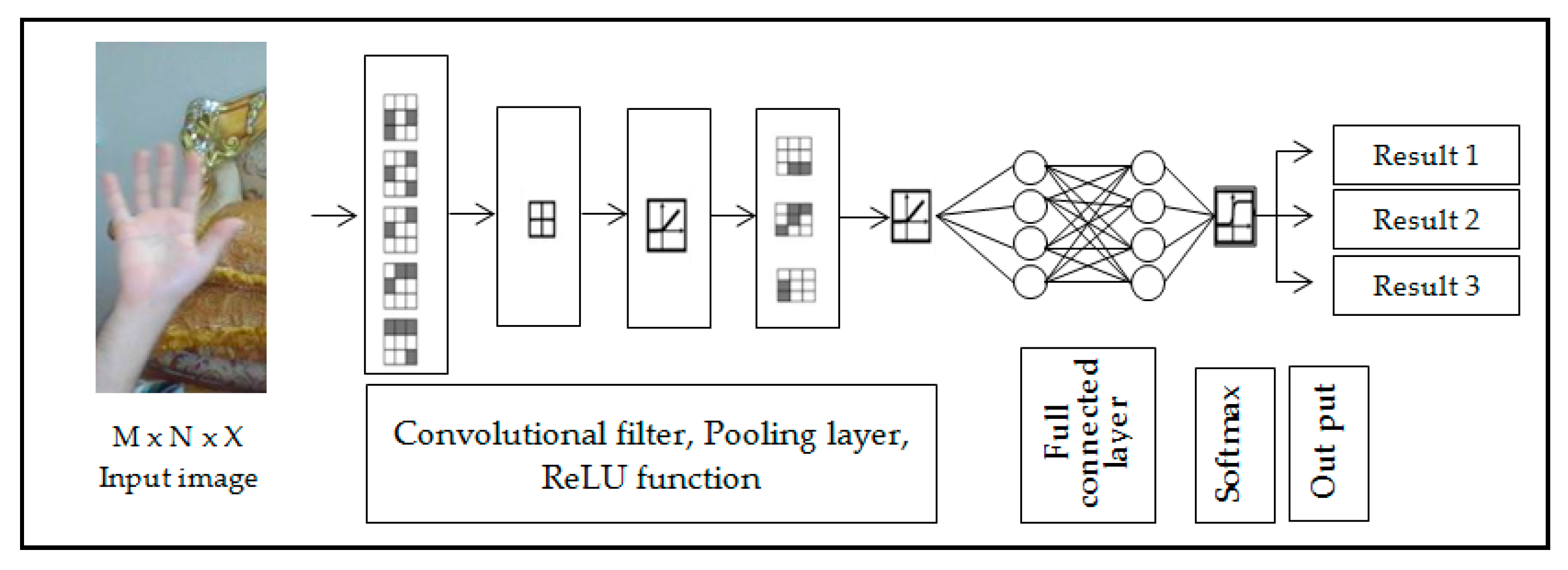

2.2.7. Deep-Learning Based Recognition

3. Application Areas of Hand Gesture Recognition Systems

3.1. Clinical and Health

3.2. Sign Language Recognition

3.3. Robot Control

3.4. Virtual Environment

3.5. Home Automation

3.6. Personal Computer and Tablet

3.7. Gestures for Gaming

4. Research Gaps and Challenges

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhigang, F. Computer gesture input and its application in human computer interaction. Mini Micro Syst. 1999, 6, 418–421.

- Mitra, S.; Acharya, T. Gesture recognition: A survey. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 311–324.

- Ahuja, M.K.; Singh, A. Static vision based Hand Gesture recognition using principal component analysis. In Proceedings of the 2015 IEEE 3rd International Conference on MOOCs, Innovation and Technology in Education (MITE), Amritsar, India, 1–2 October 2015; pp. 402–406.

- Kramer, R.K.; Majidi, C.; Sahai, R.; Wood, R.J. Soft curvature sensors for joint angle proprioception. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 1919–1926.

- Jesperson, E.; Neuman, M.R. A thin film strain gauge angular displacement sensor for measuring finger joint angles. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, New Orleans, LA, USA, 4–7 November 1988; pp. 807–vol.

- Fujiwara, E.; dos Santos, M.F.M.; Suzuki, C.K. Flexible optical fiber bending transducer for application in glove-based sensors. IEEE Sens. J. 2014, 14, 3631–3636.

- Shrote, S.B.; Deshpande, M.; Deshmukh, P.; Mathapati, S. Assistive Translator for Deaf & Dumb People. Int. J. Electron. Commun. Comput. Eng. 2014, 5, 86–89.

- Gupta, H.P.; Chudgar, H.S.; Mukherjee, S.; Dutta, T.; Sharma, K. A continuous hand gestures recognition technique for human-machine interaction using accelerometer and gyroscope sensors. IEEE Sens. J. 2016, 16, 6425–6432.

- Lamberti, L.; Camastra, F. Real-time hand gesture recognition using a color glove. In Proceedings of the International Conference on Image Analysis and Processing, Ravenna, Italy, 14–16 September 2011; pp. 365–373.

- Wachs, J.P.; Kölsch, M.; Stern, H.; Edan, Y. Vision-based hand-gesture applications. Commun. ACM 2011, 54, 60–71.

- Pansare, J.R.; Gawande, S.H.; Ingle, M. Real-time static hand gesture recognition for American Sign Language (ASL) in complex background. JSIP 2012, 3, 22132.

- Van den Bergh, M.; Carton, D.; De Nijs, R.; Mitsou, N.; Landsiedel, C.; Kuehnlenz, K.; Wollherr, D.; Van Gool, L.; Buss, M. Real-time 3D hand gesture interaction with a robot for understanding directions from humans. In Proceedings of the 2011 Ro-Man, Atlanta, GA, USA, 31 July–3 August 2011; pp. 357–362.

- Wang, R.Y.; Popović, J. Real-time hand-tracking with a color glove. ACM Trans. Graph. 2009, 28, 1–8.

- Desai, S.; Desai, A. Human Computer Interaction through hand gestures for home automation using Microsoft Kinect. In Proceedings of the International Conference on Communication and Networks, Xi’an, China, 10–12 October 2017; pp. 19–29.

- Rajesh, R.J.; Nagarjunan, D.; Arunachalam, R.M.; Aarthi, R. Distance Transform Based Hand Gestures Recognition for PowerPoint Presentation Navigation. Adv. Comput. 2012, 3, 41.

- Kaur, H.; Rani, J. A review: Study of various techniques of Hand gesture recognition. In Proceedings of the 2016 IEEE 1st International Conference on Power Electronics, Intelligent Control and Energy Systems (ICPEICES), Delhi, India, 4–6 July 2016; pp. 1–5.

- Murthy, G.R.S.; Jadon, R.S. A review of vision based hand gestures recognition. Int. J. Inf. Technol. Knowl. Manag. 2009, 2, 405–410.

- Khan, R.Z.; Ibraheem, N.A. Hand gesture recognition: A literature review. Int. J. Artif. Intell. Appl. 2012, 3, 161.

- Suriya, R.; Vijayachamundeeswari, V. A survey on hand gesture recognition for simple mouse control. In Proceedings of the International Conference on Information Communication and Embedded Systems (ICICES2014), Chennai, India, 27–28 February 2014; pp. 1–5.

- Sonkusare, J.S.; Chopade, N.B.; Sor, R.; Tade, S.L. A review on hand gesture recognition system. In Proceedings of the 2015 International Conference on Computing Communication Control and Automation, Pune, India, 26–27 February 2015; pp. 790–794.

- Garg, P.; Aggarwal, N.; Sofat, S. Vision based hand gesture recognition. World Acad. Sci. Eng. Technol. 2009, 49, 972–977.

- Dipietro, L.; Sabatini, A.M.; Dario, P. A survey of glove-based systems and their applications. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2008, 38, 461–482.

- LaViola, J. A survey of hand posture and gesture recognition techniques and technology. Brown Univ. Provid. RI 1999, 29. Technical Report no. CS-99-11.

- Ibraheem, N.A.; Khan, R.Z. Survey on various gesture recognition technologies and techniques. Int. J. Comput. Appl. 2012, 50, 38–44.

- Hasan, M.M.; Mishra, P.K. Hand gesture modeling and recognition using geometric features: A review. Can. J. Image Process. Comput. Vis. 2012, 3, 12–26.

- Shaik, K.B.; Ganesan, P.; Kalist, V.; Sathish, B.S.; Jenitha, J.M.M. Comparative study of skin color detection and segmentation in HSV and YCbCr color space. Procedia Comput. Sci. 2015, 57, 41–48.

- Ganesan, P.; Rajini, V. YIQ color space based satellite image segmentation using modified FCM clustering and histogram equalization. In Proceedings of the 2014 International Conference on Advances in Electrical Engineering (ICAEE), Vellore, India, 9–11 January 2014; pp. 1–5.

- Brand, J.; Mason, J.S. A comparative assessment of three approaches to pixel-level human skin-detection. In Proceedings of the 15th International Conference on Pattern Recognition. ICPR-2000, Barcelona, Spain, 3–7 September 2000; Volume 1, pp. 1056–1059.

- Jones, M.J.; Rehg, J.M. Statistical color models with application to skin detection. Int. J. Comput. Vis. 2002, 46, 81–96.

- Brown, D.A.; Craw, I.; Lewthwaite, J. A som based approach to skin detection with application in real time systems. BMVC 2001, 1, 491–500.

- Zarit, B.D.; Super, B.J.; Quek, F.K.H. Comparison of five color models in skin pixel classification. In Proceedings of the International Workshop on Recognition, Analysis, and Tracking of Faces and Gestures in Real-Time Systems, In Conjunction with ICCV’99 (Cat. No. PR00378). Corfu, Greece, 26–27 September 1999; pp. 58–63.

- Albiol, A.; Torres, L.; Delp, E.J. Optimum color spaces for skin detection. In Proceedings of the 2001 International Conference on Image Processing (Cat. No. 01CH37205), Thessaloniki, Greece, 7–10 October 2001; Volume 1, pp. 122–124.

- Sigal, L.; Sclaroff, S.; Athitsos, V. Estimation and prediction of evolving color distributions for skin segmentation under varying illumination. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2000 (Cat. No. PR00662). Hilton Head Island, SC, USA, 15 June 2000; Volume 2, pp. 152–159.

- Chai, D.; Bouzerdoum, A. A Bayesian approach to skin color classification in YCbCr color space. In Proceedings of the 2000 TENCON Proceedings. Intelligent Systems and Technologies for the New Millennium (Cat. No. 00CH37119), Kuala Lumpur, Malaysia, 24–27 September 2000; Volume 2, pp. 421–424.

- Menser, B.; Wien, M. Segmentation and tracking of facial regions in color image sequences. In Proceedings of the Visual Communications and Image Processing 2000, Perth, Australia, 20–23 June 2000; Volume 4067, pp. 731–740.

- Kakumanu, P.; Makrogiannis, S.; Bourbakis, N. A survey of skin-color modeling and detection methods. Pattern Recognit. 2007, 40, 1106–1122.

- Perimal, M.; Basah, S.N.; Safar, M.J.A.; Yazid, H. Hand-Gesture Recognition-Algorithm based on Finger Counting. J. Telecommun. Electron. Comput. Eng. 2018, 10, 19–24.

- Sulyman, A.B.D.A.; Sharef, Z.T.; Faraj, K.H.A.; Aljawaryy, Z.A.; Malallah, F.L. Real-time numerical 0-5 counting based on hand-finger gestures recognition. J. Theor. Appl. Inf. Technol. 2017, 95, 3105–3115.

- Choudhury, A.; Talukdar, A.K.; Sarma, K.K. A novel hand segmentation method for multiple-hand gesture recognition system under complex background. In Proceedings of the 2014 International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 20–21 February 2014; pp. 136–140.

- Stergiopoulou, E.; Sgouropoulos, K.; Nikolaou, N.; Papamarkos, N.; Mitianoudis, N. Real time hand detection in a complex background. Eng. Appl. Artif. Intell. 2014, 35, 54–70.

- Khandade, S.L.; Khot, S.T. MATLAB based gesture recognition. In Proceedings of the 2016 International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 26–27 August 2016; Volume 1, pp. 1–4.

- Karabasi, M.; Bhatti, Z.; Shah, A. A model for real-time recognition and textual representation of malaysian sign language through image processing. In Proceedings of the 2013 International Conference on Advanced Computer Science Applications and Technologies, Kuching, Malaysia, 23–24 December 2013; pp. 195–200.

- Zeng, J.; Sun, Y.; Wang, F. A natural hand gesture system for intelligent human-computer interaction and medical assistance. In Proceedings of the 2012 Third Global Congress on Intelligent Systems, Wuhan, China, 6–8 November 2012; pp. 382–385.

- Hsieh, C.-C.; Liou, D.-H.; Lee, D. A real time hand gesture recognition system using motion history image. In Proceedings of the 2010 2nd International Conference on Signal Processing Systems, Dalian, China, 5–7 July 2010; Volume 2, pp. V2–394.

- Van den Bergh, M.; Koller-Meier, E.; Bosché, F.; Van Gool, L. Haarlet-based hand gesture recognition for 3D interaction. In Proceedings of the 2009 Workshop on Applications of Computer Vision (WACV), Snowbird, UT, USA, 7–8 December 2009; pp. 1–8.

- Van den Bergh, M.; Van Gool, L. Combining RGB and ToF cameras for real-time 3D hand gesture interaction. In Proceedings of the 2011 IEEE Workshop on Applications of Computer Vision (WACV), Kona, HI, USA, 5–7 January 2011; pp. 66–72.

- Chen, L.; Wang, F.; Deng, H.; Ji, K. A survey on hand gesture recognition. In Proceedings of the 2013 International Conference on Computer Sciences and Applications, Wuhan, China, 14–15 December 2013; pp. 313–316.

- Shimada, A.; Yamashita, T.; Taniguchi, R. Hand gesture based TV control system—Towards both user-& machine-friendly gesture applications. In Proceedings of the 19th Korea-Japan Joint Workshop on Frontiers of Computer Vision, Incheon, Korea, 30 January–1 February 2013; pp. 121–126.

- Chen, Q.; Georganas, N.D.; Petriu, E.M. Real-time vision-based hand gesture recognition using haar-like features. In Proceedings of the 2007 IEEE Instrumentation & Measurement Technology Conference IMTC 2007, Warsaw, Poland, 1–3 May 2007; pp. 1–6.

- Kulkarni, V.S.; Lokhande, S.D. Appearance based recognition of american sign language using gesture segmentation. Int. J. Comput. Sci. Eng. 2010, 2, 560–565.

- Fang, Y.; Wang, K.; Cheng, J.; Lu, H. A real-time hand gesture recognition method. In Proceedings of the 2007 IEEE International Conference on Multimedia and Expo, Beijing, China, 2–5 July 2007; pp. 995–998.

- Licsár, A.; Szirányi, T. User-adaptive hand gesture recognition system with interactive training. Image Vis. Comput. 2005, 23, 1102–1114.

- Zhou, Y.; Jiang, G.; Lin, Y. A novel finger and hand pose estimation technique for real-time hand gesture recognition. Pattern Recognit. 2016, 49, 102–114.

- Pun, C.-M.; Zhu, H.-M.; Feng, W. Real-time hand gesture recognition using motion tracking. Int. J. Comput. Intell. Syst. 2011, 4, 277–286.

- Bayazit, M.; Couture-Beil, A.; Mori, G. Real-time Motion-based Gesture Recognition Using the GPU. In Proceedings of the MVA, Yokohama, Japan, 20–22 May 2009; pp. 9–12.

- Molina, J.; Pajuelo, J.A.; Martínez, J.M. Real-time motion-based hand gestures recognition from time-of-flight video. J. Signal Process. Syst. 2017, 86, 17–25.

- Prakash, J.; Gautam, U.K. Hand Gesture Recognition. Int. J. Recent Technol. Eng. 2019, 7, 54–59.

- Xi, C.; Chen, J.; Zhao, C.; Pei, Q.; Liu, L. Real-time Hand Tracking Using Kinect. In Proceedings of the 2nd International Conference on Digital Signal Processing, Tokyo, Japan, 25–27 February 2018; pp. 37–42.

- Devineau, G.; Moutarde, F.; Xi, W.; Yang, J. Deep learning for hand gesture recognition on skeletal data. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 106–113.

- Jiang, F.; Wu, S.; Yang, G.; Zhao, D.; Kung, S.-Y. Viewpoint-independent hand gesture recognition with Kinect. Signal Image Video Process. 2014, 8, 163–172.

- Konstantinidis, D.; Dimitropoulos, K.; Daras, P. Sign language recognition based on hand and body skeletal data. In Proceedings of the 2018-3DTV-Conference: The True Vision-Capture, Transmission and Display of 3D Video (3DTV-CON), Helsinki, Finland, 3–5 June 2018; pp. 1–4.

- De Smedt, Q.; Wannous, H.; Vandeborre, J.-P.; Guerry, J.; Saux, B.L.; Filliat, D. 3D hand gesture recognition using a depth and skeletal dataset: SHREC’17 track. In Proceedings of the Workshop on 3D Object Retrieval, Lyon, France, 23–24 April 2017; pp. 33–38.

- Chen, Y.; Luo, B.; Chen, Y.-L.; Liang, G.; Wu, X. A real-time dynamic hand gesture recognition system using kinect sensor. In Proceedings of the 2015 IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; pp. 2026–2030.

- Karbasi, M.; Muhammad, Z.; Waqas, A.; Bhatti, Z.; Shah, A.; Koondhar, M.Y.; Brohi, I.A. A Hybrid Method Using Kinect Depth and Color Data Stream for Hand Blobs Segmentation; Science International: Lahore, Pakistan, 2017; Volume 29, pp. 515–519.

- Ren, Z.; Meng, J.; Yuan, J. Depth camera based hand gesture recognition and its applications in human-computer-interaction. In Proceedings of the 2011 8th International Conference on Information, Communications & Signal Processing, Singapore, 13–16 December 2011; pp. 1–5.

- Dinh, D.-L.; Kim, J.T.; Kim, T.-S. Hand gesture recognition and interface via a depth imaging sensor for smart home appliances. Energy Procedia 2014, 62, 576–582.

- Raheja, J.L.; Minhas, M.; Prashanth, D.; Shah, T.; Chaudhary, A. Robust gesture recognition using Kinect: A comparison between DTW and HMM. Optik 2015, 126, 1098–1104.

- Ma, X.; Peng, J. Kinect sensor-based long-distance hand gesture recognition and fingertip detection with depth information. J. Sens. 2018, 2018, 5809769.

- Kim, M.-S.; Lee, C.H. Hand Gesture Recognition for Kinect v2 Sensor in the Near Distance Where Depth Data Are Not Provided. Int. J. Softw. Eng. Its Appl. 2016, 10, 407–418.

- Li, Y. Hand gesture recognition using Kinect. In Proceedings of the 2012 IEEE International Conference on Computer Science and Automation Engineering, Beijing, China, 22–24 June 2012; pp. 196–199.

- Song, L.; Hu, R.M.; Zhang, H.; Xiao, Y.L.; Gong, L.Y. Real-time 3d hand gesture detection from depth images. Adv. Mater. Res. 2013, 756, 4138–4142.

- Pal, D.H.; Kakade, S.M. Dynamic hand gesture recognition using kinect sensor. In Proceedings of the 2016 International Conference on Global Trends in Signal Processing, Information Computing and Communication (ICGTSPICC), Jalgaon, India, 22–24 December 2016; pp. 448–453.

- Karbasi, M.; Bhatti, Z.; Nooralishahi, P.; Shah, A.; Mazloomnezhad, S.M.R. Real-time hands detection in depth image by using distance with Kinect camera. Int. J. Internet Things 2015, 4, 1–6.

- Bakar, M.Z.A.; Samad, R.; Pebrianti, D.; Aan, N.L.Y. Real-time rotation invariant hand tracking using 3D data. In Proceedings of the 2014 IEEE International Conference on Control System, Computing and Engineering (ICCSCE 2014), Batu Ferringhi, Malaysia, 28–30 November 2014; pp. 490–495.

- Desai, S. Segmentation and Recognition of Fingers Using Microsoft Kinect. In Proceedings of the International Conference on Communication and Networks, Paris, France, 21–25 May 2017; pp. 45–53.

- Lee, U.; Tanaka, J. Finger identification and hand gesture recognition techniques for natural user interface. In Proceedings of the 11th Asia Pacific Conference on Computer Human Interaction, Bangalore, India, 24–27 September 2013; pp. 274–279.

- Bakar, M.Z.A.; Samad, R.; Pebrianti, D.; Mustafa, M.; Abdullah, N.R.H. Finger application using K-Curvature method and Kinect sensor in real-time. In Proceedings of the 2015 International Symposium on Technology Management and Emerging Technologies (ISTMET), Langkawi Island, Malaysia, 25–27 August 2015; pp. 218–222.

- Tang, M. Recognizing Hand Gestures with Microsoft’s Kinect; Department of Electrical Engineering of Stanford University: Palo Alto, CA, USA, 2011.

- Bamwenda, J.; Özerdem, M.S. Recognition of Static Hand Gesture with Using ANN and SVM. Dicle Univ. J. Eng. 2019, 10, 561–568.

- Tekin, B.; Bogo, F.; Pollefeys, M. H+O: Unified egocentric recognition of 3D hand-object poses and interactions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4511–4520.

- Wan, C.; Probst, T.; Van Gool, L.; Yao, A. Self-supervised 3d hand pose estimation through training by fitting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10853–10862.

- Ge, L.; Ren, Z.; Li, Y.; Xue, Z.; Wang, Y.; Cai, J.; Yuan, J. 3d hand shape and pose estimation from a single rgb image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10833–10842.

- Taylor, J.; Bordeaux, L.; Cashman, T.; Corish, B.; Keskin, C.; Sharp, T.; Soto, E.; Sweeney, D.; Valentin, J.; Luff, B. Efficient and precise interactive hand tracking through joint, continuous optimization of pose and correspondences. ACM Trans. Graph. 2016, 35, 1–12.

- Malik, J.; Elhayek, A.; Stricker, D. Structure-aware 3D hand pose regression from a single depth image. In Proceedings of the International Conference on Virtual Reality and Augmented Reality, London, UK, 22–23 October 2018; pp. 3–17.

- Tsoli, A.; Argyros, A.A. Joint 3D tracking of a deformable object in interaction with a hand. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 484–500.

- Chen, Y.; Tu, Z.; Ge, L.; Zhang, D.; Chen, R.; Yuan, J. So-handnet: Self-organizing network for 3d hand pose estimation with semi-supervised learning. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 6961–6970.

- Ge, L.; Ren, Z.; Yuan, J. Point-to-point regression pointnet for 3d hand pose estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 475–491.

- Wu, X.; Finnegan, D.; O’Neill, E.; Yang, Y.-L. Handmap: Robust hand pose estimation via intermediate dense guidance map supervision. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 237–253.

- Cai, Y.; Ge, L.; Cai, J.; Yuan, J. Weakly-supervised 3d hand pose estimation from monocular rgb images. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 666–682.

- Alnaim, N.; Abbod, M.; Albar, A. Hand Gesture Recognition Using Convolutional Neural Network for People Who Have Experienced A Stroke. In Proceedings of the 2019 3rd International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkey, 11–13 October 2019; pp. 1–6.

- Chung, H.; Chung, Y.; Tsai, W. An efficient hand gesture recognition system based on deep CNN. In Proceedings of the 2019 IEEE International Conference on Industrial Technology (ICIT), Melbourne, Australia, 13–15 February 2019; pp. 853–858.

- Bao, P.; Maqueda, A.I.; del-Blanco, C.R.; García, N. Tiny hand gesture recognition without localization via a deep convolutional network. IEEE Trans. Consum. Electron. 2017, 63, 251–257.

- Li, G.; Tang, H.; Sun, Y.; Kong, J.; Jiang, G.; Jiang, D.; Tao, B.; Xu, S.; Liu, H. Hand gesture recognition based on convolution neural network. Cluster Comput. 2019, 22, 2719–2729.

- Lin, H.-I.; Hsu, M.-H.; Chen, W.-K. Human hand gesture recognition using a convolution neural network. In Proceedings of the 2014 IEEE International Conference on Automation Science and Engineering (CASE), Taipei, Taiwan, 18–22 August 2014; pp. 1038–1043.

- John, V.; Boyali, A.; Mita, S.; Imanishi, M.; Sanma, N. Deep learning-based fast hand gesture recognition using representative frames. In Proceedings of the 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 30 November–2 December 2016; pp. 1–8.

- Wu, X.Y. A hand gesture recognition algorithm based on DC-CNN. Multimed. Tools Appl. 2019, 1–13.

- Nguyen, X.S.; Brun, L.; Lézoray, O.; Bougleux, S. A neural network based on SPD manifold learning for skeleton-based hand gesture recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12036–12045.

- Lee, D.-H.; Hong, K.-S. Game interface using hand gesture recognition. In Proceedings of the 5th International Conference on Computer Sciences and Convergence Information Technology, Seoul, Korea, 30 November–2 December 2010; pp. 1092–1097.

- Gallo, L.; Placitelli, A.P.; Ciampi, M. Controller-free exploration of medical image data: Experiencing the Kinect. In Proceedings of the 2011 24th International Symposium on Computer-Based Medical Systems (CBMS), Bristol, UK, 27–30 June 2011; pp. 1–6.

- Zhao, X.; Naguib, A.M.; Lee, S. Kinect based calling gesture recognition for taking order service of elderly care robot. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25–29 August 2014; pp. 525–530.

| Author | Type of Camera | Resolution | Techniques/Methods for Segmentation | Feature Extraction Type | Classification Algorithm | Recognition Rate | No. of Gestures | Application Area | Invariant Factor | Distance from Camera |
|---|---|---|---|---|---|---|---|---|---|---|
| [37] | off-the-shelf HD webcam | 16 Mp | Y–Cb–Cr | finger count | maximum distance of centroid two fingers | 70% to 100% | 14 gestures | HCI | light intensity, size, noise | 150 to 200 mm |
| [38] | computer camera | 320 × 250 pixels | Y–Cb–Cr | finger count | expert system | 98% | 6 gestures | deaf-mute people | heavy light during capturing | – |
| [11] | Fron-Tech E-cam (web camera) | 10 Mp | RGB threshold & Sobel edge detection method | A–Z alphabet hand gesture | feature matching (Euclidean distance) | 90.19% | 26 static gestures | American Sign Language (ASL) | – | 1000 mm |
| [15] | webcam | 640 × 480 pixels | HSI & distance transform | finger count | distance transform method & circular profiling | 100% > according limitation | 6 gestures | control the slide during a presentation | location of hand | – |
| [39] | webcam | – | HSI & frame difference & Haar classifier | dynamic hand gestures | contour matching difference with the previous | – | hand segment | HCI | sensitive to moving background | – |
| [40] | webcam | 640 × 480 pixels | HSV & motion detection (hybrid technique) | hand gestures | (SPM) classification technique | 98.75% | hand segment | HCI | – | – |
| [41] | video camera | 640 × 480 pixels | HSV & cross-correlation | hand gestures | Euclidean distance | 82.67% | 15 gestures | man–machine interface (MMI) | – | – |
| [42] | digital or cellphone camera | 768 × 576 pixels | HSV | hand gestures | division by shape | – | hand segment | Malaysian sign language | some objects have the same skin color & hard edges | – |
| [43] | web camera | 320 × 240 pixels | red channel threshold segmentation method | hand postures | combine information from multiple cues of the motion, color and shape | 100% | 5 hand postures | HCI wheelchair control | – | – |
| [44] | Logitech portable webcam C905 | 320 × 240 pixels | normalized R, G, original red | hand gestures | Haar-like directional patterns & motion history image | 93.13% static, 95.07% dynamic | 2 static, 4 dynamic gestures | man–machine interface (MMI) | – | (< 1) mm (1000–1500) mm (1500–2000) mm |
| [45] | high resolution cameras | 640 × 480 pixels | HSI & Gaussian mixture model (GMM) & second histogram | hand postures | Haarlet-based hand gesture | 98.24% correct classification rate | 10 postures | manipulating 3D objects & navigating through a 3D model | changes in illumination | – |
| [46] | ToF camera & AVT Marlin color camera | 176 × 144 & 640 × 480 pixels | histogram-based skin color probability & depth threshold | hand gestures | 2D Haarlets | 99.54% | hand segment | real-time hand gesture interaction system | – | 1000 mm |

| Author | Type of Camera | Resolution | Techniques/Methods for Segmentation | Feature Extraction Type | Classification Algorithm | Recognition Rate | No. of Gestures | Application Area | Dataset Type | Invariant Factor | Distance from Camera |
|---|---|---|---|---|---|---|---|---|---|---|---|
| [49] | Logitech Quick Cam web camera | 320 × 240 pixels | Haar-like features & AdaBoost learning algorithm | hand posture | parallel cascade structure | above 90% | 4 hand postures | real-time vision-based hand gesture classification | positive and negative hand samples collected by author | – | – |
| [50] | webcam-1.3 | 80 × 64 (image resized for training) | Otsu & Canny edge detection technique for grayscale image | hand sign | feed-forward back propagation neural network | 92.33% | 26 static signs | American Sign Language | dataset created by author | low differentiation | different distances |
| [51] | video camera | 320 × 240 pixels | Gaussian model describes hand color in HSV & AdaBoost algorithm | hand gesture | palm–finger configuration | 93% | 6 hand gestures | real-time hand gesture recognition method | – | – | – |
| [52] | camera–projector system | 384 × 288 pixels | background subtraction method | hand gesture | Fourier-based classification | 87.7% | 9 hand gestures | user-independent application | ground truth data set collected manually | point coordinates geometrically distorted & skin color | – |
| [53] | monocular web camera | 320 × 240 pixels | combine Y–Cb–Cr & edge extraction & parallel finger edge appearance | hand posture based on finger gesture | finger model | – | 14 static gestures | substantial applications | test data collected from videos captured by web camera | variation in lightness would result in edge extraction failure | ≤ 500 mm |

| Author | Type of Camera | Resolution | Techniques/Methods for Segmentation | Feature Extraction Type | Classification Algorithm | Recognition Rate | No. of Gestures | Application Area | Dataset Type | Invariant Factor | Distance from Camera |
|---|---|---|---|---|---|---|---|---|---|---|---|
| [54] | off-the-shelf cameras | – | RGB, HSV, Y–Cb–Cr & motion tracking | hand gesture | histogram distribution model | 97.33% | 10 gestures | human–computer interface | data set created by author | other object moving and background issue | – |
| [55] | Canon GL2 camera | 720 × 480 pixels | face detection & optical flow | motion gesture | leave-one-out cross-validation | – | 7 gestures | gesture recognition system | data set created by author | – | – |
| [56] | time of flight (TOF) SR4000 | 176 × 144 pixels | depth information, motion patterns | motion gesture | motion patterns compared | 95% | 26 gestures | interaction with virtual environments | cardinal directions dataset | depth range limitation | 3000 mm |
| [57] | digital camera | – | YUV & CAMShift algorithm | hand gesture | naïve Bayes classifier | high | unlimited | human and machine system | data set created by author | changed illumination, rotation problem, position problem | – |

| Author | Type of Camera | Resolution | Techniques/ Methods for Segmentation | Feature Extract Type | Classify Algorithm | Recognition Rate | No. of Gestures | Application Area | Dataset Type | Invariant Factor | Distance from Camera |

|---|---|---|---|---|---|---|---|---|---|---|---|

| [58] | Kinect camera depth sensor | 512 × 424 pixels | Euclidean distance & geodesic distance | fingertip | skeleton pixels extracted | – | hand tracking | real time hand tracking method | – | – | – |

| [59] | Intel Real Sense depth camera | – | skeleton data | hand-skeletal joints’ positions | convolutional neural network (CNN) | 91.28% 84.35% | 14 gestures 28 gestures | classification method | Dynamic Hand Gesture-14/28 (DHG) dataset | only works on complete sequences | – |

| [60] | Kinect camera | 240 × 320 pixels | Laplacian-based contraction | skeleton points clouds | Hungarian algorithm | 80% | 12 gestures | hand gesture recognition method | ChaLearn Gesture Dataset (CGD2011) | HGR less performance in the viewpoint 0◦condition | – |

| [61] | RGB video sequence recorded | – | vision-based approach & skeletal data | hand and body skeletal features | skeleton classification network | – | hand gesture | sign language recognition | LSA64 dataset | difficulties in extracting skeletal data because of occlusions | – |

| [62] | Intel RealSense depth camera | 640 × 480 pixels | depth and skeletal dataset | hand gesture | supervised learning classifier support vector machine (SVM) with a linear kernel | 88.24% 81.90% | 14 gestures 28 gestures | hand gesture application | created SHREC 2017 track “3D Hand Skeletal Dataset” | – | – |

| [63] | Kinect v2 camera sensor | 512 × 424 pixels | depth metadata | dynamic hand gesture | SVM | 95.42% | 10 gestures 26 gestures | Arabic numerals (0–9) and letters (26) | authors’ own dataset | low recognition rate for “O”, “T” and “2” | – |

| [64] | Kinect RGB camera & depth sensor | 640 × 480 | skeleton data | hand blob | – | – | hand gesture | Malaysian sign language | – | – | – |

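Entry [58] in the table above pairs Euclidean distance with geodesic distance for fingertip extraction. The sketch below only illustrates why the two measures differ on a hand-like silhouette; it is not the method of [58]. Geodesic distance is approximated here by a breadth-first search over 4-connected foreground pixels, so a path has to stay inside the mask, whereas the Euclidean distance cuts straight across the background. The toy mask, the seed position and the function name `geodesic_distances` are assumptions made for illustration.

```python
import math
from collections import deque


def geodesic_distances(mask, seed):
    """Breadth-first search over 4-connected foreground pixels.

    mask: list of rows, truthy values mark foreground (hand) pixels.
    seed: (row, col) start pixel, e.g. an assumed palm centre.
    Returns a dict mapping each reachable pixel to its step count from seed.
    """
    rows, cols = len(mask), len(mask[0])
    if not mask[seed[0]][seed[1]]:
        raise ValueError("seed must lie on a foreground pixel")
    dist = {seed: 0}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and mask[nr][nc] and (nr, nc) not in dist:
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return dist


# Toy U-shaped mask: two "fingers" joined at the bottom row.
mask = [
    [1, 0, 1],
    [1, 0, 1],
    [1, 1, 1],
]
dist = geodesic_distances(mask, seed=(0, 0))
geodesic = dist[(0, 2)]                 # path must go around the gap
euclidean = math.dist((0, 0), (0, 2))   # straight line across the gap
print(geodesic, euclidean)
```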
| Author | Type of Camera | Resolution | Techniques/ Methods for Segmentation | Feature Extract Type | Classify Algorithm | Recognition Rate | No. of Gestures | Application Area | Invariant Factor | Distance from Camera |

|---|---|---|---|---|---|---|---|---|---|---|

| [65] | Kinect V1 | RGB - 640 × 480 depth - 320 × 240 | threshold & near-convex shape | finger gesture | finger–earth mover’s distance (FEMD) | 93.9% | 10 gestures | human–computer interactions (HCI) | – | – |

| [68] | Kinect V2 | RGB - 1920 × 1080 depth - 512 × 424 | local neighbor method & threshold segmentation | fingertip | convex hull detection algorithm | 96% | 6 gestures | natural human–robot interaction | – | (500–2000) mm |

| [69] | Kinect V2 | Infrared sensor depth - 512 × 424 | operation of depth and infrared images | finger counting & hand gesture | number of separate areas | – | finger count & two hand gestures | mouse-movement controlling | – | < 500 mm |

| [70] | Kinect V1 | RGB - 640 × 480 depth - 320 × 240 | depth thresholds | finger gesture | finger counting classifier & finger name collect & vector matching | 84% one hand 90% two hand | 9 gestures | chatting with speech | – | (500–800) mm |

| [71] | Kinect V1 | RGB - 640 × 480 depth - 320 × 240 | frame difference algorithm | hand gesture | automatic state machine (ASM) | 94% | hand gesture | human–computer interaction | – | – |

| [72] | Kinect V1 | RGB - 640 × 480 depth - 320 × 240 | skin & motion detection & Hu moments and orientation | hand gesture | discrete hidden Markov model (DHMM) | – | 10 gestures | human–computer interfacing | – | – |

| [14] | Kinect V1 | depth - 640 × 480 | range of depth image | hand gestures 1–5 | kNN classifier & Euclidean distance | 88% | 5 gestures | electronic home appliances | – | (250–650) mm |

| [73] | Kinect V1 | depth - 640 × 480 | distance method | hand gesture | – | – | hand gesture | human–computer interaction (HCI) | – | – |

| [74] | Kinect V1 | depth - 640 × 480 | threshold range | hand gesture | – | – | hand gesture | hand rehabilitation system | – | 400–1500 mm |

| [75] | Kinect V2 | RGB - 1920 × 1080 depth - 512 × 424 | Otsu’s global threshold | finger gesture | kNN classifier & Euclidean distance | 90% | finger count | human–computer interaction (HCI) | hand not identified if it is not connected with the boundary | 250–650 mm |

| [76] | Kinect V1 | RGB - 640 × 480 depth - 640 × 480 | depth-based data and RGB data together | finger gesture | distance from the device and shape-based matching | 91% | 6 gestures | finger mouse interface | – | 500–800 mm |

| [77] | Kinect V1 | depth - 640 × 480 | depth threshold and K-curvature | finger counting | depth threshold and K-curvature | 73.7% | 5 gestures | picture selection application | fingertips should be detected even though the hand is moving or rotating | – |

| [78] | Kinect V1 | RGB - 640 × 480 depth - 320 × 240 | integrate the RGB and depth information | hand gesture | forward recursion & SURF | 90% | hand gesture | virtual environment | – | – |

| [79] | Kinect V2 | depth - 512 × 424 | skeletal data stream & depth & color data streams | hand gesture | support vector machine (SVM) & artificial neural networks (ANN) | 93.4% for SVM 98.2% for ANN | 24 alphabets hand gesture | American Sign Language | – | 500–800 mm |

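Several entries in the table above segment the hand by keeping only the pixels whose depth lies inside a working range, for example 500–800 mm. The sketch below shows that idea in its simplest form and does not reproduce any cited system. It assumes a depth map given as nested lists of millimetre values in which 0 marks an invalid reading; the function name `segment_by_depth` and the default range are hypothetical. Because the lower bound is above zero, invalid readings are rejected along with the background.

```python
def segment_by_depth(depth_mm, near_mm=500, far_mm=800):
    """Return a binary mask: 1 where near_mm <= depth <= far_mm, else 0."""
    if near_mm > far_mm:
        raise ValueError("near_mm must not exceed far_mm")
    return [
        [1 if near_mm <= d <= far_mm else 0 for d in row]
        for row in depth_mm
    ]


# Toy depth map in millimetres: background near 1200 mm, hand near 650 mm,
# and two invalid readings encoded as 0 (an assumption of this sketch).
depth = [
    [0,    1200, 1180, 1210],
    [1190, 640,  655,  1205],
    [1185, 630,  660,  0],
]
mask = segment_by_depth(depth)
hand_pixels = sum(sum(row) for row in mask)
print(mask, hand_pixels)
```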
| Author | Type of Camera | Techniques/ Methods for Segmentation | Feature Extract Type | Classify Algorithm | Type of Error | Hardware Run | Application Area | Dataset Type | Runtime Speed |

|---|---|---|---|---|---|---|---|---|---|

| [80] | RGB camera | network directly predicts the control points in 3D | 3D hand poses, 6D object poses, object classes and action categories | PnP algorithm & single-shot neural network | fingertips 48.4 mm, object coordinates 23.7 mm | real-time speed of 25 fps on an NVIDIA Tesla M40 | framework for understanding human behavior through 3D hand and object interactions | First-person hand action (FPHA) dataset | 25 fps |

| [81] | PrimeSense depth cameras | depth maps | 3D hand pose estimation & sphere model renderings | pose estimation neural network | mean joint error: 12.6 mm (stack = 1), 12.3 mm (stack = 2) | – | hand pose estimation using a self-supervision method | NYU Hand Pose Dataset | – |

| [82] | RGB-D camera | single RGB image fed directly to the network | 3D hand shape and pose | networks trained with full supervision | mesh error 7.95 mm, pose error 8.03 mm | Nvidia GTX 1080 GPU | model for estimating 3D hand shape from a monocular RGB image | Stereo hand pose tracking benchmark (STB) & Rendered Hand Pose Dataset (RHD) | 50 fps |

| [83] | Kinect V2 camera | segmentation mask Kinect body tracker | hand | machine learning | Marker error 5% subset of the frames in each sequence & pixel classification error | CPU only | interactions with virtual and augmented worlds | Finger paint dataset & NYU dataset used for comparison | high frame-rate |

| [84] | raw depth image | CNN-based hand segmentation | 3D hand pose regression pipeline | CNN-based algorithm | 3D Joint Location Error 12.9 mm | Nvidia Geforce GTX 1080 Ti GPU | applications of virtual reality (VR) | dataset contains 8000 original depth images created by authors | – |

| [85] | Kinect V2 camera | bounding box around the hand & hand mask | hand | appearance and the kinematics of the hand | percentage of template vertices over all frames | – | Interaction with deformable object & tracking | synthetic dataset generated with the Blender modeling software | – |

| [86] | RGBD data from 3 Kinect devices | regression-based method & hierarchical feature extraction | 3D hand pose estimation | 3D hand pose estimation via semi-supervised learning. | Mean error 7.7 mm | NVIDIA TITAN Xp GPU | human–computer interaction (HCI), computer graphics and virtual/augmented reality | For evaluation ICVL Dataset & MSRA Dataset & NYU Dataset | 58 fps |

| [87] | single depth images. | depth image | 3D hand pose | 3D point cloud of hand as network input and outputs heat-maps | mean error distances | Nvidia TITAN Xp GPU | (HCI), computer graphics and virtual/augmented reality | For evaluation NYU dataset & ICVL dataset & MSRA datasets | 41.8 fps |

| [88] | depth images | predicting heat maps of hand joints in detection-based methods | hand pose estimation | dense feature maps through intermediate supervision in a regression-based framework | mean error 6.68 mm, maximal per-joint error 8.73 mm | GeForce GTX 1080 Ti | (HCI), virtual and mixed reality | For evaluation ‘HANDS 2017’ challenge dataset & first-person hand action | – |

| [89] | RGB-D cameras | – | 3D hand pose estimation | weakly supervised method | mean error 0.6 mm | GeForce GTX 1080 GPU with CUDA 8.0. | (HCI), virtual and mixed reality | Rendered hand pose (RHD) dataset | – |

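The "Type of Error" column above mostly reports joint errors in millimetres. As a rough illustration of how such figures are commonly computed (the cited papers may define their metrics differently), the sketch below takes predicted and ground-truth 3D joint positions, measures the Euclidean distance per joint, and reports the mean and the maximum. The function names and the three-joint toy pose are assumptions made for illustration.

```python
import math


def per_joint_errors(pred, truth):
    """Euclidean distance between each predicted and ground-truth joint."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same number of joints")
    return [math.dist(p, t) for p, t in zip(pred, truth)]


def mean_joint_error(pred, truth):
    """Average of the per-joint Euclidean errors."""
    errors = per_joint_errors(pred, truth)
    return sum(errors) / len(errors)


# Toy pose with three joints, coordinates in millimetres (illustrative only).
truth = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 20.0, 0.0)]
pred = [(3.0, 4.0, 0.0), (10.0, 0.0, 12.0), (0.0, 20.0, 0.0)]

errors = per_joint_errors(pred, truth)   # one distance per joint
mean_err = mean_joint_error(pred, truth)
max_err = max(errors)
print(errors, mean_err, max_err)
```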
| Author | Type of Camera | Resolution | Techniques/ Methods for Segmentation | Feature Extract Type | Classify Algorithm | Recognition Rate | No. of Gestures | Application Area | Dataset Type | Hardware Run |

|---|---|---|---|---|---|---|---|---|---|---|

| [90] | Different mobile cameras | HD and 4K | feature extraction by CNN | hand gestures | Adapted Deep Convolutional Neural Network (ADCNN) | training set 100% test set 99% | 7 hand gestures | HCI communication for people injured by stroke | created from recorded video frames | Core™ i7-6700 CPU @ 3.40 GHz |

| [91] | webcam | – | skin color detection and morphology & background subtraction | hand gestures | deep convolutional neural network (CNN) | training set 99.9% test set 95.61% | 6 hand gestures | home appliance control (smart homes) | 4800 images collected for training and 300 for testing | – |

| [92] | RGB image | 640 × 480 pixels | no segmentation stage; image fed directly to CNN after resizing | hand gestures | deep convolutional neural network | simple backgrounds 97.1% complex background 85.3% | 7 hand gestures | command consumer electronics devices such as mobile phones and TVs | Mantecón et al.* dataset for direct testing | GPU with 1664 cores, base clock of 1050 MHz |

| [93] | Kinect | – | skin color modeling combined with convolutional neural network image feature | hand gestures | convolutional neural network & support vector machine | 98.52% | 8 hand gestures | – | image information collected by Kinect | CPU E5-1620 v4, 3.50 GHz |

| [94] | Kinect | Image size 200 × 200 | skin color in Y–Cb–Cr color space & Gaussian mixture model | hand gestures | convolutional neural network | Average 95.96% | 7 hand gestures | human hand gesture recognition system | image information collected by Kinect | – |

| [95] | video sequences recorded | – | semantic segmentation based deconvolution neural network | hand gesture motion | deep long-term recurrent convolutional network (LRCN) | 95% | 9 hand gestures | intelligent vehicle applications | Cambridge gesture recognition dataset | Nvidia Geforce GTX 980 graphics |

| [96] | image | Original images in the database 248 × 256 or 128 × 128 pixels | Canny operator edge detection | hand gesture | double channel convolutional neural network (DC-CNN) & softmax classifier | 98.02% | 10 hand gestures | man–machine interaction | Jochen Triesch Database (JTD) & NAO Camera hand posture Database (NCD) | Core i5 processor |

| [97] | Kinect | – | – | Skeleton-based hand gesture recognition. | neural network based on SPD | 85.39% | 14 hand gestures | – | Dynamic Hand Gesture (DHG) dataset & First-Person Hand Action (FPHA) dataset | non-optimized CPU 3.4 GHz |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Oudah, M.; Al-Naji, A.; Chahl, J. Hand Gesture Recognition Based on Computer Vision: A Review of Techniques. J. Imaging 2020, 6, 73. https://0-doi-org.brum.beds.ac.uk/10.3390/jimaging6080073
