Safety Culture Oversight: An Intangible Concept for Tangible Issues within Nuclear Installations

Abstract

1. Introduction

2. Capturing Safety Culture

2.1. A Model Based on Safety Culture Observations

“During an inspection in the main control room, an alarm occurs. The main control room operator immediately clears the alarm without checking the alarm card. The operator explains to the inspector that the alarm is related to maintenance work on a system. Nevertheless, the operator is unable to describe the technical link between the maintenance intervention and the alarm. After a short investigation, the inspector finds that the maintenance intervention has no relevant link to the alarm”.

“Requested checklists related to the use of hot cells are not systematically completed. That remark has already been made several times to operators by the nuclear authority”.

2.2. Methodological Aspects of Safety Culture Observations

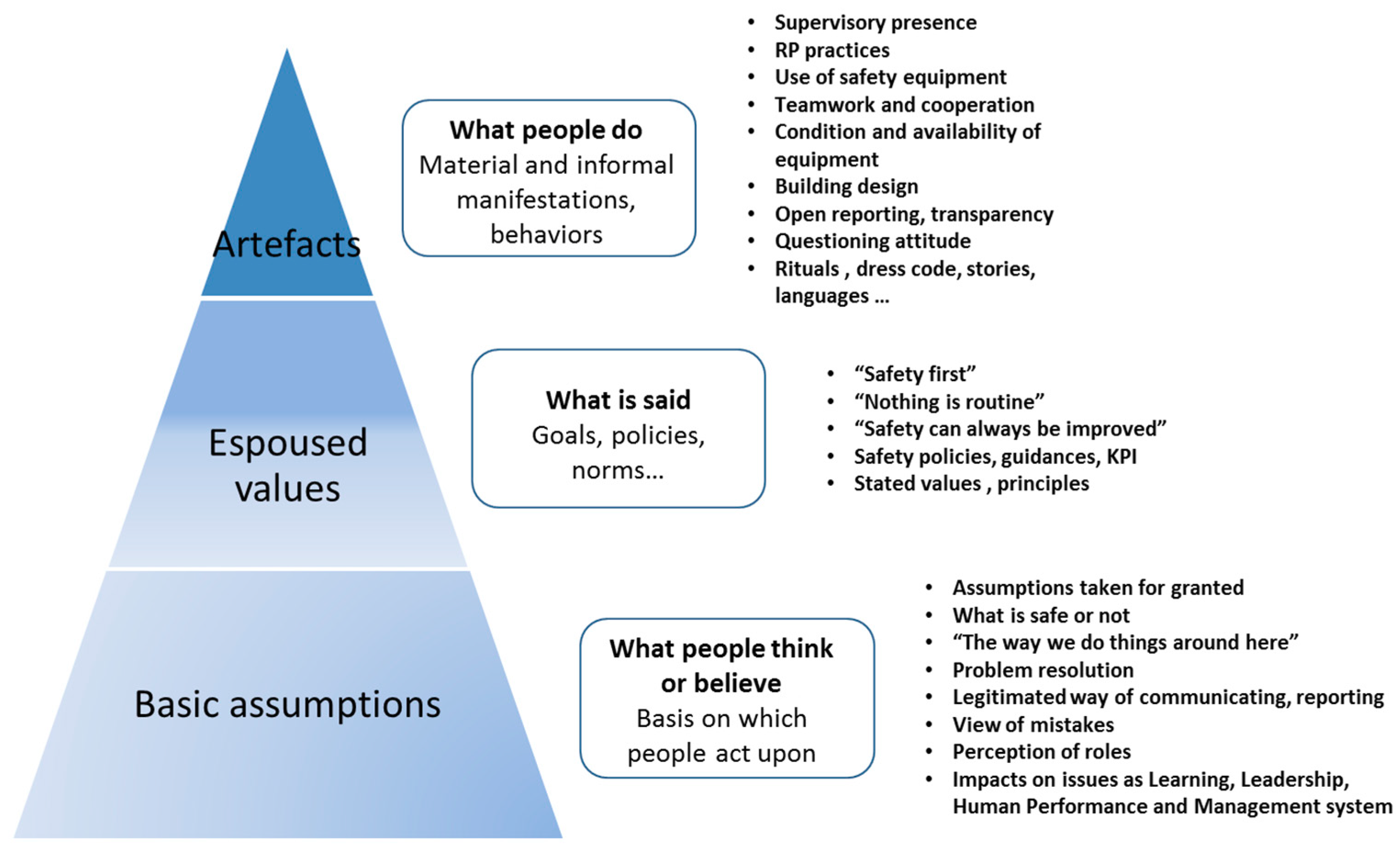

2.2.1. Seeking Visible and Invisible Elements

- Individual level: elements such as questioning attitude, individual awareness, accountability, reporting, rigorous and prudent approach…

- Group level: elements such as communication, teamwork, decision making, supervision, peer check…

- Organisational level: elements such as definition of responsibilities, definition and control of practices, qualification and training, review functions, management commitment, procedures, safety policies, resources…

2.2.2. Observations Are Descriptive Rather than Normative

“A manager of the Operation department goes into the field after working hours to check all work in progress. Some gaps are observed and reported by the manager to the team”.

2.2.3. Toward a Deep Understanding of the Workplace

(§1) “During a routine inspection in the main control room of unit X, a discrepancy was observed between the level of tank ICS C07 (Intermediate Cooling System), which indicated 86%, and procedure X-DOC-15, which references a Technical Specifications criterion of 56% < N < 80% (TS 16.XXX).

(§2) The observation was made at the beginning of the morning shift in the control room. The unit was operating at full power. Questioned about the tank level, the operator in charge stated that he was not aware of this indication: “I rarely take this level into account. It’s not in my procedure. We do not check it systematically”. The chief operator quickly opened the Technical Specifications and stated that the maximum tank level was not reported in the TS; only the minimum level was reported”.
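The discrepancy described in this observation can be made concrete with a small range check. The sketch below is illustrative only: the function name `within_band` is hypothetical, and it assumes that the minimum level reported in the TS is the same 56% quoted in the procedure, which the observation does not state.

```python
def within_band(level_pct, low_pct=None, high_pct=None):
    """Return True if the level lies strictly inside the given bounds.

    A bound set to None is treated as "not specified" and is not checked.
    """
    if low_pct is not None and level_pct <= low_pct:
        return False
    if high_pct is not None and level_pct >= high_pct:
        return False
    return True


observed_level = 86.0  # tank ICS C07 indication reported in the observation

# Criterion as referenced in procedure X-DOC-15: 56% < N < 80%
procedure_ok = within_band(observed_level, low_pct=56.0, high_pct=80.0)

# Criterion as read by the chief operator: minimum only.
# Assumption: the TS minimum equals the procedure's 56%.
ts_ok = within_band(observed_level, low_pct=56.0)

print(f"procedure criterion met: {procedure_ok}")
print(f"TS (minimum only) criterion met: {ts_ok}")
```

Under these assumptions the same reading fails the procedure's band but satisfies a minimum-only criterion, which is the kind of gap between two documents that the observation records.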

3. Assessing Safety Culture Observations

3.1. Safety Culture Assessment through a Quantitative Approach

3.2. Safety Culture Assessment through a Qualitative Approach

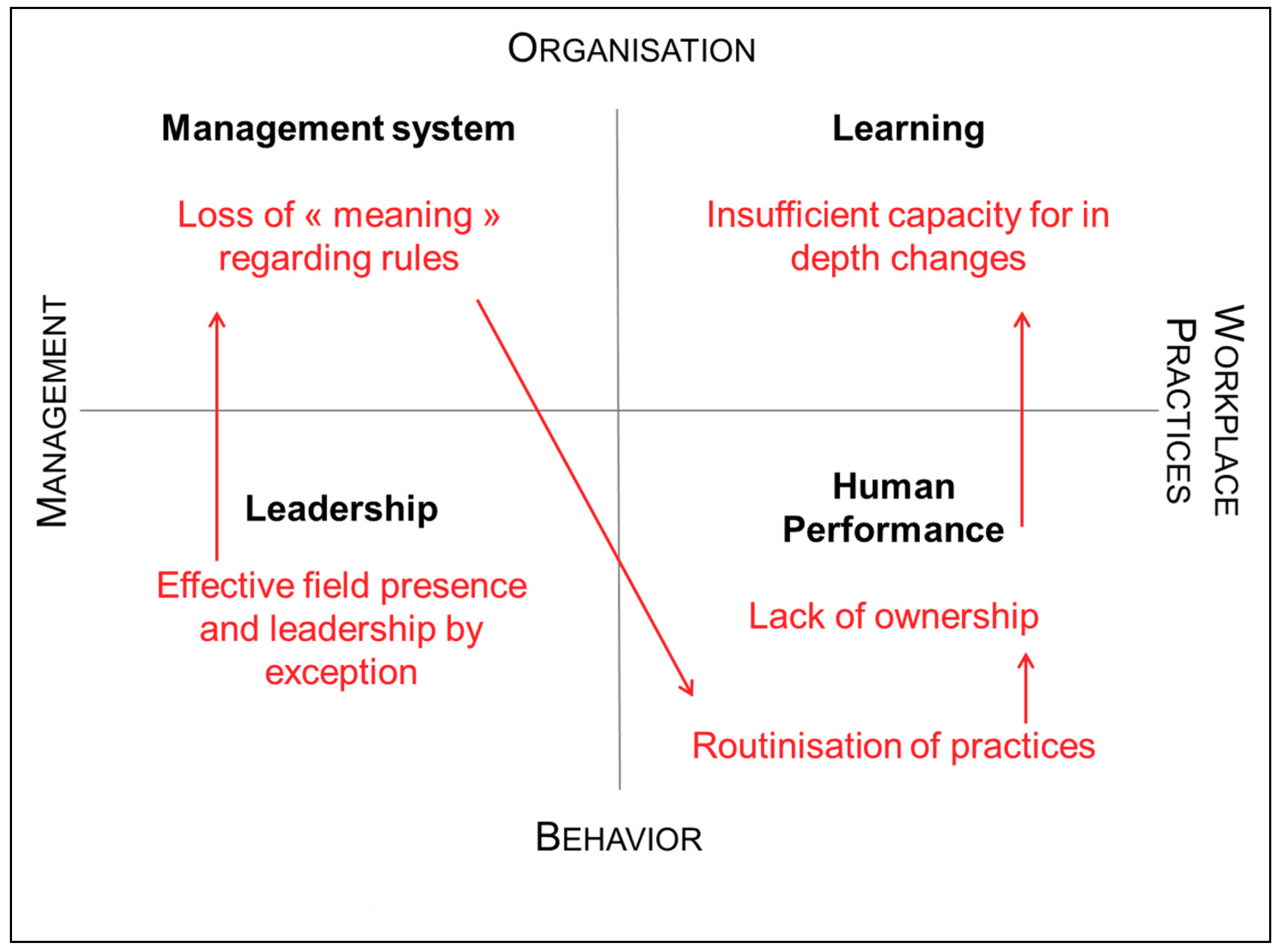

3.3. Safety Culture Oversight from a Regulatory Perspective

- Management system: within this dimension we can find safety culture elements such as safety policies, work process, procedures, interfaces… The main issue here is to assess the level of integration of safety within the management system and related documentation;

- Leadership: within this dimension we can find safety culture elements such as commitment, decision making, supervision… The main issue here is to assess the level of managers’ involvement regarding operations management;

- Human performance: within this dimension we can find safety culture elements such as a questioning attitude, compliance, team skills, situation awareness… The main issue here is to assess the consistency between field practices and human performance principles as well as the adaptation capabilities of field operators;

- Learning: within this dimension we can find safety culture elements such as reporting or assessment practices, knowledge transfer, continuous improvement… The main issue here is to assess the learning capabilities of the organisation.
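One way to picture how observations might be organised against these four dimensions is a small coding structure. The sketch below is an assumption-laden illustration, not the oversight model's actual tooling: the class names, the `tally_by_dimension` helper, and the level and dimension assigned to each sample observation are all hypothetical choices made for the example.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    INDIVIDUAL = "Individual"
    GROUP = "Group"
    ORGANISATIONAL = "Organisational"


class Dimension(Enum):
    MANAGEMENT_SYSTEM = "Management system"
    LEADERSHIP = "Leadership"
    HUMAN_PERFORMANCE = "Human performance"
    LEARNING = "Learning"


@dataclass(frozen=True)
class Observation:
    summary: str
    level: Level
    dimension: Dimension


def tally_by_dimension(observations):
    """Count observations per dimension, including dimensions with none."""
    counts = Counter(obs.dimension for obs in observations)
    return {dim: counts.get(dim, 0) for dim in Dimension}


# Hypothetical coding of three observations; the assignments are assumptions.
observations = [
    Observation("Alarm cleared without checking the alarm card",
                Level.INDIVIDUAL, Dimension.HUMAN_PERFORMANCE),
    Observation("Hot cell checklists not systematically completed",
                Level.ORGANISATIONAL, Dimension.LEARNING),
    Observation("Manager checks work in progress after hours",
                Level.GROUP, Dimension.LEADERSHIP),
]

tally = tally_by_dimension(observations)
for dimension, count in tally.items():
    print(f"{dimension.value}: {count}")
```

A tally like this only organises the material; the assessment itself still rests on interpreting each observation in its context.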

4. Applying the Model: An NPP Case Study

- Management system: Loss of meaning regarding rules;

- Leadership: Lack of effective field presence and leadership by exception;

- Human performance: Lack of ownership and routinisation of practices;

- Learning: Insufficient capacity for in-depth change.

- “Designed organisation”: at first, the global rules are followed;

- “Engineered organisation”: these rules come to be considered unnecessary because their usefulness is no longer perceived;

- “Applied organisation”: local rules take precedence in daily practices;

- “Failure”: ultimately it is the whole system that becomes vulnerable.

5. Conclusions: Tangible Effects of Safety Culture Oversight

Funding

Conflicts of Interest


© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bernard, B. Safety Culture Oversight: An Intangible Concept for Tangible Issues within Nuclear Installations. Safety 2018, 4, 45. https://0-doi-org.brum.beds.ac.uk/10.3390/safety4040045
