Sympérasmology: A Proposal for the Theory of Synthetic System Knowledge

Abstract

1. Introduction

1.1. The Broad Context of the Work

1.2. Setting the Stage for Discussion: System Knowledge in the Literature

1.3. The Addressed Research Phenomenon and Why It Is of Importance

- What differentiates system knowledge from the other bodies of knowledge?

- What are typical manifestations and enactments of system knowledge?

- What are the cybernetic and computational fundamentals of system knowledge?

- What are the genuine sources of system knowledge?

- What does conscious knowing mean for intellectualized systems?

- What is a system able to know by its sensing, reasoning, and learning capabilities?

- Why and how does a system get to know something?

- What are the proper criteria for system knowledge?

- What are the genuine mechanisms of generating system knowledge?

- Can a system know, be aware of, and understand what it knows?

- In what way can system knowledge be verified, validated, and consolidated?

- What is the future of system knowledge?

1.4. The Contents of the Paper

2. The Current State of the Research Field

2.1. Characteristics of Human Knowledge

2.2. Categories of Human Knowledge

2.3. Characteristics of System Knowledge

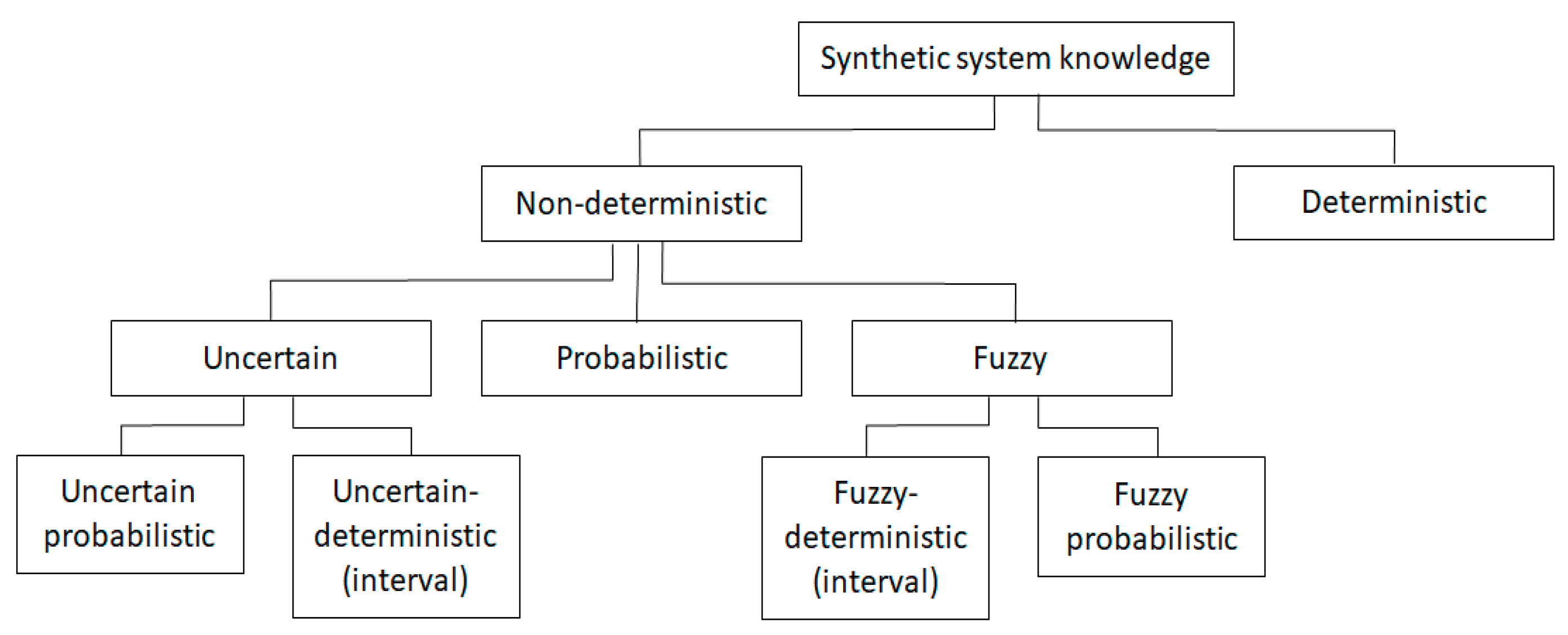

2.4. Categories of System Knowledge

2.5. The Main Forms and Mechanisms of Knowledge Inferring/Reasoning

2.6. The Main Functions of Knowledge Engineering and Management

3. Gnoseology of Common Knowledge

3.1. Fundamentals of Gnoseology

3.2. Potentials of Gnoseology

4. Epistemology of Scientific Knowledge

4.1. Fundamentals of Epistemology

4.2. Potentials of Epistemology

5. A Proposal for the Theory of Synthetic System Knowledge

5.1. The Major Findings and Their Implications

- The time has come to consider system knowledge not only as an asset, but also as the subject of knowledge-theoretical investigations. Such investigations may provide not only better insights into the nature, development, and potential of system knowledge, but also guidance on trends and future possibilities.

- The picture the literature offers about system knowledge is rather blurred. The two major constituents of systelligence, the dynamic body of synthetic system knowledge and the set of self-adaptive processing mechanisms, are not yet treated in synergy.

- The basis of system knowledge is human knowledge, the tacit part of which is difficult to elicit and transfer into engineered systems. On the other hand, the intangible part of human knowledge is at least as important for the development of smartly behaving systems as the tangible part.

- The literature did not offer generic and broadly accepted answers to several fundamental questions concerning the essence, creation, aggregation, handling, and exploitation of system knowledge.

- Human knowledge is one of the most multi-faceted phenomena, and it has been intensively debated and investigated since ancient times. Perhaps the largest challenge is not its heterogeneity, but the explosion of knowledge in both the individual and the organizational dimensions.

- Categorization, structuring, and formalization of human knowledge make it possible to transfer it to engineered systems, but current computational processing is restricted mainly to the syntactic (symbolic) level, and only partly reaches the semantic level.

- System knowledge is characterized by extreme heterogeneity, not only with respect to its contents, but also with respect to its representation, storage, and processing. The computational processing of these different bodies of knowledge needs appropriate reasoning mechanisms.

- There are five major families of ampliative computational mechanisms, which, however, cannot easily be combined or made to interoperate directly, due to the differences in the representation of knowledge and in the nature of the computational algorithms.

- The abovementioned issues pose challenges to both knowledge engineering and knowledge management, as does the issue of compositionality of smart and intelligent engineered systems. One of the challenges is the self-acquisition and self-management of problem-solving knowledge by systems, a consequence of their increasing smartness and autonomy.

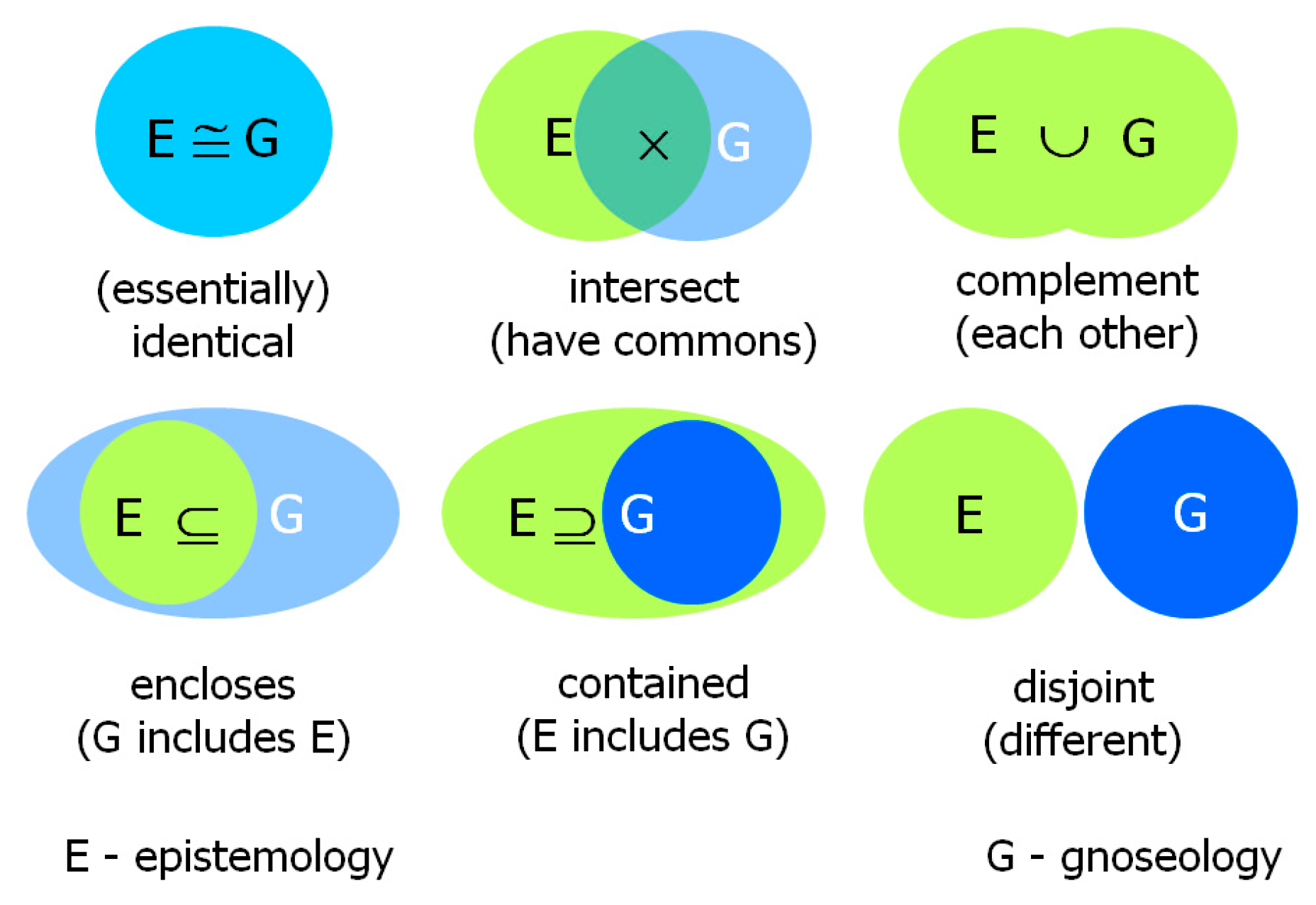

- The two known alternative theories of knowledge, offered by gnoseology and epistemology, focus on the manifestations of common knowledge and of scientific knowledge, respectively. Their relationship to synthetic system knowledge is unclear and uncertain.

- Synthetic system knowledge is composed of bodies/chunks of scientific knowledge and of common knowledge, as well as chunks/pieces of system-inferred and system-reasoned knowledge. Neither its entirety nor its run-time acquired parts are addressed by modern gnoseology or by contemporary epistemology.

5.2. Proposing Sympérasmology as the Key Theory of System Knowledge

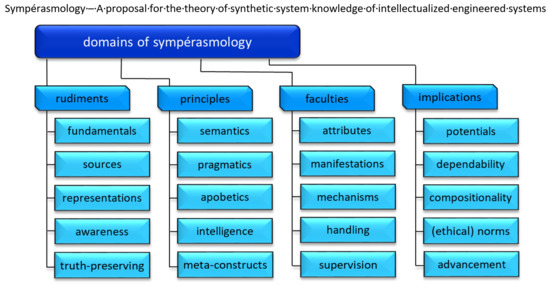

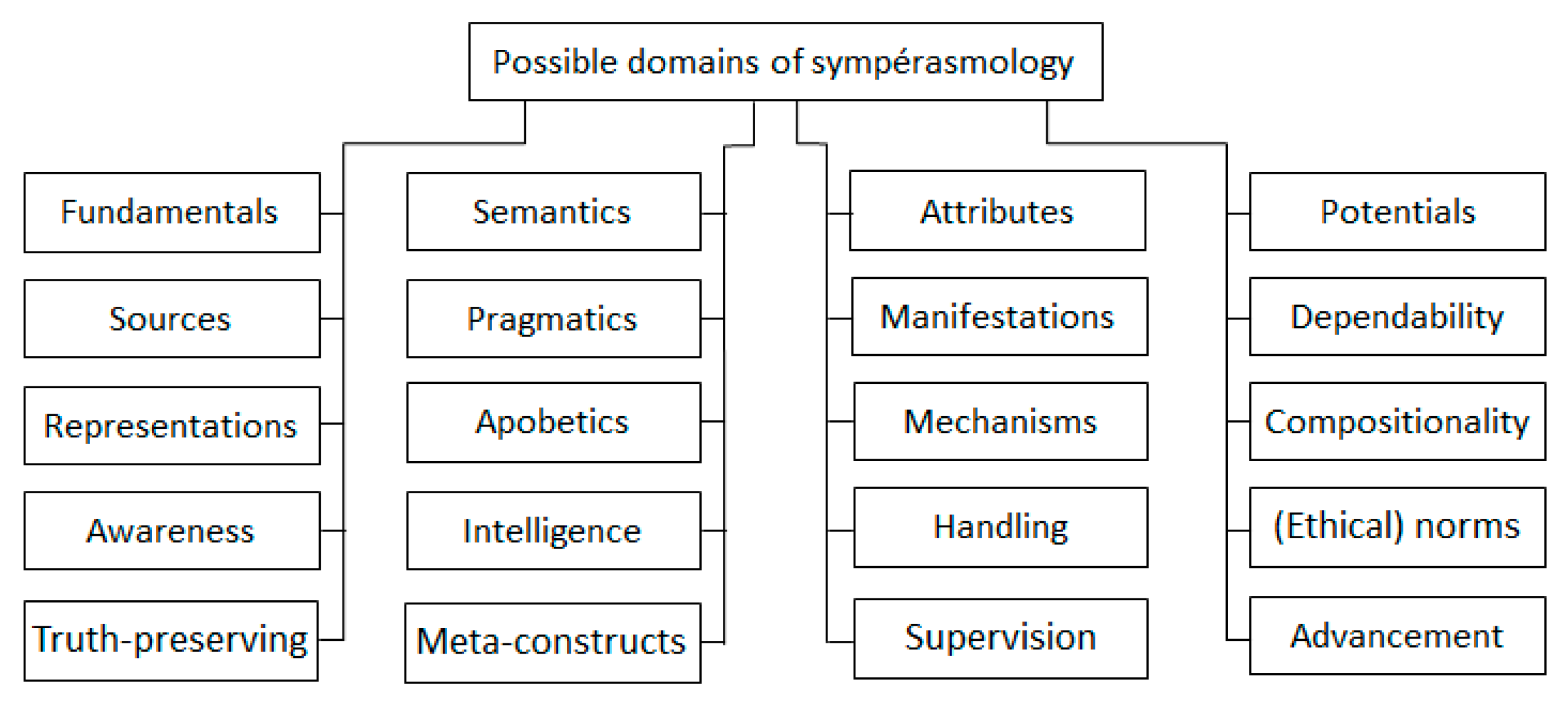

5.3. Domains of Sympérasmological Investigations

Funding

Conflicts of Interest

Knowledge Engineering Activities

| Creation | Treatment | Utilization |
|---|---|---|
| K-acquisition | K-modelling | K-testing |
| K-retrieval | K-meta-modelling | K-dissemination |
| K-inferring | K-representation | K-marketing |
| K-reasoning | K-storage | K-maintenance |
| K-fusion | K-organization | K-sharing |
| K-integration | K-explanation | |
| K-refinement | K-distribution | |
| K-discovery | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Horváth, I. Sympérasmology: A Proposal for the Theory of Synthetic System Knowledge. Designs 2020, 4, 47. https://0-doi-org.brum.beds.ac.uk/10.3390/designs4040047
