Medium-Term Regional Electricity Load Forecasting through Machine Learning and Deep Learning

Abstract

1. Introduction

2. Previous Works

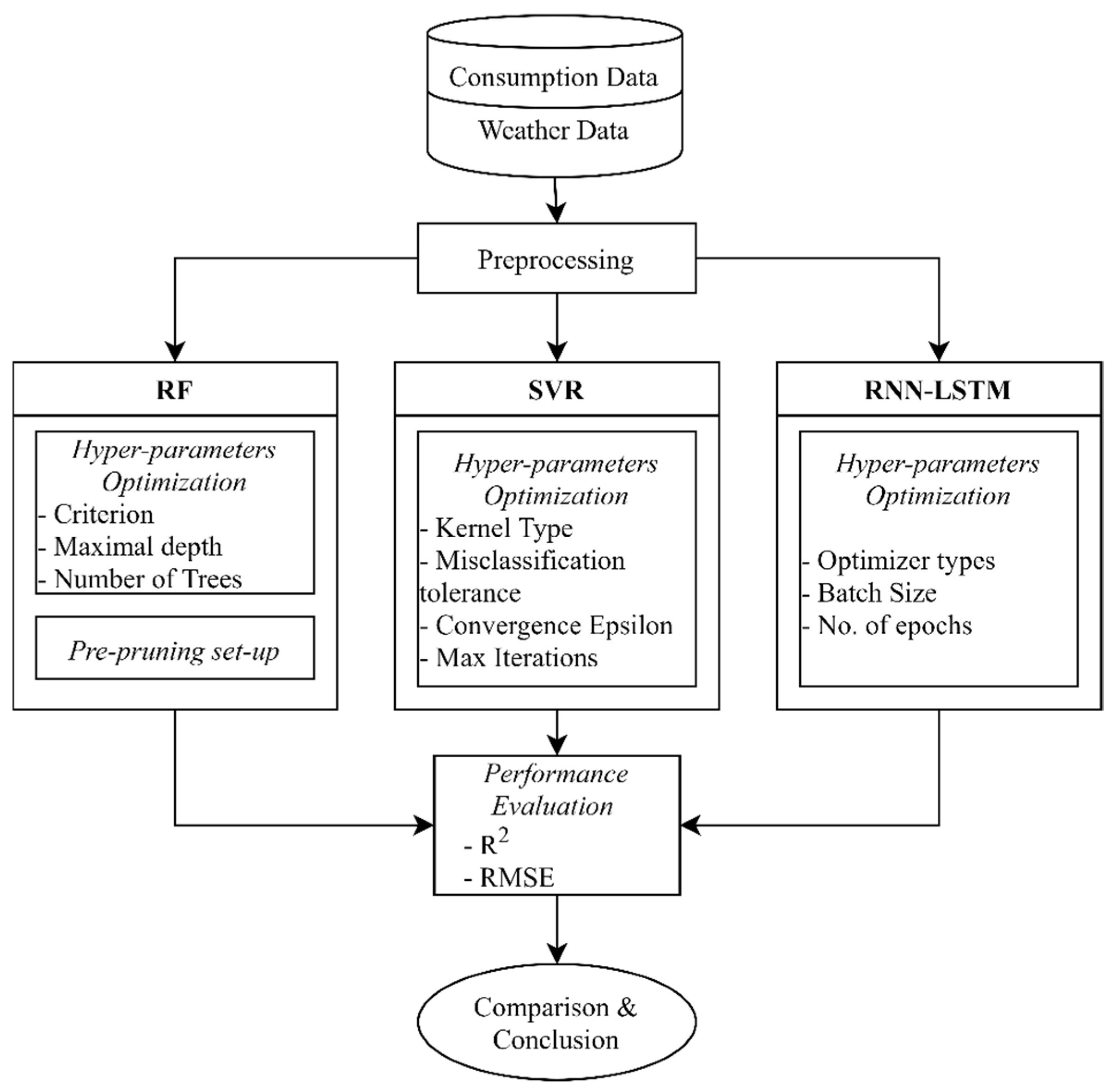

3. Materials and Methods

3.1. Random Forest (RF)

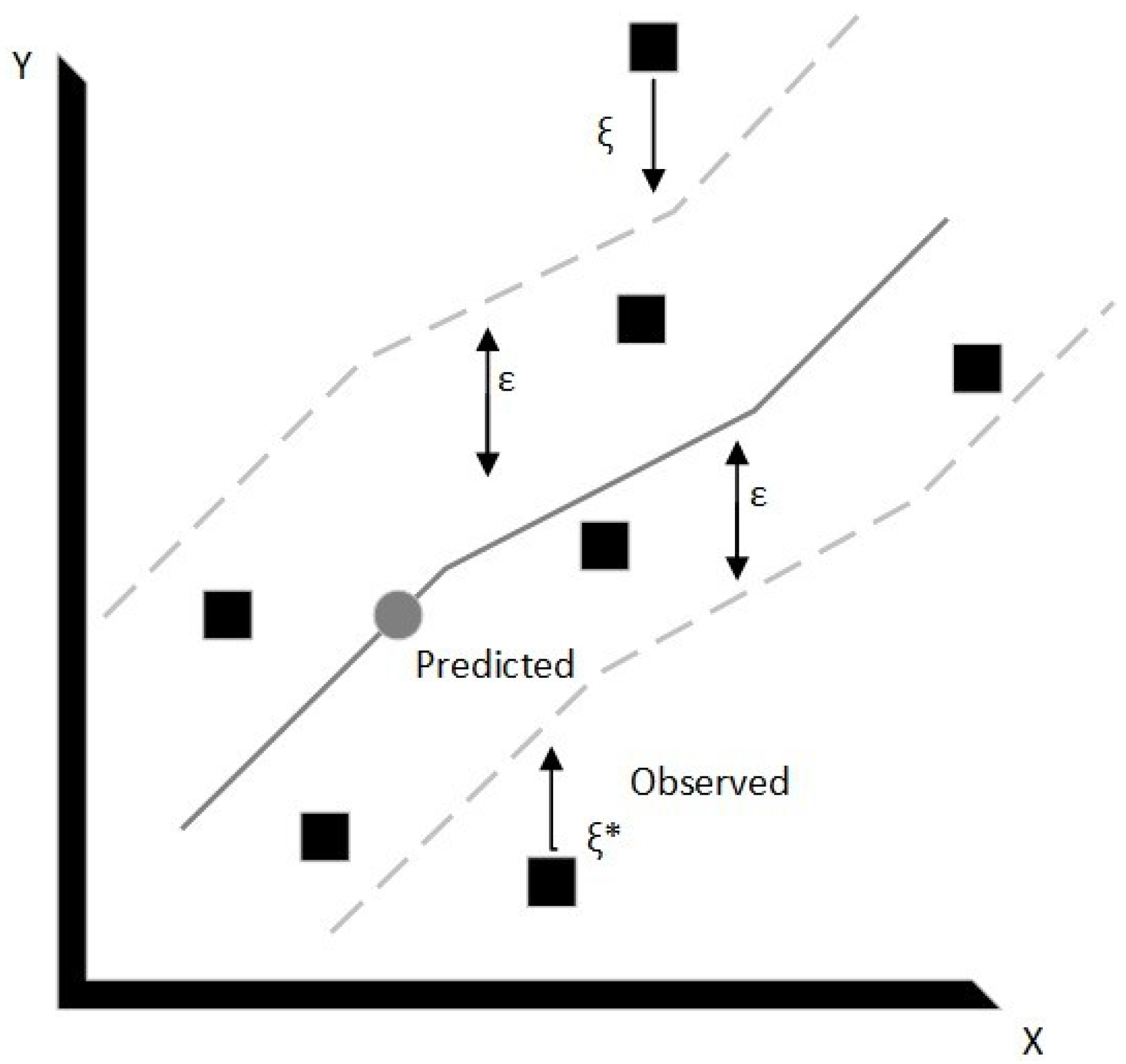

3.2. Support Vector Machine (SVM)

3.3. Deep Learning

4. Results

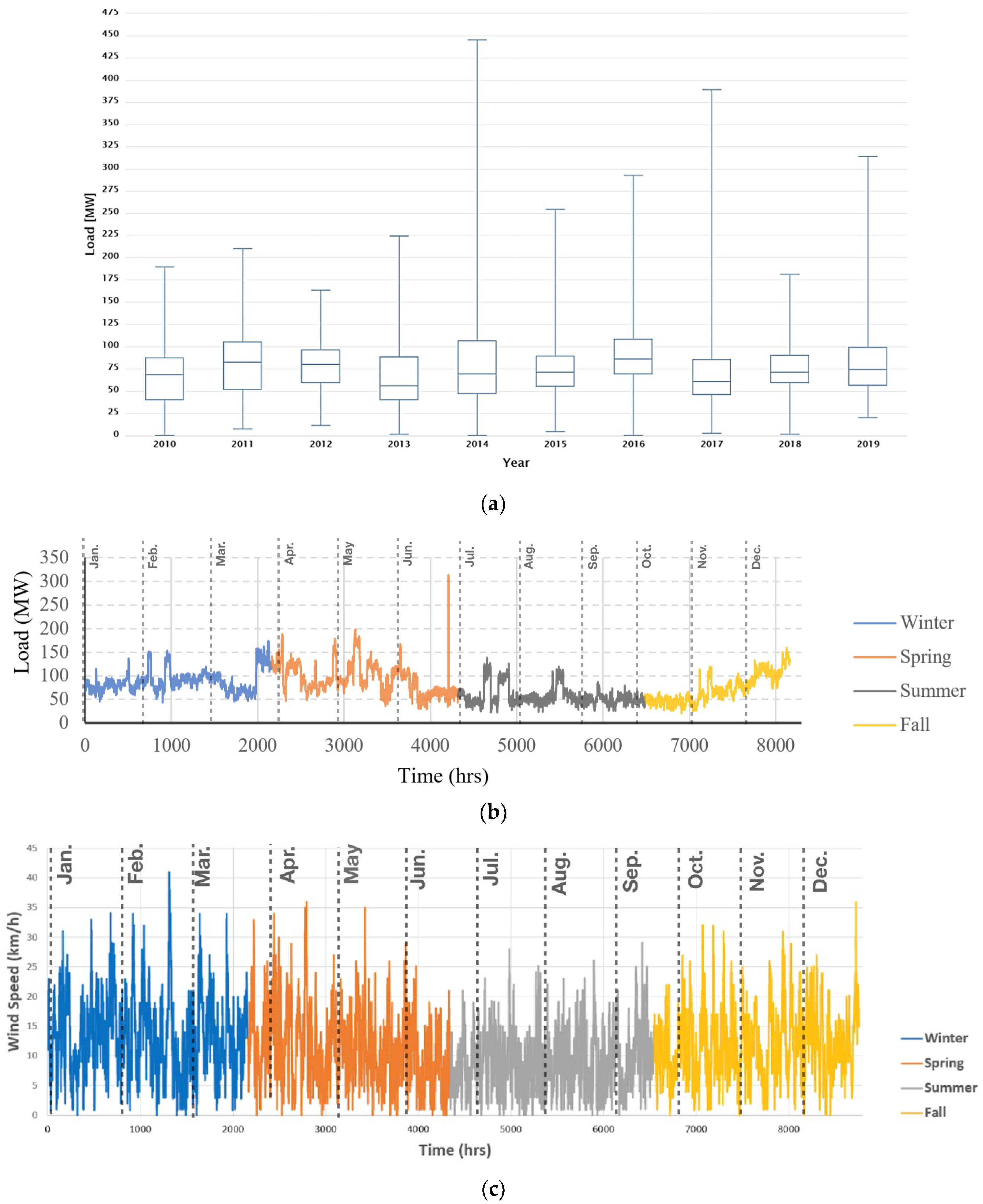

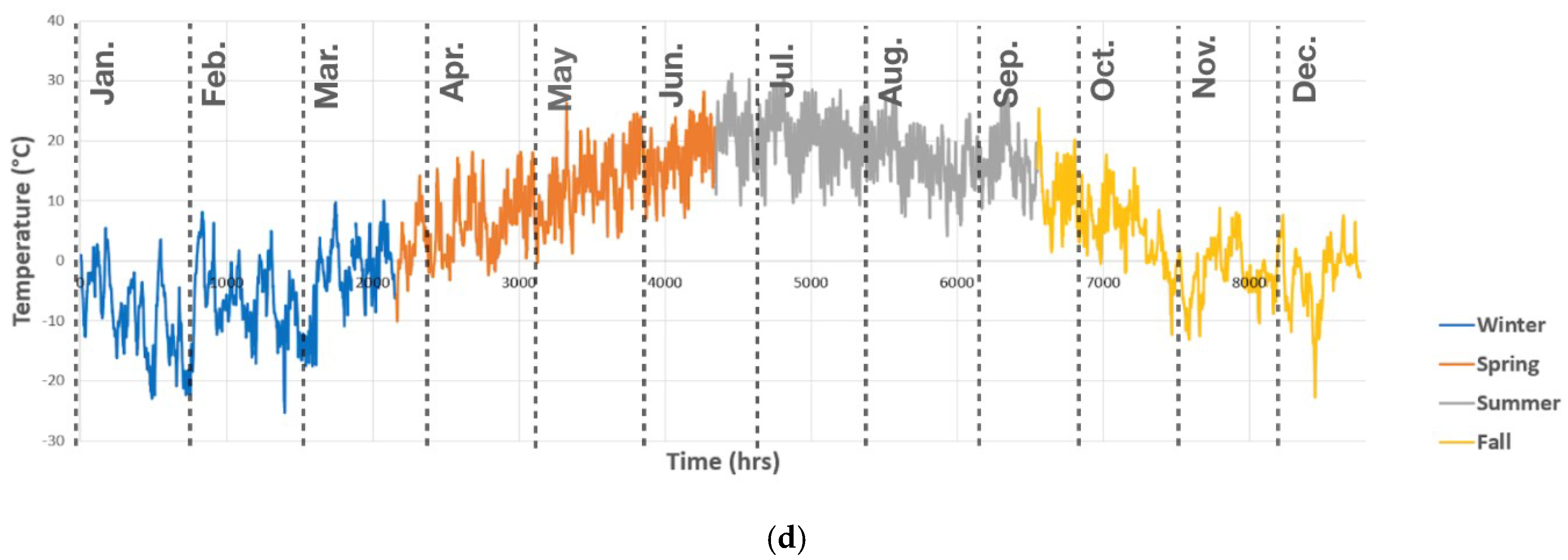

4.1. Case Study

4.2. Implementation

5. Discussion

Validation with the Literature

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameters | | Setting |
|---|---|---|
| Criterion | | Least Squares |
| Maximal depth | | 30 |
| Number of Trees | | 150 |
| Pre-pruning parameters | Minimal gain | 0.01 |
| | Minimal leaf size | 2 |
| | Minimal size for split | 4 |
| | Number of pre-pruning alternatives | 3 |
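The tree count, depth limit, and pre-pruning thresholds above read as a random forest regression configuration. As a rough sketch, they could be carried over to scikit-learn keyword names as shown below. The source does not name the toolkit that was used, so the mapping is an assumption; in particular, `min_impurity_decrease` is only the closest analogue of "Minimal gain", and `build_random_forest` is a hypothetical helper name.

```python
# Sketch only: maps the tabulated settings onto scikit-learn keyword names.
# The toolkit behind the table is not stated, so this mapping is an assumption.

RF_SETTINGS = {
    "n_estimators": 150,           # "Number of Trees"
    "criterion": "squared_error",  # "Least Squares" (named "mse" before scikit-learn 1.0)
    "max_depth": 30,               # "Maximal depth"
    "min_samples_leaf": 2,         # "Minimal leaf size"
    "min_samples_split": 4,        # "Minimal size for split"
    # "Minimal gain" has no exact scikit-learn equivalent;
    # min_impurity_decrease is the nearest analogue (assumption, scales may differ).
    "min_impurity_decrease": 0.01,
}
# "Number of pre-pruning alternatives" has no scikit-learn counterpart and is omitted.


def build_random_forest():
    """Return an unfitted RandomForestRegressor (hypothetical helper; needs scikit-learn)."""
    from sklearn.ensemble import RandomForestRegressor  # imported lazily on purpose
    return RandomForestRegressor(**RF_SETTINGS)
```

Keeping the settings in a plain dictionary makes it easy to log them alongside results, and the lazy import means the dictionary can be inspected even where scikit-learn is not installed.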

| Parameters | Setting |
|---|---|
| Kernel Type | dot |
| Misclassification tolerance, C | 0 |
| Convergence Epsilon, ε | 0.001 |
| Max Iterations | 10,000 |

| Parameters | Values/Types |
|---|---|
| No. hidden layers | 3 |
| No. neurons per hidden layer | 50 |
| Activation function | Hyperbolic tangent (tanh) |
| Optimizer types | {Adam, RMSprop} |
| Batch Size | {20, 25, 32} |
| No. of epochs | {5, 10, 15, 20} |
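The braces in the table above list candidate values rather than single settings. A minimal sketch of how such candidates could be enumerated is given below. It assumes an exhaustive grid over optimizer, batch size, and epoch count, which the source does not confirm; the names `FIXED`, `SEARCH_SPACE`, and `grid` are illustrative only.

```python
from itertools import product

# Settings that take a single value in the table.
FIXED = {"hidden_layers": 3, "neurons_per_layer": 50, "activation": "tanh"}

# Settings listed with several candidate values.
SEARCH_SPACE = {
    "optimizer": ["Adam", "RMSprop"],
    "batch_size": [20, 25, 32],
    "epochs": [5, 10, 15, 20],
}


def grid(space):
    """Yield one dict per combination of the candidate values."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


# Merge the fixed settings into every candidate combination.
configs = [{**FIXED, **combo} for combo in grid(SEARCH_SPACE)]
print(len(configs))  # 2 * 3 * 4 = 24 combinations
print(configs[0])
```

Under this assumption there are 24 configurations to train per network type; a random or staged search would visit fewer of them.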

| Model | RMSE (Mean ± SD) | RMSE (Range) | R² (Mean ± SD) | R² (Range) | Computation Time (min) |
|---|---|---|---|---|---|
| LSTM | 9.569 ± 3.050 | [7.16, 15.00] | 0.921 ± 0.0341 | [0.85, 0.95] | 93 |
| NARX | 6.987 ± 1.433 | [5.52, 9.75] | 0.953 ± 0.0142 | [0.93, 0.98] | 16 |
| SVM | 11.878 ± 2.447 | [7.89, 15.22] | 0.886 ± 0.0345 | [0.84, 0.94] | 244 |
| RF | 13.028 ± 3.483 | [10.05, 21.49] | 0.853 ± 0.0489 | [0.78, 0.91] | 50 |
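The table above reports RMSE and R² without restating how they are computed. The sketch below assumes the standard definitions (root of the mean squared error, and one minus the ratio of residual to total sum of squares) and then ranks the four models by the mean RMSE copied from the table. The toy series is made up for illustration and is not data from the study.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error between two equal-length sequences."""
    n = len(actual)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n)


def r2(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Toy numbers, invented for illustration only.
y_true = [10.0, 12.0, 14.0, 16.0]
y_pred = [11.0, 12.0, 13.0, 16.0]
print(round(rmse(y_true, y_pred), 4))  # sqrt(2 / 4), about 0.7071
print(round(r2(y_true, y_pred), 4))    # 1 - 2 / 20 = 0.9

# Mean values copied from the table: (RMSE, R2, computation time in minutes).
RESULTS = {
    "LSTM": (9.569, 0.921, 93),
    "NARX": (6.987, 0.953, 16),
    "SVM": (11.878, 0.886, 244),
    "RF": (13.028, 0.853, 50),
}
by_rmse = sorted(RESULTS, key=lambda m: RESULTS[m][0])
print(by_rmse)  # ['NARX', 'LSTM', 'SVM', 'RF']
```

Sorting by mean R² (descending) gives the same order, and NARX also has the shortest reported computation time, so on these averages it leads on all three columns.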

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shirzadi, N.; Nizami, A.; Khazen, M.; Nik-Bakht, M. Medium-Term Regional Electricity Load Forecasting through Machine Learning and Deep Learning. Designs 2021, 5, 27. https://0-doi-org.brum.beds.ac.uk/10.3390/designs5020027
