Spatial–Temporal Attention Two-Stream Convolution Neural Network for Smoke Region Detection

Abstract

1. Introduction

2. Materials and Methods

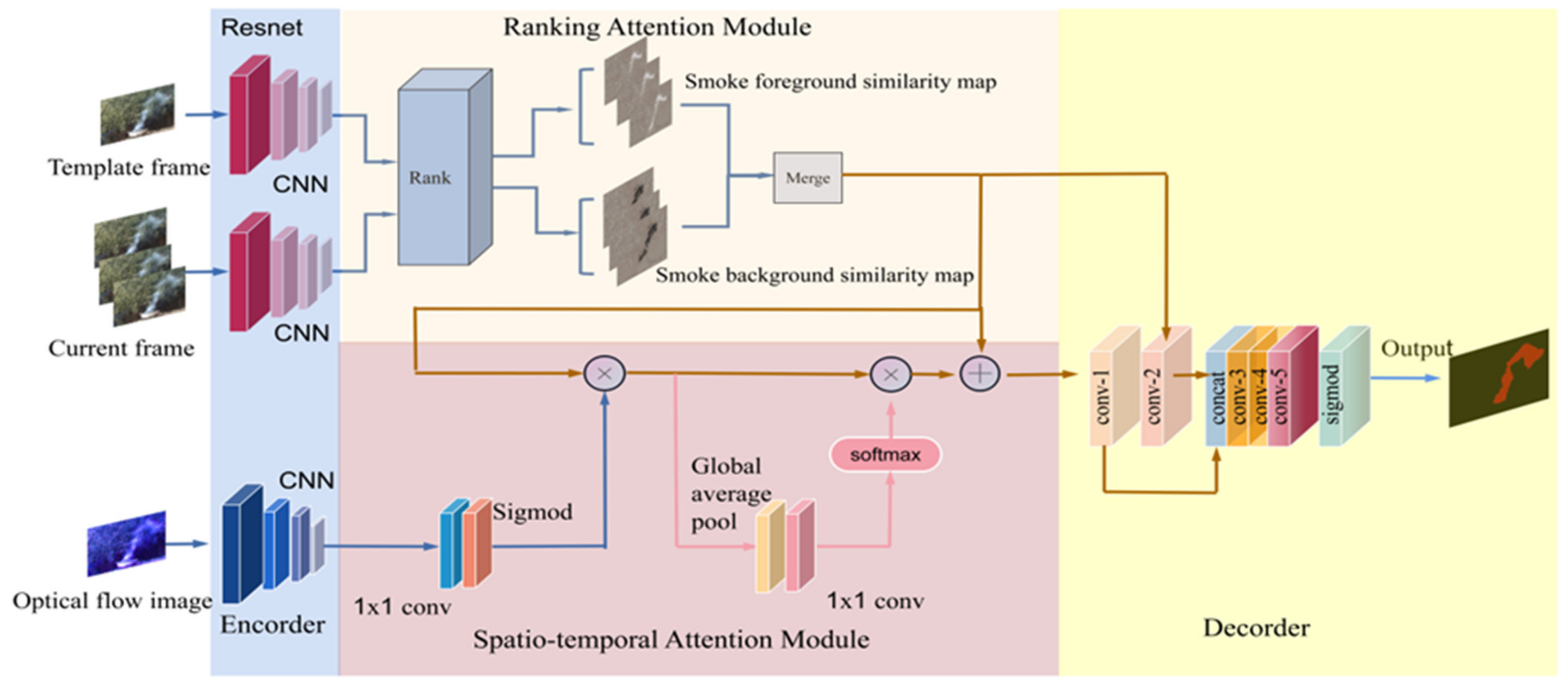

2.1. Network Structure

2.2. Spatio-Temporal Attention Module

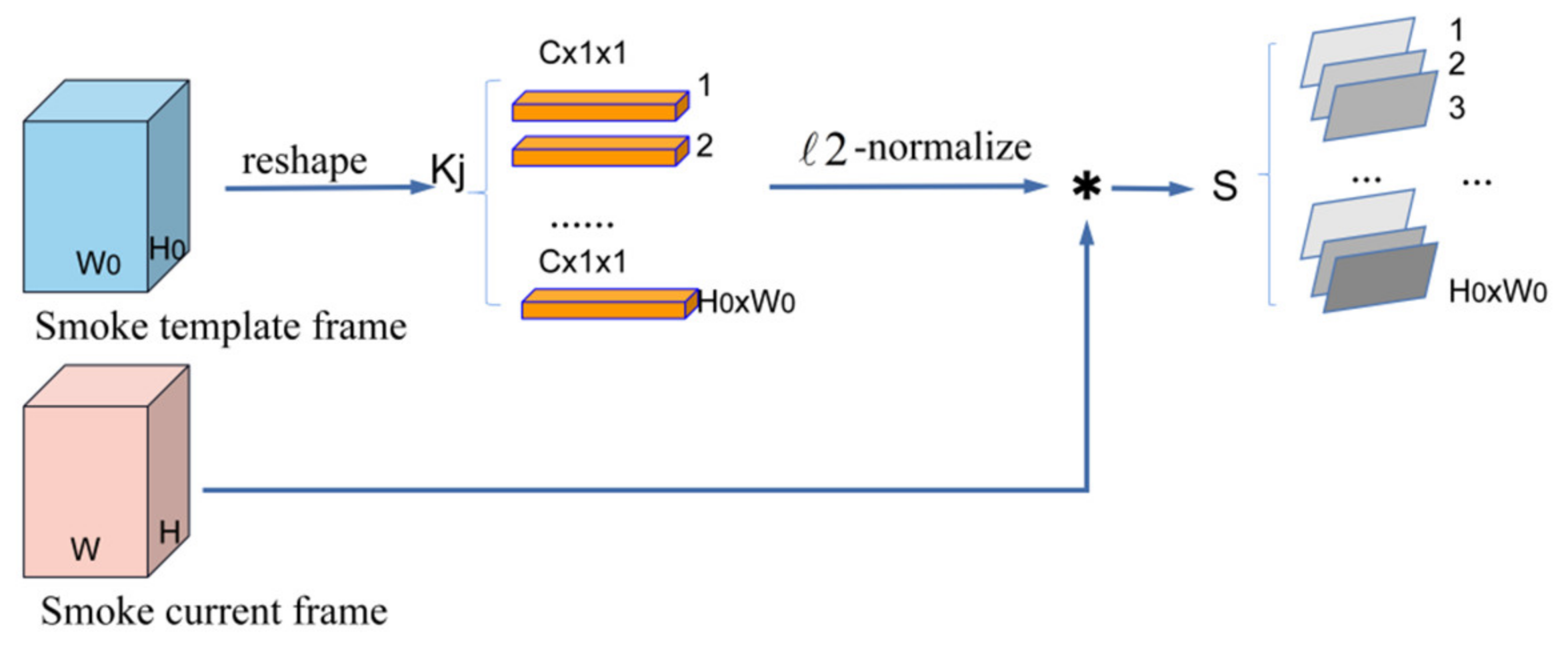

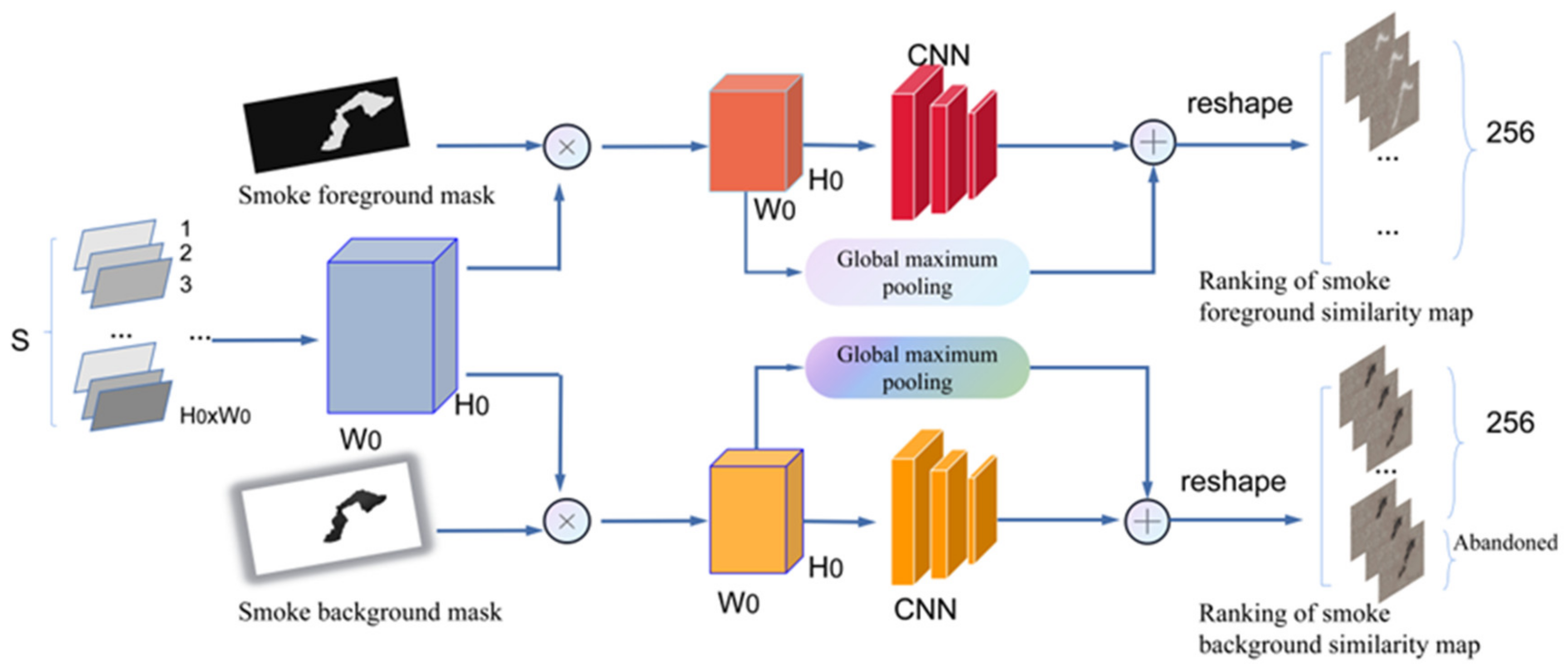

2.3. Smoke Ranking Module

2.4. Datasets

2.5. Model Evaluation

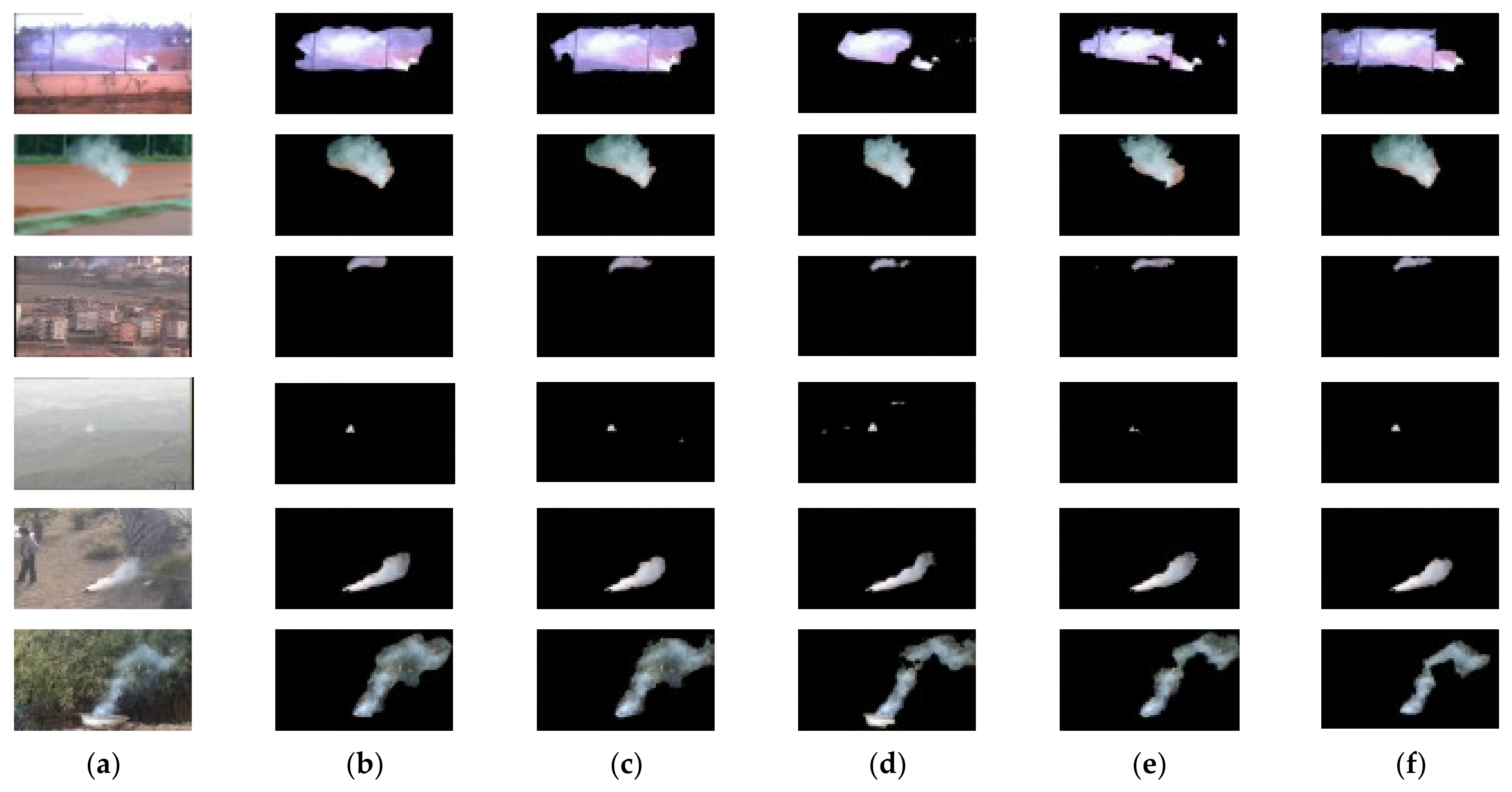

3. Results

3.1. Training

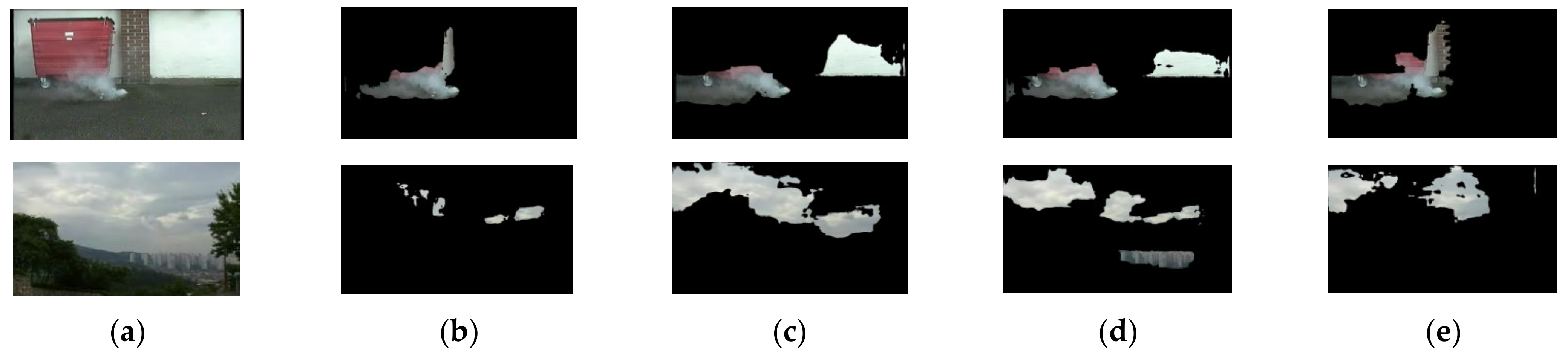

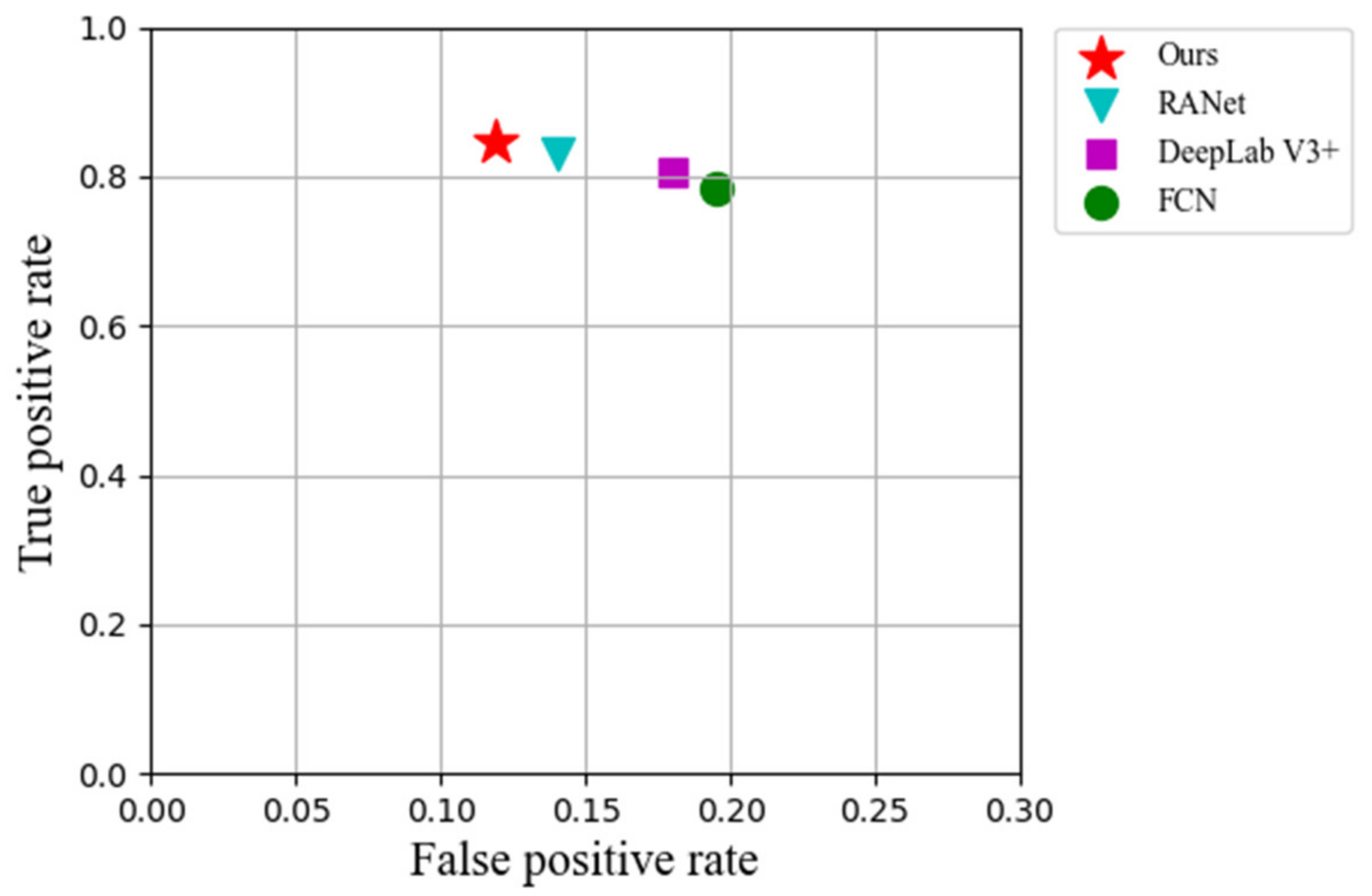

3.2. Comparison

3.3. Anti-Interference Test

4. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Layer Name | Input | Output | |
|---|---|---|---|
| Conv1 | 7 × 7, 64, stride 2 | | |
| Pool Conv2_x | 3 × 3 max pool, stride 2 | | |
| Conv3_x | | | |
| Conv4_x | | | |

| Dataset | Sample Type | Total Number of Images | Source |
|---|---|---|---|
| Dataset 1 | Smoke samples | 6000 | Recorded by us |
| Dataset 2 | Smoke-like samples | 1500 | Recorded by us |
| Dataset 3 | Smoke samples | 18,000 | Public dataset |
| Dataset 4 | Smoke-like samples | 4500 | Public dataset |

| Algorithm | Video 1 | Video 2 | Video 3 | Video 4 | Video 5 | Video 6 | Mean |
|---|---|---|---|---|---|---|---|
| FCN | 64.5% | 82.6% | 81.0% | 72.8% | 81.2% | 69.4% | 75.25% |
| Deeplab V3+ | 67.8% | 87.7% | 82.9% | 73.1% | 87.6% | 73.9% | 78.83% |
| RANet | 70.4% | 86.9% | 83.8% | 78.3% | 86.1% | 75.6% | 80.18% |
| Ours | 78.7% | 87.2% | 86.7% | 78.7% | 87.3% | 82.5% | 83.52% |

| Algorithm | Video 1 | Video 2 | Video 3 | Video 4 | Video 5 | Video 6 | Mean |
|---|---|---|---|---|---|---|---|
| FCN | 70.2% | 85.1% | 85.2% | 76.7% | 85.7% | 76.9% | 79.97% |
| Deeplab V3+ | 72.5% | 89.3% | 86.3% | 75.5% | 89.6% | 78.3% | 81.92% |
| RANet | 73.9% | 88.3% | 87.6% | 80.1% | 88.9% | 79.4% | 83.03% |
| Ours | 79.8% | 89.1% | 88.5% | 81.9% | 89.3% | 85.9% | 85.75% |
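The Mean column in the two comparison tables above appears to be the unweighted average of the six per-video scores. The following minimal sketch recomputes it from the tabulated values as a consistency check. The names `TABLE_A`, `TABLE_B`, `recomputed_means`, and `mismatches` are hypothetical labels introduced here for illustration; the tables carry no metric names in this text, so none are assumed.

```python
# Per-video scores (percent) copied from the two comparison tables,
# paired with the Mean value reported in each row.
# TABLE_A / TABLE_B are illustrative names, not labels from the article.
TABLE_A = {
    "FCN":         ([64.5, 82.6, 81.0, 72.8, 81.2, 69.4], 75.25),
    "Deeplab V3+": ([67.8, 87.7, 82.9, 73.1, 87.6, 73.9], 78.83),
    "RANet":       ([70.4, 86.9, 83.8, 78.3, 86.1, 75.6], 80.18),
    "Ours":        ([78.7, 87.2, 86.7, 78.7, 87.3, 82.5], 83.52),
}
TABLE_B = {
    "FCN":         ([70.2, 85.1, 85.2, 76.7, 85.7, 76.9], 79.97),
    "Deeplab V3+": ([72.5, 89.3, 86.3, 75.5, 89.6, 78.3], 81.92),
    "RANet":       ([73.9, 88.3, 87.6, 80.1, 88.9, 79.4], 83.03),
    "Ours":        ([79.8, 89.1, 88.5, 81.9, 89.3, 85.9], 85.75),
}


def recomputed_means(table):
    """Return the unweighted mean of the per-video scores for each algorithm."""
    return {name: sum(scores) / len(scores) for name, (scores, _) in table.items()}


def mismatches(table, tol=0.01):
    """List algorithms whose recomputed mean differs from the reported one by tol or more."""
    means = recomputed_means(table)
    return [name for name, (_, reported) in table.items()
            if abs(means[name] - reported) >= tol]


if __name__ == "__main__":
    for label, table in (("first table", TABLE_A), ("second table", TABLE_B)):
        for name, mean in recomputed_means(table).items():
            print(f"{label}: {name}: recomputed mean = {mean:.2f}%")
        print(f"{label}: rows with inconsistent Mean: {mismatches(table)}")
```

An empty mismatch list for both tables indicates that the reported Mean values agree with a simple average over the six test videos, to within rounding.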

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ding, Z.; Zhao, Y.; Li, A.; Zheng, Z. Spatial–Temporal Attention Two-Stream Convolution Neural Network for Smoke Region Detection. Fire 2021, 4, 66. https://0-doi-org.brum.beds.ac.uk/10.3390/fire4040066