1. Introduction

The removal of plastic contamination in cotton lint is an issue of top priority for the U.S. cotton industry. One of the main sources of plastic contamination appearing in marketable cotton bales at the U.S. Department of Agriculture's classing office is the plastic used to wrap cotton modules produced by the new John Deere round module harvesters. Despite diligent efforts by cotton ginning personnel to remove all plastic encountered while unwrapping the seed cotton modules, plastic still finds its way into the cotton gin's processing system. Plastic contamination in cotton is thought to be a major contributor to the loss of the US$0.02/kg premium that U.S. cotton used to obtain on the international market due to its reputation as one of the cleanest cottons in the world. Current data show that U.S. cotton is now trading at a US$0.01/kg discount relative to the market, a total loss of US$0.034/kg with respect to market conditions prior to the widespread adoption of plastic-wrapped cotton modules. The cost of this loss to U.S. producers is in excess of US$750 million annually [1]. To help address this loss and mitigate plastic contamination at the cotton gin, inspection systems are being developed that use low-cost color cameras to observe plastic at various locations throughout the cotton gin, both to detect plastic wrap and to control plastic removal machinery.

This report covers the development of an image dataset that can be used to further improve the modern detection systems needed to control removal machinery. The image dataset is included as a supplement to this report, as an open-source resource to help advance the science and lower the barriers to development. The annotated image dataset provided with this technical note is supplied in the Pascal VOC format and is ready for import into TensorFlow [2] or Keras [3] deep learning model training and development environments. The deep learning format was selected given the rapid advancements recently demonstrated across a diverse range of disciplines [4,5,6]. The objective of this paper is to provide the background and relevant information in support of the auxiliary image dataset included with this paper. The value of a deep learning model depends in no small measure on the image datasets used to build it, and collecting such data requires an extensive amount of time and research funding. To ensure the value of an image dataset, background information on how the images were collected and under what specific conditions (lighting level, lighting color temperature, image sensor used, sensor settings, etc.) is critically important. This paper provides that key information so that future researchers developing plastic contamination models can leverage this image dataset to achieve their objectives without having to duplicate the research undertaken to obtain the images presented herein.
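Because the annotations are supplied in Pascal VOC format, each image has an XML file listing the image size and, for every object, a class `<name>` and a `<bndbox>` with pixel coordinates `xmin`, `ymin`, `xmax`, and `ymax`. The sketch below, which uses only Python's standard library, shows one possible way to read such a file and convert its boxes into normalized `(ymin, xmin, ymax, xmax)` tuples, the ordering expected by TensorFlow functions such as `tf.image.draw_bounding_boxes`. The class names in `CLASS_IDS`, the function name, and the sample file name are hypothetical; the exact `<name>` strings used in the supplied dataset should be checked before use.

```python
import xml.etree.ElementTree as ET

# Hypothetical mapping from VOC <name> strings to integer class ids; the
# actual names used in the supplied annotation files should be verified.
CLASS_IDS = {"plastic": 0, "cotton": 1, "tray": 2}


def parse_voc(xml_text):
    """Return (filename, [(class_id, (ymin, xmin, ymax, xmax)), ...]).

    Box coordinates are divided by the image size so they lie in [0, 1].
    """
    root = ET.fromstring(xml_text)
    width = float(root.findtext("size/width"))
    height = float(root.findtext("size/height"))
    objects = []
    for obj in root.findall("object"):
        name = obj.findtext("name").strip()
        box = obj.find("bndbox")
        xmin = float(box.findtext("xmin")) / width
        ymin = float(box.findtext("ymin")) / height
        xmax = float(box.findtext("xmax")) / width
        ymax = float(box.findtext("ymax")) / height
        objects.append((CLASS_IDS[name], (ymin, xmin, ymax, xmax)))
    return root.findtext("filename"), objects


# Minimal example annotation (hypothetical file name and box).
SAMPLE = """<annotation>
  <filename>example.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>plastic</name>
    <bndbox><xmin>64</xmin><ymin>48</ymin><xmax>320</xmax><ymax>240</ymax></bndbox>
  </object>
</annotation>"""

filename, objects = parse_voc(SAMPLE)
print(filename, objects)  # example.jpg [(0, (0.1, 0.1, 0.5, 0.5))]
```

Normalizing by the image size keeps the boxes valid if the images are later resized for a particular network input.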

2. Materials and Methods

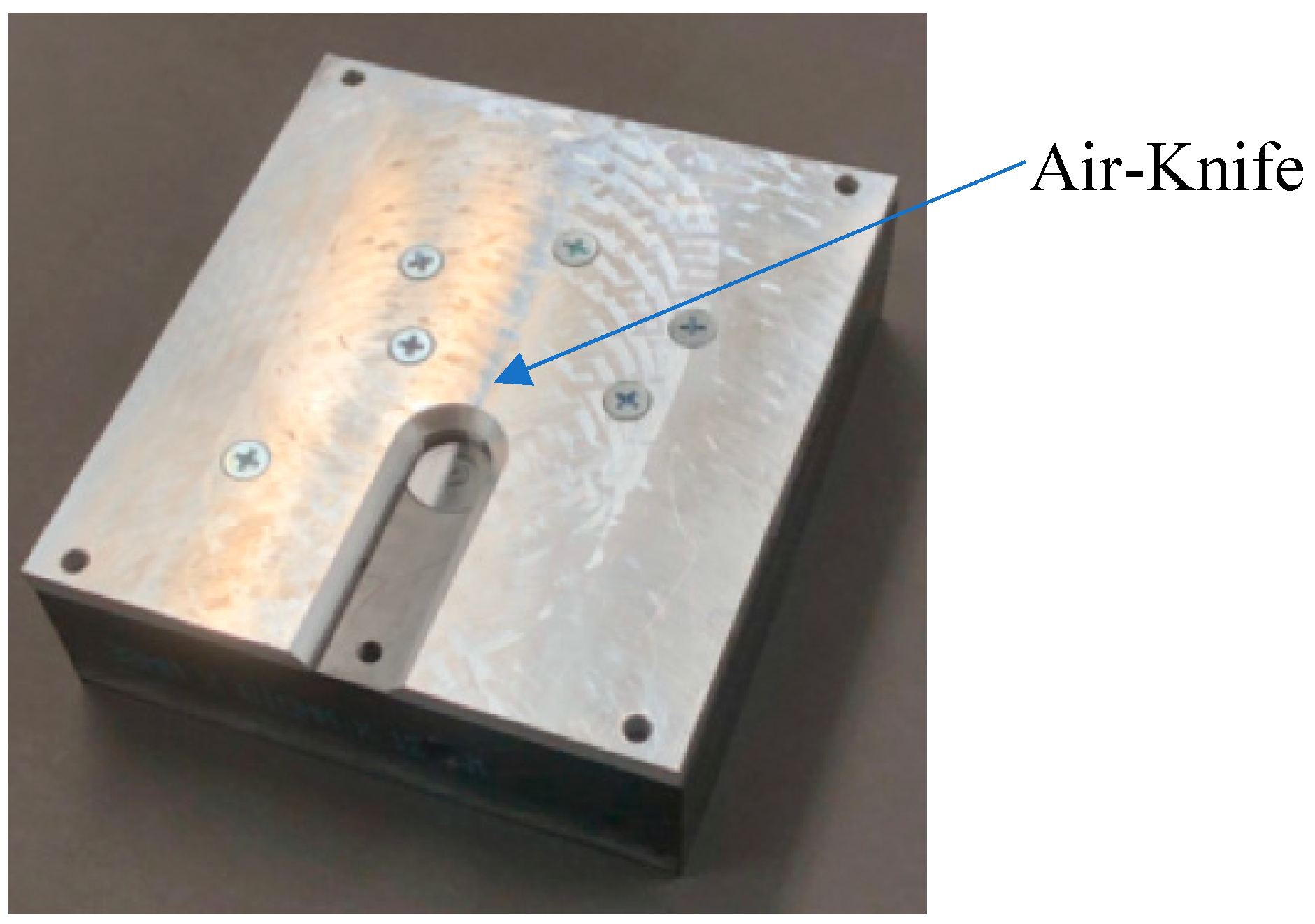

To capture images, a prototype system was developed and deployed at a commercial cotton gin. To keep the optics clean in the high-dust environment of a cotton gin, a custom camera housing was developed with an integral air knife directed across the optics to keep them clear of dust and debris (Figure 1).

The camera housing also held the computer node, which comprised a low-cost embedded processing board with a Broadcom BCM2837 ARM central processing unit (CPU) configured to run the Debian operating system (OS). The image acquisition software was written in C++. The camera used a low-cost cell-phone rolling-shutter image sensor (Sony IMX219PQ). To minimize the pipeline data transport delay between the imager and the CPU, a fast camera serial interface (CSI) bus was used to couple the camera to the Mobile Industry Processor Interface (MIPI) embedded in the CPU. With cotton flowing under the camera at 1 m/s, the imager was configured to run at 40 frames per second (FPS) with a resolution of 640 × 480 pixels and an exposure time of 500 µs.
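The interplay between frame rate, cotton speed, and exposure time can be checked with simple arithmetic. The sketch below assumes the cotton moves uniformly at 1 m/s through the field of view and ignores rolling-shutter skew; the function names are illustrative. At 40 FPS the frame period is 25 ms, which bounds the usable exposure time, the cotton advances 25 mm between frames, and a 500 µs exposure smears moving cotton by about 0.5 mm.

```python
# Acquisition settings from the text (exposure taken as 500 µs).
FPS = 40.0           # frames per second
SPEED_M_S = 1.0      # cotton speed under the camera, m/s
EXPOSURE_S = 500e-6  # exposure time, s


def frame_period_s(fps):
    """Time between frame starts; the exposure cannot exceed this."""
    return 1.0 / fps


def travel_per_frame_m(speed_m_s, fps):
    """Distance the cotton advances between consecutive frames."""
    return speed_m_s / fps


def motion_blur_m(speed_m_s, exposure_s):
    """Distance the cotton moves while a pixel row is exposing (smear length)."""
    return speed_m_s * exposure_s


period = frame_period_s(FPS)
assert EXPOSURE_S < period, "exposure must be shorter than the frame period"
print(f"frame period      {period * 1e3:.1f} ms")
print(f"travel per frame  {travel_per_frame_m(SPEED_M_S, FPS) * 1e3:.1f} mm")
print(f"motion blur       {motion_blur_m(SPEED_M_S, EXPOSURE_S) * 1e3:.2f} mm")
```

A short exposure relative to the frame period is what keeps fast-moving cotton sharp enough for texture to remain visible.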

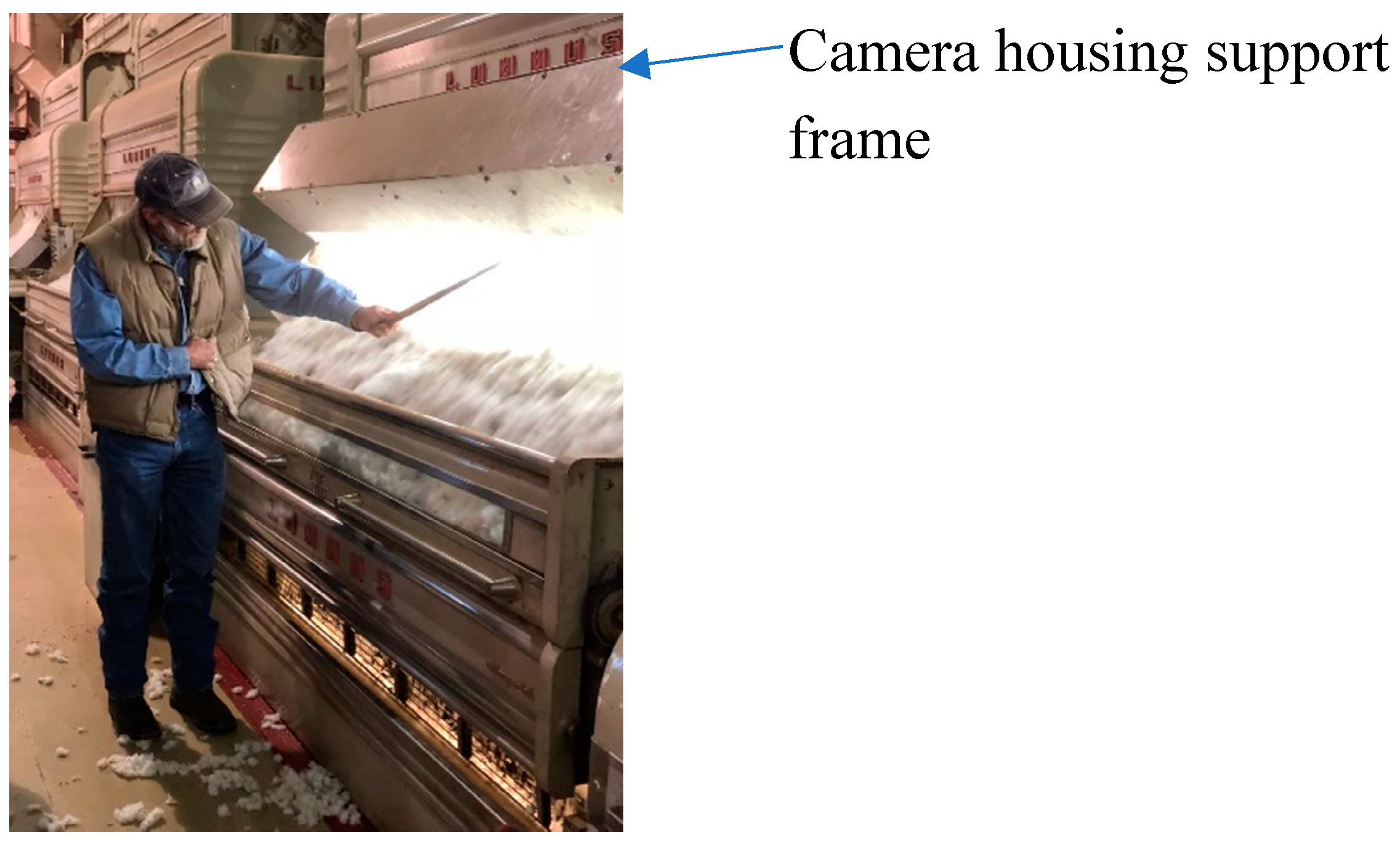

To span the entire 2.4 m width of the cotton gin-stand feeder, a support frame was constructed to hold the lights and the camera housings. The lighting was configured to provide 5000 K illumination ranging from 628 to 892 lux, with a mean of 772 lux and a standard deviation of 110 lux (14% of the mean). The camera housings were spaced to provide six imaging nodes across the feeder, and the optics of each node were configured to view 0.4 m of cotton along the long (horizontal) axis of the image.
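The imaging geometry above implies a few derived quantities that may help when choosing model input sizes or object scales. The sketch below assumes each node's 0.4 m view spans the 640-pixel image width and, additionally, that pixels are square and cotton flows along the 480-pixel image axis (both are assumptions not stated in the text). Under these assumptions each pixel covers about 0.625 mm of cotton, each frame covers about 0.3 m in the flow direction, and the lighting's coefficient of variation is about 14%, matching the stated figure.

```python
# Geometry and lighting values from the text.
FEEDER_WIDTH_M = 2.4
NODES = 6
IMAGE_W_PX, IMAGE_H_PX = 640, 480
LUX_MEAN, LUX_SD = 772.0, 110.0


def view_width_per_node_m(feeder_width_m, nodes):
    """Feeder width covered by each imaging node when nodes tile the width."""
    return feeder_width_m / nodes


def mm_per_pixel(view_width_m, pixels):
    """Ground sample distance along the image axis spanning view_width_m."""
    return 1000.0 * view_width_m / pixels


view_m = view_width_per_node_m(FEEDER_WIDTH_M, NODES)  # ~0.4 m per node
gsd_mm = mm_per_pixel(view_m, IMAGE_W_PX)              # ~0.625 mm per pixel
# Assumption: square pixels, with cotton flowing along the 480-pixel axis.
along_flow_m = IMAGE_H_PX * gsd_mm / 1000.0            # ~0.3 m per frame
lux_cv = LUX_SD / LUX_MEAN                              # ~0.14 (14 % of mean)

print(f"view per node {view_m:.3f} m, {gsd_mm:.3f} mm/px, "
      f"{along_flow_m:.2f} m along flow, lighting CV {lux_cv:.1%}")
```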

Figure 2 shows manual testing of the camera system to assess detectability during gin operation.

The camera detection system was run for the duration of the 2018 and 2019 cotton ginning seasons at a commercial cotton gin. Example images of plastic contamination captured by the system are shown in Figure 3. Using these captured images, an image dataset was created and hand-annotated with bounding boxes surrounding each item of interest in every image, so that the dataset could be used to train deep learning models with standard tools such as Keras, PyTorch, or TensorFlow. The image dataset annotations comprised three classes (plastic, cotton, and tray background), with:

- 401 occurrences of plastic contamination;
- 4751 occurrences of seed cotton;
- 2847 occurrences of aluminum tray (background).

The seed cotton class included not only white seed cotton but also sticks, leaf trash, and cotton carpel segments (burs).
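Since the class totals above are the main summary of the dataset, it may be useful to recompute them directly from the annotation files after download. The sketch below assumes the Pascal VOC XML files sit together in one directory (a hypothetical layout) and tallies the `<name>` of every `<object>`; the class keys used for the reported totals are likewise hypothetical. Applied to the reported totals, it also shows the class balance: plastic makes up about 5% of the 7999 annotated objects, a degree of imbalance that may be worth accounting for during training.

```python
import collections
import pathlib
import xml.etree.ElementTree as ET


def count_classes(annotation_dir):
    """Count <object><name> entries across every *.xml file in a directory."""
    counts = collections.Counter()
    for path in sorted(pathlib.Path(annotation_dir).glob("*.xml")):
        root = ET.parse(path).getroot()
        for name in root.findall("object/name"):
            counts[name.text.strip()] += 1
    return counts


def class_fractions(counts):
    """Share of each class in the total number of annotated objects."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


# Object counts reported for the dataset (class labels are hypothetical keys).
reported = collections.Counter(plastic=401, cotton=4751, tray=2847)
for name, share in class_fractions(reported).items():
    print(f"{name:8s} {reported[name]:5d} {share:6.1%}")
```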

While the current prototype detection system, which uses traditional color classifiers, can detect most of the common module-wrap plastic colors (blue, green, pink, and yellow), it struggles when cotton is more degraded and becomes yellow-spotted: the color overlap is then significant, leading to an unacceptably large number of false positives. In commercial trials, classification rates have been found to vary from 90% in very clean white cotton to 75% in cotton with high levels of leaves, burs, sticks, and yellow spotting. Another significant contaminant neglected by current image classifiers is white grocery bags, which cannot be separated from the white cotton background by color. White and black plastic are also problematic because, in the L*a*b* color space used by the author's classifier, both fall in exactly the same place as cotton (a* = 0, b* = 0). While the luminance channel could be used, in practice the white cotton lying in shadow at the edges of the cotton bolls appears grey, which is also very near the center (a* = 0, b* = 0). Thus, while black is an easy color for the human visual system to distinguish, a machine vision system using L*a*b* or similar color spaces, such as hue-saturation-value (HSV), hue-saturation-brightness (HSB), or hue-saturation-lightness (HSL), will have similar difficulties. Hence, there is still a significant need for improvements in classifier development. A deep learning classifier that responds primarily to texture differences is hoped to solve some of these issues and thereby improve classification rates.
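The chroma argument above can be checked numerically. The sketch below converts a few illustrative sRGB colors to CIE L*a*b* using the standard sRGB/D65 formulas (a common convention; the author's classifier may use a different implementation or scaling) and also reports HSV saturation from Python's `colorsys`. The sample colors are assumptions, not measurements from the dataset. White, grey, and black all land at a* ≈ 0, b* ≈ 0 with zero saturation, differing only in lightness, whereas a saturated blue wrap lies far from the neutral axis.

```python
import colorsys

# sRGB (D65) to CIE XYZ matrix and D65 reference white.
M = ((0.4124564, 0.3575761, 0.1804375),
     (0.2126729, 0.7151522, 0.0721750),
     (0.0193339, 0.1191920, 0.9503041))
WHITE = (0.95047, 1.00000, 1.08883)


def srgb_to_linear(c):
    """Undo the sRGB transfer curve for one 8-bit channel value."""
    c = c / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def f(t):
    """CIE L*a*b* companding function."""
    delta = 6.0 / 29.0
    return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3 * delta ** 2) + 4.0 / 29.0


def srgb_to_lab(rgb):
    """Convert an 8-bit (R, G, B) triple to (L*, a*, b*)."""
    lin = [srgb_to_linear(c) for c in rgb]
    x, y, z = (sum(m * v for m, v in zip(row, lin)) / w for row, w in zip(M, WHITE))
    fx, fy, fz = f(x), f(y), f(z)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


# Illustrative colors (not measured from the dataset).
samples = {
    "white plastic / white lint": (255, 255, 255),
    "shadowed lint (grey)": (128, 128, 128),
    "black plastic": (0, 0, 0),
    "blue module wrap": (0, 0, 255),
}
for label, rgb in samples.items():
    L, a, b = srgb_to_lab(rgb)
    h, s, v = colorsys.rgb_to_hsv(*(c / 255.0 for c in rgb))
    print(f"{label:28s} L*={L:6.1f} a*={a:6.1f} b*={b:6.1f}  HSV s={s:.2f}")
```

Only L* (or V) separates the neutral colors, and shadowing on the cotton shifts exactly that channel, which is why a color-only classifier has little to work with here.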

To reach the next level of detectability, namely white or near-white plastic against a background of white cotton, the detection method needs to transition from traditional machine learning algorithms that rely on color differences to one that relies on texture differences. Training deep learning models such as convolutional neural networks (CNNs) requires a large dataset in which every object of each class of interest is identified and marked out as a sub-image by a bounding box. The coordinates of each bounding box, along with the associated class of each object in every image, are provided to the CNN training algorithm through an annotation text file.
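The annotation file described above can be illustrated with a minimal Pascal VOC writer. The sketch below, using only Python's standard library, emits the core VOC fields (file name, image size, and one `<object>` per bounding box with its class `<name>` and pixel coordinates) and rejects boxes that fall outside the image. The function name, class names, and file name are hypothetical; annotation tools commonly write additional VOC fields (such as `folder`, `pose`, `truncated`, and `difficult`) that are omitted here.

```python
import xml.etree.ElementTree as ET


def make_voc_annotation(filename, width, height, objects, depth=3):
    """Build a minimal Pascal VOC annotation as an XML string.

    objects: iterable of (class_name, xmin, ymin, xmax, ymax) in pixels.
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", depth)):
        ET.SubElement(size, tag).text = str(value)
    for name, xmin, ymin, xmax, ymax in objects:
        # Each box must be non-empty and lie inside the image.
        if not (0 <= xmin < xmax <= width and 0 <= ymin < ymax <= height):
            raise ValueError(f"invalid box for {name!r}: {(xmin, ymin, xmax, ymax)}")
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = name
        box = ET.SubElement(obj, "bndbox")
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), (xmin, ymin, xmax, ymax)):
            ET.SubElement(box, tag).text = str(int(value))
    return ET.tostring(root, encoding="unicode")


# Hypothetical 640 x 480 frame with one plastic and one cotton box.
xml_text = make_voc_annotation(
    "frame_0001.jpg", 640, 480,
    [("plastic", 120, 80, 260, 190), ("cotton", 300, 40, 520, 300)],
)
print(xml_text)
```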

Figure 4 shows an example of one of the annotated images, where the bounding-box colors denote the different classes (yellow: tray, red: cotton, purple: plastic). Because texture differences are the proposed basis for detection, the included dataset containing yellow plastic can be used to explore this approach by converting the color images to grayscale when importing them into the deep learning tools. This ensures that the deep learning algorithms cannot use color during back-propagation training of the CNN classifiers.
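The grayscale conversion described above could be applied at import time as in the sketch below, which assumes images are loaded as NumPy arrays of shape (height, width, 3). It uses the ITU-R BT.601 luma weights (0.2989, 0.5870, 0.1140), the same weights used by `tf.image.rgb_to_grayscale`; in a TensorFlow pipeline, that function followed by `tf.image.grayscale_to_rgb` would be an equivalent choice. Replicating the gray channel three times is an optional design choice, assumed here so that models expecting three-channel input can still be used while receiving no color information. The function name is hypothetical.

```python
import numpy as np

# ITU-R BT.601 luma weights (as used by tf.image.rgb_to_grayscale).
LUMA_WEIGHTS = np.array([0.2989, 0.5870, 0.1140], dtype=np.float32)


def to_grayscale(rgb, keep_three_channels=True):
    """Convert an (H, W, 3) RGB image to grayscale.

    If keep_three_channels is True, the gray channel is copied into all three
    channels so the output shape matches the input, but R == G == B.
    """
    rgb = np.asarray(rgb, dtype=np.float32)
    gray = (rgb @ LUMA_WEIGHTS)[..., np.newaxis]   # (H, W, 1)
    if keep_three_channels:
        gray = np.repeat(gray, 3, axis=-1)         # (H, W, 3)
    return gray


# Yellow plastic and white lint pixels keep different gray levels,
# but their hue information is discarded.
image = np.array([[[255, 255, 0], [255, 255, 255]]], dtype=np.uint8)  # (1, 2, 3)
gray = to_grayscale(image)
print(gray.shape, gray[0, :, 0])
```

Because yellow plastic still differs from white lint in gray level, a model trained this way may not rely on texture alone; comparing against color-trained models on the same split is one way to gauge how much texture contributes.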