PDAM–STPNNet: A Small Target Detection Approach for Wildland Fire Smoke through Remote Sensing Images

Abstract

1. Introduction

- (1)

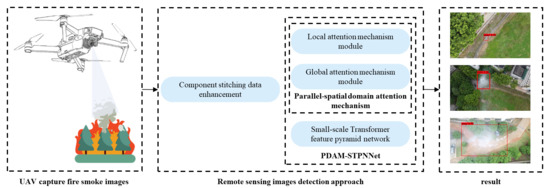

- Component stitching data enhancement is used to generate images with smaller scale targets in a scaled collage. The collage generates images of the same size as the original images, ensuring that the model can effectively detect small targets of forest fire smoke without incurring additional overheads to the model.

- (2) A parallel spatial domain attention mechanism (PDAM) is proposed, with a local attention mechanism module and a global attention mechanism module as parallel sub-modules. The local attention module explores the local deep texture features of smoke and the relationships between features, while the global attention module focuses on the global texture features of smoke. Each module takes half of the channels of the feature map, and their outputs are fused by concatenation so that both local and global smoke texture features are fully considered, which improves the detection results.

- (3) A small-scale transformer feature pyramid network (STPN) is proposed to capture rich global and contextual information. It aims to improve the detection of small smoke targets in forest fire smoke detection tasks and to reduce the misdetection of small smoke targets as far as possible.

2. Materials and Methods

2.1. Study Area

2.2. Component Stitching Data Enhancement
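The stitching idea in contribution (1) can be illustrated with a small sketch. It assumes k = n² source images of identical size H × W (with H and W divisible by n), block-average downscaling, and boxes given as (x1, y1, x2, y2) pixel coordinates; the function `stitch` is hypothetical and this is not the authors' implementation. Each image shrinks by a factor of n per side, so every smoke target shrinks too, while the collage keeps the original input size.

```python
import numpy as np


def stitch(images, boxes_per_image, n):
    """Tile k = n * n images into one collage with the size of a single image.

    images: list of n*n arrays of shape (H, W, C); H and W must be divisible by n.
    boxes_per_image: list of (m_i, 4) arrays of (x1, y1, x2, y2) pixel boxes.
    Returns the collage and all transformed boxes as one (sum m_i, 4) array.
    """
    if len(images) != n * n:
        raise ValueError("expected exactly n * n images")
    h, w, c = images[0].shape
    th, tw = h // n, w // n  # size of each tile inside the collage
    collage = np.zeros((h, w, c), dtype=np.float64)
    out_boxes = []
    for idx, (img, boxes) in enumerate(zip(images, boxes_per_image)):
        row, col = divmod(idx, n)
        # Downscale by averaging each n x n block of pixels.
        small = img.reshape(th, n, tw, n, c).mean(axis=(1, 3))
        collage[row * th:(row + 1) * th, col * tw:(col + 1) * tw] = small
        # Shrink the boxes by 1/n, then shift them to this tile's offset.
        b = np.asarray(boxes, dtype=np.float64).reshape(-1, 4) / n
        b += np.array([col * tw, row * th, col * tw, row * th], dtype=np.float64)
        out_boxes.append(b)
    return collage, np.concatenate(out_boxes, axis=0)
```

With n = 1 the function returns the input image and boxes unchanged (no stitching); with n = 2 four images share one input, and every target covers a quarter of its original area.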

2.3. PDAM–STPNNet

2.3.1. Parallel Spatial Domain Attention Mechanism (PDAM)
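The channel-split design described in contribution (2) can be sketched as follows. The concrete layers inside PDAM's local and global branches are not given in this text, so both branches here are assumptions chosen for illustration. The hypothetical `local_attention` gates each position with a sigmoid of a 3 × 3 neighbourhood average of the channel-mean map, and the hypothetical `global_attention` is a non-local style self-attention over all spatial positions with identity projections and a residual connection. Only the overall structure (split the channels in half, run the two branches in parallel, concatenate) follows the description of PDAM.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def local_attention(x, k=3):
    """Hypothetical local branch on x of shape (C, H, W).

    The channel-mean map is averaged over k x k windows (zero padding at the
    borders) and passed through a sigmoid to form a spatial gate in (0, 1).
    """
    c, h, w = x.shape
    m = x.mean(axis=0)
    mp = np.pad(m, k // 2)  # constant (zero) padding on both spatial axes
    window = np.zeros((h, w))
    for dy in range(k):
        for dx in range(k):
            window += mp[dy:dy + h, dx:dx + w]
    gate = sigmoid(window / (k * k))
    return x * gate  # the (H, W) gate broadcasts over channels


def global_attention(x):
    """Hypothetical global branch: self-attention over all H*W positions."""
    c, h, w = x.shape
    tokens = x.reshape(c, h * w).T  # (HW, C)
    scores = tokens @ tokens.T / np.sqrt(c)  # (HW, HW) pairwise affinities
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    out = weights @ tokens  # every position aggregates all other positions
    return x + out.T.reshape(c, h, w)  # residual connection


def pdam(x):
    """Split channels in half, apply both branches in parallel, concatenate."""
    half = x.shape[0] // 2
    return np.concatenate([local_attention(x[:half]), global_attention(x[half:])], axis=0)
```

Because each branch sees only half of the channels, the global branch's attention matrix is computed on a thinner feature map than a full-width non-local block would use.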

2.3.2. Small-Scale Transformer Feature Pyramid Network (STPN)
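A minimal sketch of the transformer building block that STPN relies on, assuming a single-head, post-norm encoder layer in the style of Vaswani et al., with no positional encoding and randomly initialised weights. How STPN wires such layers into the feature pyramid is not described in this text, so `encode_feature_map` only shows how a (C, H, W) feature map can be flattened into H·W tokens, mixed globally by self-attention, and reshaped back. All function and parameter names are hypothetical.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def encoder_layer(tokens, params):
    """One post-norm transformer encoder layer with single-head attention.

    tokens: (N, D) sequence; params: dict of weight matrices (hypothetical names).
    """
    q = tokens @ params["wq"]
    k = tokens @ params["wk"]
    v = tokens @ params["wv"]
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (N, N): every token sees all others
    x = layer_norm(tokens + attn @ v @ params["wo"])  # residual + norm
    ffn = np.maximum(0.0, x @ params["w1"]) @ params["w2"]  # ReLU feed-forward
    return layer_norm(x + ffn)


def encode_feature_map(fmap, params):
    """Flatten a (C, H, W) feature map into H*W tokens, encode, reshape back."""
    c, h, w = fmap.shape
    tokens = fmap.reshape(c, h * w).T
    return encoder_layer(tokens, params).T.reshape(c, h, w)


def init_params(d, d_ff, seed=0):
    rng = np.random.default_rng(seed)
    shapes = {"wq": (d, d), "wk": (d, d), "wv": (d, d), "wo": (d, d),
              "w1": (d, d_ff), "w2": (d_ff, d)}
    return {name: rng.standard_normal(s) / np.sqrt(s[0]) for name, s in shapes.items()}
```

Because the attention matrix has (H·W)² entries, such layers are usually applied to low-resolution pyramid levels; this is a general property of self-attention, not a detail confirmed for STPN.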

2.4. UAV Forest Fire Monitoring System

3. Results

3.1. Dataset Acquisition

3.2. Experimental Preparation

3.2.1. Assessment Indicators
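The indicators listed under Abbreviations (TP, FP, FN, IoU, AP) follow standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and a detection counts as a TP when its IoU with a not-yet-matched ground-truth box reaches a threshold (0.5 for mAP50, 0.75 for mAP75). The sketch below computes single-class AP with all-point interpolation; averaging it over IoU thresholds from 0.50 to 0.95 in steps of 0.05 gives a COCO-style mAP. That the reported mAP is averaged this way is an assumption inferred from the separate mAP, mAP50 and mAP75 columns, and the official COCO evaluator uses 101-point interpolation, so its numbers can differ slightly.

```python
def iou(a, b):
    """Intersection over Union of two boxes in (x1, y1, x2, y2) format."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets, gts, thr=0.5):
    """Single-class AP with all-point interpolation.

    dets: list of (score, box); gts: list of boxes. Each ground truth can be
    matched at most once; unmatched detections count as false positives.
    """
    dets = sorted(dets, key=lambda d: d[0], reverse=True)
    matched = [False] * len(gts)
    tp, fp, precisions, recalls = 0, 0, [], []
    for _, box in dets:
        best, best_j = 0.0, -1
        for j, g in enumerate(gts):  # best-overlapping unmatched ground truth
            o = iou(box, g)
            if not matched[j] and o > best:
                best, best_j = o, j
        if best_j >= 0 and best >= thr:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / len(gts) if gts else 0.0)
    # Make precision non-increasing, then integrate it over recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def coco_style_map(dets, gts):
    """Mean of AP over IoU thresholds 0.50, 0.55, ..., 0.95 (simplified)."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)
```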

3.2.2. Experimental Environment

3.2.3. Experimental Setup

3.3. Comparison with YOLOX-L

3.4. Comparison between Different Models

3.5. Exploration of Methodological Effects and Ablation Experiments

3.5.1. Exploring the Effects of a Single Approach

3.5.2. Ablation Experiments with PDAM–STPNNet

3.6. Comparison of Visualisation Results

3.7. Model Performance Comparison on Public Datasets

3.7.1. Wildfire Observers and Smoke Recognition Homepage

3.7.2. Bowfire Dataset

3.8. Practical Application Tests

4. Discussion

4.1. Training and Test Datasets

4.2. Application and Future Work Directions

4.3. Advantages of the Method in This Paper

4.4. Analysis and Outlook

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| IoU | Intersection over Union |
| mIoU | Mean Intersection over Union |
| CNN | Convolutional Neural Network |
| R-CNN | Region-based Convolutional Neural Network |
| SSD | Single Shot Multibox Detector |
| FPN | Feature Pyramid Network |
| FCN | Fully Convolutional Network |
| ResNet | Residual Network |
| UAV | Unmanned Aerial Vehicle |
| SPP | Spatial Pyramid Pooling |
| TP | True Positive |
| FP | False Positive |
| FN | False Negative |
| TN | True Negative |
| AP | Average Precision |
| mAP | Mean Average Precision |
| AR | Average Recall |
| FPS | Frames Per Second |
| PDAM | Parallel spatial domain attention mechanism |
| STPN | Small-scale transformer feature pyramid network |


| Category | Item | Specification |
|---|---|---|
| Hardware environment | CPU | AMD Ryzen 7 5800H with Radeon Graphics |
| | RAM | 64 GB |
| | Video memory | 16 GB |
| | GPU | NVIDIA GeForce RTX 3080 Ti |
| Software environment | OS | Windows 10 |
| | Versions | CUDA Toolkit 11.1; cuDNN 8.0.4; Python 3.8.8; torch 1.8.1; torchvision 0.9.1 |
| Imaging device | Specifications | Angle jitter: ±0.02°; effective pixels: 36 million; phase detection focus: 567; contrast detection focus: 425; viewfinder coverage: 74% |

| Input Image Size | Batch Size | Momentum | Initial Learning Rate | Decay | Epochs |
|---|---|---|---|---|---|
| 608 × 608 | 16 | 0.9 | 0.005 | 0.002 | 150 |
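The hyperparameters in the table above can be collected into a configuration. The optimizer type is not stated, so this sketch assumes SGD with heavy-ball momentum and reads "Decay" as L2 weight decay, using the same update rule as PyTorch's `torch.optim.SGD` with zero dampening. `TrainConfig` and `sgd_momentum_step` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class TrainConfig:
    # Values from the training-setup table; the optimizer choice and the
    # reading of "Decay" as L2 weight decay are assumptions.
    input_size: int = 608
    batch_size: int = 16
    momentum: float = 0.9
    lr: float = 0.005
    weight_decay: float = 0.002
    epochs: int = 150


def sgd_momentum_step(w, grad, velocity, cfg):
    """One SGD step with heavy-ball momentum and L2 weight decay (one scalar weight)."""
    d = grad + cfg.weight_decay * w  # weight decay added to the gradient
    velocity = cfg.momentum * velocity + d  # momentum buffer update
    return w - cfg.lr * velocity, velocity
```

Under these assumptions, the PyTorch equivalent would be `torch.optim.SGD(model.parameters(), lr=0.005, momentum=0.9, weight_decay=0.002)`.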

| Metric | YOLOX-L | PDAM–STPNNet |
|---|---|---|
| mAP (%) | 67.34 | 77.86 |
| mAP50 (%) | 81.15 | 88.01 |
| mAP75 (%) | 71.87 | 80.54 |
| AR | 41.19 | 49.23 |
| FPS | 77.1 | 58.6 |
| Parameters | 54.18 M | 57.19 M |
| GFLOPs | 155.61 | 164.31 |
| Inference time | 14.5 ms | 16.1 ms |
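The trade-off in this comparison can be quantified directly from the reported numbers. The snippet below computes absolute and relative changes from YOLOX-L to PDAM–STPNNet; it only re-expresses the values in the table.

```python
yolox_l = {"mAP": 67.34, "mAP50": 81.15, "mAP75": 71.87, "AR": 41.19,
           "FPS": 77.1, "params_M": 54.18, "GFLOPs": 155.61}
pdam_stpnnet = {"mAP": 77.86, "mAP50": 88.01, "mAP75": 80.54, "AR": 49.23,
                "FPS": 58.6, "params_M": 57.19, "GFLOPs": 164.31}


def deltas(base, new):
    """Absolute change and relative change (%) of each metric from base to new."""
    return {k: (round(new[k] - base[k], 2), round(100 * (new[k] - base[k]) / base[k], 2))
            for k in base}


changes = deltas(yolox_l, pdam_stpnnet)
```

This gives a gain of 10.52 mAP points (about 15.6% relative) for about 5.6% more parameters and GFLOPs, while FPS drops by about 24%.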

| Method | Backbone | mAP | mAP50 | mAP75 | AR | FPS |
|---|---|---|---|---|---|---|
| Two-stage detectors | | | | | | |
| Fast R-CNN | ResNet-101 | 61.18 | 70.24 | 65.05 | 36.31 | - |
| Faster R-CNN | VGG16 | 63.11 | 72.51 | 66.42 | 37.02 | - |
| R-FCN | ResNet-101 | 64.91 | 74.52 | 66.98 | 38.74 | - |
| D-FCN | Aligned-Inception-ResNet | 69.01 | 79.26 | 71.57 | 42.99 | - |
| CoupleNet | ResNet-101 | 63.05 | 72.47 | 66.49 | 36.88 | - |
| FPN | ResNet-101 | 68.15 | 80.83 | 69.94 | 40.43 | - |
| Mask R-CNN | ResNet-101 | 72.11 | 81.96 | 75.00 | 45.19 | - |
| Regionlets | ResNet-101 | 70.97 | 81.68 | 74.26 | 44.82 | - |
| Libra R-CNN | ResNeXt-101 | 70.23 | 80.86 | 73.40 | 44.19 | - |
| SNIPER | ResNet-101 | 71.89 | 81.46 | 74.62 | 44.86 | - |
| Cascade Mask R-CNN | ResNet-152 | 75.04 | 85.65 | 78.79 | 46.83 | - |
| D-RFCN + SNIP | DPN-98 | 76.73 | 86.88 | 80.19 | 47.29 | - |
| One-stage detectors | | | | | | |
| SSD512 | VGG16 | 59.41 | 70.30 | 61.89 | 37.31 | 81.2 |
| DSSD513 | ResNet-101 | 61.62 | 72.79 | 64.53 | 37.96 | 68.1 |
| FSAF | ResNeXt-101 | 76.12 | 85.86 | 76.73 | 46.94 | 22.8 |
| NAS-FPN | AmoebaNet | 79.86 | 90.03 | 81.02 | 49.76 | 19.6 |
| YOLOv3 + ASFF | Darknet53 | 65.81 | 77.10 | 69.52 | 41.64 | 31.4 |
| YOLOv4-L | CSP-Darknet53 | 66.21 | 79.26 | 69.52 | 41.73 | 34.8 |
| YOLOv5-L | CSP-Darknet53 | 65.38 | 78.93 | 69.73 | 41.40 | 82.9 |
| YOLOX-S | CSP-Darknet53 | 62.14 | 75.98 | 66.24 | 39.35 | 96.8 |
| YOLOX-M | CSP-Darknet53 | 63.58 | 77.12 | 67.38 | 39.83 | 87.6 |
| YOLOX-L | CSP-Darknet53 | 67.34 | 81.15 | 71.87 | 41.19 | 77.1 |
| YOLOX-L | AmoebaNet | 69.12 | 82.75 | 73.54 | 42.51 | 24.7 |
| YOLOX-X | CSP-Darknet53 | 68.58 | 82.37 | 73.19 | 41.97 | 62.9 |
| Ours | | | | | | |
| PDAM–STPNNet | AmoebaNet | 76.87 | 87.21 | 79.68 | 48.75 | 17.7 |
| PDAM–STPNNet | CSP-Darknet53 | 77.86 | 88.01 | 80.54 | 49.23 | 58.6 |

| Metric | k = 1² | k = 2² | k = 3² |
|---|---|---|---|
| mAP (%) | 67.34 | 69.21 | 69.07 |
| mAP50 (%) | 81.15 | 83.06 | 82.95 |
| mAP75 (%) | 71.87 | 73.74 | 73.60 |
| AR | 41.19 | 42.07 | 41.91 |
| FPS | 77.1 | 76.2 | 75.9 |
| Parameters | 54.18 M | 54.18 M | 54.18 M |
| GFLOPs | 155.61 | 157.39 | 157.17 |

| Method | mAP | mAP50 | mAP75 | AR |
|---|---|---|---|---|
| BN + ReLU | 69.64 | 83.74 | 74.22 | 42.47 |
| BN + Sigmoid | 69.58 | 83.57 | 74.17 | 42.39 |
| BN + SiLU | 69.96 | 83.91 | 74.53 | 42.68 |
| SN + ReLU | 70.10 | 84.16 | 74.81 | 42.94 |
| SN + Sigmoid | 70.06 | 84.11 | 74.78 | 42.92 |
| SN + SiLU | 70.42 | 84.31 | 75.03 | 43.16 |
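SN here refers to Switchable Normalization (Luo et al.), which normalizes with a learned, softmax-weighted mixture of instance-norm, layer-norm and batch-norm statistics, and SiLU is x·σ(x). The NumPy sketch below shows the training-mode forward pass only (no running averages for inference). The importance logits are passed in rather than learned, and where PDAM–STPNNet places SN + SiLU is not described in this text.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()


def switchable_norm(x, mean_logits, var_logits, gamma, beta, eps=1e-5):
    """Training-mode Switchable Normalization for x of shape (N, C, H, W).

    Mean and variance are softmax-weighted mixes of instance-norm (per N, C),
    layer-norm (per N) and batch-norm (per C) statistics.
    """
    mu_in, var_in = x.mean(axis=(2, 3), keepdims=True), x.var(axis=(2, 3), keepdims=True)
    mu_ln, var_ln = x.mean(axis=(1, 2, 3), keepdims=True), x.var(axis=(1, 2, 3), keepdims=True)
    mu_bn, var_bn = x.mean(axis=(0, 2, 3), keepdims=True), x.var(axis=(0, 2, 3), keepdims=True)
    wm, wv = softmax(mean_logits), softmax(var_logits)  # order: IN, LN, BN
    mu = wm[0] * mu_in + wm[1] * mu_ln + wm[2] * mu_bn
    var = wv[0] * var_in + wv[1] * var_ln + wv[2] * var_bn
    x_hat = (x - mu) / np.sqrt(var + eps)
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)


def silu(x):
    """SiLU (Swish) activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))
```

When the logits strongly favour the BN entry, the function reduces to ordinary batch normalization, which is the BN baseline in the table.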

| Method | mAP | mAP50 | mAP75 | AR | Param |
|---|---|---|---|---|---|
| No improvement | 67.34 | 81.15 | 71.87 | 41.19 | 54.18 M |
| Add SE Attention after CSP_2 | 67.46 | 81.29 | 72.15 | 41.37 | 55.95 M |
| Add CBAM Attention after CSP_2 | 67.59 | 81.34 | 72.28 | 41.54 | 56.17 M |
| Replace CSP_2 with transformer encoder | 69.26 | 82.97 | 74.38 | 42.56 | 53.56 M |
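For reference, the SE attention compared above is the Squeeze-and-Excitation block: global average pooling squeezes each channel to a scalar, a two-layer bottleneck (ReLU, then sigmoid) produces per-channel weights, and the input channels are rescaled by them. The sketch assumes NumPy and externally supplied weights for a reduction ratio r; it does not reproduce the exact configuration used after CSP_2.

```python
import numpy as np


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-Excitation on x of shape (C, H, W).

    w1: (C // r, C) and w2: (C, C // r) for a reduction ratio r.
    """
    s = x.mean(axis=(1, 2))  # squeeze: one value per channel, shape (C,)
    z = np.maximum(0.0, w1 @ s + b1)  # bottleneck FC + ReLU
    gate = 1.0 / (1.0 + np.exp(-(w2 @ z + b2)))  # FC + sigmoid, shape (C,)
    return x * gate[:, None, None]  # excitation: rescale each channel
```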

| Method | mAP | mAP50 | mAP75 | AR | FPS | Param | GFLOPs |
|---|---|---|---|---|---|---|---|
| YOLOX-L | 67.34 | 81.15 | 71.87 | 41.19 | 77.1 | 54.18 M | 155.61 |
| A | 69.21 | 83.06 | 73.74 | 42.07 | 76.2 | 54.18 M | 157.39 |
| B | 70.56 | 84.23 | 75.11 | 43.20 | 66.8 | 56.94 M | 159.13 |
| C | 70.42 | 84.31 | 75.03 | 43.16 | 66.3 | 56.02 M | 158.76 |
| D | 69.26 | 82.97 | 74.38 | 42.56 | 78.4 | 53.56 M | 154.27 |
| A + B | 72.64 | 86.15 | 76.99 | 44.08 | 65.9 | 56.94 M | 161.09 |
| A + C | 72.23 | 85.98 | 77.04 | 43.95 | 65.4 | 56.02 M | 160.87 |
| A + D | 71.39 | 84.68 | 76.56 | 43.42 | 77.3 | 53.56 M | 156.10 |
| B + C | 73.60 | 87.11 | 78.25 | 45.36 | 57.9 | 58.78 M | 163.83 |
| B + D | 72.95 | 86.10 | 77.94 | 45.03 | 68.1 | 56.32 M | 158.19 |
| C + D | 72.48 | 85.92 | 77.63 | 44.89 | 67.6 | 55.98 M | 157.72 |
| A + B + C | 75.83 | 89.26 | 80.43 | 46.84 | 56.7 | 58.78 M | 166.68 |
| A + B + D | 74.96 | 88.35 | 79.56 | 46.30 | 66.8 | 56.32 M | 159.94 |
| A + C + D | 74.81 | 88.24 | 79.58 | 46.22 | 66.1 | 56.13 M | 159.27 |
| B + C + D | 75.91 | 86.12 | 78.49 | 48.11 | 59.4 | 57.19 M | 162.50 |
| A + B + C + D (PDAM–STPNNet) | 77.86 | 88.01 | 80.54 | 49.23 | 58.6 | 57.19 M | 164.31 |

| Experimental Method | Detection Result (a) | Detection Result (b) | Detection Result (c) |
|---|---|---|---|
| YOLOX-L | | | |
| YOLOX-L with component stitching data enhancement | | | |
| YOLOX-L with LAM | | | |
| YOLOX-L with GAM | | | |
| YOLOX-L with STPN | | | |
| PDAM–STPNNet | | | |

| Metric | YOLOX-L | YOLOv4 | YOLOv5 | YOLOR | PDAM–STPNNet |
|---|---|---|---|---|---|
| mAP (%) | 67.13 | 66.79 | 65.77 | 66.26 | 73.81 |
| mAP50 (%) | 79.15 | 78.61 | 77.78 | 78.94 | 86.21 |
| mAP75 (%) | 67.77 | 66.75 | 65.89 | 66.94 | 76.54 |
| AR | 41.39 | 41.02 | 40.49 | 40.69 | 45.23 |
| FPS | 59.78 | 35.75 | 112.4 | 50.78 | 51.6 |
| GFLOPs | 183.15 | 202.55 | 101.87 | 167.15 | 186.31 |

| Metric | YOLOX-L | YOLOv4 | YOLOv5 | YOLOR | PDAM–STPNNet |
|---|---|---|---|---|---|
| mAP (%) | 55.86 | 56.39 | 50.81 | 55.24 | 60.52 |
| mAP50 (%) | 65.18 | 66.57 | 66.84 | 67.58 | 74.22 |
| mAP75 (%) | 53.47 | 54.93 | 53.78 | 54.23 | 63.12 |
| AR | 35.14 | 35.41 | 33.44 | 34.96 | 38.03 |
| FPS | 56.37 | 34.67 | 100.49 | 41.14 | 43.62 |
| GFLOPs | 196.97 | 221.19 | 111.38 | 179.74 | 200.04 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhan, J.; Hu, Y.; Cai, W.; Zhou, G.; Li, L. PDAM–STPNNet: A Small Target Detection Approach for Wildland Fire Smoke through Remote Sensing Images. Symmetry 2021, 13, 2260. https://doi.org/10.3390/sym13122260

Zhan J, Hu Y, Cai W, Zhou G, Li L. PDAM–STPNNet: A Small Target Detection Approach for Wildland Fire Smoke through Remote Sensing Images. Symmetry. 2021; 13(12):2260. https://doi.org/10.3390/sym13122260

Chicago/Turabian Style: Zhan, Jialei, Yaowen Hu, Weiwei Cai, Guoxiong Zhou, and Liujun Li. 2021. "PDAM–STPNNet: A Small Target Detection Approach for Wildland Fire Smoke through Remote Sensing Images" Symmetry 13, no. 12: 2260. https://doi.org/10.3390/sym13122260