Multiple Defects Inspection of Dam Spillway Surface Using Deep Learning and 3D Reconstruction Techniques

Abstract

1. Introduction

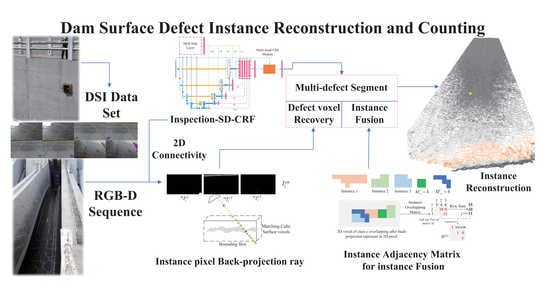

- Using images collected by a wall-climbing robot, we build the first pixel-level segmentation dataset of dam surface defects, covering patched areas, spots, erosion, cracks, and spalling. We also propose a multi-level side-out structure, optimized with CRF layers, to improve the segmentation IoU across multiple classes.

- We address the incomplete registration of defects by proposing a class instance ray back-projection approach, which re-registers the missing defect pixels onto the 3D surface mesh model.

- We propose an instance adjacency matrix that fuses instances of the same defect class according to a 3D intersection over union (IoU) threshold, which simplifies counting defect instances.
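The instance fusion idea in the last contribution can be illustrated with a minimal Python sketch. It is not the authors' implementation: representing each instance as a set of integer voxel coordinates, the function names, and the threshold value of 0.3 are all assumptions made for illustration. Instances of one class whose volumetric IoU reaches the threshold are marked adjacent, and each connected group in the adjacency matrix is merged into one instance.

```python
from itertools import combinations


def volumetric_iou(a, b):
    """IoU of two instances given as sets of (x, y, z) voxel coordinates."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def adjacency_matrix(instances, threshold):
    """Boolean matrix: entry [i][j] is True when instances i and j overlap enough."""
    n = len(instances)
    adj = [[i == j for j in range(n)] for i in range(n)]
    for i, j in combinations(range(n), 2):
        if volumetric_iou(instances[i], instances[j]) >= threshold:
            adj[i][j] = adj[j][i] = True
    return adj


def fuse_instances(instances, threshold=0.3):
    """Merge every connected group of the adjacency matrix into one voxel set.

    The default threshold is an illustrative assumption, not a value from the paper.
    """
    adj = adjacency_matrix(instances, threshold)
    n = len(instances)
    seen = [False] * n
    fused = []
    for start in range(n):
        if seen[start]:
            continue
        seen[start] = True
        stack, merged = [start], set()
        while stack:  # depth-first walk over adjacent instances
            i = stack.pop()
            merged |= instances[i]
            for j in range(n):
                if adj[i][j] and not seen[j]:
                    seen[j] = True
                    stack.append(j)
        fused.append(merged)
    return fused


# Three hypothetical crack instances registered from different keyframes.
a = {(0, 0, 0), (1, 0, 0), (2, 0, 0)}
b = {(1, 0, 0), (2, 0, 0), (3, 0, 0)}   # overlaps a: IoU = 2 / 4
c = {(10, 0, 0), (11, 0, 0)}            # isolated
fused = fuse_instances([a, b, c])
print(len(fused))  # a and b are merged, c stays separate
```

Counting the fused sets then gives the number of distinct defect instances for that class.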

2. Related Works

2.1. Concrete Surface Defect Detection Based on Deep Learning

2.2. 3D Reconstruction and Modeling

3. Robotic Visual Inspection System

3.1. Image Data Collection

3.2. Dam Surface Inspection (DSI) Data Set

3.3. Data Processing Pipeline of Inspection

4. Multi-Class Defect Segmentation

4.1. PAC Layer Guides Multi-Level Side-Out

4.2. Multi-Head CRF

4.3. Joint Partial Boundary Loss Function

5. 3D Multi-Class Defect Instance Reconstruction

Algorithm 1: Pseudocode for our algorithm

5.1. Disadvantages of TSDF in Sparse Keyframes Instance Reconstruction

5.2. Keyframes Back-Projection and Voxel Attribute Update

5.3. Instance Fusion Using Volumetric IoU Threshold

6. Experiment and Result

6.1. DSI Defect Segmentation Test

6.1.1. Model Implementation and Training

6.1.2. Multi-Head CRF Experimental Study

6.2. Defect Surface Reconstruction Test

7. Future Work

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations


| Symbol | Description |
|---|---|
| | Pixel i's position |
| | Scope of the filter kernel |
| v | Features of the feature map |
| f | Features of the guide layer |
| | CRF target function of the Gibbs distribution |
| | Potts model |
| | Kernel equation of the features |
| | Label-related energy |
| | Loss function |
| | i-th image in the image sequence |
| | Class c1 in the segmentation result |
| | The l-th instance in class cn of |
| V | Volume in 3D space |
| | Scores, class index, and instance index attributes of V |
| | Matrix element at the i-th row and j-th column |


| Class | Background | Crack | Spalling | Patched | Erosion | Spot |
|---|---|---|---|---|---|---|
| Index | 0 | 1 | 2 | 3 | 4 | 5 |
| Train pixels (10) | 10,505 | 110.5 | 888.1 | 109.8 | 17.2 | 1.2 |
| Validate pixels (10) | 2971.2 | 51 | 233.4 | 52.8 | 8.76 | 0.76 |
| Test pixels (10) | 1666.7 | 26.5 | 120.2 | 28.9 | 6.97 | 0.41 |

| Model | mIoU | Defect mIoU | Background | Crack | Spalling | Patch Area | Rope | Erosion | Spot | Model Size |
|---|---|---|---|---|---|---|---|---|---|---|
| U-Net | 0.486 | 0.478 | 0.939 | 0.344 | 0.588 | 0.51 | 0.536 | 0.105 | 0.379 | 124.3 MB |
| Inspection-Net | 0.529 | 0.51 | 0.946 | 0.467 | 0.724 | 0.404 | 0.646 | 0.315 | 0.182 | 164.5 MB |
| Inspection-SD | 0.584 | 0.575 | 0.953 | 0.427 | 0.736 | 0.504 | 0.638 | 0.467 | 0.364 | 164.5 MB + 30 KB |
| Inspection-SD-CRF | 0.61 | 0.6 | 0.95 | 0.473 | 0.704 | 0.537 | 0.670 | 0.471 | 0.467 | 164.5 MB + 74.74 KB |
| DeepLab V3+ | 0.591 | 0.582 | 0.964 | 0.53 | 0.82 | 0.693 | 0.644 | 0.324 | 0.168 | 238 MB |
| U2Net | 0.412 | 0.435 | 0.946 | 0.344 | 0.678 | 0.437 | 0.276 | 0.042 | 0.217 | 176.8 MB |
| PSPNet | 0.61 | 0.599 | 0.972 | 0.491 | 0.861 | 0.823 | 0.676 | 0.428 | 0.021 | 345.2 MB |
| PSPNet-PAC-CRF | 0.618 | 0.603 | 0.978 | 0.503 | 0.860 | 0.821 | 0.703 | 0.438 | 0.038 | 345.2 MB + 74.74 KB |
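The mIoU reported for each model appears to be the unweighted mean of the seven per-class IoU values (Background through Spot). This reading is inferred from the numbers rather than stated in the table; it reproduces the U-Net and Inspection-SD-CRF rows, while a few other rows differ in the third decimal. The Python sketch below shows how a per-class IoU is obtained from predicted and ground-truth labels on a toy example, then checks the two rows. The function names and the toy labels are illustrative assumptions.

```python
def iou(pred, truth, cls):
    """IoU of one class between two equally long flat label sequences."""
    inter = sum(1 for p, t in zip(pred, truth) if p == cls and t == cls)
    union = sum(1 for p, t in zip(pred, truth) if p == cls or t == cls)
    return inter / union if union else float("nan")


def mean_iou(per_class):
    """Unweighted mean over per-class IoU values."""
    return sum(per_class) / len(per_class)


# Toy labels (hypothetical): six pixels, three classes.
truth = [0, 0, 1, 1, 1, 2]
pred = [0, 1, 1, 1, 0, 2]
toy_per_class = [iou(pred, truth, c) for c in range(3)]
toy_miou = mean_iou(toy_per_class)

# Per-class IoU values copied from the table:
# Background, Crack, Spalling, Patch Area, Rope, Erosion, Spot.
unet = [0.939, 0.344, 0.588, 0.51, 0.536, 0.105, 0.379]
sd_crf = [0.95, 0.473, 0.704, 0.537, 0.670, 0.471, 0.467]

unet_miou = round(mean_iou(unet), 3)      # table reports 0.486
sd_crf_miou = round(mean_iou(sd_crf), 3)  # table reports 0.61
print(unet_miou, sd_crf_miou)
```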

| Models | No CRF | Ours 4 Head | 2 Head | 1 Head | 6 Head |
|---|---|---|---|---|---|
| Head Class | - | [0123456] × 2 [0235] [035] | [0146] [0235] | All classes | [0x], x |
| mIoU | 0.583 | 0.610 | 0.595 | 0.589 | 0.596 |

| Voxel Class | Crack | Spalling | Patched | Cable | Erosion | Spot | MAE |
|---|---|---|---|---|---|---|---|
| Naive TSDF | 25 | - | 10 | - | 4 | 1 | 9.5 |
| Back-projection | 10 | - | 1 | - | 2 | 6 | 1.75 |
| GT | 8 | - | 1 | - | 2 | 11 | 0 |
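The MAE column is consistent with the mean absolute difference between each method's per-class values and the GT row, taken over the four classes that have entries (Crack, Patched, Erosion, Spot). This definition is inferred from the numbers and is not stated in the table. A short Python check, with illustrative variable names:

```python
def mean_absolute_error(values, reference):
    """Mean absolute difference between two equally long sequences."""
    return sum(abs(v - r) for v, r in zip(values, reference)) / len(reference)


# Classes with entries in the table: Crack, Patched, Erosion, Spot.
ground_truth = [8, 1, 2, 11]
naive_tsdf = [25, 10, 4, 1]
back_projection = [10, 1, 2, 6]

mae_naive = mean_absolute_error(naive_tsdf, ground_truth)    # (17 + 9 + 2 + 10) / 4
mae_bp = mean_absolute_error(back_projection, ground_truth)  # (2 + 0 + 0 + 5) / 4
print(mae_naive, mae_bp)  # 9.5 1.75
```

Most of the naive TSDF error comes from the Crack and Spot columns, while back-projection deviates from GT mainly on Spot.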

| Defect Class | Crack | Spalling | Patched | Cable | Erosion | Spot |
|---|---|---|---|---|---|---|
| Naive distance TSDF | 97 | - | 45078 | - | 346 | 1 |
| Back-projection | 228 | - | 47132 | - | 377 | 8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hong, K.; Wang, H.; Yuan, B.; Wang, T. Multiple Defects Inspection of Dam Spillway Surface Using Deep Learning and 3D Reconstruction Techniques. Buildings 2023, 13, 285. https://0-doi-org.brum.beds.ac.uk/10.3390/buildings13020285
