1. Introduction

As the building needs of our society grow in scale and dimension, design objectives for the built environment become more entangled, requiring architects and building engineers to collaborate holistically on design solutions. Their goals are rarely independent of each other’s influence, and major redesigns late in design phases due to incohesive decisions can cost time, money, and the integrity of the design team. When developing comprehensive proposals during the conceptual design phase, computational tools such as parametric modeling can allow designers to rapidly iterate across possibilities and consider qualitative and quantitative objectives. Rather than rebuilding a model for each design variation, parametric models enable designers to easily explore different solutions by changing variables that control objectives in a design problem. However, architects and building engineers have historically followed different design processes to achieve their goals [

1,

2]. Yet the exact nature of these differences is not fully agreed upon and may be changing with technology and evolving disciplines [

3,

4,

5,

6]. For example, researchers have proposed that engineers assume problems can be well-defined, start with problem-analysis, and emphasize the “vertical” dimension (linear, procedural) of systems engineering, while architects assume partially defined problems and approach them with an opportunistic, argumentative process that emphasizes the horizontal dimension (iterative, problem-solving) [

1]. However, there is diversity among engineering disciplines in their exact approach, and there has been more recent emphasis on iterative problem-solving for engineering problems, potentially breaking this dichotomy [

7,

8].

Despite this ambiguity, many researchers still observe differences specific to architects and building engineers [

6,

9,

10,

11], and different approaches may hinder their combined efficacy when working in parametric tools. Stemming from their disciplinary training as students, they may even approach design differently based on the professional identity of their collaborators [

12]. Research has shown that diversity in teams can lead to more creative solutions, but an inconducive design environment and lack of shared understanding can impede design performance [

13]. At the same time, designers increasingly use digital forms of communication to collaborate, such as video meetings with screensharing for quicker feedback about design performance. When working in remote, parametric environments, it is unclear how students’ disciplinary identity may predict their design efficacy and behavior when collaborating with designers of similar or different educational backgrounds.

1.1. Parametric Models as Design Tools

Parametric 3D-modeling tools allow designers to readily explore design options by adjusting model variables and reviewing geometric and performance feedback, which can enable quick, multi-disciplinary decision making. These tools can potentially improve on traditionally separate design and analysis software, which may not most optimally address the range of complex requirements [

14]. For example, architects rely heavily on sketching [

15] and digital geometry tools [

16], while building engineers use discipline-specific analysis programs such as SAP2000 and ETABs for structural design or EnergyPlus for energy modeling. While previous research has shown 3D digital modeling to be a less conducive environment for collaboration compared to sketching [

17], this was due to the tedious nature of digital model building and may not apply to all forms of digital design exploration. An advantage to computational tools is that they enable efficient design responses and allow for more avenues of communication between the professions [

18].

Specifically useful for early design collaboration, parametric 3D-modeling tools allow designers to quickly explore a range of qualitative design options and receive multi-dimensional feedback about quantitative design performance [

19,

20]. Such an environment allows rapid exploration, albeit with more constraints, but also provides more information about the design than a sketch. These tools can improve design performance [

21], and previous research has supported that working in parametric models is a viable environment for design decision making [

22,

23,

24,

25,

26,

27]. Parametric design tools can be part of an equally accessible environment for different professions that provides quick, simultaneous feedback about both geometry and performance [

28,

29,

30]. Building designers increasingly use parametric design thinking to explore solutions in a variety of applications, such as building forms [

31], structural design [

28], building energy [

29], and urban development [

32]. Some established examples of parametric modelling in practice include the Beyond Bending pavilion at the 2016 Venice Biennale [

33] and the iterative structural, energy, or daylighting analyses used by firms such as ARUP [

34] and Foster + Partners [

35].

In addition, computational design tools can be combined with digital platforms for collaboration. Due to shifts in the nature of work, expedited by the COVID-19 pandemic in 2020, online video meetings are increasingly used by the AEC community to design real buildings [

36] and can be beneficial to conceptual design development [

37]. As remote work becomes more normalized, digital mediums are increasingly used as the context for real design conversations in both engineering and architecture [

36,

38]. As an alternative to screen sharing and sketching in remote meeting platforms, shared online parametric models and their corresponding visualizations can provide an additional form of feedback. While dynamics within design teams in digital technologies have been studied before [

39,

40,

41], much of the work does not account for the context of parametric design environments, nor does it directly connect team efficacy to team composition and defined design criteria. Understanding disciplinary identity when using these tools may influence how designers approach collaboration on computational platforms, resulting in differences in combined team design efficacy.

1.2. Collaborative Design Processes of Architect-Engineer Teams

Collaboration between diverse teams has been studied, characterized, and documented [

13,

42,

43,

44], but there is still much to understand about the specific interactions of engineers and architects, particularly when attempting to evaluate indicators of design efficacy. To best include the efforts of both architects and engineers, whose performance could be measured by different metrics, we follow the Merriam-Webster dictionary’s definition of efficacy as “the power to produce an effect”. Specific to buildings, design efficacy can be used to describe the successful achievement of desired outcomes such as cost, sustainability, efficiency, and discipline-specific goals such as spatial needs and structural requirements. Previously, engineering efficacy has been measured by how thoroughly engineers are able to address specified criteria [

45] and by measurable, outcome-based metrics [

46,

47]. Conversely, efficacy in architecture is harder to identify, as architectural goals can be more qualitative or experiential. Methods such as the Consensual Assessment Technique (CAT) method [

48] have been used to evaluate design quality when criteria are subjective and less measurable, such as in graphic design [

49].

Also significant is that building designers rarely work alone and must consider both qualitative and quantitative goals, which can obscure representations of their design process. While diversity in design teams stimulates creativity, with heterogeneous teams benefitting from a combination of expert perspectives, improved team performance most readily occurs if there is shared vocabulary and a conducive design environment [

13]. The team should also share similar conceptual cognitive structures [

50], which may differ by profession. While diverse teams of engineers and architects work towards the same end goal of a building, some acknowledge their different design processes and have shown they use separate design tools [

51]. However, as argued earlier and as a main motivator of this paper, the development of new design models and the context of digital tools make the distinctions between their processes less clear.

No model of design process has perfectly captured the activities of a whole profession [

3], and the integration of digital tools has further confounded understanding of the design process. Oxman [

4] recognized that while some concepts reoccur in digital tools, design methods can vary depending on the media used. Design process models in parametric tools of architects have been illustrated by Stals et al. [

26] as the amplified exploration of ideas compared to processes supported by traditional tools. Oxman [

27] considered parametric design as a shift in understanding of design thinking, less bound by a representative model. However, these studies on parametric tools did not consider the differences between architects’ and engineers’ exploration. Increasingly, architects and engineers work in these tools together; therefore, studying their collaborative efforts is valuable to better understand and eventually incentivize effective teamwork given potential disciplinary barriers. Such challenges in design collaboration may stem from designers’ education where they begin to identify with a profession [

52]. Understanding the behaviors of student populations when using these tools can inform how they may collaborate in parametric environments in their future careers.

1.3. Decision Processes of Student Designers

While many assert that architects and engineers follow different design processes, there is evidence that student designers may not yet possess the cognitive processes that are emblematic of their profession. Kavakli and Gero [

53] found that when comparing series of cognitive actions in design, students followed a greater range of sequences of cognitive processes compared to experts, who employed a smaller range of sequence variation and were more efficient in their cognitive actions. Similarly, Ahmed et al. [

54] found that students tend to follow “trial and error” processes and do not have as refined design strategies as professionals, who were more systematic. However, these studies do not account for the influence of computational decision making on design. Abdelmohsen and Do [

55] found that novice architect designers performed prolonged processes to achieve the same goal as experts when responding to both sketching and parametric modeling tasks. In their study, though, students worked independently, so it did not account for team collaboration in parametric tools.

As students are still developing as design thinkers in their fields, it is important to consider how they may collaborate with teammates who are trained in a different discipline. Architecture and engineering students often receive divergent instruction on how to address design goals when working in digital tools. While engineers have traditionally followed problem-solving methods with an emphasis on “right” answers [

56], this has been challenged recently as instructors incorporate more project-based learning [

57]. There is also increased discussion of preparing engineers for cross-disciplinary design thinking [

58,

59,

60]. Conversely, architectural education emphasizes spatial thinking with 3D modeling and incorporates digital forms of learning through emerging tools [

15], parametric models [

61], optioneering [

62], and collaborative methods [

63]. While distinctions in design education may become harder to define as both disciplines evolve, many still note disciplinary divides between both architecture and engineering education and practice [

11]. Both types of expertise also tend to play defined roles in practice. In traditional building design procedures, architects may finalize many characteristics of a building before consulting with their engineers, limiting the autonomy of engineers to positively influence the design. Researchers from both professions suggest that early integration of engineers in the building design process can improve design performance and efficiency [

64,

65], but early integration has its challenges, as the professions have developed different disciplinary cultures [

66]. Overcoming these issues can be considered in their education for multi-disciplinary thinkers, but we need to first understand how they behave in mixed teams working in a parametric modeling environment.

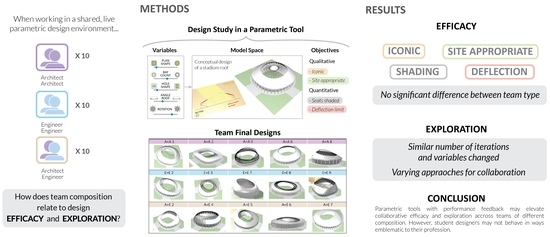

1.4. Research Questions and Hypotheses

In response, this research asks two questions about student architect and engineer designers: (1) How does team composition relate to design efficacy in a shared, live parametric design environment? And (2) How does team composition relate to design exploration in this environment? To answer these questions, a study was developed that compared pairs of two architecture students (A+A), two engineering students (E+E), and one of each discipline (A+E) as they jointly responded digitally to a conceptual design task with two engineering and two architectural criteria. Thirty pairs of designers, with ten of each team type, worked in an equally accessible online parametric design space which allowed them to explore a pre-built model using editable sliders. The model provided considerable geometric diversity and real-time engineering feedback, addressing the simulated performance needs of both professions and reducing barriers in aptitude of disciplinary tool familiarity. The teams’ ability to address the four criteria, as assessed by professional evaluators, was used to measure the efficacy of the final designs. Audio, video, and tool-use recordings of the design sessions captured information about the teams’ collaborative efforts and design exploration.

It was hypothesized that the diverse teams (A+E) would be more effective at addressing all the design criteria, and that design strategies would vary by team type. This hypothesis was based on previous literature describing the environment-dependent benefits of diverse teams. However, we noted the potential for no significant differences between team performance, possibly indicating unexpected and equalizing influences of the parametric tool on design processes. We also considered that disciplinary differences might not yet emerge in mutually approachable environments for student designers who are not yet experts in their field. Additionally, as this study was conducted through a digital video interface, it speaks to the potential screensharing strategies present in remote, collaborative working environments. Understanding how student architects and engineers cooperate in digital, parametric platforms can help discern effective team strategies in emerging design environments, inform educators about the preparedness of future designers to think multi-objectively, and reveal unexpected influences of parametric tools on conceptual design processes.

2. Materials and Methods

To understand how diverse pairs of student engineers and architects perform compared to same-discipline pairs, this research relied on two digital design tools that are increasingly used in practice: a readily approachable parametric modeling platform, and remote video meetings to host collaborative design sessions. While parametric design can occur at various stages of building development and can be applied to many scales of design detail, this work focused on the conceptual design phase of a stadium roof, which required both architectural and engineering input, and was an approachable task for student designers. Although naturally occurring design processes can manifest in many environments, this work focused on parametric models as design tools to capture evidence of effective behavior specifically in this medium.

The teams worked remotely in an online parametric tool, not native to either discipline, which provided visual and numeric feedback. The intent was to facilitate an environment that was not directly familiar and thus did not favor the efforts of either profession. Participants performed the design task together in an online video meeting, which was able to record information about their exploration. In addition, the teams submitted screenshots of their final design and a design statement, which four professional designers used to evaluate team efficacy in addressing the design task objectives.

Figure 1 illustrates the study’s protocol with an example of the design tool interface.

2.1. Design Session Procedure and Participants

The study was conducted through recorded online video meetings, and the sessions lasted approximately one hour. In the first 20 min, the teams were briefed on the study tools, given the design task, and allowed 5 min to become familiar with the materials before developing a design with their assigned partner. The teams were then allowed 30–35 min to work on their design and submit deliverables from the design task.

Prior to running the study and collecting data, the interface and protocol were piloted on 3 teams to verify the clarity of the design task, usability of the tool, and accuracy of the data collection methods. The sample participants were either members of the research team who did not participate in the script development, or graduate students in an architectural engineering program with at least 1 year of experience in 3D parametric modeling. The sample participants were able to finish the task in the allotted time. Upon completing the test design session, these sample participants provided feedback about the study’s procedures, which were then further refined. The sample data was used to ensure the reliability of the data collection, processing, and analysis approaches.

This study was approved by the researchers’ Institutional Review Board. The participants were structures-focused engineering or architecture students from one of two large, public U.S. universities. Participation was limited to 4th or 5th year undergraduates with AEC internship experience for engineers and National Architectural Accrediting Board (NAAB) accredited structures courses for architects, or students of either discipline at the graduate level. Participants were paired based on disciplinary major and availability. The research questions of the study were not revealed to the participants so as not to influence their performance. While the moderator was available to answer questions, they had minimal interaction with the teams during design and did not prompt any behaviors.

Although the students may experience more elaborate design challenges over longer periods of time through their coursework or in their future professional practice, replicating extensive, multi-year design processes is beyond the scope of this paper’s research questions. It has been established that design study protocols must consider limitations of tools and resources to collect clear, dependable data [

67]. To reduce cognitive fatigue and minimize uncontrollable external influences on team behavior, this research used a concise design task and focused metrics to evaluate the team processes.

2.2. Design Task Criteria

The conceptual design task asked participants to develop the geometry of an Olympic stadium roof for a fictional site plan in a tropical climate. There is precedent for stadium roof design as a good sample project to judge designer performance in parametric modeling [

34]. The design statement provided to the designers contained four criteria that were used as design goals and to assess the efficacy of the teams. Two of the criteria were qualitative requirements that aligned with architectural values: that the design be iconic and site appropriate. The other two quantitative requirements aligned with engineering goals: that the roof shade a certain percentage of seats during noon on the summer solstice and not exceed a maximum deflection limit, which the participants were required to calculate. These goals were considered accessible based on participants’ level of study and degree requirements. For the final deliverables of their proposed conceptual designs, teams were asked to submit 3–6 screenshots and a 5–8 sentence design statement that discussed how their design addressed the prompt. Additional detail of the design task and requirements can be found in Appendix A.

2.3. Design Environment Details

The study’s primary tool consisted of an online parametric stadium roof model that the designers could edit by changing ten variable sliders. The tool was intended to be neutral to not favor the capabilities of one profession over the other, and novel to the designers, in that no participant had used the exact interface before. While the parametric model would limit the detailed development of the project, this design task asks the participants to focus on developing the roof design in the late-conceptual design phase, when aspects such as the structural systems and likely materials would have already been decided.

The model was constructed in the parametric modeling program Grasshopper and uploaded to Shapediver [

68], an online file hosting platform that allows external users to change model variables and obtain design feedback without editing the base file. Shapediver and similar cloud-based platforms have been gaining popularity in several fields due to their ease of access from a browser and utility in developing 3D model solutions. The Shapediver API interface was used to embed the model in a custom website built for the study that tracked user click and design data, such as when variables were changed. Before designing, participants were shown how to use the tool and they independently accessed the website during the video meeting. They were briefed on how to share their screens, but screen sharing was not required nor explicitly encouraged.

Figure 2 shows the structure of the tool’s files and examples of three screensharing strategies that may be used by the design teams, such as one person sharing their screen and the other watching for the whole session, one person sharing their screen while the other person keeps working, or sharing screens back and forth throughout the session.

The tool’s ten editable sliders mostly modified geometric qualities of the stadium and the variables all impacted the four design criteria in some capacity. Authentic to a design challenge in practice, the base model was built such that no “best” solution existed. For example, a larger roof area improved shading, but also increased deflection, which was undesirable. In the model, the quantitative criteria were achievable for a range of visual solutions but could not be met under all variable settings. Providing ten variables allowed designers of both types to consider combinations of solutions and use different approaches to explore the design space. The variables were mostly continuous, which gave participants the ability to directly manipulate the design. Collectively across all variables, there were over 5 trillion possible solutions. In addition, the tool used Karamba3D [

69] to perform live deflection calculations of the roof as the users changed the variables. Details about the tool’s variables and internal calculations of deflection and seat shading can be found in

Appendix B.
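As a rough illustration of how ten sliders can yield a count on the order of trillions, the sketch below multiplies the number of available settings per slider. The step counts used here are hypothetical placeholders chosen only to show the arithmetic; they are not the slider ranges documented in Appendix B.

```python
import math

# Hypothetical number of discrete positions for each of the ten sliders.
# Placeholder values for illustration only; the study's actual slider
# ranges and step sizes are given in Appendix B.
SLIDER_POSITIONS = [19] * 10


def design_space_size(positions_per_slider):
    """Count distinct slider combinations as the product of positions per slider."""
    return math.prod(positions_per_slider)


total = design_space_size(SLIDER_POSITIONS)
print(f"{total:.2e} combinations")
```

Because the count is a product, even modest resolution on each slider grows the design space very quickly, which is consistent with the point that exhaustive exploration within a 30 min session was not feasible.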

2.4. Methods for Evaluating Team Efficacy and Exploration

To answer the study’s research questions, three streams of data were evaluated: final design efficacy based on professionals’ evaluations, exploration behavior based on engagement with the tool, and team collaboration strategies based on how they chose to work remotely in the video meeting.

2.4.1. Assessing Team Efficacy

Following the design task, team efficacy was assessed by four professionals (one licensed engineer, one engineering professor, one licensed architect, and one architecture professor) for how well the teams addressed the criteria in their visual submissions and design statements. All reviewers held professional degrees in their field and were located at schools or firms in the southwestern US, northeastern US, or western Europe. The licensed professionals had at least 8 years of experience in practice and the professors taught for at least 7 years. Their evaluation followed the Consensual Assessment Technique method [

48], which uses professionals to evaluate design quality, responding to questions about criteria performance using a Likert scale. The CAT method is often used in evaluating design ideas that rely on qualitative evaluation, but it has been used in engineering applications as well [

70]. The professional evaluators were asked “how well did the project from team X address criterion Y of the design task”. They reported their opinions on a five-item scale including the responses “not at all”, “somewhat well”, “moderately well”, “very well”, or “extremely well”. The professionals completed their assessments individually and were not told which team type they were evaluating. To mitigate evaluation fatigue, each professional evaluated only 12 designs (four of each team type). To verify the agreement between the evaluators, they evaluated six of the same projects and six different projects. For the same six projects that they evaluated, an intraclass correlation coefficient was calculated for all criteria.

2.4.2. Assessing Team Collaborative Design Exploration

In addition to efficacy, design exploration was documented by measuring the teams’ interaction with the design tool using click data and by observing how the teams collaborated in the shared work environment. As the professions increasingly rely on online forms of design cooperation, considering the student participants’ behavior when working in the digital environment can inform how the professions use these tools when designing.

To capture the designers’ exploration of the tool, we included a tracking mechanism in the design website that recorded variable changes and corresponding iterations during the session. Comparing differences in number of variables explored and iterations tested can suggest the relative breadth of the design exploration. Yu [

25] observed that parametric design has two kinds of cognitive processes: “design knowledge”, which relies on a designer’s knowledge for their decisions, and “rule algorithm”, in which the designer’s decisions respond to the rules of the model. Using more variables and creating more iterations can reflect the application of both cognitive processes. Although the teams in our study did not exhaustively engage all the variables, they mostly adjusted all variables at least once. In the time allowance of the study, this reflected enough dimensions for authentic engagement, but not too many variables for the designers to consider. The numbers of iterations were compared to the efficacy ratings for each criterion, since more iteration may relate to improved design performance. Significant iteration, though, might align with an architect’s process, whereas an engineering process may lead more directly to a solution.
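The two exploration measures described above can be sketched as a simple aggregation over a click log. The event format (team, timestamp, variable, value) and the rule that each recorded variable change counts as one iteration are assumptions made for illustration; the study's actual log schema and iteration definition are not specified here.

```python
from collections import defaultdict


def summarize_exploration(events):
    """Summarize per-team exploration from (team, timestamp, variable, value) events.

    Assumption: every recorded slider change counts as one design iteration.
    """
    iterations = defaultdict(int)
    variables = defaultdict(set)
    for team, _timestamp, variable, _value in events:
        iterations[team] += 1
        variables[team].add(variable)
    return {
        team: {
            "iterations": iterations[team],
            "variables_changed": len(variables[team]),
        }
        for team in iterations
    }


# Hypothetical log entries; team labels and variable names are invented.
example_log = [
    ("AE1", 12.0, "roof_angle", 15.0),
    ("AE1", 20.5, "roof_angle", 22.0),
    ("AE1", 31.2, "plan_shape", 3.0),
    ("EE2", 8.4, "roof_coverage", 0.6),
]
summary = summarize_exploration(example_log)
print(summary)
```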

2.4.3. Assessing Team Screensharing

The method by which the teams chose to collaborate in their visual efforts was also noted. Although previous research has considered collaboration through digital file exchange [

71], it did not account for active environment engagement. Alternatively, virtual reality tools can allow two users to move around in the same environment with an integrated video platform, but virtual reality is not yet pervasive in architecture and engineering firms for collaborative design environments. In the online environment used in this study, participants were allowed to choose how to work in the digital modeling environment. They could develop their solutions through various screensharing tactics, which were observed by team type. The researchers noted which partner shared screens, how long they shared, and if they alternated screensharing. This empirical approach to describing team collaboration styles allowed the researchers to note new behaviors as they occurred. If the majority of a team type’s pairs followed the same screensharing method to develop their models, it may speak to a likeness in collaborative process, but if all the pairs behave differently, this would further confound the disciplinary process’s identities when working in video shared, parametric design environments.

3. Results

A total of 30 designs were created, with 10 designs from each team type.

Figure 3 shows screenshots of 18 of the 30 projects. Initial visual assessment suggests a range of solutions with the most visually noticeable characteristics being plan shape, roof angle, and roof coverage. However, the professionals’ assessments provide more critical examination of the teams’ efficacy, which provided a baseline by which to compare the teams’ collaboration and design space exploration.

3.1. Professional Assessment of Team Efficacy

To determine team efficacy, four professionals evaluated the projects for how well the design pairs addressed the four criteria.

Figure 4 shows the professionals’ evaluations as box and whisker plots of the team type efficacy for each objective. The A+A teams had higher average effectiveness than the other teams at meeting all four criteria, but in “site” and “deflection”, at least one of the A+A teams was judged to have not addressed the criteria at all. The A+E teams had the lowest average effectiveness in “iconic”, “shading”, and “deflection”, with the largest range in performance. While the E+E teams were not more effective than the other team types at any criteria, all E+E teams were at least somewhat effective at addressing the four criteria.

A Kruskal–Wallis test was performed for each criterion to determine if there were any statistical differences between team types at a p = 0.05 level of significance. No team type was significantly different in the efficacy of achieving any of the four criteria, with deflection having the lowest p-value of 0.334. Since five of the twelve team type criteria had evaluations scoring from 0 to 1, the outlying values in the large range may have overly influenced the data, reducing the data’s statistical significance. To test if the large ranges had a negative impact on statistical significance, the highest and lowest evaluation value for each team type in each criterion were removed, and the Kruskal–Wallis tests were run again. While the p-values for each criterion in the Kruskal–Wallis test were closer to a significance level of 0.05, they were still not significant. The p-values from these tests are shown in Table 1.
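For readers unfamiliar with the test, the following is a minimal sketch of a Kruskal–Wallis comparison across three team types, including the trimmed re-run described above. It is not the authors' analysis code: the ratings are hypothetical placeholders, the function names are invented, and the p-value shortcut is valid only for exactly three groups (two degrees of freedom), where the chi-square survival function reduces to exp(-H/2).

```python
import math
from itertools import chain


def kruskal_wallis_3(groups):
    """Return (H, p) for exactly three independent groups, with tie correction."""
    if len(groups) != 3:
        raise ValueError("this sketch handles exactly three groups (df = 2)")
    pooled = sorted(chain.from_iterable(groups))
    n = len(pooled)
    ranks, tie_term, i = {}, 0, 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        ranks[pooled[i]] = (i + 1 + j) / 2  # average of ranks i+1 .. j
        tie_term += (j - i) ** 3 - (j - i)
        i = j
    rank_sq = sum(sum(ranks[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12 / (n * (n + 1)) * rank_sq - 3 * (n + 1)
    correction = 1 - tie_term / (n ** 3 - n)
    if correction == 0:  # every rating identical
        return 0.0, 1.0
    h = max(h / correction, 0.0)  # guard against tiny negative rounding error
    return h, math.exp(-h / 2)  # chi-square survival function for df = 2


def trim_extremes(values):
    """Drop one highest and one lowest value, as in the re-run described above."""
    return sorted(values)[1:-1]


# Hypothetical ratings on a 0-1 scale for one criterion (not study data).
ratings = {
    "A+A": [0.75, 0.5, 1.0, 0.0, 0.75, 0.5, 0.75, 1.0, 0.5, 0.75],
    "E+E": [0.5, 0.5, 0.75, 0.25, 0.5, 0.75, 0.5, 0.25, 0.5, 0.75],
    "A+E": [0.25, 0.0, 1.0, 0.5, 0.25, 0.75, 0.5, 0.0, 0.5, 0.25],
}
h_all, p_all = kruskal_wallis_3(list(ratings.values()))
h_trim, p_trim = kruskal_wallis_3([trim_extremes(v) for v in ratings.values()])
print(f"all data: H={h_all:.3f}, p={p_all:.3f}; trimmed: H={h_trim:.3f}, p={p_trim:.3f}")
```

A rank-based test is a natural fit here because the Likert-style ratings are ordinal and each team type contributes only ten designs.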

Based on their ratings, an intraclass correlation coefficient (ICC) was calculated across all the evaluators for all criteria. It was found to be 0.719, which meets an acceptable level of agreement. While coefficients between 0.900 and 1.000 are considered to indicate very high agreement, and those above 0.7 are considered acceptably high, interpretation of coefficients is conditional on each application. In this study, because the assessments are both qualitative and quantitative, judged by four raters with unique expertise, and use an evaluation scale with five options, an ICC greater than 0.900 would be unexpected. The CAT method for creativity evaluation, which often uses ICC to consider evaluator agreement, assumes that the professionals all have the same area of expertise. In contrast, this study uses both architects and engineers to evaluate the projects, who have their own areas of expertise, and still meets a level of agreement above 0.7 with an ICC of 0.719.
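To make the agreement measure concrete, the sketch below computes one common form of the intraclass correlation coefficient, ICC(2,1) (two-way random effects, absolute agreement, single rater), from a projects-by-raters matrix. The choice of ICC form is an assumption, since the text does not state which variant was used, and the scores shown are hypothetical.

```python
def icc_2_1(ratings):
    """ICC(2,1) from a matrix with one row per project and one column per rater."""
    n = len(ratings)      # number of rated projects
    k = len(ratings[0])   # number of raters
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical 1-5 Likert scores: six shared projects rated by four evaluators.
shared_scores = [
    [4, 4, 3, 4],
    [2, 3, 2, 2],
    [5, 4, 4, 5],
    [3, 3, 2, 3],
    [1, 2, 1, 2],
    [4, 5, 4, 4],
]
print(f"ICC(2,1) = {icc_2_1(shared_scores):.3f}")
```

Other ICC forms (for example, consistency rather than absolute agreement, or average-rater rather than single-rater) can give noticeably different values for the same ratings, so the variant should be reported alongside the coefficient.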

3.2. Characteristics in Collaborative Exploration

The teams’ exploration of the design space was measured by their engagement with the design tool and by their behavior when collaborating in the online environment.

3.2.1. Characteristics in Collaborative Exploration

Figure 5 shows the number of iterations and average variables changed for each team type. No team type explored a statistically greater number of iterations than the other team types nor changed a greater number of variables, based on a Kruskal–Wallis test at a significance level of 0.05. However, comparing iterations to individual criteria may yield more informative results.

When considering the relationship between the number of iterations created by each team type and the efficacy ratings for each criterion, a pattern emerges. Figure 6 shows the plots of criteria ratings vs. iterations for each criterion and their fitted linear regression lines. The figure also provides the slope of each linear regression equation and the p-value from a simple linear regression analysis, assessed at a 0.05 level of significance. For the test, the null hypothesis is that the slope is 0 and the alternative hypothesis is that the slope is not 0. The p-values of the regression analyses are greater than 0.05; therefore, there is not enough evidence to say that iterations have a statistically significant linear relationship to criteria efficacy. However, the signs of the slopes are consistent across criteria. While more iterations relate positively to greater criteria efficacy for the E+E and A+E teams, the opposite is true for the A+A teams, for which the relationship is negative or negligible.
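The slope test described above can be sketched as follows: fit a least-squares line, divide the slope by its standard error to obtain a t statistic with n − 2 degrees of freedom, and convert it to a two-sided p-value. This is an illustrative sketch only. The function names and data points are hypothetical, and the p-value is obtained by numerically integrating the t density with Simpson's rule rather than by calling a statistics library.

```python
import math


def t_two_sided_p(t, df):
    """Two-sided p-value for a t statistic via Simpson integration."""
    if math.isinf(t):
        return 0.0
    t = abs(t)
    if t == 0.0:
        return 1.0
    # Log of the normalising constant of the t density.
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))

    def pdf(u):
        return math.exp(log_c - (df + 1) / 2 * math.log1p(u * u / df))

    steps = 2 * max(1000, math.ceil(100 * t))  # even count, step <= 0.005
    h = t / steps
    total = pdf(0.0) + pdf(t)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    area = total * h / 3  # P(0 < T < |t|)
    return max(0.0, 1.0 - 2.0 * area)


def slope_test(x, y):
    """Return (slope, intercept, t, p) for H0: slope = 0 vs H1: slope != 0."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    std_err = math.sqrt(sse / (n - 2) / sxx)
    if std_err == 0:
        # Perfect fit: a flat line gives no evidence against H0.
        t = 0.0 if slope == 0 else math.copysign(math.inf, slope)
    else:
        t = slope / std_err
    return slope, intercept, t, t_two_sided_p(t, n - 2)


# Hypothetical iteration counts and efficacy ratings for one team type;
# NOT data from the study.
iterations = [4, 7, 9, 12, 15, 18]
rating = [0.50, 0.25, 0.75, 0.50, 0.75, 1.00]
slope, intercept, t_stat, p_value = slope_test(iterations, rating)
print(f"slope={slope:.4f}, t={t_stat:.3f}, p={p_value:.3f}")
```

With only a handful of teams per type, the degrees of freedom are small, so even a visibly sloped line can fail to reach significance. This is consistent with reading the sign of the slope as suggestive rather than conclusive.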

3.2.2. Screen Sharing the Collaborative Environment

When working collaboratively in the design environment, we noted several patterns in how pairs explored the model while using the remote design tools. Figure 7 shows a sample of the different screensharing strategies and the number of teams for each team type that followed the strategies. The most common method for sharing ideas, labeled Strategy 1, was when one team member shared their screen within 5 min of starting their session and moved in the model while the other designer watched and made suggestions. This strategy was followed by 5 A+A teams, 7 E+E teams, and 4 A+E teams. Strategy 2 was when one person shared, but their partner continued working on their own model. Strategy 3 was when each teammate shared their screen at least once. In some cases, teams shared their screen multiple times. Strategy 4 represents other methods. For example, team AE10 never screenshared, but verbally updated each other about their variable settings when they found solutions that they liked. Team AA8 worked independently and only shared their design towards the end of the session. A third team, AE4, chose to screenshare both designers’ screens while allowing both designers to control the mouse. There was no screensharing method consistently used by a team type.

4. Discussion

In summary, we hypothesized that when A+E, A+A, and E+E pairs were given the same design task in a parametric, digital modelling environment, the diverse teams would show significantly better performance, but this hypothesis was not supported by the data. It was also expected that explicit behaviors based on team type would become evident in efficacy or design space exploration. However, this was not the case. It was surprising that the teams performed similarly and did not show greater proficiency at addressing their own disciplinary design criteria. While some differences between team types were noted, none of the tests reported here reached statistical significance at the 0.05 level. Further discussion for each research question is given below:

RQ 1: How does team composition relate to design efficacy in a shared, live parametric design environment? Diverse pairs of building designers were not significantly more effective at addressing the design criteria than same-wise pairs, contrary to what existing literature predicts. Although the provided parametric design environment may not have allowed for enough design diversity between team types, it is possible that, for the student designers, live feedback from the parametric tool benefitted the efforts of the teams in the absence of other disciplines. In a traditional practice workflow, the professions serve their own roles and provide disciplinary expertise, and there is a lag in communication while they perform their respective responsibilities in sequence. The shared modeling space with multidisciplinary feedback may have partially performed the jobs of both architects and engineers at the resolution of early-stage design. However, it is also possible that student designers are not yet proficient in their field, did not perform in a way that is emblematic of their profession, and therefore did not show differences in performance. In addition, Lee et al. [50] report that, regarding creativity, simply including designers with different backgrounds does not guarantee improved results if the designers do not share mental models for problem solving. Future research should consider whether providing live, visual, or quantitative feedback, alongside geometric flexibility, can help serve the roles of both professions and increase the ability of homogeneous pairs to manage multidisciplinary criteria.

RQ 2: How does team composition relate to design exploration in this environment? Although no team type explored the model significantly more based on the number of iterations and number of variables changed, the relationship between iterations and team type efficacy does suggest some differences between groups. While more iterations related to improved design efficacy ratings for the E+E and A+E teams, the same was not true for A+A teams. Since iterative processes are associated with architects [42], an increase in iterations should have, theoretically, improved the design performance of all teams, especially the A+A teams. Furthermore, no team type consistently followed the same strategy for sharing screens to develop their designs. Screensharing in collaboration is not specific to a particular profession and may not differ by disciplinary background, but it is important in effective student education [72, 73]. The students in this study may be better at working remotely through screensharing due to their remote experiences during the COVID-19 pandemic. In addition, the relationship between team type characteristics and team efficacy is inconclusive, suggesting that diversity in engineering and architect teams does not guarantee improved results when considered in the context of a collaborative, parametric environment.

A summary of what was learned regarding each research question is provided in Figure 8. Overall, the study’s metrics may suggest the presence of an equalizing influence of parametric tools on efficacy and exploration, or that student designers do not have differing behavior between professions in the provided design environment. Parametric tools have been shown to positively support design performance [74], and it could be that the mutually approachable environment influenced the design process. However, impacts on design team performance can be generally hard to discern, as previous research on construction design teams has also shown inconclusive results [75]. Although further research is needed to understand the impact of multi-disciplinary tools on mixed disciplinary teams, the lack of distinct differences presented in this paper provides a baseline for assessing exploration and efficacy in the context of collaborative design.

Limitations

There are several limitations to the study. Despite its methodological advantages, using a pre-made parametric design space does not allow for exhaustive analysis of all possible conceptual design approaches for buildings. However, as McGrath [67] established, there are three goals in understanding and quantifying team group interaction: generalizability of evidence from a large population, precision of measurements, and realism of the simulation. This study conducted concise yet somewhat abstract design simulations to achieve precision of measurement across a reasonably large population, which sacrificed some aspects of realism of the design simulation. However, having fewer participants with rich data is reasonable for studying design to capture the subtlety and depth of the process, particularly in studies which follow protocol analysis methods [76] (p. 15).

McGrath also acknowledges that to evaluate the results of a team groups study, one should be critical of the methods and tools used that are specific to the study or profession. While this study uses one design challenge, in focusing on just the stadium roof, the designers were able to complete the task in the allotted time and respond to the discipline-specific design goals using their respective knowledge. Other limitations could include perceived ambiguity in the design criteria, or the fact that the data collected for collaboration and exploration does not perfectly characterize those corresponding behaviors, since there is some subjectivity in mapping between data collection and behavior for a specific design challenge. Nevertheless, the study relied on established methods for design evaluation and had clear protocols for data collection to determine statistical significance in the design teams’ different characteristics.

5. Conclusions

This paper presented the results of a design study that considered relationships between the efficacy and behavior of diverse and same-wise pairs of student engineer and architect designers. While it was expected that diverse teams would be more effective at addressing varied design criteria, a professional assessment of the designs did not suggest that any team type performed significantly better than the others. However, the lack of significant differences in design performance and behavior raises questions about the influence of the digital design environment on the design process: it is possible that an online digital modeling platform may have influenced design strategies to converge. Subtle differences between the A+A and E+E teams’ behavior suggest narratives relating to team type characteristics, but there are few notable distinctions. In applying these results to practice, it may be that parametric modeling tools can be helpful for designers of either architecture or engineering backgrounds to explore design spaces. Such approaches may not be useful for all professional firms or all design stages, but managers may consider opportunities afforded by parametric models, especially during conceptual design and other instances in which options are visually compared by multidisciplinary teams. Future work will consider how teams of professional engineers and architects may collaborate when working on the same design task in a more extensive design scenario, which may help overcome limitations introduced by the reliance on the parametric design space. In addition, the methods used in this study could be applied to understanding the behaviors of larger building design teams over more extensive design sessions. As design tools evolve and design requirements continue to push construction boundaries, it is important to continually understand effective indicators of architect-engineer team performance.