Influence of Image TIFF Format and JPEG Compression Level in the Accuracy of the 3D Model and Quality of the Orthophoto in UAV Photogrammetry

Abstract

1. Introduction

2. Characteristics of Some Digital Image Formats Used in Photogrammetry

2.1. RAW

2.2. TIFF

2.3. JPEG

3. Data and Methods

3.1. Method

- Dataset containing DNG images;

- Dataset containing TIFF images;

- Dataset containing JPEG images saved at level 1 of the 0–12 JPEG scale, i.e., a high level of compression (called JPEG1 in the study);

- Dataset containing JPEG images saved at level 6 of the 0–12 JPEG scale, i.e., a medium level of compression (called JPEG6 in the study);

- Dataset containing JPEG images saved at level 12 of the 0–12 JPEG scale, i.e., a low level of compression (called JPEG12 in the study).

- quality of the image alignment (pixel error);

- estimation of errors through comparison with ground control points (GCPs) measured by topographic survey;

- comparison between the point cloud generated from the DNG images and the point clouds generated from the TIFF and JPEG images;

3.2. UAV and Camera Features

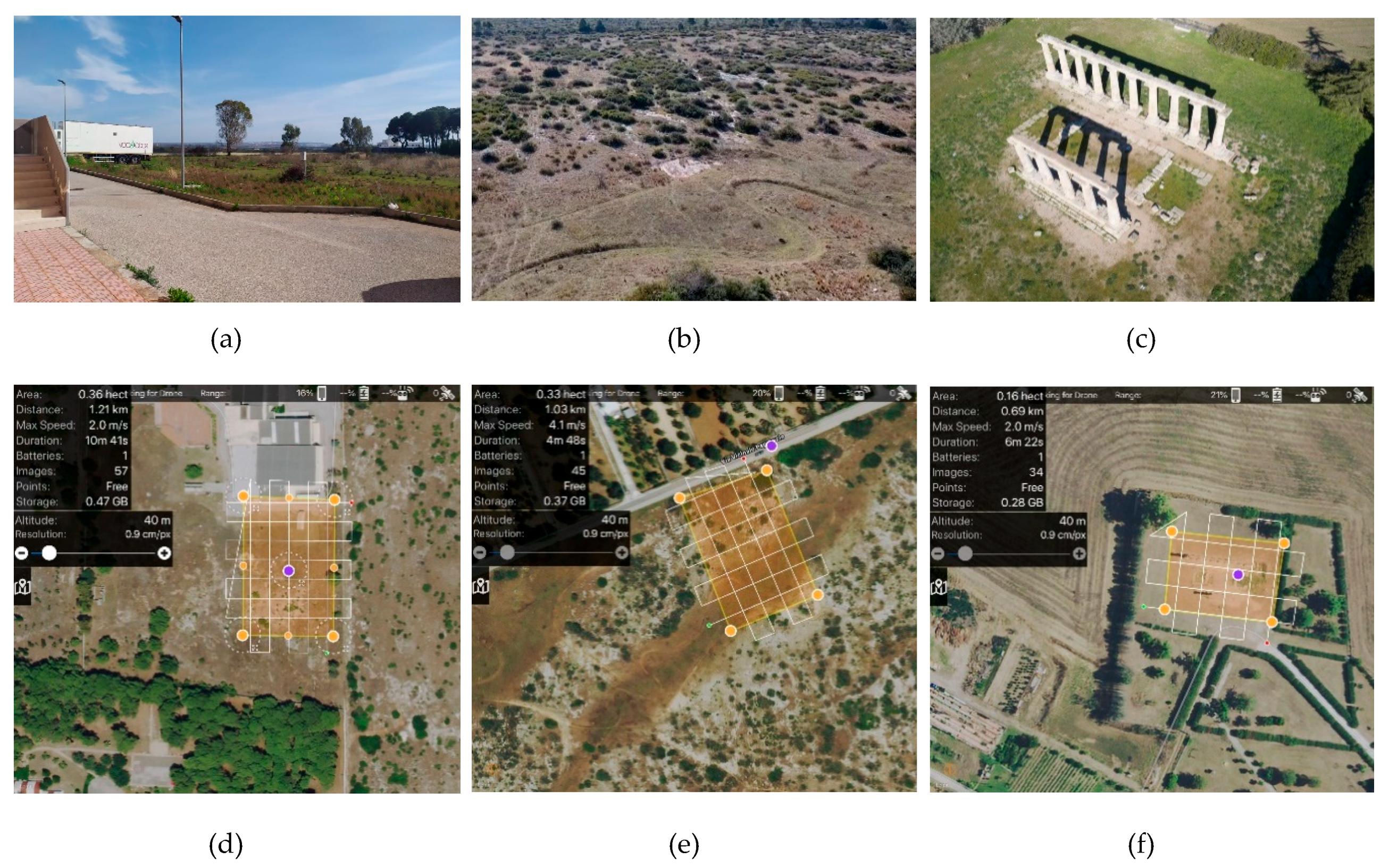

4. Empirical Tests

4.1. Mission Planning

- Test site 1 (Figure 2a), a flat green area near the buildings of the Taranto headquarters of the Polytechnic of Bari (Italy);

- Test site 2 (Figure 2b), a natural river bed with steep slopes;

- Test site 3 (Figure 2c), a Cultural Heritage site in Metaponto (Italy): the so-called “Tavole Palatine”, the remains of a Greek temple of the sixth century BC dedicated to the goddess Hera.

4.2. Post-Processing of the Datasets and Evaluation of the Accuracy on GCPs

4.2.1. Post-Processing in Metashape Software

4.2.2. Post-Processing in 3DF Zephir Software of the Datasets Containing Images Acquired on the Test Site 3

4.3. Orthophoto Generation

5. Evaluation of the Accuracy

5.1. Comparison between the Point Clouds

5.2. Time Processing

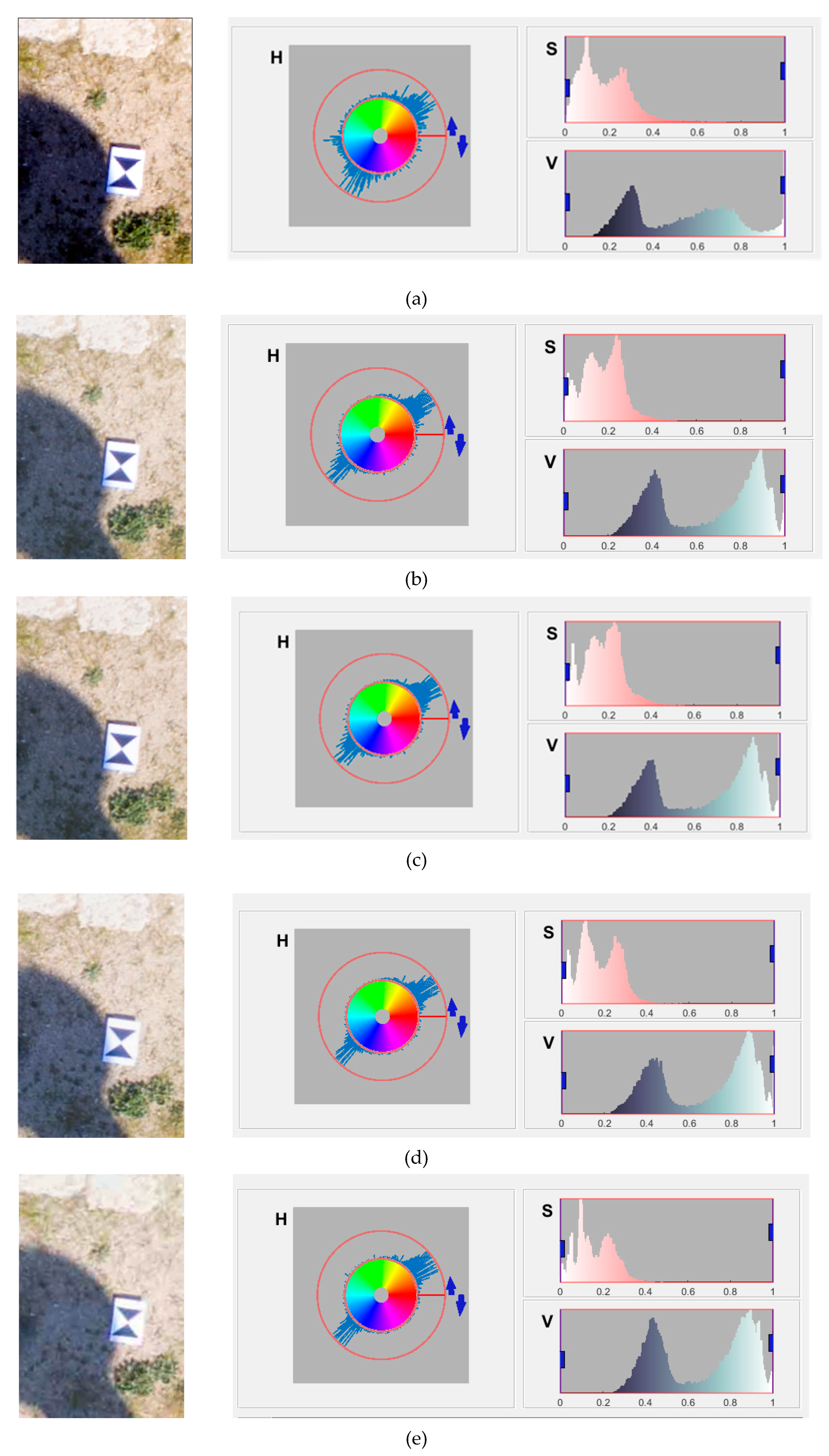

5.3. Evaluation of the Quality of the Orthophoto

- the hue histogram of the DNG image contains more chromatic values, that is, it shows a wider distribution of colors than the other images;

- the pixels in the value histogram are distributed uniformly across the tonal range and show no peaks at the extreme values, so the image has a more correct tonal rendition; by comparison, as the JPEG compression increases, the value curve shifts to the right, producing an apparent overexposure of the image.
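The two observations above rely on the hue and value (HSV) histograms of the orthophotos. As a minimal sketch of how a value histogram and the two indicators discussed (peaks at the extreme values, a shift of the curve to the right) might be computed, the Python below uses only the standard-library `colorsys` module. The function names, the binning and the use of the first and last bins as a clipping indicator are illustrative assumptions, not the procedure used in the study.

```python
import colorsys
from typing import Iterable, List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B)


def value_histogram(pixels: Iterable[Pixel], bins: int = 256) -> List[int]:
    """Histogram of the HSV value (V) channel, with V scaled to [0, 1]."""
    counts = [0] * bins
    for r, g, b in pixels:
        _, _, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        # V == 1.0 would index one past the last bin, so clamp it to bins - 1
        counts[min(int(v * bins), bins - 1)] += 1
    return counts


def clipped_fraction(counts: List[int]) -> float:
    """Share of pixels in the first and last bins (possible peaks at the extremes)."""
    total = sum(counts)
    return (counts[0] + counts[-1]) / total if total else 0.0


def mean_value(counts: List[int]) -> float:
    """Mean V estimated from bin centres; a larger value means the curve sits further right."""
    total = sum(counts)
    if total == 0:
        return 0.0
    bins = len(counts)
    return sum((i + 0.5) / bins * c for i, c in enumerate(counts)) / total


if __name__ == "__main__":
    sample = [(255, 255, 255), (0, 0, 0), (128, 64, 32)]
    hist = value_histogram(sample, bins=4)
    print(hist, clipped_fraction(hist), mean_value(hist))
```

Comparing `mean_value` and `clipped_fraction` between the DNG orthophoto and each JPEG orthophoto would give a numeric counterpart to the visual reading of the histograms.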

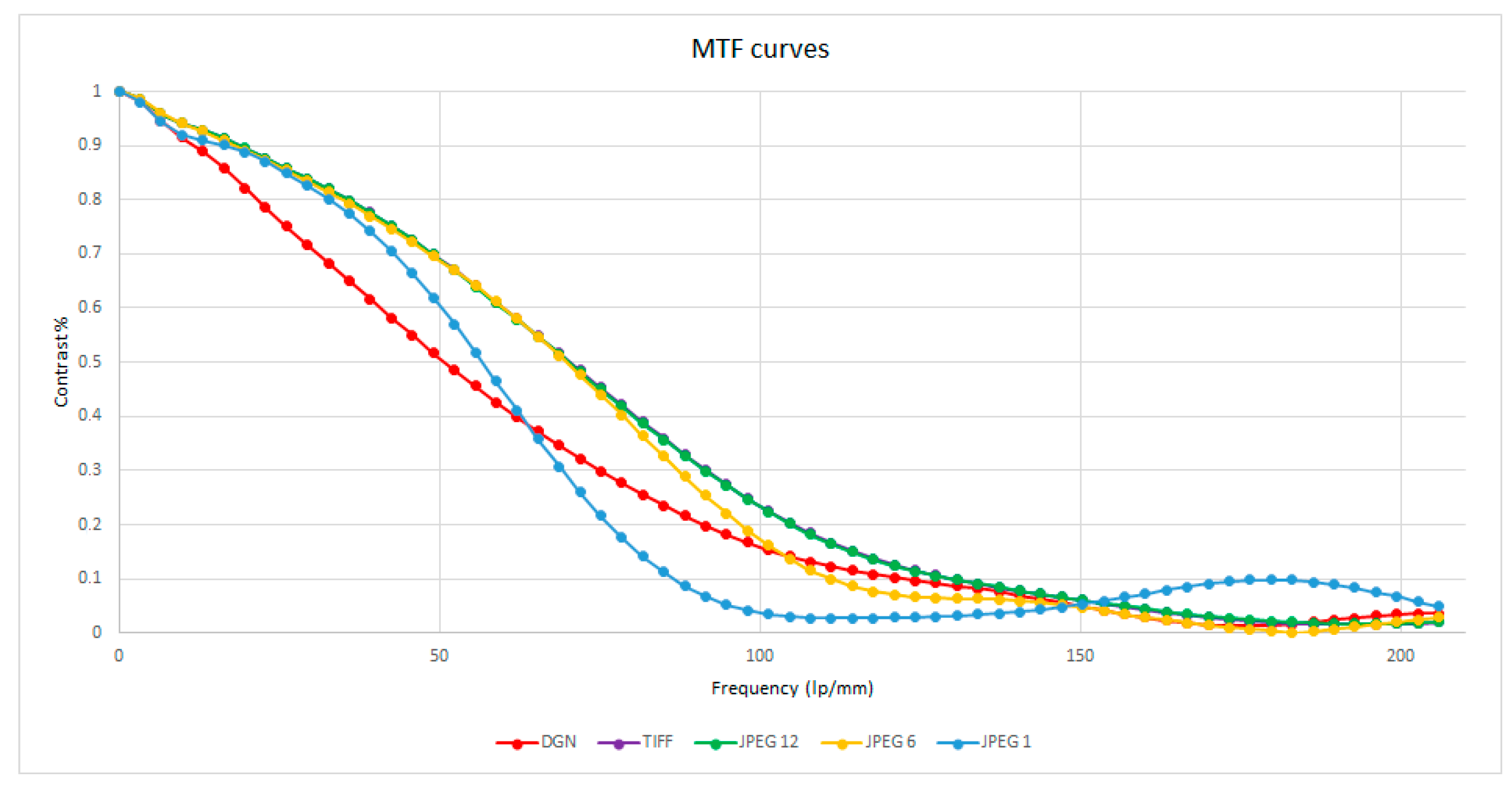

5.4. Testing for Image Analysis by Modulation Transfer Function (MTF)

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| JPEG Compression Level (0–12) | Photoshop Scale Name | Equivalent (%) |
|---|---|---|
| 0 | Low | 0–7 |
| 1 | Low | 8–15 |
| 2 | Low | 16–23 |
| 3 | Low | 24–30 |
| 4 | Low | 31–38 |
| 5 | Medium | 39–46 |
| 6 | Medium | 47–53 |
| 7 | Medium | 54–61 |
| 8 | High | 62–69 |
| 9 | High | 70–76 |
| 10 | Maximum | 77–84 |
| 11 | Maximum | 85–92 |
| 12 | Maximum | 93–100 |
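The correspondence in the table above can be expressed as a simple lookup. The sketch below is a hypothetical helper, not taken from the paper: it transcribes the table and returns the 0–12 level and Photoshop scale name for an integer percentage, together with the inverse lookup. With it, the JPEG1, JPEG6 and JPEG12 datasets correspond to the 8–15%, 47–53% and 93–100% bands respectively.

```python
from typing import List, Tuple

# (level, Photoshop scale name, lowest %, highest %), transcribed from the table above
PHOTOSHOP_JPEG_LEVELS: List[Tuple[int, str, int, int]] = [
    (0, "Low", 0, 7),
    (1, "Low", 8, 15),
    (2, "Low", 16, 23),
    (3, "Low", 24, 30),
    (4, "Low", 31, 38),
    (5, "Medium", 39, 46),
    (6, "Medium", 47, 53),
    (7, "Medium", 54, 61),
    (8, "High", 62, 69),
    (9, "High", 70, 76),
    (10, "Maximum", 77, 84),
    (11, "Maximum", 85, 92),
    (12, "Maximum", 93, 100),
]


def photoshop_level(percent: int) -> Tuple[int, str]:
    """Map an integer percentage (0-100) to the Photoshop 0-12 level and scale name."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must lie between 0 and 100")
    for level, name, low, high in PHOTOSHOP_JPEG_LEVELS:
        if low <= percent <= high:
            return level, name
    # The integer bands are contiguous over 0..100, so this should never be reached
    raise ValueError(f"no band found for {percent}")


def percent_band(level: int) -> Tuple[int, int]:
    """Inverse lookup: the percentage band covered by a Photoshop level."""
    for lvl, _, low, high in PHOTOSHOP_JPEG_LEVELS:
        if lvl == level:
            return low, high
    raise ValueError("level must be an integer from 0 to 12")


if __name__ == "__main__":
    for lvl in (1, 6, 12):  # JPEG1, JPEG6, JPEG12
        print(lvl, percent_band(lvl))
```

The helper only restates the table; how these percentages map onto the quality parameter of any other JPEG encoder is not addressed here.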

| Features | Specifications |
|---|---|
| UAV Platform | |
| Max. take-off weight | 907 g |
| Maximum speed (P-mode) | 48 km/h (13.4 m/s) |
| Flight time | ~31 min |
| Camera: Hasselblad L1D-20c | |
| Sensor | 1″ CMOS; effective pixels: 20 million |
| Photo size | 5472 × 3648 px |
| Focal length | 10.26 mm |
| Field of view | approx. 77° |
| Aperture | f/2.8–f/11 |
| Shooting speed | Electronic shutter: 8–1/8000 s |
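The camera parameters above fix the ground sampling distance (GSD) obtained at a given flying height. As a rough planning aid, the sketch below applies the standard pinhole relation GSD = sensor width × height / (focal length × image width) for a nadir image over flat ground. The 13.2 mm sensor width is an assumption (the nominal width of a 1″ sensor, not listed in the table), and the flying heights in the usage loop are hypothetical, not the heights flown in the study.

```python
# Hedged planning sketch: nadir image, flat terrain, pinhole camera model.
SENSOR_WIDTH_MM = 13.2   # assumption: nominal width of a 1-inch sensor
FOCAL_LENGTH_MM = 10.26  # from the camera table
IMAGE_WIDTH_PX = 5472    # from the camera table (photo size 5472 x 3648)


def ground_sampling_distance(height_m: float,
                             sensor_width_mm: float = SENSOR_WIDTH_MM,
                             focal_length_mm: float = FOCAL_LENGTH_MM,
                             image_width_px: int = IMAGE_WIDTH_PX) -> float:
    """Ground footprint of one pixel, in metres, at height_m above the ground."""
    if height_m <= 0:
        raise ValueError("height_m must be positive")
    # mm / mm cancels, leaving metres per pixel
    return sensor_width_mm * height_m / (focal_length_mm * image_width_px)


if __name__ == "__main__":
    for h in (30.0, 50.0):  # hypothetical flying heights
        print(f"{h:.0f} m -> {ground_sampling_distance(h) * 100:.2f} cm/px")
```

Under these assumptions a 30 m flight gives roughly 0.7 cm per pixel, and the GSD grows linearly with height.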

| Format | SD-GCPs TE (m) | SD-GCPs X (m) | SD-GCPs Y (m) | SD-GCPs Z (m) | SD-GCPs XY (m) | Tie Points |
|---|---|---|---|---|---|---|
| DNG | 0.054 | 0.006 | 0.006 | 0.053 | 0.009 | 93,311 |
| TIFF | 0.059 | 0.009 | 0.009 | 0.057 | 0.013 | 92,791 |
| JPEG12 | 0.054 | 0.008 | 0.008 | 0.053 | 0.011 | 93,358 |
| JPEG6 | 0.059 | 0.008 | 0.009 | 0.058 | 0.019 | 93,108 |
| JPEG1 | 0.060 | 0.010 | 0.010 | 0.059 | 0.014 | 91,756 |

| Format | SD-GCPs TE (m) | SD-GCPs X (m) | SD-GCPs Y (m) | SD-GCPs Z (m) | SD-GCPs XY (m) | Tie Points |
|---|---|---|---|---|---|---|
| DNG | 0.045 | 0.025 | 0.031 | 0.020 | 0.040 | 66,092 |
| TIFF | 0.049 | 0.030 | 0.028 | 0.027 | 0.041 | 63,679 |
| JPEG12 | 0.050 | 0.030 | 0.027 | 0.029 | 0.041 | 63,540 |
| JPEG6 | 0.051 | 0.029 | 0.027 | 0.031 | 0.041 | 61,635 |
| JPEG1 | 0.044 | 0.022 | 0.033 | 0.019 | 0.040 | 56,596 |
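The TE and XY columns are consistent with the usual combination of the per-axis components, TE = √(X² + Y² + Z²) and XY = √(X² + Y²): for instance, the DNG row of the second GCP table (X = 0.025 m, Y = 0.031 m, Z = 0.020 m) is reproduced exactly. The short Python below performs that check; since the tabulated components are already rounded to the millimetre, recomputed totals for other rows can differ from the tables in the last digit.

```python
import math


def total_error(sx: float, sy: float, sz: float) -> float:
    """Total error TE: root of the sum of the squared X, Y and Z components (m)."""
    return math.sqrt(sx ** 2 + sy ** 2 + sz ** 2)


def planimetric_error(sx: float, sy: float) -> float:
    """Planimetric error XY: root of the sum of the squared X and Y components (m)."""
    return math.hypot(sx, sy)


# DNG row of the second GCP table: X = 0.025 m, Y = 0.031 m, Z = 0.020 m
te = total_error(0.025, 0.031, 0.020)
xy = planimetric_error(0.025, 0.031)
print(f"TE = {te:.3f} m, XY = {xy:.3f} m")
```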

| Format | SD-GCPs TE (m) | SD-GCPs X (m) | SD-GCPs Y (m) | SD-GCPs Z (m) | SD-GCPs XY (m) | Tie Points |
|---|---|---|---|---|---|---|
| DNG | 0.013 | 0.008 | 0.007 | 0.008 | 0.011 | 167,627 |
| TIFF | 0.013 | 0.008 | 0.006 | 0.008 | 0.010 | 148,613 |
| JPEG12 | 0.013 | 0.008 | 0.006 | 0.007 | 0.010 | 147,688 |
| JPEG6 | 0.014 | 0.008 | 0.006 | 0.008 | 0.011 | 150,227 |
| JPEG1 | 0.060 | 0.024 | 0.023 | 0.051 | 0.034 | 148,611 |

| Format | SD-CPs TE (m) | SD-CPs X (m) | SD-CPs Y (m) | SD-CPs Z (m) | SD-CPs XY (m) |
|---|---|---|---|---|---|
| DNG | 0.055 | 0.006 | 0.006 | 0.054 | 0.009 |
| TIFF | 0.062 | 0.009 | 0.009 | 0.061 | 0.012 |
| JPEG12 | 0.058 | 0.008 | 0.008 | 0.057 | 0.011 |
| JPEG6 | 0.064 | 0.009 | 0.008 | 0.062 | 0.012 |
| JPEG1 | 0.060 | 0.010 | 0.010 | 0.063 | 0.018 |

| Format | SD-CPs TE (m) | SD-CPs X (m) | SD-CPs Y (m) | SD-CPs Z (m) | SD-CPs XY (m) |
|---|---|---|---|---|---|
| DNG | 0.049 | 0.027 | 0.034 | 0.022 | 0.044 |
| TIFF | 0.054 | 0.033 | 0.030 | 0.030 | 0.045 |
| JPEG12 | 0.055 | 0.033 | 0.030 | 0.032 | 0.045 |
| JPEG6 | 0.056 | 0.033 | 0.030 | 0.033 | 0.045 |
| JPEG1 | 0.046 | 0.024 | 0.036 | 0.016 | 0.044 |

| Format | SD-CPs TE (m) | SD-CPs X (m) | SD-CPs Y (m) | SD-CPs Z (m) | SD-CPs XY (m) |
|---|---|---|---|---|---|
| DNG | 0.016 | 0.010 | 0.007 | 0.010 | 0.012 |
| TIFF | 0.014 | 0.010 | 0.006 | 0.008 | 0.012 |
| JPEG12 | 0.014 | 0.010 | 0.006 | 0.008 | 0.012 |
| JPEG6 | 0.015 | 0.010 | 0.006 | 0.008 | 0.012 |
| JPEG1 | 0.070 | 0.028 | 0.027 | 0.062 | 0.039 |

| Format | Dense Point Cloud, Test Site 1 | Dense Point Cloud, Test Site 2 | Dense Point Cloud, Test Site 3 |
|---|---|---|---|
| DNG | 1,767,190 | 1,889,492 | 1,226,600 |
| TIFF | 1,670,902 | 1,808,733 | 1,119,258 |
| JPEG12 | 1,681,901 | 1,805,020 | 1,378,370 |
| JPEG6 | 1,670,747 | 1,806,635 | 1,376,540 |
| JPEG1 | 1,658,614 | 1,776,380 | 1,268,410 |

| Format | SD-GCPs TE (m) | SD-GCPs X (m) | SD-GCPs Y (m) | SD-GCPs Z (m) | SD-GCPs XY (m) | Tie Points | Dense Point Cloud |
|---|---|---|---|---|---|---|---|
| DNG | 0.018 | 0.010 | 0.008 | 0.012 | 0.013 | 22,254 | 624,766 |
| TIFF | 0.016 | 0.009 | 0.008 | 0.010 | 0.012 | 16,936 | 668,490 |
| JPEG12 | 0.017 | 0.008 | 0.010 | 0.011 | 0.013 | 19,417 | 670,026 |
| JPEG6 | 0.019 | 0.011 | 0.010 | 0.012 | 0.015 | 19,551 | 669,359 |
| JPEG1 | 0.047 | 0.014 | 0.011 | 0.043 | 0.018 | 17,092 | 680,969 |

| Format | SD-CPs TE (m) | SD-CPs X (m) | SD-CPs Y (m) | SD-CPs Z (m) | SD-CPs XY (m) |
|---|---|---|---|---|---|
| DNG | 0.018 | 0.011 | 0.009 | 0.011 | 0.014 |
| TIFF | 0.017 | 0.011 | 0.007 | 0.011 | 0.013 |
| JPEG12 | 0.019 | 0.012 | 0.010 | 0.010 | 0.016 |
| JPEG6 | 0.027 | 0.015 | 0.016 | 0.016 | 0.022 |
| JPEG1 | 0.037 | 0.014 | 0.011 | 0.033 | 0.018 |

| Comparison | Test Site 1 Mean (m) | Test Site 1 SD (m) | Test Site 2 Mean (m) | Test Site 2 SD (m) | Test Site 3 Mean (m) | Test Site 3 SD (m) |
|---|---|---|---|---|---|---|
| DNG vs. TIFF | 0.015 | 0.074 | 0.022 | 0.178 | 0.004 | 0.044 |
| DNG vs. JPEG12 | 0.005 | 0.043 | 0.004 | 0.159 | 0.005 | 0.007 |
| DNG vs. JPEG6 | 0.015 | 0.075 | 0.009 | 0.227 | 0.004 | 0.063 |
| DNG vs. JPEG1 | 0.006 | 0.050 | 0.046 | 0.167 | 0.069 | 0.137 |
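Statistics such as those in the comparison table are typically obtained by measuring, for each point of one cloud, the distance to the nearest point of the other cloud. The sketch below computes unsigned nearest-neighbour (cloud-to-cloud) distances with SciPy's `cKDTree` and returns their mean and standard deviation. This is an assumption about the metric, which this section does not specify (comparison software may, for example, use signed or local-surface distances), and the synthetic grid stands in for real point clouds.

```python
from typing import Tuple

import numpy as np
from scipy.spatial import cKDTree


def cloud_to_cloud_stats(reference, compared) -> Tuple[float, float]:
    """Mean and sample SD of nearest-neighbour distances from `compared` to `reference`.

    Both inputs are arrays of shape (n, 3) holding X, Y, Z coordinates (same units).
    """
    ref = np.asarray(reference, dtype=float)
    cmp_pts = np.asarray(compared, dtype=float)
    if ref.ndim != 2 or ref.shape[1] != 3 or cmp_pts.ndim != 2 or cmp_pts.shape[1] != 3:
        raise ValueError("point clouds must have shape (n, 3)")
    tree = cKDTree(ref)                      # spatial index on the reference (e.g. DNG) cloud
    distances, _ = tree.query(cmp_pts, k=1)  # closest reference point for each compared point
    return float(distances.mean()), float(distances.std(ddof=1))


if __name__ == "__main__":
    # Synthetic example: a 10 x 10 grid with 1 m spacing and a copy lifted by 1 cm
    xs, ys = np.meshgrid(np.arange(10.0), np.arange(10.0))
    grid = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)])
    lifted = grid + np.array([0.0, 0.0, 0.01])
    mean_d, sd_d = cloud_to_cloud_stats(grid, lifted)
    print(f"mean = {mean_d:.3f} m, SD = {sd_d:.3f} m")
```

A k-d tree keeps the nearest-neighbour search practical for clouds of one to two million points such as those listed in the dense point cloud table.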

| Format | SfM Matching | SfM Image Orientation | SfM Time Saving vs. DNG | Depth Map | Dense Cloud | Dense Cloud Generation Time Saving vs. DNG |
|---|---|---|---|---|---|---|
| DNG | 8 m 59 s | 3 m 41 s | (760 s) | 8 m 48 s | 1 m 13 s | (601 s) |
| TIFF | 7 m 46 s | 3 m 23 s | 12% | 6 m 20 s | 23 s | 33% |
| JPEG12 | 7 m 47 s | 3 m 37 s | 10% | 5 m 40 s | 26 s | 39% |
| JPEG6 | 7 m 39 s | 3 m 19 s | 13% | 6 m 7 s | 23 s | 35% |
| JPEG1 | 7 m 33 s | 3 m 09 s | 16% | 5 m 31 s | 22 s | 41% |
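Re-adding the step durations suggests how the percentage columns of the timing tables were obtained: the bracketed DNG figures are the DNG totals in seconds (8 m 59 s + 3 m 41 s = 760 s), and each percentage is the reduction of the total time with respect to that reference. The Python helper below (names are illustrative) reproduces the TIFF values of the first timing table, 12% for the SfM steps and 33% for dense cloud generation.

```python
from typing import Iterable, Tuple

Duration = Tuple[int, int]  # (minutes, seconds)


def total_seconds(steps: Iterable[Duration]) -> int:
    """Sum a sequence of (minutes, seconds) durations into seconds."""
    return sum(60 * minutes + seconds for minutes, seconds in steps)


def time_saving_percent(reference: Iterable[Duration], other: Iterable[Duration]) -> float:
    """Percentage reduction of the total time of `other` with respect to `reference`."""
    ref = total_seconds(reference)
    return 100.0 * (ref - total_seconds(other)) / ref


# First timing table, DNG vs. TIFF: matching + image orientation
sfm = time_saving_percent([(8, 59), (3, 41)], [(7, 46), (3, 23)])
# First timing table, DNG vs. TIFF: depth map + dense cloud
dense = time_saving_percent([(8, 48), (1, 13)], [(6, 20), (0, 23)])
print(f"SfM: {sfm:.0f}%, dense cloud: {dense:.0f}%")
```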

| Format | SfM Matching | SfM Image Orientation | SfM Time Saving vs. DNG | Depth Map | Dense Cloud | Dense Cloud Generation Time Saving vs. DNG |
|---|---|---|---|---|---|---|
| DNG | 9 m 46 s | 1 m 39 s | (685 s) | 9 m 07 s | 1 m 15 s | (622 s) |
| TIFF | 8 m 10 s | 1 m 32 s | 15% | 6 m 12 s | 23 s | 36% |
| JPEG12 | 7 m 32 s | 1 m 35 s | 20% | 6 m 01 s | 26 s | 36% |
| JPEG6 | 7 m 26 s | 1 m 32 s | 21% | 5 m 56 s | 23 s | 37% |
| JPEG1 | 7 m 19 s | 1 m 17 s | 25% | 5 m 58 s | 23 s | 37% |

| Format | SfM Matching | SfM Image Orientation | SfM Time Saving vs. DNG | Depth Map | Dense Cloud | Dense Cloud Generation Time Saving vs. DNG |
|---|---|---|---|---|---|---|
| DNG | 27 m 42 s | 2 m 08 s | (1790 s) | 54 m 38 s | 4 m 52 s | (3570 s) |
| TIFF | 25 m 29 s | 3 m 30 s | 3% | 40 m 23 s | 4 m 53 s | 24% |
| JPEG12 | 25 m 21 s | 2 m 29 s | 7% | 40 m 34 s | 3 m 06 s | 27% |
| JPEG6 | 25 m 08 s | 2 m 21 s | 8% | 43 m 38 s | 3 m 18 s | 21% |
| JPEG1 | 24 m 49 s | 4 m 10 s | 3% | 34 m 52 s | 5 m 24 s | 32% |

| Format | Channel | Mean | Bias | RMSE | RASE | ERGAS |
|---|---|---|---|---|---|---|
| TIFF | red | 160.43 | 67.43 | 10.97 | 7.937932 | 1.288985 |
| | green | 156.00 | 59.87 | 9.54 | | |
| | blue | 150.40 | 51.17 | 15.69 | | |
| JPEG12 | red | 158.63 | 67.35 | 9.17 | 7.508253 | 1.253453 |
| | green | 153.49 | 59.46 | 8.40 | | |
| | blue | 146.88 | 51.52 | 15.53 | | |
| JPEG6 | red | 160.87 | 66.82 | 11.40 | 8.783363 | 1.34298 |
| | green | 157.14 | 59.32 | 10.73 | | |
| | blue | 154.52 | 49.41 | 18.14 | | |
| JPEG1 | red | 161.75 | 65.21 | 12.38 | 9.731192 | 1.407486 |
| | green | 158.29 | 58.26 | 12.30 | | |
| | blue | 156.48 | 47.78 | 20.30 | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Alfio, Vincenzo Saverio; Costantino, Domenica; Pepe, Massimiliano. Influence of Image TIFF Format and JPEG Compression Level in the Accuracy of the 3D Model and Quality of the Orthophoto in UAV Photogrammetry. J. Imaging 2020, 6(5), 30. https://doi.org/10.3390/jimaging6050030