A User Study of a Wearable System to Enhance Bystanders’ Facial Privacy

Abstract

1. Introduction

- We present a summary of human–computer interaction studies and systems related to facial privacy.

- We present a user study of the FacePET system that focuses on users’ perceptions of the device and of intelligent goggles with features to mitigate facial detection algorithms.

- We discuss the results of the study to further enhance the FacePET system and to inform the development of future bystander-centric devices for facial privacy.

2. Related Works

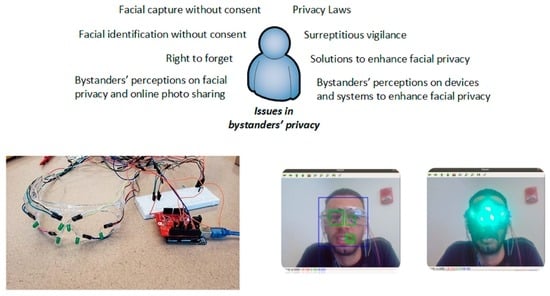

2.1. Bystanders’ Facial Privacy: Human–Computer Interaction (HCI) Perspective

- Seven studies in Table 1 recruited 36 or fewer participants: five recruited 20 or fewer [8,31,38,39,40], and two recruited between 21 and 36 [32,34]. Four studies recruited more than 100 participants [14,35,36,37], and one study [33] analyzed online comments rather than recruiting participants. The studies with 36 or fewer participants use interviews, observation, and device testing, and some of them also use surveys. The studies with more than 100 participants use surveys or automated (AI-based) methods to gather data of interest.

- The definitions of private/public (shared) spaces, and privacy perceptions, vary among individuals. What counts as a private or public space seems to depend on context (i.e., the individuals, actions, and devices present at a given location).

- The design of the data-capturing device affects both users’ and bystanders’ privacy perceptions.

- Individuals want control over their facial privacy, even though some contexts are less privacy-sensitive than others.

2.2. Bystanders’ Facial Privacy: Solutions

2.2.1. Location-Dependent Methods

- Banning/Confiscating devices: Although it is a non-technological solution, banning or confiscating devices is the oldest method of handling bystanders’ privacy. In the U.S., this method was first used after the development of portable photographic cameras at the end of the 19th century [45]. Around this time, cameras were forbidden in some public spaces and private venues.

- Disabling devices: In this group, the goal is to disable a capturing device to protect bystanders’ privacy. Methods in this category can be further classified by the technology used to disable the capturing device. In the first group (sensor saturation), a capturing device is disabled by a signal that interferes with the sensor that collects identifiable data [3]. In the broadcasting-of-commands group, a capturing device receives disabling messages via data communication interfaces (e.g., Wi-Fi, Bluetooth, infrared) [4,5]. In the last group (context-based approaches), the capturing device either identifies contexts through badges or labels, or recognizes contexts [46] using Artificial Intelligence (AI) methods, to determine whether capturing must be prevented [6,7,8].
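As a rough illustration of the context-based group, the decision a capturing device makes can be reduced to a predicate over what it observes. The sketch below is hypothetical: the badge identifiers, the context labels, the 0.8 confidence threshold, and the `Observation` type are illustrative assumptions, not the interfaces of Virtual Walls [6], PrivacEye [8], or any other cited system, and the AI scene classifier is assumed to run upstream.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional

# Hypothetical policy data: badge/label IDs that forbid capture, and scene
# labels (from an assumed AI classifier) treated as privacy-sensitive.
NO_CAPTURE_BADGES: FrozenSet[str] = frozenset({"no-photo-badge", "no-recording-sign"})
SENSITIVE_CONTEXTS: FrozenSet[str] = frozenset({"restroom", "locker-room", "medical-office"})


@dataclass(frozen=True)
class Observation:
    badges: FrozenSet[str]            # badges or labels detected in the scene
    predicted_context: Optional[str]  # scene label from the classifier, if any
    confidence: float                 # classifier confidence in [0, 1]


def capture_allowed(obs: Observation, min_confidence: float = 0.8) -> bool:
    """Return False when the device should block capturing in this context."""
    # Explicit markers (badges, labels) always block capture.
    if obs.badges & NO_CAPTURE_BADGES:
        return False
    # AI-recognized contexts only block capture when the classifier is confident.
    if obs.predicted_context in SENSITIVE_CONTEXTS and obs.confidence >= min_confidence:
        return False
    return True


if __name__ == "__main__":
    print(capture_allowed(Observation(frozenset(), "street", 0.95)))                # True
    print(capture_allowed(Observation(frozenset({"no-photo-badge"}), None, 0.0)))   # False
    print(capture_allowed(Observation(frozenset(), "restroom", 0.9)))               # False
    print(capture_allowed(Observation(frozenset(), "restroom", 0.5)))               # True
```

Whether a low-confidence prediction should fail open (allow capture, as in this sketch) or fail closed is a policy choice; a privacy-conservative deployment might invert it.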

2.2.2. Obfuscation-Dependent Methods

- Bystander-based obfuscation: In this category, bystanders avoid facial identification either by using technological solutions that hide or perturb their identifiable features, or by taking a physical action, such as asking somebody to stop capturing data or simply leaving a shared/public space. Our FacePET [25] wearable device falls into this category.

- Device-based obfuscation: In this group, third-party devices that the bystander does not own blur, or add noise (in the signal processing sense) to, the images they capture of the bystander to hide his/her identity. Depending on how the software on the capturing device performs the blurring, solutions in this category can be further classified into default obfuscation (every face in the image is blurred) [19], selective obfuscation (third-party device users select whom to obfuscate in the image) [20], or collaborative obfuscation (the third-party and bystander’s devices collaborate via wireless protocols [47] to allow a face to be blurred) [21]. A drawback of device-based obfuscation methods is that a bystander must trust a device that he/she does not control to protect his/her privacy.
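The three device-based variants differ mainly in who decides which faces get obfuscated. A minimal sketch, assuming the capturing device already has face detections labeled with IDs and, for the collaborative case, that bystanders’ devices can be matched to those IDs over some wireless protocol. The function names are hypothetical, and pixelation by region mean stands in for whatever blur the cited systems actually apply.

```python
from enum import Enum
from typing import Iterable, List, Set


class Mode(Enum):
    DEFAULT = "default"              # blur every detected face
    SELECTIVE = "selective"          # the camera owner chooses whom to blur
    COLLABORATIVE = "collaborative"  # blur faces whose owners' devices asked for it


def faces_to_blur(detected: Iterable[str], mode: Mode,
                  owner_selection: Iterable[str] = (),
                  bystander_requests: Iterable[str] = ()) -> Set[str]:
    """Pick which detected face IDs the capturing device will obfuscate."""
    faces = set(detected)
    if mode is Mode.DEFAULT:
        return faces
    if mode is Mode.SELECTIVE:
        return faces & set(owner_selection)
    if mode is Mode.COLLABORATIVE:
        # Requests for faces not in this image are simply ignored.
        return faces & set(bystander_requests)
    raise ValueError(f"unsupported mode: {mode}")


def pixelate(img: List[List[int]], x: int, y: int, w: int, h: int) -> None:
    """Replace a w-by-h grayscale region (top-left at x, y) with its mean, in place."""
    region = [img[r][c] for r in range(y, y + h) for c in range(x, x + w)]
    mean = sum(region) // len(region)
    for r in range(y, y + h):
        for c in range(x, x + w):
            img[r][c] = mean


if __name__ == "__main__":
    detected = ["face-a", "face-b", "face-c"]
    print(sorted(faces_to_blur(detected, Mode.DEFAULT)))
    print(sorted(faces_to_blur(detected, Mode.SELECTIVE, owner_selection=["face-b"])))
    print(sorted(faces_to_blur(detected, Mode.COLLABORATIVE,
                               bystander_requests=["face-c", "face-z"])))
    img = [[0, 10, 99], [20, 30, 99]]
    pixelate(img, 0, 0, 2, 2)
    print(img)  # [[15, 15, 99], [15, 15, 99]]
```

In every branch the decision runs on the third-party device, which is exactly the trust drawback of device-based obfuscation.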

3. The FacePET System

3.1. Adversarial Machine Learning Attacks on the Viola–Jones Algorithm

3.2. The Facial Privacy Enabled Technology (FacePET) System

- FacePET wearable: The FacePET wearable (as Figure 6 shows) consists of goggles with six strategically placed Light Emitting Diodes (LEDs), a Bluetooth Low Energy (BLE)-enabled microcontroller, and a power supply. When a bystander wears and activates the wearable, it emits green light that generates noise (in the signal processing sense) and confuses the Haar-like features used by the Viola–Jones algorithm. The BLE microcontroller allows the bystander to turn the lights on/off through a Graphical User Interface (GUI) implemented as a mobile application that runs on the bystander’s mobile phone.
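To make the idea of confusing Haar-like features concrete, the sketch below computes one two-rectangle Haar-like feature of the kind Viola–Jones uses [27] on a toy 4×4 grayscale patch via an integral image, then adds bright pixels where LED light near the eyes might appear. The pixel values, the LED placement, and the threshold of 800 for the single weak classifier are illustrative assumptions; the real detector learns many thresholds with AdaBoost and chains them in a cascade, and this is not FacePET’s code.

```python
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> Image:
    """Summed-area table: ii[y][x] is the sum of img over rows < y and columns < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Sum of the w-by-h rectangle with top-left corner (x, y), in four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def eye_cheek_feature(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Two-rectangle feature: lower half (upper cheeks) minus upper half (eyes).

    On a face the eye band is usually darker than the upper cheeks,
    so this value tends to be large and positive.
    """
    half = h // 2
    return rect_sum(ii, x, y + half, w, half) - rect_sum(ii, x, y, w, half)


def weak_classifier(value: int, threshold: int = 800) -> bool:
    """Hypothetical weak classifier: 'face-like' if the feature reaches the threshold."""
    return value >= threshold


face = [
    [50, 50, 50, 50],      # eye band: dark
    [50, 50, 50, 50],
    [200, 200, 200, 200],  # upper cheeks: bright
    [200, 200, 200, 200],
]

# Same patch with saturated "LED" pixels inside the eye band.
with_leds = [row[:] for row in face]
for r in (0, 1):
    for c in (1, 2):
        with_leds[r][c] = 255

clean = eye_cheek_feature(integral_image(face), 0, 0, 4, 4)
perturbed = eye_cheek_feature(integral_image(with_leds), 0, 0, 4, 4)
print(clean, weak_classifier(clean))          # 1200 True
print(perturbed, weak_classifier(perturbed))  # 380 False
```

Because the cascade discards a window as soon as any stage’s combined score falls short, perturbing enough of the early features can be sufficient for a face to go undetected.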

- FacePET mobile applications: We implemented two mobile applications for the FacePET system. The first, the Bystander’s mobile app, implements a GUI to turn the FacePET wearable on/off through commands sent over BLE. The Bystander’s mobile app also implements an Access Control List (ACL) of the third-party cameras that are authorized to disable the wearable and take photos. Different types of policies can govern when external parties may disable the wearable. For example, the Bystander’s mobile app can limit the number of times a specific third-party user can disable the wearable. Further privacy policies based on context (e.g., location) can also be implemented. The second app, the Third-party (stranger) mobile application, issues requests to disable the wearable and take photos of the bystander with the wearable’s lights off. In the current prototype, the Third-party (stranger) mobile application connects to the Bystander’s mobile app via Bluetooth [53]. Figure 7 presents screenshots of both mobile applications.
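The per-party limit and the location-based policy described above can be pictured as an ACL whose entries carry a quota and, optionally, a set of allowed locations. This is a hypothetical sketch: the class names, the string party IDs, and the location labels are assumptions, and the real Bystander’s mobile app may structure its policies differently. It only shows how such checks compose before the app would send the BLE command that turns the LEDs off.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Set


@dataclass
class AclEntry:
    max_disables: int                             # how many times this party may turn the LEDs off
    used: int = 0                                 # how many times it already has
    allowed_locations: Optional[Set[str]] = None  # None means "any location"


class BystanderAcl:
    """Hypothetical ACL of trusted third-party cameras."""

    def __init__(self) -> None:
        self._entries: Dict[str, AclEntry] = {}

    def authorize(self, party_id: str, max_disables: int,
                  allowed_locations: Optional[Iterable[str]] = None) -> None:
        locations = set(allowed_locations) if allowed_locations is not None else None
        self._entries[party_id] = AclEntry(max_disables, 0, locations)

    def revoke(self, party_id: str) -> None:
        self._entries.pop(party_id, None)

    def request_disable(self, party_id: str, location: str) -> bool:
        """Return True (and consume one use) only if every policy check passes."""
        entry = self._entries.get(party_id)
        if entry is None:
            return False  # not in the ACL at all
        if entry.allowed_locations is not None and location not in entry.allowed_locations:
            return False  # context (location) policy fails; quota is not consumed
        if entry.used >= entry.max_disables:
            return False  # quota exhausted
        entry.used += 1
        return True


if __name__ == "__main__":
    acl = BystanderAcl()
    acl.authorize("alice-phone", max_disables=2, allowed_locations={"campus"})
    print(acl.request_disable("alice-phone", "campus"))  # True
    print(acl.request_disable("alice-phone", "mall"))    # False
    print(acl.request_disable("alice-phone", "campus"))  # True
    print(acl.request_disable("alice-phone", "campus"))  # False
    print(acl.request_disable("bob-phone", "campus"))    # False
```

Checking the location before the quota means a request refused for context reasons does not use up one of the party’s allowed disables.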

- FacePET consent protocol: The FacePET consent protocol (as Figure 8 shows) provides a mechanism for building a list of trusted cameras (an ACL) in the bystander’s mobile application. In the current prototype, the consent protocol is implemented over Bluetooth.
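Figure 8 is not reproduced here, so the following is only a hypothetical sketch of a consent handshake of this kind. The Bluetooth link is replaced by direct function calls, and JSON strings stand in for whatever payloads the prototype actually exchanges. The message types (`consent_request`, `disable_request`, `photo_done`) and the `approve` callback, which models the bystander accepting or rejecting a request in the GUI, are assumptions.

```python
import json
from typing import Callable, Set


class Wearable:
    """Stand-in for the BLE goggles; only tracks whether the LEDs are lit."""

    def __init__(self) -> None:
        self.leds_on = True


class BystanderApp:
    def __init__(self, wearable: Wearable, approve: Callable[[str], bool]) -> None:
        self.wearable = wearable
        self.approve = approve          # models the bystander's yes/no in the GUI
        self.trusted: Set[str] = set()  # the ACL of trusted cameras

    def handle(self, raw: str) -> str:
        """Process one JSON message from a third-party app and return the reply."""
        msg = json.loads(raw)
        kind, party = msg.get("type"), msg.get("party")
        if kind == "consent_request":
            if self.approve(party):
                self.trusted.add(party)
                return json.dumps({"type": "consent_granted"})
            return json.dumps({"type": "consent_denied"})
        if kind == "disable_request":
            if party not in self.trusted:
                return json.dumps({"type": "not_trusted"})
            self.wearable.leds_on = False
            return json.dumps({"type": "leds_off"})
        if kind == "photo_done":
            # Re-enabling protection is always safe, so any party may trigger it.
            self.wearable.leds_on = True
            return json.dumps({"type": "leds_on"})
        return json.dumps({"type": "unknown_message"})


def send(app: BystanderApp, kind: str, party: str) -> str:
    """Hypothetical third-party side: send one request and return the reply type."""
    reply = app.handle(json.dumps({"type": kind, "party": party}))
    return json.loads(reply)["type"]


if __name__ == "__main__":
    goggles = Wearable()
    app = BystanderApp(goggles, approve=lambda party: party == "friend-cam")
    print(send(app, "disable_request", "friend-cam"))                   # not_trusted
    print(send(app, "consent_request", "friend-cam"))                   # consent_granted
    print(send(app, "disable_request", "friend-cam"), goggles.leds_on)  # leds_off False
    print(send(app, "photo_done", "friend-cam"), goggles.leds_on)       # leds_on True
    print(send(app, "consent_request", "stranger-cam"))                 # consent_denied
```

The sketch trusts the `party` string as given; a deployed protocol would also need to authenticate the third-party device so that another camera cannot reuse a trusted identifier.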

4. A User Evaluation on Perceptions of FacePET and Bystander-Based Facial Privacy Devices

4.1. Methodology

4.2. Study Results

- The current model is too big and draws attention.

- The model is not stylish and can obstruct vision.

- Some participants do not really take pictures or engage with the media market in such a manner.

- A person laughs and says, “Stupid glasses.”

- People would stare a lot.

- People would be confused at first or creeped out.

- People would ask why the user was wearing such a device.

- The device would only invite more people to take pictures of it.

4.3. Study Limitations

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Perez, A.J.; Zeadally, S.; Griffith, S. Bystanders’ privacy. IT Prof. 2017, 19, 61–65.

- Perez, A.J.; Zeadally, S. Privacy issues and solutions for consumer wearables. IT Prof. 2018, 20, 46–56.

- Truong, K.N.; Patel, S.N.; Summet, J.W.; Abowd, G.D. Preventing camera recording by designing a capture-resistant environment. In Proceedings of the International Conference on Ubiquitous Computing, Tokyo, Japan, 11–14 September 2005; pp. 73–86.

- Tiscareno, V.; Johnson, K.; Lawrence, C. Systems and Methods for Receiving Infrared Data with a Camera Designed to Detect Images Based on Visible Light. U.S. Patent 8848059, 30 September 2014.

- Wagstaff, J. Using Bluetooth to Disable Camera Phones. Available online: http://www.loosewireblog.com/2004/09/using_bluetooth.html (accessed on 21 September 2018).

- Kapadia, A.; Henderson, T.; Fielding, J.J.; Kotz, D. Virtual walls: Protecting digital privacy in pervasive environments. In Proceedings of the International Conference on Pervasive Computing, LNCS 4480, Toronto, ON, Canada, 13–16 May 2007; pp. 162–179.

- Blank, P.; Kirrane, S.; Spiekermann, S. Privacy-aware restricted areas for unmanned aerial systems. IEEE Secur. Priv. 2018, 16, 70–79.

- Steil, J.; Koelle, M.; Heuten, W.; Boll, S.; Bulling, A. Privaceye: Privacy-preserving head-mounted eye tracking using egocentric scene image and eye movement features. In Proceedings of the 11th ACM Symposium on Eye Tracking Research & Applications, 25–28 June 2019; pp. 1–10.

- Pidcock, S.; Smits, R.; Hengartner, U.; Goldberg, I. Notisense: An urban sensing notification system to improve bystander privacy. In Proceedings of the 2nd International Workshop on Sensing Applications on Mobile Phones (PhoneSense), Seattle, WA, USA, 12–15 June 2011; pp. 1–5.

- Yamada, T.; Gohshi, S.; Echizen, I. Use of invisible noise signals to prevent privacy invasion through face recognition from camera images. In Proceedings of the ACM Multimedia 2012 (ACM MM 2012), Nara, Japan, 29 October 2012; pp. 1315–1316.

- Yamada, T.; Gohshi, S.; Echizen, I. Privacy visor: Method based on light absorbing and reflecting properties for preventing face image detection. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Manchester, UK, 13–16 October 2013; pp. 1572–1577.

- Sharif, M.; Bhagavatula, S.; Bauer, L.; Reiter, M.K. Accessorize to a crime: Real and stealthy attacks on state-of-the-art face recognition. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security (CCS 2016), Vienna, Austria, 24–28 October 2016; pp. 1528–1540.

- ObscuraCam: Secure Smart Camera. Available online: https://guardianproject.info/apps/obscuracam/ (accessed on 30 September 2018).

- Aditya, P.; Sen, R.; Druschel, P.; Joon Oh, S.; Benenson, R.; Fritz, M.; Schiele, B.; Bhattacharjee, B.; Wu, T.T. I-pic: A platform for privacy-compliant image capture. In Proceedings of the 14th Annual International Conference on Mobile Systems, Applications, and Services (MobiSys), Singapore, 25–30 June 2016; pp. 249–261.

- Roesner, F.; Molnar, D.; Moshchuk, A.; Kohno, T.; Wang, H.J. World-driven access control for continuous sensing. In Proceedings of the 2014 ACM SIGSAC Conference on Computer and Communications Security (CCS 2014), Scottsdale, AZ, USA, 3–7 November 2014; pp. 1169–1181.

- Maganis, G.; Jung, J.; Kohno, T.; Sheth, A.; Wetherall, D. Sensor Tricorder: What does that sensor know about me? In Proceedings of the 12th Workshop on Mobile Computing Systems and Applications (HotMobile ‘11), Phoenix, AZ, USA, 1–3 March 2011; pp. 98–103.

- Templeman, R.; Korayem, M.; Crandall, D.J.; Kapadia, A. PlaceAvoider: Steering First-Person Cameras away from Sensitive Spaces. In Proceedings of the 2014 Network and Distributed System Security (NDSS) Symposium, San Diego, CA, USA, 23–26 February 2014; pp. 23–26.

- AVG Reveals Invisibility Glasses at Pepcom Barcelona. Available online: http://now.avg.com/avg-reveals-invisibility-glasses-at-pepcom-barcelona (accessed on 7 July 2020).

- Frome, A.; Cheung, G.; Abdulkader, A.; Zennaro, M.; Wu, B.; Bissacco, A.; Adam, H.; Neven, H.; Vincent, L. Large-scale privacy protection in Google Street View. In Proceedings of the 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2373–2380.

- Li, A.; Li, Q.; Gao, W. Privacycamera: Cooperative privacy-aware photographing with mobile phones. In Proceedings of the 13th Annual IEEE International Conference on Sensing, Communication, and Networking (SECON), London, UK, 27–30 June 2016; pp. 1–9.

- Schiff, J.; Meingast, M.; Mulligan, D.K.; Sastry, S.; Goldberg, K. Respectful cameras: Detecting visual markers in real-time to address privacy concerns. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 65–89.

- Ra, M.R.; Lee, S.; Miluzzo, E.; Zavesky, E. Do not capture: Automated obscurity for pervasive imaging. IEEE Internet Comput. 2017, 21, 82–87.

- Ashok, A.; Nguyen, V.; Gruteser, M.; Mandayam, N.; Yuan, W.; Dana, K. Do not share! Invisible light beacons for signaling preferences to privacy-respecting cameras. In Proceedings of the 1st ACM MobiCom Workshop on Visible Light Communication Systems, Maui, HI, USA, 7–11 September 2014; pp. 39–44.

- Ye, T.; Moynagh, B.; Albatal, R.; Gurrin, C. Negative face blurring: A privacy-by-design approach to visual lifelogging with google glass. In Proceedings of the 23rd ACM International Conference on Information and Knowledge Management, Shanghai, China, 3–7 November 2014; pp. 2036–2038.

- Perez, A.J.; Zeadally, S.; Matos Garcia, L.Y.; Mouloud, J.A.; Griffith, S. FacePET: Enhancing bystanders’ facial privacy with smart wearables/internet of things. Electronics 2018, 7, 379.

- Face Detection Using Haar Cascades. Available online: https://docs.opencv.org/3.4.2/d7/d8b/tutorial_py_face_detection.html (accessed on 7 July 2020).

- Viola, P.; Jones, M. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Office of the Privacy Commissioner of Canada. Consent and Privacy a Discussion Paper Exploring Potential Enhancements to Consent Under the Personal Information Protection and Electronic Documents Act. Available online: https://www.priv.gc.ca/en/opc-actions-and-decisions/research/explore-privacy-research/2016/consent_201605 (accessed on 9 September 2020).

- United States the White House Office. National Strategy for Trusted Identities in Cyberspace: Enhancing Online Choice, Efficiency, Security, and Privacy. Available online: https://www.hsdl.org/?collection&id=4 (accessed on 9 September 2020).

- Horizon 2020 Horizon Programme of the European Union. Complete Guide to GDPR Compliance. Available online: https://gdpr.eu/ (accessed on 9 September 2020).

- Palen, L.; Salzman, M.; Youngs, E. Going wireless: Behavior & practice of new mobile phone users. In Proceedings of the 2000 ACM Conference on Computer Supported Cooperative Work (CSCW’00), Philadelphia, PA, USA, 2–6 December 2000; pp. 201–210.

- Denning, T.; Dehlawi, Z.; Kohno, T. In situ with bystanders of augmented reality glasses: Perspectives on recording and privacy-mediating technologies. In Proceedings of the 2014 SIGCHI Conference on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; pp. 2377–2386.

- Motti, V.G.; Caine, K. Users’ privacy concerns about wearables. In Proceedings of the International Conference on Financial Cryptography and Data Security, San Juan, Puerto Rico, 30 January 2015; pp. 231–244.

- Hoyle, R.; Templeman, R.; Armes, S.; Anthony, D.; Crandall, D.; Kapadia, A. Privacy behaviors of lifeloggers using wearable cameras. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Seattle, WA, USA, 13–17 September 2014; pp. 571–582.

- Hoyle, R.; Stark, L.; Ismail, Q.; Crandall, D.; Kapadia, A.; Anthony, D. Privacy norms and preferences for photos Posted Online. ACM Trans. Comput. Hum. Interact. (TOCHI) 2020, 27, 1–27.

- Zhang, S.A.; Feng, Y.; Das, A.; Bauer, L.; Cranor, L.; Sadeh, N. Understanding people’s privacy attitudes towards video analytics technologies. In Proceedings of the FTC PrivacyCon 2020, Washington, DC, USA, 21 July 2020; pp. 1–18.

- Hatuka, T.; Toch, E. Being visible in public space: The normalisation of asymmetrical visibility. Urban Stud. 2017, 54, 984–998.

- Wang, Y.; Xia, H.; Yao, Y.; Huang, Y. Flying eyes and hidden controllers: A qualitative study of people’s privacy perceptions of civilian drones in the US. In Proceedings on Privacy Enhancing Technologies, Darmstadt, Germany, 16–22 July 2016; pp. 172–190.

- Chang, V.; Chundury, P.; Chetty, M. Spiders in the sky: User perceptions of drones, privacy, and security. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 6–11 May 2017; pp. 6765–6776.

- Ahmad, I.; Farzan, R.; Kapadia, A.; Lee, A.J. Tangible privacy: Towards user-centric sensor designs for bystander privacy. Proc. ACM Hum. Comput. Interact. 2020, 4, 116.

- Nielsen, J. Usability Engineering, 1st ed.; Academic Press: Cambridge, MA, USA, 1993; ISBN 978-0125184052.

- Zeadally, S.; Khan, S.; Chilamkurti, N. Energy-efficient networking: Past, present, and future. J. Supercomput. 2012, 62, 1093–1118.

- Jamil, F.; Kim, D.H. Improving accuracy of the alpha–beta filter algorithm using an ANN-based learning mechanism in indoor navigation system. Sensors 2019, 19, 3946.

- Ahmad, S.; Kim, D.-H. Toward accurate position estimation using learning to prediction algorithm in indoor navigation. Sensors 2020, 20, 4410.

- Jarvis, J. Public Parts: How Sharing in the Digital Age Improves the Way We Work and Live, 1st ed.; Simon & Schuster: New York, NY, USA, 2011; pp. 1–272. ISBN 978-1451636000.

- Jamil, F.; Ahmad, S.; Iqbal, N.; Kim, D.H. Towards a remote monitoring of patient vital signs based on IoT-based blockchain integrity management platforms in smart hospitals. Sensors 2020, 20, 2195.

- Iqbal, M.A.; Amin, R.; Kim, D. Adaptive thermal-aware routing protocol for wireless body area network. Electronics 2019, 8, 47.

- Dalvi, N.; Domingos, P.; Sanghai, S.; Verma, D. Adversarial classification. In Proceedings of the 10th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 22–25 August 2004; pp. 99–108.

- Biggio, B.; Roli, F. Wild patterns: Ten years after the rise of adversarial machine learning. Pattern Recognit. 2018, 84, 317–331.

- Li, H.; Lin, Z.; Shen, X.; Brandt, J.; Hua, G. A convolutional neural network cascade for face detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–22 June 2015; pp. 5325–5334.

- Bose, A.J.; Aarabi, P. Adversarial attacks on face detectors using neural net based constrained optimization. In Proceedings of the IEEE 20th International Workshop on Multimedia Signal Processing (MMSP), Vancouver, BC, Canada, 29–31 August 2018; pp. 1–6.

- Goswami, G.; Ratha, N.; Agarwal, A.; Singh, R.; Vatsa, M. Unravelling robustness of deep learning based face recognition against adversarial attacks. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence (AAAI-18), New Orleans, LA, USA, 2–7 February 2018; p. 6829.

- Zeadally, S.; Siddiqui, F.; Baig, Z. 25 years of bluetooth technology. Future Internet 2019, 11, 194.

| Reference | Research Focus | Approach | Comments |
|---|---|---|---|
| Palen et al. [31] | Mobile phones in shared spaces | 19 new mobile phone users tracked for six weeks. Voice mail diaries, interviews, and calling behavior data collected for four months | Users tended to revise their initial perceptions of social appropriateness. Highlighted a conflict of spaces (physical vs. virtual) |
| Denning et al. [32] | Augmented Reality (AR) glasses | 12 field sessions in which 31 bystanders were interviewed about their reactions to a co-located AR device | Participants identified factors that make recording more or less acceptable, and expressed interest in being asked for consent before being recorded and in record-blocking devices |
| Motti et al. [33] | Wearable devices including armbands, smart watches, earpieces, head bands, headphones, and smart glasses | Observational study of online comments posted by wearable users; a total of 72 privacy comments analyzed | Identified 13 user concerns about wearable privacy related to the type of data and how the device collects, stores, processes, and shares data. Concerns depend on the type and design of the device |
| Hoyle et al. [34] | Lifelogging with wearable camera devices | In situ user study in which 36 participants wore a lifelogging device for a week, answered questionnaires on the photos captured, and participated in an exit interview | Users preferred to manage privacy through in situ physical control of image collection (rather than afterwards); context determines sensitivity; users were concerned about bystanders’ privacy, although bystanders expressed almost no opposition or concerns during the study |
| Hoyle et al. [35] | Privacy perceptions of online photos | Survey deployed through Amazon Mechanical Turk (mTurk) with 279 respondents, using 60 photos showing 10 different contextual conditions | Respondents shared common expectations about the privacy norms of online images. Norms are socially contingent and multidimensional. Social contexts and sharing can affect the social meaning of privacy |
| Zhang et al. [36] | Privacy attitudes toward video analytics technologies | 10-day longitudinal in situ study involving 123 participants and 2328 deployment scenarios | Privacy preferences vary with a number of factors (context). Some contexts make people feel uncomfortable. People have little awareness of the contexts where video analytics can be deployed |
| Hatuka et al. [37] | Smartphone users’ perceptions of the contemporary meaning of public/private spaces | Correlational study with 138 participants who took surveys and were observed by researchers for three months. Participants divided into two groups: basic phone users and advanced smartphone users | Differences between public and private spaces may be blurred and dynamically redefined by the use of technology |
| Wang et al. [38] | Civilian use of drones/Unmanned Aerial Vehicles (UAVs) | 16 semi-structured interviews examining people’s perceptions of drones and their usage under five specific scenarios. Participants were shown a real drone and videos about its capabilities before the interview | Participants differed in the meaning they gave to public/private spaces. Participants highlighted inconspicuous recording and drone pilots being inaccessible for privacy requests as concerns, and some participants expected consent to be asked before being recorded by drones |
| Chang et al. [39] | Drones | Laboratory study with 20 participants using real and simulated drones to elicit user perceptions about drone security and privacy. The study also used surveys, interviews, and drone piloting exercises | Drone design affects privacy and raises security concerns. Recommended geo-fencing to address privacy concerns, designated fly-zones/“highways” for drones, and auditory and wind cues to inform bystanders of drone usage |
| Steil et al. [8] | User evaluation of a privacy-preserving device that blocks a head-mounted camera | Semi-structured one-to-one interviews with 12 participants to evaluate an eye gesture-activated first-person camera shutter device controlled by Artificial Intelligence (AI). 17 participants annotated video datasets for training data | Eye tracking can be used to handle bystanders’ privacy, as the camera activates when the person fixes their gaze. Non-invasive for the user. Participants generally did not perceive eye tracking as a threat to privacy. Privacy sensitivity varies widely among people, which affects the definition of privacy |
| Aditya et al. [14] | Personal expectations and desires for privacy in photos when photographed as a bystander | Survey deployed online via Google Forms with 227 respondents from 32 different countries | Privacy concerns and privacy actions varied based on context (i.e., location, social situations) |
| Ahmad et al. [40] | People’s perceptions of and behaviors around current IoT devices as bystanders | Interview study with 19 participants | Participants expressed concerns about the uncertainty of IoT devices’ state (whether they were recording or not) and their purpose when being bystanders around these devices |
| Our approach (FacePET) | User study of a bystander-based wearable (smart glasses) that attacks facial detection algorithms | 21 participants took a survey on bystanders’ privacy, wore the FacePET device, saw the results of facial privacy protection on their faces, and answered questions on the usability of FacePET | Most participants would use FacePET or a bystander-based facial privacy device. Most participants agreed that facial privacy features would improve the use and adoption of smart glasses |

| Category | Subcategory | Method |
|---|---|---|
| Location methods (disable or ban the use of capturing devices) | Disabling devices–sensor saturation | BlindSpot [3] |
| | Disabling devices–broadcasting of commands | Using infrared to disable devices [4] |
| | | Using Bluetooth to disable devices [5] |
| | Disabling devices–context-based | Virtual Walls [6] |
| | | Privacy-restricted areas [7] |
| | | World-driven access control [15] |
| | | Sensor Tricorder [16] |
| | | PlaceAvoider [17] |
| | | PrivacEye [8] |
| Obfuscation methods (hide the identity of bystanders’ faces to avoid identification) | Bystander-based | NotiSense [9] |
| | | PrivacyVisor [10] |
| | | PrivacyVisor III [11] |
| | | Perturbed eyeglass frames [12] |
| | | Invisibility Glasses [18] |
| | Device-based–default | Privacy Google StreetView [19] |
| | Device-based–selective | ObscuraCam [13] |
| | | Respectful cameras [21] |
| | | Invisible Light Beacons [23] |
| | | Negative face blurring [24] |
| | Device-based–collaborative | I-pic [14] |
| | | PrivacyCamera [20] |
| | | Do Not Capture [22] |

| Participants’ Characteristics | Category | Number of Participants |
|---|---|---|
| Age group | Less than 20 years | 1 |
| | 20–30 years | 17 |
| | 30–40 years | 3 |
| Gender | Male | 14 |
| | Female | 7 |
| Educational level | High school | 1 |
| | Some college credits | 14 |
| | Associate’s degree | 1 |
| | Bachelor’s degree | 4 |
| | Master’s degree | 1 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Perez, A.J.; Zeadally, S.; Griffith, S.; Garcia, L.Y.M.; Mouloud, J.A. A User Study of a Wearable System to Enhance Bystanders’ Facial Privacy. IoT 2020, 1, 198–217. https://doi.org/10.3390/iot1020013
