Fractional Differential Texture Descriptors Based on the Machado Entropy for Image Splicing Detection

Abstract

1. Introduction

2. Fractional Entropy

3. Construction of Fractional Masks

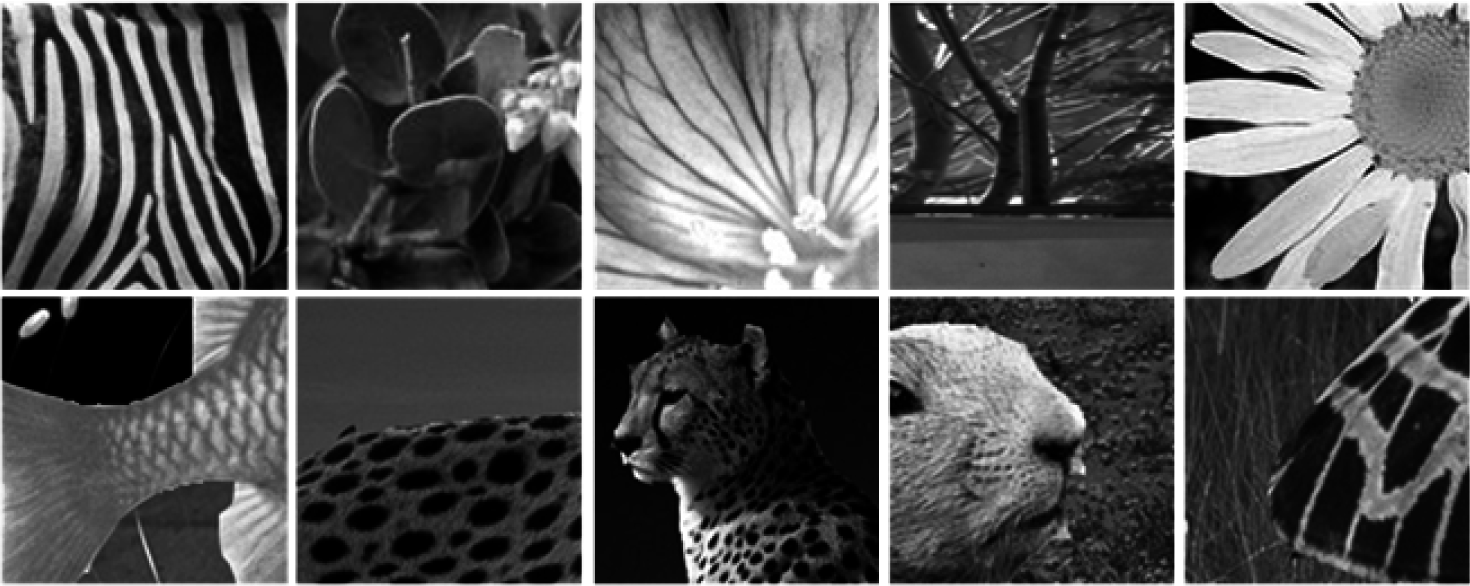

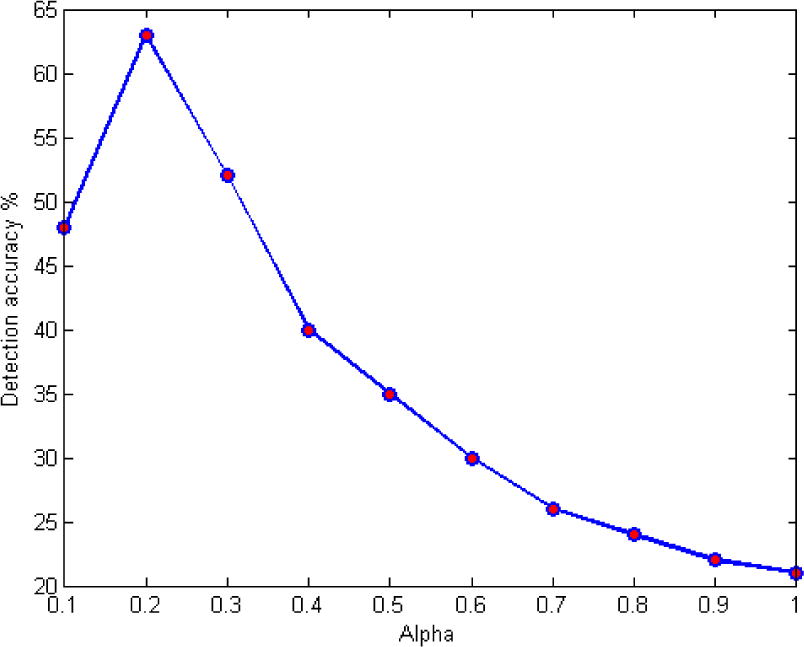

3.1. Texture Feature Extraction

Input:

- I: an input image
- α, µ: fractional parameters of the proposed masks

Output:

- T: texture features

1. Construct the 2D fractional mask coefficients in the following eight directions: 0°, 45°, 90°, 135°, 180°, 225°, 270°, and 315°.
2. Split the input image I into blocks whose size equals the fractional mask window size.
3. For each block, compute the output block by convolving each pixel of the block with the fractional masks in the eight directions.
4. For each output block, compute the gray-level co-occurrence matrix (GLCM) and derive from it the contrast, homogeneity, energy, and entropy features [25].
5. Save the texture feature vector T for all image blocks as the final texture features.
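The block splitting and the GLCM statistics in the steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the fractional mask filtering is left out because the mask coefficients are not reproduced here, and the function names, the 8-level quantization, the horizontal neighbour offset, the default `block_size`, the homogeneity formula, and the base-2 logarithm are all choices made for this example.

```python
import numpy as np

def glcm(block, levels=8, offset=(0, 1)):
    """Normalized gray-level co-occurrence matrix of one block.

    `levels` and `offset` are illustrative assumptions, not values from the paper.
    """
    block = np.asarray(block, dtype=float)
    lo, hi = block.min(), block.max()
    if hi > lo:
        # Quantize the block to integer gray levels 0 .. levels-1.
        q = np.floor((block - lo) / (hi - lo) * (levels - 1) + 0.5).astype(int)
    else:
        q = np.zeros(block.shape, dtype=int)
    dr, dc = offset
    rows, cols = q.shape
    # Keep only pixel pairs (r, c) and (r + dr, c + dc) that both lie inside the block.
    r0, r1 = max(0, -dr), min(rows, rows - dr)
    c0, c1 = max(0, -dc), min(cols, cols - dc)
    a = q[r0:r1, c0:c1]
    b = q[r0 + dr:r1 + dr, c0 + dc:c1 + dc]
    m = np.zeros((levels, levels))
    np.add.at(m, (a.ravel(), b.ravel()), 1)  # count co-occurring level pairs
    total = m.sum()
    return m / total if total else m

def glcm_features(p):
    """Contrast, homogeneity, energy, and entropy of a normalized GLCM."""
    i, j = np.indices(p.shape)
    contrast = float(np.sum((i - j) ** 2 * p))
    homogeneity = float(np.sum(p / (1.0 + np.abs(i - j))))  # assumed definition
    energy = float(np.sum(p ** 2))
    nz = p[p > 0]
    entropy = float(-np.sum(nz * np.log2(nz)))
    return [contrast, homogeneity, energy, entropy]

def texture_features(filtered_image, block_size=8):
    """Concatenate the four GLCM features of every non-overlapping block.

    `filtered_image` stands for the output of the mask convolution step,
    which this sketch does not perform.
    """
    image = np.asarray(filtered_image, dtype=float)
    rows, cols = image.shape
    feats = []
    for r in range(0, rows - block_size + 1, block_size):
        for c in range(0, cols - block_size + 1, block_size):
            block = image[r:r + block_size, c:c + block_size]
            feats.extend(glcm_features(glcm(block)))
    return np.array(feats)
```

Calling `texture_features` on a filtered image returns one flat vector with four entries per block, which plays the role of T in the algorithm.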

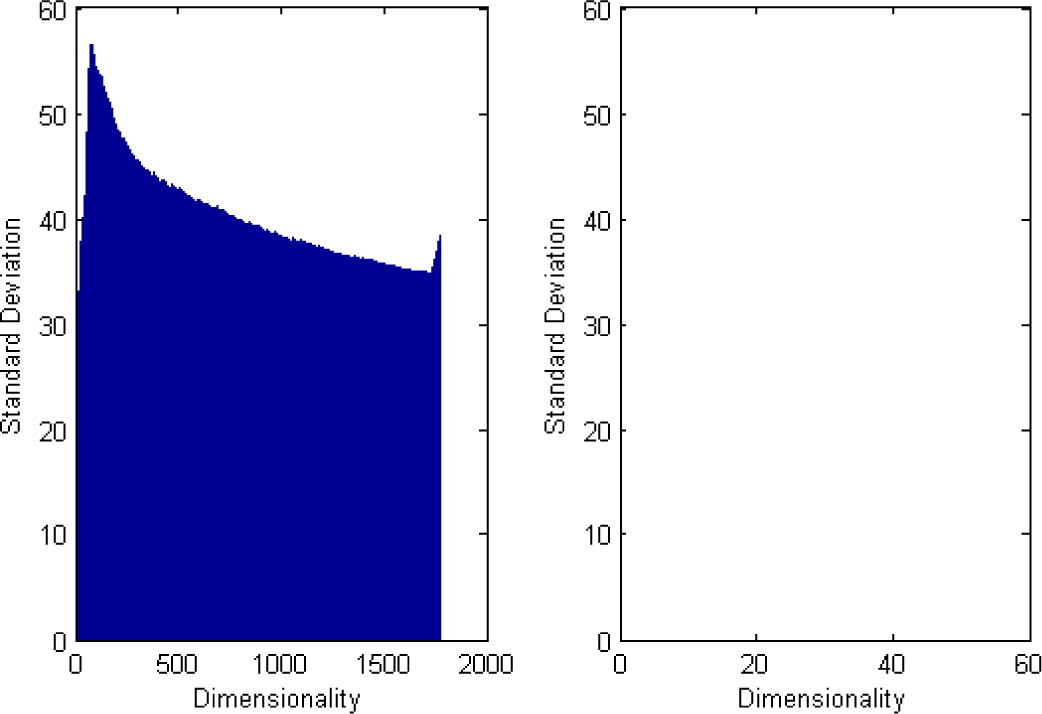

4. Dimension Reduction Method

5. Experimental Results and Discussion

5.1. Classification

- A radial basis function (RBF) is used as the kernel function.

- A grid search is applied to find the best values of the parameters c and γ, so that the SVM classifier can accurately predict unknown data.
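A grid search over c and γ for an RBF-kernel SVM could look like the sketch below. It assumes scikit-learn, whose `SVC` class is built on LIBSVM; the helper name `tune_rbf_svm`, the power-of-two grids, and the 5-fold cross-validation are assumptions for illustration and are not taken from the paper. The parameter c corresponds to `C` in scikit-learn.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

def tune_rbf_svm(X, y, folds=5):
    """Cross-validated grid search over C and gamma for an RBF-kernel SVM.

    The grids are illustrative assumptions, not the values used in the paper.
    """
    param_grid = {
        "C": [2.0 ** k for k in range(-5, 16, 2)],
        "gamma": [2.0 ** k for k in range(-15, 4, 2)],
    }
    search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=folds, scoring="accuracy")
    search.fit(X, y)
    return search

if __name__ == "__main__":
    # Synthetic two-class data, used only to show the call pattern.
    rng = np.random.default_rng(0)
    X = np.vstack([rng.normal(-2.0, 1.0, (40, 2)), rng.normal(2.0, 1.0, (40, 2))])
    y = np.array([0] * 40 + [1] * 40)
    result = tune_rbf_svm(X, y)
    print(result.best_params_, result.best_score_)
```

The fitted object exposes the selected pair in `best_params_` and a model refitted on all training data in `best_estimator_`, which can then be applied to unseen feature vectors.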

5.2. Comparison with Other Methods

6. Conclusions

Acknowledgments

Author Contributions

Conflict of Interests

References

1. He, Z.; Sun, W.; Lu, W.; Lu, H. Digital image splicing detection based on approximate run length. Pattern Recognit. Lett. 2011, 32, 1591–1597.

2. Wang, W.; Dong, J.; Tan, T. A survey of passive image tampering detection. In Digital Watermarking, Proceedings of the 8th International Workshop on Digital Watermarking (IWDW 2009), University of Surrey, Guildford, Surrey, UK, 24–26 August 2009; Ho, A.T.S., Shi, Y.Q., Kim, H.J., Barni, M., Eds.; Springer: Berlin, Germany, 2009; pp. 308–322.

3. Zhao, X.; Li, J.; Li, S.; Wang, S. Detecting digital image splicing in chroma spaces. In Digital Watermarking, Proceedings of the 9th International Workshop on Digital Watermarking (IWDW 2010), Seoul, Korea, 1–3 October 2010; Kim, H.J., Shi, Y.Q., Barni, M., Eds.; Springer: Berlin, Germany, 2011; pp. 12–22.

4. Shi, Y.Q.; Chen, C.; Chen, W. A natural image model approach to splicing detection. In Proceedings of the 9th Workshop on Multimedia & Security, Dallas, TX, USA, 20–21 September 2007; pp. 51–62.

5. Ng, T.-T.; Chang, S.-F.; Lin, C.-Y.; Sun, Q. Passive-blind image forensics. Multimed. Secur. Technol. Digital Rights 2006, 15, 383–412.

6. Zhang, J.; Zhao, Y.; Su, Y. A new approach merging Markov and DCT features for image splicing detection. In Proceedings of the IEEE International Conference on Intelligent Computing and Intelligent Systems, Shanghai, China, 20–22 November 2009; pp. 390–394.

7. Shi, Y.Q.; Chen, C.; Chen, W. A Markov process based approach to effective attacking JPEG steganography. In Information Hiding; Camenisch, J.L., Collberg, C.S., Johnson, N.F., Sallee, P., Eds.; Springer: Berlin, Germany, 2007; pp. 249–264.

8. Moghaddasi, Z.; Jalab, H.A.; Md Noor, R.; Aghabozorgi, S. Improving RLRN image splicing detection with the use of PCA and Kernel PCA. Sci. World J. 2014, 2014.

9. He, Z.; Lu, W.; Sun, W. Improved run length based detection of digital image splicing. In Digital-Forensics and Watermarking, Proceedings of the 10th International Workshop, IWDW 2011, Atlantic City, NJ, USA, 23–26 October 2011; Shi, Y.Q., Kim, H.J., Perez-Gonzalez, F., Eds.; Springer: Berlin, Germany, 2012; pp. 349–360.

10. Podlubny, I. Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications; Mathematics in Science and Engineering; Academic Press: Waltham, MA, USA, 1999.

11. Hilfer, R.; Butzer, P.; Westphal, U.; Douglas, J.; Schneider, W.; Zaslavsky, G.; Nonnemacher, T.; Blumen, A.; West, B. Applications of Fractional Calculus in Physics; World Scientific: Singapore, 2000.

12. Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J. Theory and Applications of Fractional Differential Equations; Elsevier Science Limited: Oxfordshire, UK, 2006; Volume 204.

13. Jalab, H.A.; Ibrahim, R.W. Fractional Conway polynomials for image denoising with regularized fractional power parameters. J. Math. Imaging Vis. 2015, 51, 442–450.

14. Jalab, H.A. Regularized fractional power parameters for image denoising based on convex solution of fractional heat equation. Abst. Appl. Anal. 2014, 2014.

15. Jalab, H.A.; Ibrahim, R.W. Fractional Alexander polynomials for image denoising. Signal Process. 2015, 107, 340–354.

16. Jalab, H.A.; Ibrahim, R.W. Denoising algorithm based on generalized fractional integral operator with two parameters. Discrete Dyn. Nat. Soc. 2012, 2012.

17. Jalab, H.A.; Ibrahim, R.W. Texture enhancement based on the Savitzky-Golay fractional differential operator. Math. Probl. Eng. 2013, 2013.

18. Jalab, H.A.; Ibrahim, R.W. Texture feature extraction based on fractional mask convolution with Cesáro means for content-based image retrieval. In PRICAI 2012: Trends in Artificial Intelligence; Springer: Berlin, Germany, 2012; pp. 170–179.

19. Tsallis, C. Introduction to Nonextensive Statistical Mechanics; Springer: Berlin, Germany, 2009.

20. Machado, J.T. Entropy analysis of integer and fractional dynamical systems. Nonlinear Dyn. 2010, 62, 371–378.

21. Ibrahim, R.W. The fractional differential polynomial neural network for approximation of functions. Entropy 2013, 15, 4188–4198.

22. Mathai, A.M.; Haubold, H.J. On a generalized entropy measure leading to the pathway model with a preliminary application to solar neutrino data. Entropy 2013, 15, 4011–4025.

23. Machado, J.T. Fractional order generalized information. Entropy 2014, 16, 2350–2361.

24. Ibrahim, R.W. On generalized Srivastava–Owa fractional operators in the unit disk. Adv. Differ. Equ. 2011, 2011, 1–10.

25. Selvarajah, S.; Kodituwakku, S. Analysis and comparison of texture features for content based image retrieval. Int. J. Latest Trends Comput. 2011, 2, 108–113.

26. Anusudha, K.; Koshie, S.A.; Ganesh, S.S.; Mohanaprasad, K. Image splicing detection involving moment-based feature extraction and classification using artificial neural networks. Int. J. Signal Image Process. 2010, 1, 9–13.

27. Ng, T.-T.; Chang, S.-F. A Data Set of Authentic and Spliced Image Blocks; ADVENT Technical Report, # 203-2004-3; Columbia University: New York, NY, USA, 2004.

28. Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2.

29. He, Z.; Lu, W.; Sun, W.; Huang, J. Digital image splicing detection based on Markov features in DCT and DWT domain. Pattern Recognit. 2012, 45, 4292–4299.

30. Fu, D.; Shi, Y.Q.; Su, W. Detection of image splicing based on Hilbert–Huang transform and moments of characteristic functions with wavelet decomposition. In Digital Watermarking, Proceedings of the 5th International Workshop on Digital Watermarking (IWDW 2006), Jeju Island, Korea, 8–10 November 2006; Shi, Y.Q., Jeon, B., Eds.; Springer: Berlin, Germany, 2006; pp. 177–187.

31. Dong, J.; Wang, W.; Tan, T.; Shi, Y.Q. Run-length and edge statistics based approach for image splicing detection. In Digital Watermarking, Proceedings of the 7th International Workshop on Digital Watermarking, Busan, Korea, 10–12 November 2008; Kim, H.J., Katzenbeisser, S., Ho, A.T.S., Eds.; Springer: Berlin, Germany, 2009; pp. 76–87.

| | Dimensionality | True positive (%) | True negative (%) | Accuracy (%) |
|---|---|---|---|---|
| Number of features | 1764 | 74.74 | 55.92 | 70.33 |

| | Dimension | True positive (%) | True negative (%) | Accuracy (%) |
|---|---|---|---|---|
| Features + Kernel PCA | 200 | 88.46 | 76.97 | 82.72 |
| | 150 | 88.46 | 84.87 | 86.67 |
| | 100 | 89.74 | 86.84 | 88.29 |
| | 90 | 91.03 | 88.82 | 89.92 |
| | 80 | 91.03 | 89.47 | 90.26 |
| | 70 | 88.46 | 90.13 | 89.30 |
| | 60 | 90.38 | 89.47 | 89.93 |
| | 50 | 89.74 | 89.47 | 89.61 |
| | 40 | 92.31 | 91.45 | 91.88 |
| | 30 | 91.67 | 90.13 | 90.91 |
| | 20 | 91.03 | 78.95 | 84.99 |
| | 10 | 100 | 0 | 50.65 |
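The "Features + Kernel PCA" rows reduce the 1764-dimensional feature vector to between 10 and 200 components. A minimal NumPy sketch of kernel PCA on a training set is given below for orientation. The RBF kernel, the `gamma` value, and the function name `rbf_kernel_pca` are assumptions for illustration, since the kernel and its settings are not stated in this section, and the sketch only projects the training samples themselves.

```python
import numpy as np

def rbf_kernel_pca(X, n_components, gamma):
    """Project the rows of X onto the leading kernel principal components.

    Uses an RBF kernel exp(-gamma * ||x - y||^2); both the kernel and gamma
    are illustrative assumptions.
    """
    X = np.asarray(X, dtype=float)
    # Pairwise squared Euclidean distances, clipped to guard against round-off.
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * (X @ X.T), 0.0)
    K = np.exp(-gamma * d2)
    # Center the kernel matrix in feature space.
    n = K.shape[0]
    one = np.full((n, n), 1.0 / n)
    Kc = K - one @ K - K @ one + one @ K @ one
    # Eigendecomposition of the symmetric centered kernel, largest eigenvalues first.
    vals, vecs = np.linalg.eigh(Kc)
    order = np.argsort(vals)[::-1][:n_components]
    vals = np.maximum(vals[order], 0.0)
    vecs = vecs[:, order]
    # The projection of training sample i on component k is sqrt(lambda_k) * v_k[i].
    return vecs * np.sqrt(vals)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    features = rng.normal(size=(60, 1764))  # stand-in for real texture features
    reduced = rbf_kernel_pca(features, n_components=40, gamma=1.0 / 1764)
    print(reduced.shape)
```

Projecting unseen samples would additionally require evaluating the kernel between the new and the training samples and centering it with the training statistics, which this sketch omits.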

| Feature Extraction Methods | Dimensionality | TP (%) | TN (%) | Acc (%) |
|---|---|---|---|---|
| Expanded DCT Markov [29] | 100 | 89.92 | 90.21 | 90.07 |
| | 50 | 89.60 | 90.45 | 90.02 |
| DWT Markov [29] | 100 | 87.58 | 85.39 | 86.50 |
| | 50 | 86.71 | 85.70 | 86.21 |
| Expanded DCT Markov + DWT Markov [29] | 100 | 93.28 | 93.83 | 93.55 |
| | 50 | 92.28 | 93.13 | 93.55 |
| HHT + Moments of Characteristic Functions with Wavelet Decomposition [30] | 110 | 80.03 | 80.25 | 80.15 |
| | 78 | 73.91 | 76.49 | 75.23 |
| Run-length and edge statistics based model [31] | 163 | 83.23 | 85.53 | 84.36 |
| | 139 | 83.87 | 76.97 | 80.46 |
| Fractional features + Kernel PCA (Proposed) | 40 | 92.31 | 91.45 | 91.88 |

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Ibrahim, R.W.; Moghaddasi, Z.; Jalab, H.A.; Noor, R.M. Fractional Differential Texture Descriptors Based on the Machado Entropy for Image Splicing Detection. Entropy 2015, 17, 4775-4785. https://0-doi-org.brum.beds.ac.uk/10.3390/e17074775
