Information Geometry, Fluctuations, Non-Equilibrium Thermodynamics, and Geodesics in Complex Systems

Abstract

1. Introduction

2. Distances/Metrics

2.1. Distance between Two PDFs

2.1.1. Wootters’ Distance
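
A minimal sketch, assuming one-dimensional PDFs sampled on a uniform grid: Wootters' distance is taken here as the statistical angle D_W = arccos(∫ √(p1 p2) dx) between two PDFs p1 and p2. The overlap integral is evaluated by a Riemann sum and checked against the closed form for two Gaussians, where it equals the Bhattacharyya coefficient. The helper names, grid limits and resolution are illustrative assumptions, not taken from the article.

```python
import math
from functools import partial


def gaussian_pdf(x, mu, sigma):
    """Normal density N(mu, sigma^2) evaluated at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def wootters_distance(p1, p2, lo=-20.0, hi=20.0, n=8001):
    """Statistical angle arccos(∫ sqrt(p1 p2) dx), with the integral as a Riemann sum."""
    dx = (hi - lo) / (n - 1)
    xs = (lo + i * dx for i in range(n))
    overlap = sum(math.sqrt(p1(x) * p2(x)) for x in xs) * dx
    # Clamp so that rounding cannot push the overlap outside the domain of acos.
    return math.acos(min(1.0, max(-1.0, overlap)))


def wootters_gaussian(mu1, s1, mu2, s2):
    """Closed form for two Gaussians: the overlap is the Bhattacharyya coefficient."""
    v = s1 * s1 + s2 * s2
    bc = math.sqrt(2.0 * s1 * s2 / v) * math.exp(-((mu1 - mu2) ** 2) / (4.0 * v))
    return math.acos(min(1.0, bc))


# Hypothetical example: two unit-width Gaussians whose means differ by 1.
p = partial(gaussian_pdf, mu=0.0, sigma=1.0)
q = partial(gaussian_pdf, mu=1.0, sigma=1.0)
print(wootters_distance(p, q), wootters_gaussian(0.0, 1.0, 1.0, 1.0))
```

Because the angle is bounded by π/2 for non-negative PDFs, it saturates when the two PDFs barely overlap, unlike the divergences that follow.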

2.1.2. Kullback-Leibler (K-L) Divergence/Relative Entropy
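
As a hedged illustration, the relative entropy D(p1||p2) = ∫ p1 ln(p1/p2) dx is computed below both numerically on a grid and in closed form for Gaussians N(μ, σ²). Swapping the arguments gives a different value, which is why the K-L divergence is not a metric. The function names and grid parameters are hypothetical.

```python
import math
from functools import partial


def gaussian_pdf(x, mu, sigma):
    """Normal density N(mu, sigma^2) evaluated at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def kl_numeric(p1, p2, lo=-20.0, hi=20.0, n=8001):
    """D(p1||p2) = ∫ p1 ln(p1/p2) dx by a Riemann sum on a uniform grid.

    Assumes p2 > 0 wherever p1 > 0 on the grid (otherwise D is infinite)."""
    dx = (hi - lo) / (n - 1)
    total = 0.0
    for i in range(n):
        x = lo + i * dx
        a, b = p1(x), p2(x)
        if a > 0.0:  # points with p1 = 0 contribute nothing (0 ln 0 = 0)
            total += a * math.log(a / b)
    return total * dx


def kl_gaussian(mu1, s1, mu2, s2):
    """Closed form of D(N(mu1, s1^2) || N(mu2, s2^2))."""
    return math.log(s2 / s1) + (s1 ** 2 + (mu1 - mu2) ** 2) / (2.0 * s2 ** 2) - 0.5


p = partial(gaussian_pdf, mu=0.0, sigma=1.0)
q = partial(gaussian_pdf, mu=0.0, sigma=2.0)
print(kl_numeric(p, q), kl_gaussian(0.0, 1.0, 0.0, 2.0), kl_gaussian(0.0, 2.0, 0.0, 1.0))
```

For N(0, 1) and N(0, 2²) the closed form gives ln 2 - 3/8 ≈ 0.318 in one direction and 3/2 - ln 2 ≈ 0.807 in the other.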

2.1.3. Jensen Divergence
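
Definitions of the Jensen divergence vary. This sketch assumes the symmetrized K-L form J(p1, p2) = [D(p1||p2) + D(p2||p1)]/2, evaluated in closed form for two Gaussians; the Jensen-Shannon variant, which compares each PDF with the mixture (p1 + p2)/2, is a different quantity. The names are hypothetical.

```python
import math


def kl_gaussian(mu1, s1, mu2, s2):
    """Closed form of D(N(mu1, s1^2) || N(mu2, s2^2))."""
    return math.log(s2 / s1) + (s1 ** 2 + (mu1 - mu2) ** 2) / (2.0 * s2 ** 2) - 0.5


def jensen_symmetric(mu1, s1, mu2, s2):
    """Assumed symmetrized form J = [D(p1||p2) + D(p2||p1)] / 2 for two Gaussians."""
    return 0.5 * (kl_gaussian(mu1, s1, mu2, s2) + kl_gaussian(mu2, s2, mu1, s1))


print(jensen_symmetric(0.0, 1.0, 1.0, 1.0), jensen_symmetric(0.0, 1.0, 0.0, 2.0))
```

Adding the two closed forms, the logarithms cancel and J = [(σ1² - σ2²)² + (σ1² + σ2²)(μ1 - μ2)²]/(4σ1²σ2²); for equal widths σ = 1 and unit separation this gives J = 1/2, and for μ1 = μ2 with σ1 = 1, σ2 = 2 it gives 9/16.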

2.1.4. Euclidean Norm
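
A minimal sketch, treating the PDFs as elements of L²: the Euclidean norm ||p1 - p2|| = (∫ (p1 - p2)² dx)^(1/2) is computed on a grid and compared with the Gaussian closed form built from ∫ N(μ1, σ1²) N(μ2, σ2²) dx = exp[-(μ1 - μ2)²/(2(σ1² + σ2²))]/√(2π(σ1² + σ2²)). The names and grid are illustrative assumptions.

```python
import math
from functools import partial


def gaussian_pdf(x, mu, sigma):
    """Normal density N(mu, sigma^2) evaluated at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def euclidean_norm(p1, p2, lo=-20.0, hi=20.0, n=8001):
    """L2 distance sqrt(∫ (p1 - p2)^2 dx) by a Riemann sum on a uniform grid."""
    dx = (hi - lo) / (n - 1)
    xs = (lo + i * dx for i in range(n))
    return math.sqrt(sum((p1(x) - p2(x)) ** 2 for x in xs) * dx)


def euclidean_gaussian(mu1, s1, mu2, s2):
    """Closed form: expand (p1 - p2)^2 and use the Gaussian product integral."""
    def cross(m1, a, m2, b):
        v = a * a + b * b
        return math.exp(-((m1 - m2) ** 2) / (2.0 * v)) / math.sqrt(2.0 * math.pi * v)

    sq = cross(mu1, s1, mu1, s1) + cross(mu2, s2, mu2, s2) - 2.0 * cross(mu1, s1, mu2, s2)
    return math.sqrt(max(0.0, sq))  # guard against tiny negative rounding


p = partial(gaussian_pdf, mu=0.0, sigma=1.0)
q = partial(gaussian_pdf, mu=1.0, sigma=2.0)
print(euclidean_norm(p, q), euclidean_gaussian(0.0, 1.0, 1.0, 2.0))
```

Unlike the K-L divergence, this norm is symmetric and satisfies the triangle inequality, but it depends on the amplitude of the PDFs rather than on their ratio.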

2.2. Distance along the Path

2.2.1. Information Rate

2.2.2. Information Length
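
As a sketch under stated assumptions, the information rate Γ(t) = √E with E = ∫ (∂p/∂t)²/p dx, and the information length L(t) = ∫₀ᵗ Γ(t₁) dt₁, can be evaluated for a Gaussian PDF with mean μ(t) and variance σ²(t), for which E = (dμ/dt)²/σ² + (dσ²/dt)²/(2σ⁴). The dynamics chosen here for illustration is a plain Ornstein-Uhlenbeck process dx/dt = -γx + ξ with ⟨ξ(t)ξ(t')⟩ = 2Dδ(t - t'), started from a Gaussian; the function names and quadrature resolution are hypothetical.

```python
import math


def ou_moments(t, mu0, var0, gamma, D):
    """Mean, variance and their time derivatives for dx/dt = -gamma x + xi,
    <xi(t) xi(t')> = 2 D delta(t - t'), starting from N(mu0, var0)."""
    e2 = math.exp(-2.0 * gamma * t)
    mu = mu0 * math.exp(-gamma * t)
    var = D / gamma + (var0 - D / gamma) * e2
    dmu = -gamma * mu
    dvar = -2.0 * gamma * (var0 - D / gamma) * e2
    return mu, var, dmu, dvar


def information_rate(t, mu0, var0, gamma, D):
    """Gamma(t) = sqrt(E) with E = mu'^2 / var + var'^2 / (2 var^2) for a Gaussian."""
    _, var, dmu, dvar = ou_moments(t, mu0, var0, gamma, D)
    return math.sqrt(dmu * dmu / var + dvar * dvar / (2.0 * var * var))


def information_length(T, mu0, var0, gamma, D, n=20000):
    """L(T) = ∫_0^T Gamma(t) dt by the trapezoidal rule with n intervals."""
    dt = T / n
    g = [information_rate(i * dt, mu0, var0, gamma, D) for i in range(n + 1)]
    return dt * (sum(g) - 0.5 * (g[0] + g[-1]))


print(information_length(20.0, 3.0, 1.0, 1.0, 1.0))  # mean relaxes, width fixed
print(information_length(20.0, 0.0, 4.0, 1.0, 1.0))  # width relaxes, mean fixed
```

Two limits give easy checks. With σ0² = D/γ only the mean moves, Γ = γ|μ|/σ and L(∞) = |μ0|/σ. With μ0 = 0 only the variance moves, Γ = |d ln σ²/dt|/√2, and L(∞) = |ln(σ0²γ/D)|/√2, which is ln 4/√2 ≈ 0.980 for σ0² = 4 and γ = D = 1.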

3. Model and Comparison of Metrics

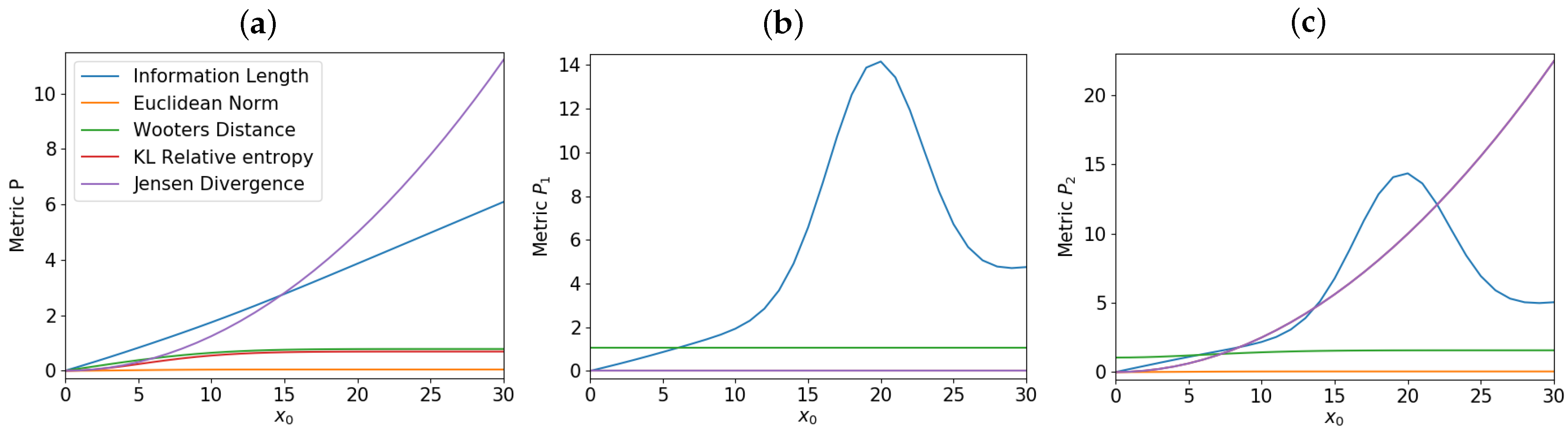

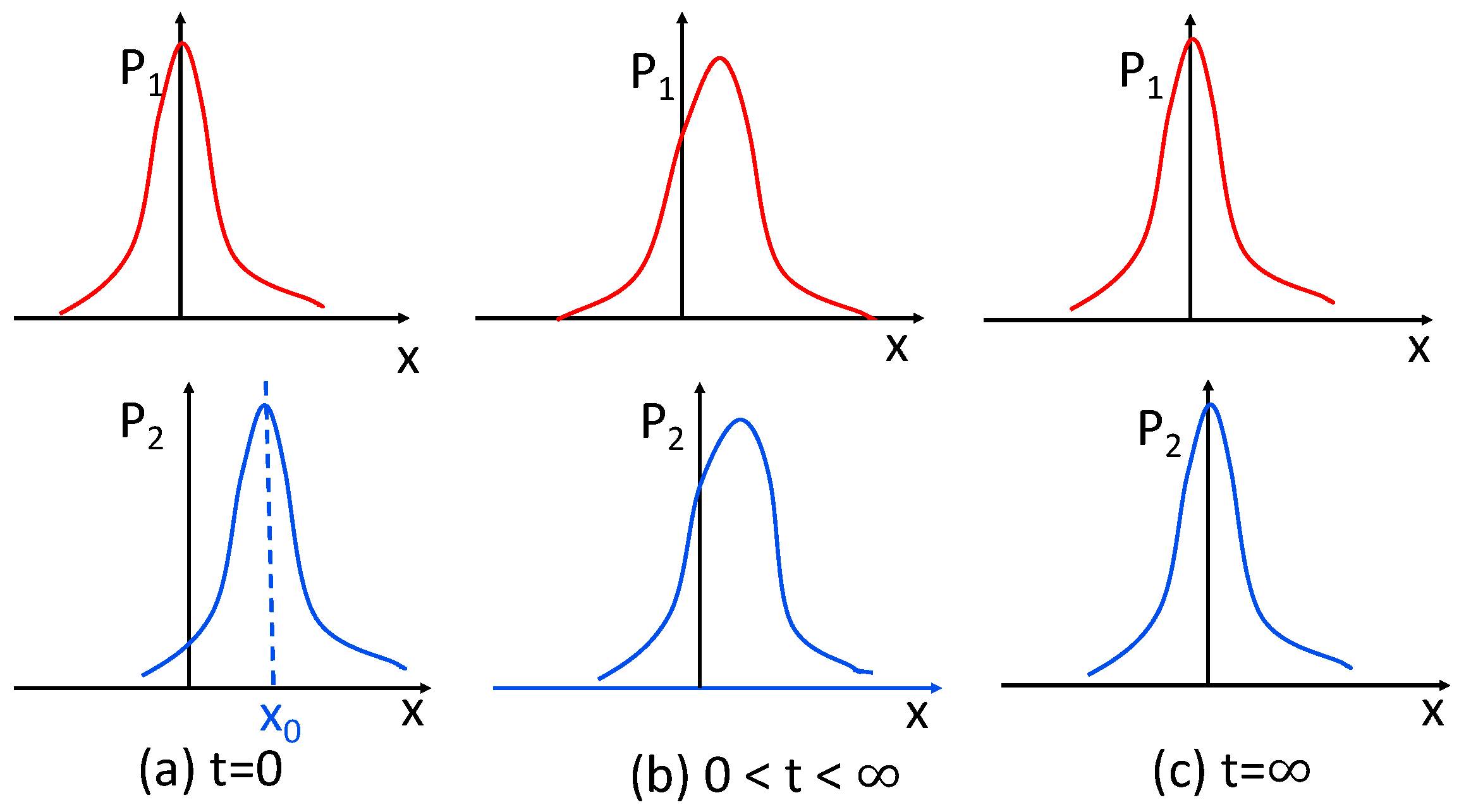

3.1. Geometric Structure of Equilibrium/Attractors

3.2. Correlation between Two Interacting Components

4. Thermodynamic Relations

4.1. Entropy Production Rate and Flow

4.2. Non-Equilibrium Thermodynamical Laws

4.3. Relative Entropy as a Measure of Irreversibility

4.4. Example

5. Inequalities

5.1. General Inequality Relations

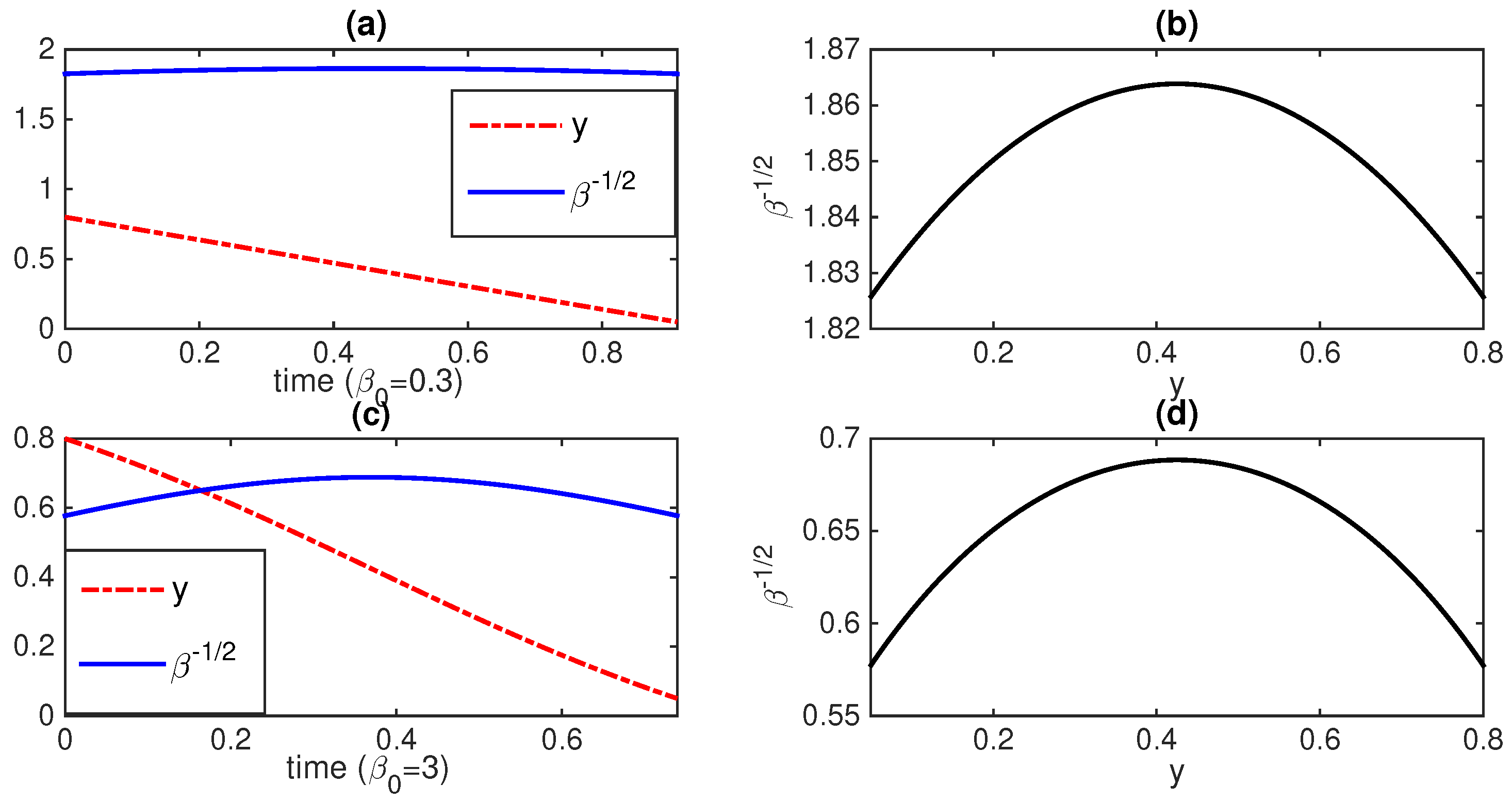

5.2. Applications to the Non-Autonomous O-U Process

6. Geodesics, Control and Hyperbolic Geometry

6.1. Geodesics–Shortest-Distance Path
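
For one-dimensional Gaussians, the metric underlying the information rate, ds² = (dμ² + 2dσ²)/σ², can be written as 2(du² + dσ²)/σ² with u = μ/√2, i.e. a scaled Poincaré half-plane, so the geodesic (shortest) distance has a closed form. This is a minimal sketch restricted to that Gaussian case, which is an assumption; the function name is hypothetical.

```python
import math


def fisher_rao_gaussian(mu1, s1, mu2, s2):
    """Geodesic distance between N(mu1, s1^2) and N(mu2, s2^2) for the metric
    ds^2 = (dmu^2 + 2 dsigma^2) / sigma^2, i.e. sqrt(2) times the Poincare
    half-plane distance in the coordinates (mu / sqrt(2), sigma)."""
    arg = 1.0 + ((mu1 - mu2) ** 2 / 2.0 + (s1 - s2) ** 2) / (2.0 * s1 * s2)
    return math.sqrt(2.0) * math.acosh(arg)


# Compare the geodesic with a path that shifts the mean at fixed width sigma = 1,
# whose length along the metric is |mu2 - mu1| / sigma.
fixed_width_length = abs(3.0 - 0.0) / 1.0
print(fisher_rao_gaussian(0.0, 1.0, 3.0, 1.0), fixed_width_length)
print(fisher_rao_gaussian(0.0, 1.0, 0.0, 2.0))
```

Changing only σ from 1 to 2 gives √2 ln 2 ≈ 0.980. Shifting the mean by 3 at σ = 1 gives √2 arccosh(3.25) ≈ 2.61, shorter than the length 3 of the fixed-width path, because half-plane geodesics are semicircles that bow towards larger σ.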

6.2. Comments on Self-Organization and Control

7. Discussions and Conclusions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B. The Coupled O-U Process

Appendix C. Curved Geometry: The Christoffel and Ricci-Curvature Tensors

Appendix D. Hyperbolic Geometry


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, E.-j. Information Geometry, Fluctuations, Non-Equilibrium Thermodynamics, and Geodesics in Complex Systems. Entropy 2021, 23, 1393. https://doi.org/10.3390/e23111393


Chicago/Turabian Style: Kim, Eun-jin. 2021. "Information Geometry, Fluctuations, Non-Equilibrium Thermodynamics, and Geodesics in Complex Systems." Entropy 23, no. 11: 1393. https://doi.org/10.3390/e23111393