1. Introduction

Accurate modelling and realistic reproduction of appearance have been widely explored in computer vision and graphics. Significant advances in digital photography over the last decades have made measurement-based appearance modelling popular for various applications in realistic computer graphics. In particular, the approach is well suited to realistic appearance modelling of human faces, enabling applications in the entertainment (such as movies, games and advertising), medical (dermatology) and beauty (cosmetics) sectors. Over the years, researchers have presented various measurement-based modelling techniques and setups for acquiring facial and material reflectance [1, 2, 3]. In practice, however, the measured reflectance data need to be fitted to suitable analytic or physics-based reflectance models in a rendering pipeline. This practice of fitting measurements to appropriate models has driven the separation of individual reflectance components, such as specular (surface) and diffuse (subsurface), which has become important in measurement-based modelling approaches. Researchers have thus proposed various reflectance separation techniques, particularly for dielectric materials, based on polarized and spectral information [4, 5, 6]. Among these, polarization-based separation in conjunction with a sophisticated light stage setup [7] has achieved high-quality separation of specular and diffuse reflectance and has been applied as one of the optimal solutions for high-end capture of human faces and objects. However, this approach has traditionally required either multi-shot capture with different polarization modes (such as cross- and parallel polarization states) for separate acquisition of reflectance components, or optically complicated imaging systems (e.g., multiple cameras sharing an optical axis through a beam-splitter) for multiplexed acquisition of different polarization states. This polarization-based approach has also been extended to a more challenging task, fine-grained separation of layered reflectance (e.g., in skin), by combining a computational illumination technique with a sophisticated multi-shot acquisition [8].

In this paper, we present an extension of our recent work [9], proposing two novel techniques for efficient single-shot acquisition of surface and subsurface reflectance, together with novel computational analysis of the polarized light-field data for layered reflectance separation. The work in [9] proposed two-way polarized light-field (TPLF) imaging, which was implemented by attaching two polarization filters to a low-resolution light-field camera. In this paper, we present an extended version of the polarized light-field technique, four-way polarized light-field (FPLF) imaging, with higher polarization dimensions and imaging resolution for more general applications of single-shot reflectance acquisition. Our two techniques achieve not only standard diffuse-specular separation, but also separation of layered reflectance albedos spanning specular, single scattering, shallow scattering and deep scattering from a single-shot light-field image (see Figure 7). While the TPLF technique requires uniform polarized illumination at a fixed angle, the FPLF technique achieves high-quality reflectance separation under unpolarized or partially polarized illumination at an arbitrary angle. Besides albedo estimation, the two techniques can be applied to acquire photometric diffuse and specular normal maps and, further, photometric normal maps of layered reflectance, using multiple shots under polarized spherical gradient illumination for realistic skin rendering applications.

To summarize, the specific technical contributions of this work are as follows:

The practical realization of TPLF imaging by attaching orthogonal linear polarizers and FPLF imaging by attaching four linear polarizers at 0°, 45°, 90° and 135°, respectively, to the aperture of the main lens of a commercial light-field camera. TPLF imaging angularly multiplexes two orthogonal polarization states of incoming rays in a single shot and enables separation of diffuse and specular reflectance using angular light-field sampling under controlled polarized illumination. FPLF imaging enables a similar function under uncontrolled unpolarized or partially polarized illumination such as outdoor illumination.

A novel computational method for single-shot separation of layered reflectance by analysing the acquired light-field data in the 2D angular domain. The method allows us to estimate layered reflectance albedos using a single-shot polarized light-field image under either uniform (TPLF) or partially (FPLF) polarized illumination. Furthermore, the method achieves novel layered separation of photometric normals acquired under polarized spherical gradient illumination.

The rest of the paper is organized as follows:

Section 2 reviews related work in three categories before we present our proposed TPLF camera and computational method of diffuse-specular separation in Section 3. Section 4 presents the extension to four-way polarized light-field imaging. Section 5 then describes our novel computational approach to acquire separated layered albedos and photometric normals of facial reflectance by analysing polarized light-field imagery. We conclude with detailed analysis and limitations of our technique in Section 8 after presenting validation experiments and facial rendering applications using photometric normals of layered reflectance in Section 7.

3. Two-Way Polarized Light-Field Imaging

We now describe our acquisition technique for diffuse and specular reflectance based on our novel two-way polarized light-field (TPLF) camera, which addresses the challenge of capturing two different polarization states in a single-shot.

Figure 1a shows a ray diagram for a regular light-field camera. For simplicity, let us assume there are five scene points, each emitting four rays in diffuse or specular reflection. The four rays emitted from each point in various directions are focused into a point by the main lens and dispersed into sensor pixels by the microlens array. Now consider a half occluder placed at the back of the main lens, as shown in Figure 1b. Only half of the rays reach the camera sensor, and the other half are blocked by the occluder, resulting in the semicircular ray pattern shown on the right. In the case of our TPLF camera shown in Figure 1c, the occluder is replaced with a two-way linear polarizer, where the upper half is vertically polarized and the bottom half is horizontally polarized. This way, the blocked and unblocked rays in the occluder case now pass through the horizontal and the vertical polarizers, respectively. If the scene is illuminated with vertically-polarized light, specular reflection rays (illustrated in red, green and blue) can pass through only the vertically-polarized region. This results in the semicircular images formed via the microlens array on the right side. On the other hand, diffuse reflection rays (illustrated in purple and yellow) can pass through the entire polarizer (due to depolarization) and result in the fully-circular images on the right. Consequently, given linearly-polarized illumination, our TPLF camera provides a semicircular and a fully-circular microlens image for specular and diffuse surface reflections, respectively. These respective microlens imaging regions are determined once using a calibration photograph, and TPLF photographs can thereafter be automatically processed using this calibration information. Note that pixels in a raw photograph are sensed through a mosaic colour filter. Hence, we additionally have to demosaic colour pixels by averaging colour information in 4 × 4 pixel regions to generate a colour TPLF photograph.

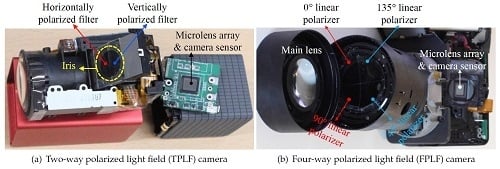

Figure 2a shows the actual TPLF camera used for our experiments, which was built from a Lytro Red Hot 16 GB camera by attaching a two-way linear polarizer in the pupil plane of the main lens. The camera produces a light-field image at 1080 × 1080 resolution with a 331 × 381 microlens array. Although Lytro software and some other methods allow the resolution of light-field images to be increased beyond the number of microlenses, we generate only a single pixel per microlens image for simplicity, which means our processed images have 331 × 381 resolution.

Diffuse-Specular Separation

Figure 3a shows an example photograph of a plastic mannequin captured by the TPLF camera. In the inset image of the eye, each circular pattern corresponds to the contribution of rays passing through one microlens. It can be seen that the lower semicircular region has very bright intensity, which is contributed by strong polarization-preserving specular rays imaged through the microlens. As indicated in our ray simulation (Figure 1c), the upper semicircular region has a lower intensity since the specular rays are filtered out by cross-polarization in this region. Conversely, diffuse rays, which are depolarized, contribute equally to the whole circular microlens image. By exploiting this spatial separation between diffuse and specular rays, parallel-polarized (diffuse + specular) and cross-polarized (diffuse-only) images can be generated by sampling pixels over each respective semicircular region. Here, the diffuse + specular component image (Figure 3b) was generated by averaging pixels sampled from the lower semicircular regions. Likewise, a diffuse-only component image (Figure 3c) was generated by averaging pixels from the upper semicircular regions. Subtracting the diffuse-only image from the diffuse + specular image yields the specular (polarization-preserving) component image, as shown in Figure 3d. Note that due to cross-polarization, the diffuse-only component (c) is imaged with only half intensity.
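The per-microlens sampling described above can be sketched in a few lines of Python. This is only an illustrative sketch, not our processing code: the function names are hypothetical, and `semicircle_masks` assumes an idealized circular microlens image split exactly along its horizontal diameter, standing in for the regions that are obtained from a calibration photograph in practice.

```python
import numpy as np

def semicircle_masks(size):
    """Hypothetical stand-in for calibration: boolean masks for the upper and
    lower halves of an ideal circular microlens image of size x size pixels."""
    ys, xs = np.mgrid[0:size, 0:size]
    c = (size - 1) / 2.0
    inside = (ys - c) ** 2 + (xs - c) ** 2 <= (size / 2.0) ** 2
    upper = inside & (ys < c)   # cross-polarized region (diffuse only)
    lower = inside & (ys > c)   # parallel-polarized region (diffuse + specular)
    return upper, lower

def separate_diffuse_specular(microlens, upper_mask, lower_mask):
    """Return (diffuse_only, specular) for one microlens image.

    The parallel-polarized average holds diffuse + specular, the
    cross-polarized average holds diffuse only, and their difference
    is the polarization-preserving (specular) component.
    """
    parallel = float(microlens[lower_mask].mean())
    cross = float(microlens[upper_mask].mean())
    specular = max(parallel - cross, 0.0)  # clamp sensor noise below zero
    return cross, specular

# Synthetic microlens image: dim upper half, bright lower half.
upper, lower = semicircle_masks(10)
image = np.zeros((10, 10))
image[upper] = 0.2
image[lower] = 0.9
diffuse, specular = separate_diffuse_specular(image, upper, lower)
```

Applying this to every microlens image yields one diffuse-only pixel and one specular pixel per microlens, which matches the single-pixel-per-microlens processing used for the TPLF photographs.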

The diffuse-specular separation examples shown in Figure 3, as well as the separation result for the female subject shown in Figure 4, were acquired under uniform illumination using the LED hemisphere shown in Figure 4a. The hemispherical illumination system consists of a total of 130 LED (5 W) light sources. A linear polarizer is mounted in front of each light source and can be rotated along the tangent direction to the light source. We pre-calibrate the polarizer orientation on all the lights to cross-polarize with respect to a frontal camera viewing direction, similar to the procedure described in [27]. Besides recording the reflectance of a subject under constant uniform illumination to estimate the albedo, the LED hemisphere also allows us to record the subject's reflectance under polarized spherical gradient illumination in order to estimate photometric normals.

Figure 4b–e shows separated diffuse and specular normals estimated for the female subject shown in Figure 4 using three additional measurements under the X, Y and Z spherical gradients. As can be seen, the diffuse normals are smooth because subsurface scattering blurs surface detail, while the specular normals contain high-frequency skin mesostructure detail due to first-surface reflection [27]. Unlike previous approaches, however, we need only a total of four measurements for separated albedo and photometric normals, as we do not need to flip a polarizer in front of the camera [27] or switch polarization on the LED sphere [29] in order to observe two orthogonal polarization states.
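For readers unfamiliar with spherical gradient illumination, the following Python sketch shows how a diffuse normal map can be estimated from the four measurements (one constant and three gradient images). It assumes the common gradient formulation in which the pattern along axis i has intensity (ω_i + 1)/2 over the sphere and the surface is Lambertian; the function name and array layout are hypothetical. For the specular component, the same ratios give the reflection direction rather than the normal, so an additional half-vector step with the view direction would be needed, which is not shown here.

```python
import numpy as np

def diffuse_normals_from_gradients(full, gx, gy, gz, eps=1e-8):
    """Estimate per-pixel diffuse normals from spherical gradient images.

    full        : (H, W) image under constant (full-on) illumination
    gx, gy, gz  : (H, W) images under the X, Y and Z gradient patterns,
                  assumed to be of the form (omega_i + 1) / 2
    Returns an (H, W, 3) array of unit normals.
    """
    ratios = np.stack([gx, gy, gz], axis=-1) / (full[..., None] + eps)
    n = 2.0 * ratios - 1.0                      # proportional to the normal
    length = np.linalg.norm(n, axis=-1, keepdims=True)
    return n / np.maximum(length, eps)

# Synthetic check: a Lambertian pixel with normal (0, 0, 1) yields
# ratio_i = n_i / 3 + 1/2 under the assumed gradient patterns.
full = np.ones((1, 1))
gx = np.full((1, 1), 0.5)
gy = np.full((1, 1), 0.5)
gz = np.full((1, 1), 0.5 + 1.0 / 3.0)
normals = diffuse_normals_from_gradients(full, gx, gy, gz)
```

With the TPLF camera, the cross-polarized and polarization-difference images of each of the four shots would be passed through such a computation separately.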

4. Four-Way Polarized Light-Field Imaging

This section describes our novel four-way polarized light-field (FPLF) camera, which extends a TPLF camera with four linear polarizers. Figure 2b shows our FPLF camera, which was built from a Lytro Illum camera by attaching four linear polarizers at 0°, 45°, 90° and 135° in the pupil plane of the main lens. The camera can produce a light-field image at a maximum 2450 × 1634 resolution with a 539 × 432 microlens array using Lytro's official software, Lytro Desktop. The raw sensor resolution of the camera is 7728 × 5368 pixels. Our sampling algorithm produces a light-field image at 1078 × 864 resolution, which corresponds to two-pixel sampling per microlens region. We found this sampling to yield a high SNR with suitable spatial detail.

Section 3 describes how two images under vertical and horizontal polarization states are obtained by separately sampling pixels in the upper and lower semicircular regions of a TPLF photograph. Similarly, we can obtain four images under different polarization states at 0°, 45°, 90° and 135° by separately sampling each quarter region of an FPLF photograph.

Figure 5a shows a raw FPLF photograph of a piece of Persian relief made of polished stone, acquired under sunlight. The inset image of (a) clearly shows two quarter-circular regions in the upper part of each microlens image. Since the polarization angle of the incident light is closely aligned with the polarization filter angle (0°) of the left-top quarter-circular region, the intensity of this region is high owing to parallel polarization filtering. The polarization filter angle of the right-top quarter-circular region is rotated by 90° from that of the left-top region, so this region is dark owing to cross-polarization filtering. The intensity of the bottom quarter-circular regions lies between that of the left-top and right-top regions, and the intensity difference between the two bottom regions is smaller than that between the upper regions because of partial parallel (or cross-) polarization filtering at polarization filter angles of 45° and 135°. Figure 5b–e shows images for the four polarization states, which were generated by separately sampling pixels in the four quarter-circular regions of each microlens image in the raw FPLF photograph in (a).

It is known that measurement of three or more different polarization states allows us to construct a Stokes vector, which characterizes the polarization state of incident light [17]. By employing the method of Riviere et al., we can obtain intensity under cross- and parallel polarization states from the measured Stokes vector. Their method estimates the intensity of cross- and parallel polarization states by solving Mueller calculus [34] associated with the Stokes vector under partially polarized illumination imaged at the Brewster angle of incidence. Since direct sunlight in open sky is partially linearly polarized, we separated diffuse and specular reflectance for the Persian relief by employing the same approach of near-Brewster-angle measurement together with single-shot light-field sampling, as shown in Figure 5f,g. Note that Riviere et al. [17] measured three polarization states at 0°, 45° and 90° to solve an equation with three unknowns, which are then employed for computing diffuse and specular reflectance. We found that solving the overdetermined equation with four measurements (acquired in a single shot) yields better SNR and uniformity in the separated diffuse and specular reflectance, as shown in Figure 5h,i.
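To illustrate why a fourth measurement helps, the sketch below fits the linear Stokes components to intensities observed behind ideal linear polarizers using least squares. It is a generic sinusoid fit under the standard model I(θ) = ½(S0 + S1 cos 2θ + S2 sin 2θ), and not the Mueller-calculus formulation of Riviere et al.; the function names are hypothetical, and taking the maximum and minimum transmitted intensities as the parallel and cross-polarized estimates is a simplifying assumption.

```python
import numpy as np

ANGLES_DEG = (0.0, 45.0, 90.0, 135.0)  # filter angles of the four quadrants

def fit_linear_stokes(intensities, angles_deg=ANGLES_DEG):
    """Least-squares fit of (S0, S1, S2) from K >= 3 polarizer measurements.

    intensities : array of shape (K, ...), one image (or value) per angle
    Returns an array of shape (3, ...).
    """
    theta = np.deg2rad(np.asarray(angles_deg, dtype=float))
    # One row per measurement: I = 0.5 * (S0 + S1 cos 2t + S2 sin 2t)
    A = 0.5 * np.stack(
        [np.ones_like(theta), np.cos(2 * theta), np.sin(2 * theta)], axis=1
    )
    I = np.asarray(intensities, dtype=float)
    flat = I.reshape(len(theta), -1)
    S, *_ = np.linalg.lstsq(A, flat, rcond=None)
    return S.reshape((3,) + I.shape[1:])

def parallel_cross(S):
    """Maximum and minimum intensity through a rotating ideal polarizer."""
    S0, S1, S2 = S
    polarized = np.hypot(S1, S2)
    return 0.5 * (S0 + polarized), 0.5 * (S0 - polarized)

# Four synthetic measurements generated from S = (1.0, 0.3, 0.2).
measured = np.array([0.65, 0.60, 0.35, 0.40])
S = fit_linear_stokes(measured)
i_parallel, i_cross = parallel_cross(S)
specular_estimate = i_parallel - i_cross
```

With three angles the system is exactly determined, so sensor noise passes straight into the estimate; with four angles the fit averages over the redundancy, which is consistent with the improved SNR we observe.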

5. Layered Reflectance Separation

The previous sections described diffuse-specular separation using respective sampling of cross- and parallel-polarized regions of a microlens image in our TPLF and FPLF cameras. In this section, we describe a novel computational method to obtain layered reflectance separation from a single-shot photograph using angular light-field sampling. It should be noted that in skin, the specular component separated with polarization difference imaging is actually mixed with some single scattering, which also preserves polarization [8]. This corresponds to the "specular" values of each microlens image in our TPLF camera photograph, as shown in Figure 6b, which are obtained after difference imaging of the lower and upper semicircular regions of the microlens image and include contributions of specular reflectance and single scattering. Similarly, the diffuse values in the upper semicircular region of the microlens image include contributions of both shallow and deep subsurface scattering. We make the observation that our TPLF camera allows us to angularly sample these various reflectance functions in a single photograph. As depicted in Figure 6a, these reflectance functions have increasingly wider reflection lobes, ordered as follows: specular reflectance has a sharper lobe than single scattering in the polarization-preserving component, and both shallow and deep scattering have wider reflectance lobes, as they are the result of multiple subsurface scattering. Among these, deep scattering has a wider lobe than shallow scattering due to a greater number of subsurface scattering events. The circularly arranged pixels of the microlens image angularly sample these reflectance functions as follows: the brighter pixels within each semicircular region sample both narrow and wide reflectance lobes, while the darker pixels sample only the wider reflectance lobes, which have lower peaks. This motivates us to propose an angular sampling method to separate these various layered reflectance components. We first rely on the observation that specular rays are much brighter than single scattered rays in skin. Hence, we propose separating these two components by separately sampling high and low intensity values in the polarization-preserving component. Each semicircular region inside a microlens image of the Lytro Red Hot camera observes a total of 36 values. We sort these observed values according to their brightness and employ a threshold for the separation. We empirically found that averaging the brightest 30% of values as specular reflection and averaging the remaining (darker) values as the single scattering component gave good results in practice (see Figure 7d,e).
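The sort-and-threshold step for the polarization-preserving component can be sketched as follows. The function name is hypothetical, and the default fraction of 0.3 is taken from the brightest-30% sampling quoted for the specular albedo in the FPLF case; it should be treated as a tunable parameter rather than a fixed constant.

```python
import numpy as np

def split_by_brightness(values, bright_fraction=0.3):
    """Split polarization-preserving samples of one microlens region.

    values          : 1D sequence of per-pixel values (e.g. 36 samples)
    bright_fraction : fraction of brightest samples treated as specular
    Returns (specular, single_scattering) as the means of the two groups.
    """
    v = np.sort(np.asarray(values, dtype=float))[::-1]  # brightest first
    if v.size < 2:
        raise ValueError("need at least two samples to split")
    k = int(round(bright_fraction * v.size))
    k = min(max(k, 1), v.size - 1)      # keep both groups non-empty
    return float(v[:k].mean()), float(v[k:].mean())

# 36 synthetic samples with values 0..35: the 11 brightest go to specular.
specular, single = split_by_brightness(np.arange(36))
```

Because the split is defined by rank rather than by an absolute intensity, the same fraction can be applied independently to each colour channel and to each microlens image.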

Next, we assume that deep scattering has a wider angular lobe than shallow scattering in the diffuse component since it scatters further out spatially. Hence, we model deep and shallow scattering components in the diffuse-only region of a microlens image as shown in Figure 6b: darker (outer) pixels correspond to only deep scattering, while brighter (inner) pixels contain both shallow and deep scattering. This modelling assumes that the lobe of shallow scattering is narrow enough not to contribute to the entire diffuse-only region of the microlens image. We sample pixels in the darker outer region of each microlens image and average them to generate a deep scattering image, as shown in Figure 7g. Subtracting the deep scattering value from the shallow + deep scattering pixels in the brighter inner region generates the shallow scattering image in Figure 7f. We empirically found that using the sampling ratio of the darkest

Figure 7f. We empirically found that using the sampling ratio of the darkest

values for estimating deep scattering and the brightest

values for estimating shallow + deep provided qualitatively similar separation results to those reported by Ghosh et al. [8], with similar colour tones and scattering amount in each component. Finally, in order to employ the separated shallow and deep scattering components as albedos for layered rendering, we need one additional step of radiometric calibration, which ensures that the sum of the separated shallow and deep albedos matches the total diffuse reflectance albedo so that the separation is additive, similar to the separation of [8].
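The shallow/deep sampling and the final additive calibration can be sketched as below. This is a minimal illustration with hypothetical function names; the two sampling fractions are deliberately left as required arguments rather than given defaults, and the calibration is assumed here to be a single per-pixel scale factor, which is one simple way to make the two albedos sum to the diffuse albedo.

```python
import numpy as np

def shallow_deep(cross_values, dark_fraction, bright_fraction):
    """Estimate (shallow, deep) from cross-polarized samples of one microlens.

    The darkest samples are averaged as deep scattering; the brightest
    samples are averaged as shallow + deep, and deep is subtracted.
    """
    v = np.sort(np.asarray(cross_values, dtype=float))  # darkest first
    n_dark = max(1, int(round(dark_fraction * v.size)))
    n_bright = max(1, int(round(bright_fraction * v.size)))
    deep = float(v[:n_dark].mean())
    shallow_plus_deep = float(v[-n_bright:].mean())
    shallow = max(shallow_plus_deep - deep, 0.0)
    return shallow, deep

def normalise_additive(shallow, deep, diffuse, eps=1e-8):
    """Rescale so that shallow + deep matches the total diffuse albedo.

    Works on scalars or on whole images (NumPy arrays of equal shape).
    """
    scale = diffuse / (shallow + deep + eps)
    return shallow * scale, deep * scale

# Ten synthetic cross-polarized samples with values 0..9.
shallow, deep = shallow_deep(np.arange(10), dark_fraction=0.5, bright_fraction=0.5)
shallow_albedo, deep_albedo = normalise_additive(shallow, deep, diffuse=14.0)
```

Keeping the normalization as a separate step makes it easy to apply it once to the full shallow and deep images after the per-microlens sampling has been completed.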

Note that our assumptions about the width of the reflectance lobes are supported by the modelling of Ghosh et al. [8], where they applied the multipole diffusion model [35] for shallow scattering and the dipole diffusion model [36] for deep scattering to model epidermal and dermal scattering approximately. They measured scattering profiles with projected circular dot patterns and fitted the measurements to these diffusion models. While the outer two-thirds region of each projected pattern was fitted accurately with the deep scattering model, the inner one-third region was not because of the additional shallow scattering component. Differences in lobe widths of shallow and deep scattering are also supported by the Henyey–Greenstein phase function [37], where the forward scattering parameter g is (0.90, 0.87, 0.85) and (0.85, 0.81, 0.77) for epidermal and dermal layers, respectively, in (R, G, B) [38]. Since smaller parameter values make the lobe wider, deep scattering occurring in the dermal layer is assumed to have a wider lobe. Furthermore, considering the modelled layer thicknesses for epidermal (0.001–0.25 mm) and dermal layers (1–4 mm) in the literature, the radiative transport equation implies that the thicker layer makes the scattering lobe wider [39].
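The relation between the forward scattering parameter g and the lobe width can be checked numerically with the standard Henyey–Greenstein phase function, p(θ) = (1 − g²) / (4π (1 + g² − 2g cos θ)^{3/2}). The short Python sketch below evaluates the angle at which the lobe falls to half of its forward peak for the g values quoted above; the helper names are hypothetical, and the half-peak angle is just one convenient measure of lobe width chosen for this illustration.

```python
import numpy as np

def henyey_greenstein(cos_theta, g):
    """Henyey-Greenstein phase function, normalized over the sphere."""
    return (1.0 - g**2) / (4.0 * np.pi * (1.0 + g**2 - 2.0 * g * cos_theta) ** 1.5)

def half_peak_angle_deg(g, samples=20001):
    """Smallest scattering angle (degrees) where p drops to half its peak."""
    theta = np.linspace(0.0, np.pi, samples)
    p = henyey_greenstein(np.cos(theta), g)
    below = np.nonzero(p <= 0.5 * p[0])[0]
    return float(np.degrees(theta[below[0]]))

# g values quoted in the text for (R, G, B).
EPIDERMAL_G = (0.90, 0.87, 0.85)
DERMAL_G = (0.85, 0.81, 0.77)

epidermal_widths = [half_peak_angle_deg(g) for g in EPIDERMAL_G]
dermal_widths = [half_peak_angle_deg(g) for g in DERMAL_G]
```

For every colour channel the dermal half-peak angle comes out larger than the epidermal one, in line with the assumption that deep scattering has the wider lobe.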

We can extend the layered reflectance separation of TPLF imaging to the FPLF camera shown in Figure 2b under polarized illumination at an arbitrary angle. Figure 6c,d shows how layered reflectance is separately acquired through the four polarization filters in an FPLF camera. The diagram (c) assumes that the polarization angle of the reflected light is 0°, so the left-top quarter-circular region of a microlens image, (d), is acquired through parallel polarization with a 0° polarization filter. The left-top quarter-circular region is thus equivalent to the bottom semicircular region in TPLF imaging shown in Figure 6b, providing diffuse and specular components. The right-top quarter-circular region in (d) undergoes cross-polarization in the same way as the upper semicircular region in TPLF imaging, providing the diffuse-only component. The bottom quarter-circular regions in (d) provide diffuse and partial specular components with partial cross-polarization filtering.

The same intensity-based sampling method used for the TPLF camera is applied to each quarter-circular region of a microlens image in an FPLF photograph to separately generate layered reflectance images under polarized illumination at an arbitrary angle. For the specular albedo, we generate four light-field images by sampling the brightest 30% of pixels in each quarter-circular region of a microlens image in an FPLF photograph. By applying the method of Riviere et al. [17], we estimate the intensity of parallel and cross-polarization from the four light-field images. Subtracting the two intensity values at each pixel gives a specular-only image, as shown in the top row of Figure 8. The same procedure with different sampling thresholds is applied to generate the other layered reflectance albedos. The only difference is that the shallow and deep scattering albedos are obtained from the cross-polarized microlens pixels. The same intensity-based threshold ratio is applied to each RGB channel of a TPLF or FPLF photograph. Thus, spectral characteristics of an object such as colour and reflectivity do not affect the proposed separation method, as shown in the eyes of the mannequin in Figure 3 and the eyes of the subject in Figure 7f,g, which have extreme black and white colours. The dynamic range of the sensor can in principle affect the quality of the separation. This is, however, somewhat mitigated by our use of the intensity threshold ratio, which allows sampling of pixels in the relative intensity range. Thus, each layered reflectance albedo has the same relative dynamic range as the raw sensor, modulo any quantization errors.

Besides separating layered reflectance albedos under uniform polarized illumination, we go further than previous work by additionally separating diffuse normals into shallow and deep scattering normal maps (Figure 9), applying our novel angular-domain light-field sampling to polarized spherical gradient illumination. As can be seen, the shallow scattering normals contain more surface detail than the regular diffuse normals estimated with spherical gradients, while the deep scattering normals are softer and more blurred than the corresponding diffuse normals. We believe this novel layered separation of photometric normals, in addition to albedo separation, can be very useful for real-time rendering of layered skin reflectance with a hybrid normal rendering approach.

Figure 10 demonstrates another similar separation, where we remove single scattering from the polarization-preserving component to estimate pure specular albedo and, for the first time, a pure specular normal map (b). Note that specular normal maps have been used to estimate accurate high-frequency skin mesostructure detail for high-end facial capture [27, 29]. However, "specular" normals (a) as acquired by Ma et al. and Ghosh et al. have some single scattering mixed with the signal, which acts as a small blur kernel on the specular surface detail and can also slightly bend the true orientation of the specular normal. Removing this single scattering from the data used to compute pure specular normals has the potential to further increase the accuracy and resolution of facial geometry reconstruction.

6. Results

This section describes validation of our method in various imaging conditions. Figure 8 presents the results of layered reflectance separation when the position of the TPLF camera or the light source was changed. We used an EUG WXGA LCD projector (5000 lumens) fitted with a linear polarization filter for polarized illumination, and a Lytro Illum camera for TPLF imaging. Figure 8a–d shows the results as a function of the distance between the subject and the light source when the TPLF camera was placed at a fixed position, 1.5 m from the subject. The distance between the subject and the light source is directly related to the intensity of reflected light. The shallow and deep scattering images from left to right clearly show that the intensity of scattering drops as the distance between the subject and the light source increases from 0.5 m to 2 m. It is interesting to see that the slight change of illumination energy is more noticeable in scattering reflection than in specular reflection. Despite the change of reflection intensity, we found that the quality of layered reflectance separation is not significantly affected in the specular-only, single scattering, shallow scattering and deep scattering components, as shown in Figure 8. Thus, this experiment validates that the distance to the light source has only a minor influence on the performance of layered reflectance separation with TPLF imaging.

Figure 8e,f presents the results of layered reflectance separation as a function of the distance between the subject and the TPLF camera. A change of imaging distance can affect both the received energy of reflected light and the incident angle of reflected rays at the camera lens. The shallow and deep scattering images in Figure 8e,f show an intensity drop as the imaging distance increases from 0.5 m to 2 m. Furthermore, the specular and single scattering images show a change in the position of strong reflection. However, the results validate that the quality of layered reflectance separation is not affected by the imaging distance. Note that rays reflected from an object point are sensed by a single microlens image consisting of more than 100 pixels with the Lytro Illum camera. This experiment reveals that this number of pixels provides sufficient information to resolve the four kinds of layered reflectance occurring at an object point regardless of imaging distance.

Figure 11 presents the results of layered reflectance separation under polarized spherical illumination using a light stage. The subject was placed at a near (0.5 m, top row), a middle (1.0 m, middle row) and a far distance (2 m, bottom row) inside of the light stage from a Lytro Illum camera for TPLF imaging. Similar to the previous experiment on changing camera distance using an LCD projector, we found that camera distance has a minor influence on performance of layered reflectance separation under polarized spherical illumination. Compared to the previous results with an LCD projector, the results with a light stage show more uniform intensity distribution on the entire face in shallow and deep scattering. It is noticeable that prints of nose pads of glasses are visible between the eyes and the nose in only shallow and deep scattering images.

7. Applications and Analysis

We now present rendering applications of standard diffuse-specular and layered reflectance separation using our TPLF camera. Figure 12a presents hybrid normal rendering for a female subject (lit by a point light source) according to the method proposed by Ma et al. [27]. Here, the diffuse and specular albedo and normal maps were acquired using four photographs with the TPLF camera under polarized spherical gradient illumination using the LED hemisphere. As can be seen, the specular reflectance highlights the skin surface mesostructure, while the diffuse reflectance has a soft translucent appearance because subsurface scattering blurs the diffuse normals. Note that the facial geometry for the hybrid normal rendering was acquired separately using multiview stereo with additional DSLR cameras (see Figure 4a).

Figure 12b presents a novel layered hybrid normal rendering where the diffuse albedo and normals have been further separated into shallow and deep scattering albedo and normals, respectively. Note that due to the additive property of the separation, the result of layered rendering (b) is very similar to the standard hybrid normal rendering (a) in this case.

Figure 12c–f presents a few editing applications of the proposed layered hybrid normal rendering with separated shallow and deep scattering albedo and normals. Here, we have removed the specular layer from the rendering to highlight the edits to the diffuse layer. The top row presents the results of diffuse rendering with the regular diffuse albedo shaded with the shallow scattering normal in (c) and deep scattering normal in (d), respectively. As can be seen, the skin appearance is dry and rougher when shaded with the shallow scattering normal and softer and more translucent when shaded with the deep scattering normal compared to the regular hybrid normal rendering (a). The bottom row presents the results of layered hybrid normal rendering where we have edited the shallow and deep scattering albedos to enhance one component relative to the other. As can be seen, enhancing the shallow scattering albedo (w.r.t. deep) (e) makes the skin appearance more pale and dry, while enhancing the deep scattering albedo (w.r.t. shallow) (f) makes the skin more pink in tone and softer in appearance. The availability of layered reflectance albedos and normals with our TPLF acquisition approach makes such appearance editing operations easily possible.

Figure 13 presents the results of shallow and deep scattering separation obtained with various intensity thresholds for light-field sampling and compares these single-shot separation results with that obtained using the computational illumination technique of Ghosh et al. [

8] (

Figure 13d), which uses cross-polarized phase-shifted high frequency structured lighting patterns. As can be seen, our shallow and deep scattering albedo separation with single-shot TPLF imaging achieves similar qualitative results of layered separation compared to the multi-shot technique of [

8]. Here, we found the intensity threshold of

:

(brighter

values used to estimate shallow + deep, darker

used to estimate deep) for the separation of shallow and deep scattering to have the most qualitative similarity to structured lighting-based separation. Note that the multi-shot result was somewhat degraded in comparison to the original paper. This is due to subtle subject motion artefacts during the four shots required to acquire the response to phase-shifted stripes. We employed a commodity Canon EOS 650D camera with sequential triggering to acquire the four shots, while Ghosh et al. [8] used a special head stabilization rig and fast capture with burst mode photography (using a high-end Canon 1D Mark III camera) to prevent such motion artefacts. This further highlights the advantage of our proposed single-shot method.

One advantage of our method is that we employ uniform spherical illumination for separation, which is more suitable for albedo estimation than the frontal projector illumination required for structured lighting. The projector illumination also impacts subject comfort during acquisition: the subject had to keep very still, with eyes closed, during acquisition of the structured lighting patterns for the separation. We note, however, that there are some visible colour differences between the results of separation with the TPLF camera and separation with structured lighting. These colour differences can be mainly attributed to differences in the colour temperatures of the two respective illumination systems (LED hemisphere vs. projector), as well as differences in the respective camera colour response curves (the data for structured light-based separation was acquired using a Canon DSLR camera). The colour reproduction with the Lytro camera is not as vivid, because it is based on our own implementation of demosaicing, which may not be optimal.

Figure 14 compares specular and diffuse separation with single-shot TPLF imaging and the multi-shot direct-indirect separation technique of Nayar et al. [11]. For a fair comparison, we used the same Lytro Illum camera and the same light source, an EUG WXGA LCD projector, in both cases. For the multi-shot technique, we took four shots with an unmodified Lytro Illum camera while illuminating the subject’s face with shifted high-frequency stripe patterns. Then, for single-shot TPLF imaging, we inserted two linear polarizers, oriented vertically and horizontally, into the Lytro Illum camera at the same position shown in Figure 2b. The results show that the multi-shot technique is degraded by motion and projection artefacts, especially in the specular-only image; see Figure 14b. Furthermore, the diffuse-only image of the multi-shot technique (Figure 14c) shows inaccurate separation, with visible specular components near the left eye.
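For reference, the per-pixel computation behind the multi-shot direct-indirect baseline can be sketched as below. This is a simplified illustration of the well-known max/min formulation, not the exact processing used for Figure 14: it assumes that the stripe patterns light half of the projector pixels, that unlit projector pixels emit no light, and that each shot is given as a flat list of pixel intensities. The function name is hypothetical.

```python
def direct_global(shots):
    """Estimate direct and global (indirect) components per pixel.

    shots: list of images, one per shifted stripe pattern; each image is a
        flat list of intensities with identical length (assumed layout).
    Assumes half of the projector pixels are lit in every pattern, so that
        max = direct + global / 2   and   min = global / 2.
    """
    direct, global_ = [], []
    for pixel_values in zip(*shots):
        brightest, darkest = max(pixel_values), min(pixel_values)
        direct.append(brightest - darkest)  # specular-dominated component
        global_.append(2.0 * darkest)       # subsurface (diffuse) component
    return direct, global_
```

Because the maximum and minimum are taken across shots captured at different times, any subject motion between shots corrupts both estimates, which is consistent with the artefacts visible in the multi-shot results.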

We further demonstrate separation of layered reflectance from human skin, as described in Section 5, under uncontrolled sunlight with partially polarized illumination at an arbitrary angle using the proposed FPLF imaging. Figure 15 shows layered reflectance of a hand and a face under sunlight incident on the surface near the Brewster angle, acquired from a single-shot FPLF photograph. Note that, since sunlight is only partially polarized, some portion of the specular component was not completely removed from the shallow and deep scattering images, especially in the cheek and chin regions of the face, due to the high curvature of these regions.

Finally, we analyse the quality of layered reflectance separation with TPLF imaging under various other illumination setups that are commonly available. Figure 16 presents the results of single-shot layered reflectance separation on a subject’s face lit from the side with uniform illumination from a 32-inch desktop LCD panel (top row), lit by a set of four point light sources (two on each side) approximating a typical photometric stereo setup (centre row) and, finally, lit with only a single point light source from the front (bottom row). Here, we simply used smartphone LED flashes as the point light sources. Note that the LCD panel we employed already emits (vertically) linearly polarized illumination, so we did not need to polarize it explicitly for the measurement. For the other measurements, we mounted plastic linear polarizer sheets (vertically oriented) in front of the phone LED flashes. Among these setups, the uniform illumination emitted by the LCD panel resulted in the highest quality of separation, particularly for shallow and deep scattering. Such an illumination setup could also be used in conjunction with passive facial capture systems such as that employed by Bradley et al. [31]. Furthermore, our method also achieves good qualitative layered reflectance separation under just a set of point light sources (and even with a single point light), which has not been previously demonstrated. We believe this can be very useful for appearance capture with commodity facial capture setups. We note, however, that the separation results with the point light sources suffer from a poorer signal-to-noise ratio, because the dynamic range between the specular highlight and the diffuse reflectance is larger than with spherical or extended (LCD) illumination.

Limitations and Discussion

Since our TPLF and FPLF photography is based on light-field imaging, it suffers from the classical issue of resolution trade-off with a light-field camera. However, the good news is that light-field camera resolution is rapidly increasing, e.g., the Lytro Illum used for our experiment now has a 2K spatial resolution. The Lytro camera does not provide an official way to manipulate the raw light-field photograph, so we implemented our own microlens sampling and colour demosaicing pipeline. This clearly leads to sub-optimal results, as we could not obtain precise information on microlens position for precise pixel sampling, and also, colours were not reproduced as vividly as desirable. The colour reproduction can be improved by measuring a colour chart with the TPLF camera and transforming measured colour values to the sRGB colour space. Our separation with TPLF imaging assumes that the incident illumination is polarized with its axis either vertically or horizontally oriented in order to match the orientation of our two-way polarizer. Fortunately, this is not very hard to achieve in practice with many illumination setups. Our FPLF imaging is not bounded by this assumption since it allows us to estimate parallel and cross-polarization states under polarized illumination at an arbitrary angle. The advantage of FPLF imaging compared to TPLF imaging is enhanced flexibility with respect to the incident illumination. While TPLF imaging requires aligned orientation of polarized illumination, FPLF imaging works with uncontrolled and arbitrary partially-polarized illumination, such as sunlight. Thus, only FPLF imaging can be applied for outdoor applications. However, separated reflectance images obtained using TPLF imaging have higher SNR than those obtained with FPLF imaging since TPLF imaging samples twice the number of pixels in the microlens compared to FPLF imaging. 
Thus, there exists a trade-off between flexibility and SNR in the number of filters employed for polarized light-field imaging. The ideal number of polarization filters is the minimum number of filters required for a particular application. The implementation of a TPLF camera using a Lytro Red Hot camera has a restricted dynamic range compared to DSLR cameras, which is why it is currently more suitable for acquisition with spherical or extended illumination compared to point light sources. However, we expect this dynamic range issue to be resolved with improvements in light-field camera technology in association with HDR imaging. There is also the possibility of misalignment error with manual attachment of a two-way or four-way polarizer into a light-field camera. This could be resolved in future work by employing an imaging sensor with an inbuilt linear polarizer (e.g., Sony’s IMX250MZR).
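To illustrate why four polarizer orientations remove the alignment requirement, the sketch below recovers parallel- and cross-polarized intensities from four samples using the standard linear Stokes relations. It is a minimal sketch under assumptions that are not confirmed by the text above: the four filters are taken to be oriented at 0°, 45°, 90° and 135°, the function name is hypothetical, and the estimation actually used for FPLF imaging may differ.

```python
import math

def estimate_parallel_cross(i0, i45, i90, i135):
    """Recover parallel/cross-polarized intensities from four samples.

    i0, i45, i90, i135: intensities behind linear polarizers at the
        assumed orientations of 0, 45, 90 and 135 degrees.
    Returns (i_max, i_min, angle): the parallel-polarized intensity, the
        cross-polarized intensity and the polarization angle in radians.
    """
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs. vertical
    s2 = i45 - i135                     # diagonal vs. anti-diagonal
    polarized = math.hypot(s1, s2)      # linearly polarized magnitude

    i_max = 0.5 * (s0 + polarized)      # analyser aligned with the light
    i_min = 0.5 * (s0 - polarized)      # analyser crossed with the light
    angle = 0.5 * math.atan2(s2, s1)
    return i_max, i_min, angle
```

With only two orthogonal filters, `s2` is unobserved, so the maximum and minimum can be recovered only when the illumination axis matches one of the filters. Each of the four orientations also receives half as many pixels per microlens as in the two-filter case, which reflects the flexibility versus SNR trade-off discussed above.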

We currently set the intensity thresholds for layered reflectance separation empirically. Further research would be required to relate such separation thresholds to the physiological characteristics of reflection and scattering of light in the various layers of skin. We also simply render skin appearance with a hybrid normal rendering approach using the separated reflectance albedos and photometric normals. However, many rendering systems instead implement full-blown subsurface scattering simulations to achieve translucency effects. Besides scattering albedos, such a rendering approach requires an estimate of translucency (diffuse mean free path), which we do not currently measure. However, the approach of Zhu et al. [40] could be employed with our data to estimate per-pixel translucency from four measurements under polarized spherical gradient illumination. Furthermore, our separation of diffuse albedo and normals into shallow and deep scattering albedo and normals might enable estimation of layered translucency parameters for rendering shallow and deep scattering using such an approach. Our imaging approach for layered reflectance separation may also have applications in other domains, such as cosmetics, where the technique could be applied to separate a cosmetic layer from the underlying skin reflectance, or in the industrial and cultural heritage sectors for separation of layered materials such as paints or pigments.