1. Introduction

Selective fruit harvesting is among the most time-consuming and labor-intensive agricultural operations. Over the past four decades, humans have been trying to develop robots to do this work [

1,

2,

3,

4]. However, owing to the complex operating environment and unstructured physical parameters of the operational objects, several key factors affect the smooth operation of fruit-harvesting robots. One of them is the precise collaborative operation of the target positioning unit of fruit recognition and the picking execution component, also known as the “hand–eye collaborative operation” system [

5,

6,

7].

In the hand–eye coordination operation system of the manipulator, there are two major ways to install cameras, “eye to hand” and “eye in hand” [

8]. In the “eye to hand”-based hand–eye coordination operation system, the camera and the manipulator are installed separately, which can help obtain image-related information of the fruit over a larger field of view, where it is easy to realize visual feedback control, but in this system, the movement of the manipulator causes the target object to be occluded. When the environment changes or the camera moves, the latter needs to be recalibrated [

9,

10]. The accuracy of the visual system and the mechanical system also affect the rate of success of operation, operational accuracy, and cost. In the “eye in hand” hand–eye coordination operation system, the camera is fixed at the end of the manipulator, and the target object is close to it to prevent the manipulator from occluding the target object and to achieve a high-resolution image [

11]. The requirements on the accuracies of visual measurement and positioning of the manipulator are low, and the small displacement of the fruit does not affect its grip. However, the system needs to continually call the results of processing of images from the camera because it has a small field of view. Furthermore, using the feedback control of the manipulator, the robot is susceptible to tremors [

12], thus influencing the stability of control and picking speed of the system. There is also another method that combines two camera mounting methods, which has been less studied in the field of agriculture. Mehta et al. [

13] proposed a cooperative visual servo controller that benefits from the large field-of-view of a fixed camera and the accuracy of a camera-in-hand (CiH). A rotation controller was developed to orient the robot such that the target fruit selected by the fixed camera can be viewed by the CiH attached to the end-effector. At the same time, the corresponding control system was developed and a strict stability analysis is carried out to guarantee its closed-loop performance.

During target identification, positioning, and picking among automated picking operations, if the advantages of the first and second operating systems described earlier can be combined to coordinate the vision system, the movement chassis, and the manipulator system, accurate operation can be achieved. In this paper, our method is similar to the third one mentioned above. A binocular camera was mounted onto the chassis of a picking robot and a monocular camera was mounted at the end of the picking manipulator. A “global–local” visual servo picking system was thus constructed. The binocular camera was used to identify ripe fruits and calibrate the operational distance. The robot then moved to the area of the picking operation and picked fruit using the manipulator with a monocular camera servo. Compared with the first method mentioned above, the visual accuracy and mechanical accuracy of the method proposed in this paper greatly reduced the influence on the accuracy of fruit picking. It also had the advantage of the second method, the smaller displacement of the fruit did not affect the success rate of picking. There were two main differences with the third method mentioned above. Firstly, their overall system was fixed. A fixed monocular camera was used to provide a global view and obtain the target fruit information of the whole fruit tree. However, our overall system is mobile. The fixed binocular camera was used to obtain the information of fruits in the front working area, and meanwhile, the chassis moving platform was coordinated to reach the work stopping point. Secondly, they got the 3D fruit position through the camera in the center of the terminal claw, completing the real-time control of the manipulator. In contrast, we obtained the target fruit image information through the camera in the center of the terminal claw and realized the visual servo control based on the image to complete the harvest. Feng et.al. [

4] designed an autonomous tomato harvesting robot and they used the first method of vision servo. The execution time of a single harvest cycle was about 24s, and the success rate for harvesting tomatoes was 83.9%. Lehnert et al. [

12] designed an autonomous sweet pepper harvesting system for protected cropping systems and they used the second vision servo method. The highest success rate of grasping was 90%. Mehta et al. [

13] proposed a cooperative visual servo controller for harvesting medium and large varieties of citrus fruit. The accuracy of the controller was observed to be about 15 mm. The results of experiments showed that the system we proposed is feasible. Compared with the first two methods mentioned above, this system can perform picking operations highly reliably while improving picking accuracy and speed in automated agricultural picking.

The presented paper is organized as follows:

Section 2 introduces the principle of “global–local” visual servo picking and describes the operating process of the system;

Section 3 states every subsystem of the system, which includes manipulator workspace analysis, visual processing methods and picking strategies;

Section 4 provides experimental evidence to verify the feasibility of this “global–local” visual servo system; concluding remarks are presented in

Section 5.

2. Principle of “Global–Local” Visual Servo Picking

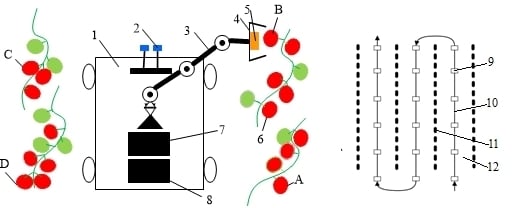

The “global–local” visual servo picking system, as shown in

Figure 1a, includes a monocular vision system, a binocular vision system, a chassis, a manipulator, and an end effector. The robot performs cruise operations according to the specific operational path as shown in

Figure 1b. When the robot stops at the first stop point before entering the ridge, the binocular vision system starts to judge the ripeness of the fruit in the global field of vision, and calibrates the operational distance of the ripe fruit at the given coordinates. Then, by reference to the operational space of the manipulator, the range of operations for picking ripe fruits can be determined. Under the guidance of the robot’s navigation and positioning system, the chassis motion system is driven to move to the calculated operational stop point. The manipulator begins picking operations under monocular visual guidance. During the operation of the manipulator, the binocular vision system begins to judge the maturity of the fruit in the global field of vision, and calibrates the distance to the ripe fruit in cycles for automated picking operations.

The process of visual servo picking operations is shown in

Figure 2. The robot travels according to a predetermined operational trajectory and stops at the first stop point of the ridged aisle. The binocular vision system begins to judge the degree of ripeness of the fruit in the global field of vision and demarcates the operational distance to the ripe fruit at the given coordinates. Based on an analysis of the operating space of the manipulator, the range of operation of the ripe fruit that can be picked is obtained. The coordinates of the next stop point for the robot and the farthest fruits—A, B, C, and D—that can be picked by the robot as well as their respective picking coordinates are planned. Then, under the guidance of the navigation and positioning system, the robot controls the chassis motion system to stay at the predetermined stop points for operation, and drives the end of the manipulator to reach the vicinity of fruit A. By using the monocular vision system, the servo controls the manipulator to move to the picking position for fruit A and starts picking the ripe fruit on the right side of the robot’s forward direction. When the distance between the end effector and the target fruit meets the picking requirements, a single picking operation is complete. Then, the remaining ripe fruits are picked via the monocular visual servo. When the end of the manipulator moves to the vicinity of fruit B, the operation of the picking of the ripe fruit on the right side of the robot is carried out. When the end of the manipulator moves to the vicinity of fruit D, it starts picking the ripe fruit on the left side of the robot. When the end of the manipulator moves to the vicinity of fruit C, the ripe fruit is picked on the left side of the robot. Once all ripe fruits in the area near the robot have been picked, it utilizes the binocular vision system to continue to judge fruit ripeness in the global field of vision and demarcate the operational distance to the ripe fruit. It travels to the next target stop point and repeats the above steps to continue picking the ripe fruit in other areas. Once the picking operation in the given ridge aisle is complete, the robot continues to travel according to its predetermined operating trajectory and proceeds to the next ridge aisle to continue picking.

Based on an analysis of the process of the robot’s picking operation, methods for determining the operational space of the manipulator, the range of accuracy of the binocular vision system, the positions of fruits A, B, C, and D, and the determination of the starting points for picking influence the calculation of coordinates of the robot’s stop points. The accuracy of converting the binocular camera’s coordinate system and the manipulator’s end-coordinate system influence and determine the efficiency of the picking operations. The coordinated operation of the monocular vision system, manipulator, and end effector determine the order of picking of the ripe fruit and the timing of the picking action. As different greenhouses have different planting specifications and heights of fruit growth, different robotic picking manipulators are required. To carry out standardized picking operations and improve the efficiency of the automatic operation of the robot, the automatic picking of tomato in a greenhouse was used as an example. The robot requires different manipulators when picking tomatoes at different heights. However, in general, tomatoes close to the root begin to mature first, after which their height continues to increase as they ripen. Moreover, tomatoes at the same height have slight differences in times of maturation. As a result, the lifting platform shown in

Figure 3 can be used in combination with a robot’s chassis and a manipulator to pick ripe tomatoes in different periods. In this way, the principles of automatic picking of tomatoes at different heights are roughly the same, with the difference being that as the heights of the tomatoes vary, the adjustments to the height of the platform vary. Therefore, for the picking discussed here, the manipulator can have access to all tomatoes within the field of vision for picking.

Assuming that all joints of the picking manipulator were rotated by 180 degrees and the base joint was rotated, the theoretical operational area of the robot was a round crown shape. As shown in

Figure 4, under normal circumstances, tomatoes cultivated in the greenhouse had an inter-ridge interval of 80 cm, thickness of the plant of 15 cm, and an effective plant height of 30~160 cm as shown in

Figure 5. To enable the robot to perform picking operations effectively and simultaneously facilitate the continuous planning of its picking trajectory, the picking area was taken as a rectangular parallelepiped as shown in

Figure 6. According to the inter-ridge distance for planting tomatoes in the greenhouse and plant thickness, the distance between the origin of the manipulator and the effective area of operation of the robot was set to 30–50 cm; that is, the length of the area of operation shaped as a rectangular parallelepiped along the y-axis was 20 cm, and the length along the x-axis was half the distance of the inter-ridge plant interval, that is, 40 cm. The length along the z-axis was 30 cm.

As shown in

Figure 6, the center of symmetry of the two lenses of the binocular camera was taken as the origin of its coordinate system, and the center of the bottom of the base of the manipulator was set as the origin of its coordinate system. The coordinates of the ripe fruit in the field of vision were detected by the binocular vision system, and the distances between each fruit, and the x-, y-, and z-axes of the binocular camera were calculated. According to the positional relationship between the binocular camera and the base of the manipulator, the coordinates of the ripe fruit relative to the axes of the base of the manipulator were determined. In the picking area on the right side of the robot, the ripe fruit at the shortest distance from the binocular vision system along the x-axis was selected and marked as fruit A. In the picking area on the left side of the robot, the ripe fruit at the shortest distance from the binocular vision system along the x-axis was marked as Fruit D. The robot traveled in the middle of the ridge. Considering its navigational and positioning accuracy, its coordinates along the y-axis remained unchanged. According to the distances between fruit A and fruit D, and the x-axis of the base of the manipulator, the smaller value of the two was calculated. This was recorded as the starting point of the next space for the picking operation along the x-axis. Coordinates of the starting point along the x-axis and half of the length of the space for the picking operation—that is, 20 cm—were added to obtain coordinates of the x-axis of the next stop point of the robot. The starting point along the x-axis and 40 cm were added to obtain coordinates of the x-axis of the next terminal point of the picking operation of the robot. According to the distance to the fruit along the z-axis, the spatial height of the fruit suitable for the picking operation was chosen. Then, according to the distribution of fruits on the left and right sides of the robot, the coordinates of the ripe fruits were saved. In the space for the picking operation on the right side, the fruit farthest from the binocular vision system along the x-axis was selected and marked as B. In the picking space on the left side, the fruit farthest from the binocular vision system along the x-axis was selected and marked as fruit C. Then, the robot could quickly reach the next stop point for operation via the navigation and positioning system.

For visual servo navigation and positioning, our team used a greenhouse robot that combined a navigation system based on an odometer, a gyroscope, and a laser radar. This could operate while constructing a two-dimensional (2D) environment map for the greenhouse with its system architecture as shown in

Figure 7. Its principle was that the robot initially planned a range for the area for the walking operation based on the cruise task of automatic picking. It then obtained mileage information by collecting data from the encoder while walking in combination with data detected by the gyroscope as well as the originally planned walking track through track deduction. The robot established a 2D environment map in combination with data scanned by a laser radar and the gmapping algorithm. The A* algorithm was used to plan the cruise path and the self-adaptive Monte Carlo method (AMCL) to estimate the position and posture of the robot. The application of the A* algorithm was expanded according to the navigation toolkit in ROSA and a specific path of cruise operation was obtained by setting the target points. A considerable amount of research has been devoted to the navigation and positioning of robots [

14,

15,

16,

17], and our team is also writing articles on the issue. Accurate navigation and positioning are helpful for automatic picking, but the main purpose of this paper is to introduce the global–local visual servo picking operations of the robot in a greenhouse. Due to limitations of length, details of the robot’s navigation and positioning are provided here.

Based on the above analysis, critical factors influence the performance of the global–local visual servo picking system, including the rate of identification of ripe fruits, the range of accuracy of binocular vision, and the efficiency and success of picking using the monocular visual servo of the manipulator.