Assessment of the Potential of Wrist-Worn Wearable Sensors for Driver Drowsiness Detection

Abstract

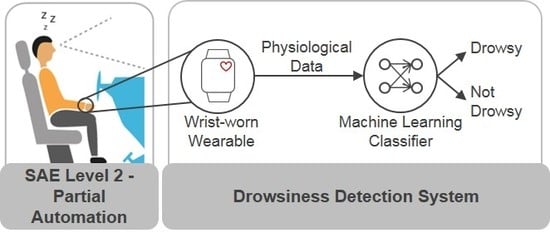

1. Introduction

2. Driver Drowsiness Measurement Technologies

3. Previous Work in Driver Drowsiness Detection Using Wrist-Worn Wearable Devices

- RQ1: Is it possible to reliably detect driver drowsiness using physiological data (HRV) from a wrist-worn wearable device as the single data source, in combination with a machine learning classifier?

- RQ2: Considering the in-vehicle setting, how do the results obtained with the consumer device differ from those obtained with a more intrusive medical-grade device?

- RQ3: How do the results differ in the case of user-dependent vs. user-independent tests?

4. Methodology

4.1. Simulator Study

4.1.1. Simulator

4.1.2. Participants

4.1.3. Study Procedure

4.1.4. Measurement Technique

4.2. Driver State Analysis

4.2.1. Video Ratings

4.2.2. Detection of Micro-Sleep Events through Image Processing

4.3. Feature Extraction and Data Set Preparation

- Time-domain features: Mean RR interval length (meanRR), maximum RR interval length (maxRR), minimum RR interval length (minRR), range of RR interval lengths (rangeRR), standard deviation of RR interval lengths (SDNN), mean of the 5-min standard deviations of RR intervals (SDANNIndex), maximum heart rate (maxHR), minimum heart rate (minHR), average heart rate (meanHR), standard deviation of heart rate (SDHR), square root of the mean of the squared differences between successive RR intervals (RMSSD), number of successive RR interval differences greater than 50 ms (NN50), percentage of successive RR interval differences greater than 50 ms (pNN50);

- Frequency-domain features: Very low frequency power (VLF), low frequency power (LFpower), high frequency power (HFpower), total power (Totalpower), percentage of very low frequency power (pVLF), percentage of low frequency power (pLF), percentage of high frequency power (pHF), normalized low frequency power (LFnorm), normalized high frequency power (HFnorm), ratio of low to high frequency power (LFHF_ratio);

- Non-linear domain features: Standard deviation of instantaneous (short-term) beat-to-beat RR interval variability (SD1), standard deviation of long-term RR interval variability (SD2), ratio of SD1 to SD2.
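To make the time-domain and non-linear definitions above concrete, here is a minimal sketch of how several of them follow from one window of RR intervals. It is not the study's own implementation. It assumes that RR intervals are given in milliseconds, are already cleaned of artefacts, and belong to a single analysis window. SD1 and SD2 are computed directly from the 45°-rotated Poincaré plot. SDANNIndex (which needs several 5-min segments) and the frequency-domain features (which need resampling plus a spectral estimate) are omitted. The dictionary key `SD1SD2_ratio` is a hypothetical name.

```python
import math
import statistics


def hrv_features(rr_ms):
    """Time-domain and Poincare features for one window of RR intervals (ms).

    Assumes artefact-free RR intervals in milliseconds; SDANNIndex and all
    frequency-domain features are deliberately left out of this sketch.
    """
    if len(rr_ms) < 3:
        raise ValueError("need at least three RR intervals")
    # Differences between successive RR intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    # Instantaneous heart rate (beats per minute) for each interval.
    hr = [60000.0 / rr for rr in rr_ms]
    # Successive differences larger than 50 ms in absolute value.
    nn50 = sum(1 for d in diffs if abs(d) > 50)

    # Poincare plot: points (RR_n, RR_n+1) rotated by 45 degrees.
    # SD1 is the spread across the identity line, SD2 the spread along it.
    sd1 = statistics.stdev(diffs) / math.sqrt(2)
    sd2 = statistics.stdev([a + b for a, b in zip(rr_ms, rr_ms[1:])]) / math.sqrt(2)

    return {
        "meanRR": statistics.mean(rr_ms),
        "maxRR": max(rr_ms),
        "minRR": min(rr_ms),
        "rangeRR": max(rr_ms) - min(rr_ms),
        "SDNN": statistics.stdev(rr_ms),
        "meanHR": statistics.mean(hr),
        "maxHR": max(hr),
        "minHR": min(hr),
        "SDHR": statistics.stdev(hr),
        "RMSSD": math.sqrt(sum(d * d for d in diffs) / len(diffs)),
        "NN50": nn50,
        "pNN50": 100.0 * nn50 / len(diffs),
        "SD1": sd1,
        "SD2": sd2,
        "SD1SD2_ratio": sd1 / sd2 if sd2 > 0 else float("nan"),  # hypothetical key
    }


features = hrv_features([800, 810, 790, 860, 800])
print(features["meanRR"], features["NN50"], round(features["RMSSD"], 2))
```

For the five made-up intervals in the example, the successive differences are 10, −20, 70 and −60 ms. Two of them exceed 50 ms, which gives NN50 = 2 and pNN50 = 50%.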

4.4. Classification of Driver Drowsiness
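RQ3 contrasts user-dependent and user-independent tests. This section does not spell out how the folds were built, so the following is a hypothetical sketch of what the two protocols usually mean. In user-dependent tests, training and test windows come from the same participant; here this is done as a k-fold split within each participant. In user-independent tests, one participant is held out completely (leave-one-participant-out). The function names, the strided fold assignment and the participant IDs in the example are assumptions for illustration only.

```python
from collections import defaultdict


def user_independent_splits(participants):
    """Leave-one-participant-out folds: (held_out, train_idx, test_idx)."""
    folds = []
    for held_out in sorted(set(participants)):
        train = [i for i, p in enumerate(participants) if p != held_out]
        test = [i for i, p in enumerate(participants) if p == held_out]
        folds.append((held_out, train, test))
    return folds


def user_dependent_splits(participants, k=10):
    """Within-participant k-fold: train and test windows share one participant."""
    by_participant = defaultdict(list)
    for i, p in enumerate(participants):
        by_participant[p].append(i)
    folds = []
    for p in sorted(by_participant):
        idx = by_participant[p]
        n_folds = min(k, len(idx))
        for f in range(n_folds):
            test = idx[f::n_folds]  # every n_folds-th window of this participant
            test_set = set(test)
            train = [i for i in idx if i not in test_set]
            folds.append((p, train, test))
    return folds


# Hypothetical window-to-participant assignment (six windows, two drivers).
windows = [3, 3, 3, 4, 4, 4]
print(len(user_independent_splits(windows)))     # 2 folds, one per driver
print(len(user_dependent_splits(windows, k=3)))  # 6 folds, three per driver
```

The key design difference is that the user-independent split never lets a model see any window of the test participant during training. The user-dependent split does exactly that.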

4.4.1. Class Balancing and Feature Selection
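The reference list cites SMOTE (Chawla et al.) for class balancing. As a hedged illustration of how synthetic minority over-sampling works, here is a minimal pure-Python sketch. Each synthetic point is placed at a random position on the segment between a minority sample and one of its k nearest minority-class neighbours. Choosing the base sample at random is a simplification of the original per-sample loop. Euclidean distance, the function name `smote` and the example feature values are assumptions, not details taken from the study.

```python
import math
import random


def smote(minority, n_synthetic, k=5, seed=0):
    """Create n_synthetic minority samples by SMOTE-style interpolation.

    minority: list of numeric feature vectors of the minority class.
    Each synthetic point lies on the segment between a randomly chosen
    minority sample and one of its k nearest minority neighbours.
    """
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Indices of the k nearest other minority samples (Euclidean distance).
        neighbours = sorted(
            (j for j in range(len(minority)) if j != i),
            key=lambda j: math.dist(base, minority[j]),
        )[:k]
        other = minority[rng.choice(neighbours)]
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([b + gap * (o - b) for b, o in zip(base, other)])
    return synthetic


# Hypothetical drowsy windows described by (meanRR in ms, meanHR in bpm).
drowsy = [[820.0, 73.2], [845.0, 71.0], [870.0, 69.1], [900.0, 66.7]]
new_points = smote(drowsy, n_synthetic=6, k=2)
print(len(new_points))  # 6
```

With the final labels of 196 non-drowsy and 50 drowsy windows, balancing to parity would take 146 synthetic drowsy windows. The target ratio actually used is not stated here. Over-sampling is normally applied only to the training part of each fold, so that synthetic points derived from test windows cannot leak into the evaluation.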

4.4.2. Performance Measures

5. Results

5.1. Selected Features

5.2. Classification Results

5.3. Discussion and Limitations

6. Conclusions

Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- EuroNCAP. EuroNCAP 2025 Roadmap; Technical Report; EuroNCAP: Leuven, Belgium, 2017.

- National Highway Traffic Safety Administration; US Department of Transportation. Traffic Safety Facts Critical Reasons for Crashes Investigated in the National Motor Vehicle Crash Causation Survey; Technical Report; National Center for Statistics and Analysis (NCSA): Washington, DC, USA, 2015.

- Tefft, B.C. Acute Sleep Deprivation and Risk of Motor Vehicle Crash Involvement. Technical Report. 2016. Available online: https://aaafoundation.org/wp-content/uploads/2017/12/AcuteSleepDeprivationCrashRisk.pdf (accessed on 31 December 2019).

- Society of Automotive Engineers (SAE) International. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles; SAE International: Warrendale, PA, USA, 2018.

- Sahayadhas, A.; Sundaraj, K.; Murugappan, M. Detecting Driver Drowsiness Based on Sensors: A Review. Sensors 2012, 12, 16937–16953.

- Kundinger, T.; Riener, A.; Sofra, N. A Robust Drowsiness Detection Method based on Vehicle and Driver Vital Data. In Mensch und Computer 2017—Workshopband; Burghardt, M., Wimmer, R., Wolff, C., Womser-Hacker, C., Eds.; Gesellschaft für Informatik e.V.: Regensburg, Germany, 2017.

- Poursadeghiyan, M.; Mazloumi, A.; Saraji, G.N.; Niknezhad, A.; Akbarzadeh, A.; Ebrahimi, M.H. Determination the Levels of Subjective and Observer Rating of Drowsiness and Their Associations with Facial Dynamic Changes. Iran. J. Public Health 2017, 46, 93–102.

- Anund, A.; Fors, C.; Hallvig, D.; Åkerstedt, T.; Kecklund, G. Observer Rated Sleepiness and Real Road Driving: An Explorative Study. PLoS ONE 2013, 8, e0064782.

- Åkerstedt, T.; Gillberg, M. Subjective and objective sleepiness in the active individual. Int. J. Neurosci. 1990, 52, 29–37.

- Johns, M.W. A New Method for Measuring Daytime Sleepiness: The Epworth Sleepiness Scale. Sleep 1991, 14, 540–545.

- Shahid, A.; Wilkinson, K.; Marcu, S.; Shapiro, C.M. Stanford Sleepiness Scale (SSS). In Stop, that and One Hundred Other Sleep Scales; Shahid, A., Wilkinson, K., Marcu, S., Shapiro, C.M., Eds.; Springer: New York, NY, USA, 2012; pp. 369–370.

- Monk, T.H. A visual analogue scale technique to measure global vigor and affect. Psychiatry Res. 1989, 27, 89–99.

- Weinbeer, V.; Muhr, T.; Bengler, K.; Baur, C.; Radlmayr, J.; Bill, J. Highly Automated Driving: How to Get the Driver Drowsy and How Does Drowsiness Influence Various Take-Over-Aspects? 8. Tagung Fahrerassistenz; Lehrstuhl für Fahrzeugtechnik mit TÜV SÜD Akademie: Munich, Germany, 2017.

- Ahlstrom, C.; Fors, C.; Anund, A.; Hallvig, D. Video-based observer rated sleepiness versus self-reported subjective sleepiness in real road driving. Eur. Transp. Res. Rev. 2015, 7, 38.

- Mashko, A. Subjective Methods for the Assessment of Driver Drowsiness. Acta Polytech. CTU Proc. 2017, 12, 64.

- Knipling, R.R.; Wierwille, W.W. Vehicle-based drowsy driver detection: Current status and future prospects. In Proceedings of the IVHS AMERICA Conference Moving Toward Deployment, Atlanta, GA, USA, 17–20 April 1994.

- Ueno, H.; Kaneda, M.; Tsukino, M. Development of drowsiness detection system. In Proceedings of the VNIS’94—1994 Vehicle Navigation and Information Systems Conference, Yokohama, Japan, 31 August–2 September 1994; pp. 15–20.

- Leonhardt, S.; Leicht, L.; Teichmann, D. Unobtrusive vital sign monitoring in automotive environments—A review. Sensors 2018, 18, 3080.

- Li, G.; Mao, R.; Hildre, H.; Zhang, H. Visual Attention Assessment for Expert-in-the-loop Training in a Maritime Operation Simulator. IEEE Trans. Ind. Inform. 2019, 16, 522–531.

- Sant’Ana, M.; Li, G.; Zhang, H. A Decentralized Sensor Fusion Approach to Human Fatigue Monitoring in Maritime Operations. In Proceedings of the 2019 IEEE 15th International Conference on Control and Automation (ICCA), Edinburgh, UK, 16–19 July 2019; pp. 1569–1574.

- Hu, X.; Lodewijks, G. Detecting fatigue in car drivers and aircraft pilots by using non-invasive measures: The value of differentiation of sleepiness and mental fatigue. J. Saf. Res. 2020, 72, 173–187.

- Doudou, M.; Bouabdallah, A.; Berge-Cherfaoui, V. Driver Drowsiness Measurement Technologies: Current Research, Market Solutions, and Challenges. Int. J. Intell. Transp. Syst. Res. 2019, 1–23.

- Vesselenyi, T.; Moca, S.; Rus, A.; Mitran, T.; Tătaru, B. Driver drowsiness detection using ANN image processing. IOP Conf. Ser. Mater. Sci. Eng. 2017, 252, 012097.

- Jabbar, R.; Al-Khalifa, K.; Kharbeche, M.; Alhajyaseen, W.; Jafari, M.; Jiang, S. Real-time Driver Drowsiness Detection for Android Application Using Deep Neural Networks Techniques. Procedia Comput. Sci. 2018, 130, 400–407.

- Shakeel, M.F.; Bajwa, N.A.; Anwaar, A.M.; Sohail, A.; Khan, A.; ur Rashid, H. Detecting Driver Drowsiness in Real Time Through Deep Learning Based Object Detection. In Advances in Computational Intelligence; Rojas, I., Joya, G., Catala, A., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 283–296.

- Vijayan, V.; Sherly, E. Real time detection system of driver drowsiness based on representation learning using deep neural networks. J. Intell. Fuzzy Syst. 2019, 36, 1–9.

- Bamidele, A.; Kamardin, K.; Syazarin, N.; Mohd, S.; Shafi, I.; Azizan, A.; Aini, N.; Mad, H. Non-intrusive Driver Drowsiness Detection based on Face and Eye Tracking. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 549–569.

- SmartEye. Driver Monitoring System. Interior Sensing for Vehicle Integration. 2019. Available online: https://smarteye.se/automotive-solutions/ (accessed on 31 December 2019).

- Edenborough, N.; Hammoud, R.; Harbach, A.; Ingold, A.; Kisacanin, B.; Malawey, P.; Newman, T.; Scharenbroch, G.; Skiver, S.; Smith, M.; et al. Driver state monitor from DELPHI. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–26 June 2005; Volume 2, pp. 1206–1207.

- NoNap. NoNap Anti Sleep Alarm. 2019. Available online: http://www.thenonap.com/ (accessed on 31 December 2019).

- Optalert. Scientifically validated Glasses-Mining. 2019. Available online: https://www.optalert.com/explore-products/scientifically-validated-glasses-mining/ (accessed on 31 December 2019).

- Corbett, M.A. A Drowsiness Detection System for Pilots: Optalert. Aviat. Space Environ. Med. 2009, 80, 149.

- Zhang, W.; Cheng, B.; Lin, Y. Driver drowsiness recognition based on computer vision technology. Tsinghua Sci. Technol. 2012, 17, 354–362.

- Trutschel, U.; Sirois, B.; Sommer, D.; Golz, M.; Edwards, D. PERCLOS: An Alertness Measure of the Past. In Proceedings of the Driving Assessment 2011: 6th International Driving Symposium on Human Factors in Driver Assessment, Training, and Vehicle Design, Lake Tahoe, CA, USA, 27–30 June 2011; pp. 172–179.

- Forsman, P.M.; Vila, B.J.; Short, R.A.; Mott, C.G.; Dongen, H.P.V. Efficient driver drowsiness detection at moderate levels of drowsiness. Accid. Anal. Prev. 2013, 50, 341–350.

- Ingre, M.; Åkerstedt, T.; Peters, B.; Anund, A.; Kecklund, G.; Pickles, A. Subjective sleepiness and accident risk avoiding the ecological fallacy. J. Sleep Res. 2006, 15, 142–148.

- Morris, D.M.; Pilcher, J.J.; Switzer, F.S., III. Lane heading difference: An innovative model for drowsy driving detection using retrospective analysis around curves. Accid. Anal. Prev. 2015, 80, 117–124.

- Friedrichs, F.; Yang, B. Drowsiness monitoring by steering and lane data based features under real driving conditions. In Proceedings of the European Signal Processing Conference, Aalborg, Denmark, 23–27 August 2010; pp. 209–213.

- Li, Z.; Li, S.E.; Li, R.; Cheng, B.; Shi, J. Online detection of driver fatigue using steering wheel angles for real driving conditions. Sensors 2017, 17, 495.

- McDonald, A.D.; Schwarz, C.; Lee, J.D.; Brown, T.L. Real-Time Detection of Drowsiness Related Lane Departures Using Steering Wheel Angle. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Boston, MA, USA, 22–26 October 2012; Volume 56, pp. 2201–2205.

- Driver Alert System. Available online: https://www.volkswagen.co.uk/technology/car-safety/driver-alert-systemn (accessed on 31 December 2019).

- ATTENTION ASSIST: Drowsiness-Detection System Warns Drivers to Prevent Them Falling Asleep Momentarily. Available online: https://media.daimler.com/marsMediaSite/en/instance/ko.xhtml?oid=9361586 (accessed on 31 December 2019).

- Lexus Safety System+. Available online: https://drivers.lexus.com/lexus-drivers-theme/pdf/LSS+%20Quick%20Guide%20Link.pdf (accessed on 31 December 2019).

- Taran, S.; Bajaj, V. Drowsiness Detection Using Adaptive Hermite Decomposition and Extreme Learning Machine for Electroencephalogram Signals. IEEE Sens. J. 2018, 18, 8855–8862.

- Rundo, F.; Rinella, S.; Massimino, S.; Coco, M.; Fallica, G.; Parenti, R.; Conoci, S.; Perciavalle, V. An Innovative Deep Learning Algorithm for Drowsiness Detection from EEG Signal. Computation 2019, 7, 13.

- Budak, U.; Bajaj, V.; Akbulut, Y.; Atila, O.; Sengur, A. An Effective Hybrid Model for EEG-Based Drowsiness Detection. IEEE Sens. J. 2019, 19, 7624–7631.

- Lee, H.; Lee, J.; Shin, M. Using Wearable ECG/PPG Sensors for Driver Drowsiness Detection Based on Distinguishable Pattern of Recurrence Plots. Electronics 2019, 8, 192.

- Gromer, M.; Salb, D.; Walzer, T.; Madrid, N.M.; Seepold, R. ECG sensor for detection of driver’s drowsiness. Procedia Comput. Sci. 2019, 159, 1938–1946.

- Babaeian, M.; Mozumdar, M. Driver Drowsiness Detection Algorithms Using Electrocardiogram Data Analysis. In Proceedings of the 2019 IEEE 9th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 7–9 January 2019; pp. 0001–0006.

- Zheng, W.; Gao, K.; Li, G.; Liu, W.; Liu, C.; Liu, J.; Wang, G.; Lu, B. Vigilance Estimation Using a Wearable EOG Device in Real Driving Environment. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1–15.

- Barua, S.; Ahmed, M.U.; Ahlström, C.; Begum, S. Automatic driver sleepiness detection using EEG, EOG and contextual information. Expert Syst. Appl. 2019, 115, 121–135.

- Hu, S.; Zheng, G. Driver drowsiness detection with eyelid related parameters by Support Vector Machine. Expert Syst. Appl. 2009, 36, 7651–7658.

- Mahmoodi, M.; Nahvi, A. Driver drowsiness detection based on classification of surface electromyography features in a driving simulator. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2019, 233, 395–406.

- Fu, R.; Wang, H. Detection of Driving Fatigue by using noncontact EMG and ECG signals measurement system. Int. J. Neural Syst. 2014, 24, 1450006.

- Wörle, J.; Metz, B.; Thiele, C.; Weller, G. Detecting sleep in drivers during highly automated driving: The potential of physiological parameters. IET Intell. Transp. Syst. 2019, 13, 1241–1248.

- Ramesh, M.V.; Nair, A.K.; Kunnathu, A.T. Real-Time Automated Multiplexed Sensor System for Driver Drowsiness Detection. In Proceedings of the 2011 7th International Conference on Wireless Communications, Networking and Mobile Computing, Wuhan, China, 23–25 September 2011; pp. 1–4.

- Rahim, H.; Dalimi, A.; Jaafar, H. Detecting Drowsy Driver Using Pulse Sensor. J. Teknol. 2015, 73, 5–8.

- Jung, S.; Shin, H.; Chung, W. Driver fatigue and drowsiness monitoring system with embedded electrocardiogram sensor on steering wheel. IET Intell. Transp. Syst. 2014, 8, 43–50.

- Solaz, J.; Laparra-Hernández, J.; Bande, D.; Rodríguez, N.; Veleff, S.; Gerpe, J.; Medina, E. Drowsiness Detection Based on the Analysis of Breathing Rate Obtained from Real-time Image Recognition. Transp. Res. Procedia 2016, 14, 3867–3876.

- Final Report Summary—HARKEN (Heart and Respiration in-Car Embedded Nonintrusive Sensors); FP7; CORDIS, European Commission. 2014. Available online: https://cordis.europa.eu/project/rcn/103870/reporting/en (accessed on 31 December 2019).

- Creative Mode. STEER: Wearable Device That Will Not Let You Fall Asleep. 2019. Available online: https://www.kickstarter.com/projects/creativemode/steer-you-will-never-fall-asleep-while-driving?lang=en (accessed on 31 December 2019).

- StopSleep. Anti-Sleep Alarm. 2019. Available online: https://www.stopsleep.co.uk/ (accessed on 31 December 2019).

- Neurocom. Driver Vigilance Telemetric Control System—VIGITON. 2019. Available online: http://www.neurocom.ru/en2/product/vigiton.html (accessed on 31 December 2019).

- Strategy Analytics. Global Smartwatch Vendor Market Share by Region: Q4 2018. 2015. Available online: https://www.strategyanalytics.com/access-services/devices/wearables/market-data/report-detail/global-smartwatch-vendor-market-share-by-region-q4-2018 (accessed on 31 December 2019).

- Georgiou, K.; Larentzakis, A.V.; Khamis, N.N.; Alsuhaibani, G.I.; Alaska, Y.A.; Giallafos, E.J. Can Wearable Devices Accurately Measure Heart Rate Variability? A Systematic Review. Folia Med. 2018, 60, 7–20.

- Lee, B.; Lee, B.; Chung, W. Standalone Wearable Driver Drowsiness Detection System in a Smartwatch. IEEE Sens. J. 2016, 16, 5444–5451.

- Lee, B.L.; Lee, B.G.; Li, G.; Chung, W.Y. Wearable Driver Drowsiness Detection System Based on Smartwatch. In Proceedings of the Korea Institute of Signal Processing and Systems (KISPS) Fall Conference, Korea, Japan, 21–22 November 2014; Volume 15, pp. 134–146.

- Leng, L.B.; Giin, L.B.; Chung, W. Wearable driver drowsiness detection system based on biomedical and motion sensors. In Proceedings of the 2015 IEEE SENSORS, Busan, Korea, 1–4 November 2015.

- Choi, M.; Koo, G.; Seo, M.; Kim, S.W. Wearable Device-Based System to Monitor a Driver’s Stress, Fatigue, and Drowsiness. IEEE Trans. Instrum. Meas. 2018, 67, 634–645.

- Li, Q.; Wu, J.; Kim, S.D.; Kim, C.G. Hybrid Driver Fatigue Detection System Based on Data Fusion with Wearable Sensor Devices. Available online: https://www.semanticscholar.org/paper/Hybrid-Driver-Fatigue-Detection-System-Based-on-Li-Wu/90da9d40baa5172d962930d838e5ea040f463bad (accessed on 14 February 2020).

- Kundinger, T.; Riener, A.; Sofra, N.; Weigl, K. Drowsiness Detection and Warning in Manual and Automated Driving: Results from Subjective Evaluation. In Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI ’18, Toronto, ON, Canada, 23–25 September 2018; pp. 229–236.

- Sleep Health Foundation. Sleep Needs Across the Lifespan. 2015. Available online: http://www.sleephealthfoundation.org.au/files/pdfs/Sleep-Needs-Across-Lifespan.pdf (accessed on 31 December 2019).

- Empatica Support. Recent Publications Citing the E4 wristband. 2018. Available online: https://support.empatica.com/hc/en-us/articles/115002540543-Recent-Publications-citing-the-E4-wristband- (accessed on 31 December 2019).

- McCarthy, C.; Pradhan, N.; Redpath, C.; Adler, A. Validation of the Empatica E4 wristband. In Proceedings of the 2016 IEEE EMBS International Student Conference (ISC), Ottawa, ON, Canada, 29–31 May 2016; pp. 1–4.

- Bittium Corporation. Bittium Faros Waterproof ECG Devices. 2019. Available online: https://support.empatica.com/hc/en-us/articles/115002540543-Recent-Publications-citing-the-E4-wristband- (accessed on 31 December 2019).

- Vicente, J.; Laguna, P.; Bartra, A.; Bailón, R. Drowsiness detection using heart rate variability. Med. Biol. Eng. Comput. 2016, 54, 927–937.

- Michail, E.; Kokonozi, A.; Chouvarda, I.; Maglaveras, N. EEG and HRV markers of sleepiness and loss of control during car driving. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 2566–2569.

- Malik, M.; Camm, A.; Bigger, J.; Breithardt, G.; Cerutti, S.; Cohen, R.; Coumel, P.; Fallen, E.; Kennedy, H.; Kleiger, R.; et al. Heart rate variability. Standards of measurement, physiological interpretation, and clinical use. Eur. Heart J. 1996, 17, 354–381.

- Lee, I.; Lau, P.; Chua, E.C.P.; Gooley, J.J.; Tan, W.Q.; Yeo, S.C.; Puvanendran, K.; Mien, I.H. Heart Rate Variability Can Be Used to Estimate Sleepiness-related Decrements in Psychomotor Vigilance during Total Sleep Deprivation. Sleep 2012, 35, 325–334.

- Sandberg, D. The performance of driver sleepiness indicators as a function of interval length. In Proceedings of the 2011 14th International IEEE Conference on Intelligent Transportation Systems (ITSC), Washington, DC, USA, 5–7 October 2011; pp. 1735–1740.

- Landis, J.R.; Koch, G.G. The Measurement of Observer Agreement for Categorical Data; Technical Report 1; International Biometric Society: Washington, DC, USA, 1977.

- Tarvainen, M.P.; Niskanen, J.P.; Lipponen, J.A.; Ranta-aho, P.O.; Karjalainen, P.A. Kubios HRV—Heart rate variability analysis software. Comput. Methods Programs Biomed. 2014, 113, 210–220.

- Empatica Support. E4 Data—IBI Expected Signal. 2020. Available online: https://support.empatica.com/hc/en-us/articles/360030058011-E4-data-IBI-expected-signal (accessed on 31 January 2020).

- Li, G.; Chung, W.Y. Detection of Driver Drowsiness Using Wavelet Analysis of Heart Rate Variability and a Support Vector Machine Classifier. Sensors 2013, 13, 16494–16511.

- Shirmohammadi, S.; Barbe, K.; Grimaldi, D.; Rapuano, S.; Grassini, S. Instrumentation and measurement in medical, biomedical, and healthcare systems. IEEE Instrum. Meas. Mag. 2016, 19, 6–12.

- Kohavi, R. A Study of Cross-validation and Bootstrap for Accuracy Estimation and Model Selection. In Proceedings of the 14th International Joint Conference on Artificial Intelligence, IJCAI’95, Montreal, QC, Canada, 20–25 August 1995; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1995; Volume 2, pp. 1137–1143.

- Chawla, N.; Bowyer, K.; Hall, L.; Kegelmeyer, W. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Hall, M.A. Correlation-Based Feature Subset Selection for Machine Learning. Ph.D. Thesis, University of Waikato, Hamilton, New Zealand, 1998.

- Branco, P.; Torgo, L.; Ribeiro, R.P. A Survey of Predictive Modeling on Imbalanced Domains. ACM Comput. Surv. 2016, 49.

- Hall, M.; Frank, E.; Holmes, G.; Pfahringer, B.; Reutemann, P.; Witten, I.H. The WEKA Data Mining Software: An Update. SIGKDD Explor. 2009, 11, 10–18.

- Persson, A.; Jonasson, H.; Fredriksson, I.; Wiklund, U.; Ahlström, C. Heart Rate Variability for Driver Sleepiness Classification in Real Road Driving Conditions. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 6537–6540.

| Drowsiness Level | Indicators |
|---|---|
| 1: not drowsy | appearance of alertness present; normal facial tone; normal fast eye blinks; short ordinary glances; occasional body movements/gestures |
| 2: slightly drowsy | still sufficiently alert; less sharp/alert looks; longer glances; slower eye blinks; first mannerisms such as rubbing face/eyes, scratching, facial contortions, moving restlessly in the seat |
| 3: moderately drowsy | mannerisms; slower eyelid closures; decreasing facial tone; glassy eyes; staring at a fixed position |
| 4: drowsy | eyelid closures (1–2 s); eyes rolling sideways; rarer blinks; no properly focused eyes; decreased facial tone; lack of apparent activity; large isolated or punctuating movements |
| 5: very drowsy | eyelid closures (2–3 s); eyes rolling upward/sideways; no properly focused eyes; decreased facial tone; lack of apparent activity; large isolated or punctuating movements |
| 6: extremely drowsy | eyelid closures (4 s or more); falling asleep; longer periods of lack of activity; movements when transitioning in and out of dozing |

| Drowsiness Level | Eyelid Closure Time t (s) | Micro-Sleep Events |
|---|---|---|
| 4: drowsy | 1 ≤ t < 2 | 89 |
| 5: very drowsy | 2 ≤ t < 4 | 69 |
| 6: extremely drowsy | t ≥ 4 | 43 |
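The duration bands in the micro-sleep table translate into a simple threshold function. The following is a minimal sketch. The image-processing step that measures each eyelid closure is not shown. The function names are hypothetical, and the durations in the example are invented for illustration.

```python
from collections import Counter


def level_from_closure(duration_s):
    """Drowsiness level for one eyelid closure lasting duration_s seconds.

    Bands from the micro-sleep table: [1, 2) s -> 4, [2, 4) s -> 5,
    >= 4 s -> 6. Closures shorter than 1 s are not micro-sleep events.
    """
    if duration_s >= 4.0:
        return 6
    if duration_s >= 2.0:
        return 5
    if duration_s >= 1.0:
        return 4
    return None


def count_events(durations_s):
    """Number of micro-sleep events per drowsiness level."""
    levels = (level_from_closure(d) for d in durations_s)
    return Counter(level for level in levels if level is not None)


# Invented closure durations, for illustration only.
print(count_events([0.4, 1.2, 2.5, 4.1, 1.9, 3.99]))  # Counter({4: 2, 5: 2, 6: 1})
```

The bands are half-open, so a closure of exactly 2 s counts as level 5, not level 4. This matches the "≤ … <" notation in the table.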

| Observer Rating | Adjusted Rating | Number of Occurrences |
|---|---|---|
| 1 | 4 | 1 |
| 2 | 4 | 3 |
| 2 | 5 | 4 |
| 2 | 6 | 1 |
| 3 | 4 | 2 |
| 3 | 5 | 5 |
| 4 | 5 | 3 |
| 5 | 6 | 1 |
| 6 | 4 | 1 |
| n.a. | 6 | 2 |

| Class | Video Ratings | Video Ratings + Micro-Sleep Events |
|---|---|---|
| non-drowsy (level 1–3) | 212 | 196 |
| drowsy (level 4–6) | 32 | 50 |
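The class counts and the re-rating table are consistent with each other. The reconciliation rests on one assumption that this section does not state: the two windows marked "n.a." had no video rating and entered the data set only through their micro-sleep events. Under that assumption, applying the re-ratings to the video-rating counts reproduces the final counts exactly. Sixteen windows move from non-drowsy to drowsy, two are added as drowsy, and re-ratings within levels 4–6 leave the classes unchanged. The sketch below does this arithmetic. Its names are hypothetical.

```python
# Rows of the re-rating table: (observer rating, adjusted rating, occurrences).
# None stands for "n.a." (no observer rating for that window).
ADJUSTMENTS = [
    (1, 4, 1), (2, 4, 3), (2, 5, 4), (2, 6, 1), (3, 4, 2),
    (3, 5, 5), (4, 5, 3), (5, 6, 1), (6, 4, 1), (None, 6, 2),
]


def is_drowsy(level):
    """Binary class: levels 4-6 are 'drowsy', levels 1-3 'non-drowsy'."""
    return level is not None and level >= 4


def reconcile(non_drowsy, drowsy, adjustments):
    """Apply re-ratings to class counts that were based on video ratings only."""
    for before, after, n in adjustments:
        if before is not None:
            # Remove the windows from the class implied by the video rating.
            if is_drowsy(before):
                drowsy -= n
            else:
                non_drowsy -= n
        # Add them to the class implied by the adjusted rating.
        if is_drowsy(after):
            drowsy += n
        else:
            non_drowsy += n
    return non_drowsy, drowsy


print(reconcile(212, 32, ADJUSTMENTS))  # (196, 50)
```

The adjustment raises the drowsy share from about 13.1% (32 of 244 windows) to about 20.3% (50 of 246). The data remain clearly imbalanced, which is what the class balancing step addresses.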

| Test | Device | Selected feature subsets (count × features) |
|---|---|---|
| UDT | Wristband | 8× meanRR, meanHR; 1× meanRR, meanHR, RMSSD; 1× meanRR, meanHR, RMSSD, NN50 |
| UDT | ECG | 3× maxRR, minRR, maxHR, minHR; 2× maxHR, minHR; 5× maxRR, minRR |
| UIT | Wristband | 5× meanRR, meanHR, SD1; 1× meanRR, maxRR, SD1, LFpower; 1× meanRR, maxRR, meanHR; 5× meanRR, meanHR, RMSSD; 9× meanRR, meanHR; 1× meanRR, meanHR, HFpower; 1× meanRR, meanHR, pNN50, SD1; 1× meanRR, meanHR, RMSSD, LFpower; 1× meanRR, meanHR, SD2; 1× meanRR, meanHR, Totalpower; 1× meanRR, minHR, meanHR, RMSSD, NN50 |
| UIT | ECG | 8× maxRR, minRR, maxHR, minHR; 5× maxRR, minRR, rangeRR, maxHR, minHR; 3× maxRR, maxHR; 1× maxHR, minHR; 6× minRR, minHR; 4× maxRR, minRR |
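The feature-selection table can be read as counts of how often each subset was chosen. This is an interpretation, but the counts add up to 10 per device for UDT and 27 per device for UIT. On that reading, a short tally shows which individual features dominate. The sketch copies two rows of the table; the variable and function names are hypothetical.

```python
from collections import Counter

# Two rows of the feature-selection table, as (count, features).
UDT_WRISTBAND = [
    (8, ["meanRR", "meanHR"]),
    (1, ["meanRR", "meanHR", "RMSSD"]),
    (1, ["meanRR", "meanHR", "RMSSD", "NN50"]),
]
UIT_ECG = [
    (8, ["maxRR", "minRR", "maxHR", "minHR"]),
    (5, ["maxRR", "minRR", "rangeRR", "maxHR", "minHR"]),
    (3, ["maxRR", "maxHR"]),
    (1, ["maxHR", "minHR"]),
    (6, ["minRR", "minHR"]),
    (4, ["maxRR", "minRR"]),
]


def tally(subsets):
    """Count in how many selections each individual feature appears."""
    counts = Counter()
    for n, features in subsets:
        for feature in features:
            counts[feature] += n
    return counts


for name, rows in (("UDT wristband", UDT_WRISTBAND), ("UIT ECG", UIT_ECG)):
    total = sum(n for n, _ in rows)
    print(name, total, tally(rows).most_common())
```

For the UIT ECG models, minRR appears in 23 of 27 selections, maxRR and minHR in 20 each, and maxHR in 17. For the UDT wristband models, meanRR and meanHR appear in all 10 selections.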

| UDT | Wristband | | | ECG | | |
|---|---|---|---|---|---|---|
| Model | A | F1 | F2 | A | F1 | F2 |
| BN | 79.59 | 0.86 | 0.62 | 96.85 | 0.98 | 0.92 |
| NB | 68.39 | 0.79 | 0.32 | 53.88 | 0.66 | 0.26 |
| SVM | 29.27 | 0.72 | 0.32 | 54.39 | 0.66 | 0.28 |
| KNN | 92.13 | 0.95 | 0.83 | 97.34 | 0.98 | 0.94 |
| RF | 91.58 | 0.94 | 0.82 | 97.37 | 0.98 | 0.94 |
| RT | 90.02 | 0.93 | 0.79 | 97.37 | 0.98 | 0.94 |
| DS | 77.60 | 0.86 | 0.36 | 81.36 | 0.89 | 0.21 |
| DT | 80.61 | 0.87 | 0.65 | 97.18 | 0.98 | 0.93 |
| MLP | 61.94 | 0.69 | 0.46 | 64.43 | 0.71 | 0.30 |

| UIT | Wristband | | | ECG | | |
|---|---|---|---|---|---|---|
| Model | A | F1 | F2 | A | F1 | F2 |
| BN | 57.77 | 0.69 | 0.19 | 70.86 | 0.77 | 0.10 |
| NB | 66.74 | 0.80 | 0.57 | 41.84 | 0.63 | 0.20 |
| SVM | 65.64 | 0.79 | 0.26 | 40.01 | 0.61 | 0.28 |
| KNN | 55.44 | 0.71 | 0.12 | 65.71 | 0.75 | 0.14 |
| RF | 62.36 | 0.74 | 0.20 | 70.64 | 0.79 | 0.13 |
| RT | 63.16 | 0.72 | 0.19 | 68.88 | 0.76 | 0.21 |
| DS | 73.39 | 0.82 | 0.65 | 78.94 | 0.83 | 0.17 |
| DT | 64.28 | 0.73 | 0.15 | 76.14 | 0.73 | 0.37 |
| MLP | 43.48 | 0.57 | 0.42 | 25.84 | 0.49 | 0.22 |
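RQ3 asks how user-dependent and user-independent results differ. As a hedged aid, the accuracy columns of the UDT and UIT tables can be compared directly. The sketch below only subtracts the reported mean accuracies and picks the best model per device. It does not reproduce any statistical test, and its names are hypothetical.

```python
# Mean accuracy A (%) per model from the UDT and UIT tables,
# stored as (wristband, ECG).
UDT = {
    "BN": (79.59, 96.85), "NB": (68.39, 53.88), "SVM": (29.27, 54.39),
    "KNN": (92.13, 97.34), "RF": (91.58, 97.37), "RT": (90.02, 97.37),
    "DS": (77.60, 81.36), "DT": (80.61, 97.18), "MLP": (61.94, 64.43),
}
UIT = {
    "BN": (57.77, 70.86), "NB": (66.74, 41.84), "SVM": (65.64, 40.01),
    "KNN": (55.44, 65.71), "RF": (62.36, 70.64), "RT": (63.16, 68.88),
    "DS": (73.39, 78.94), "DT": (64.28, 76.14), "MLP": (43.48, 25.84),
}
DEVICES = ("Wristband", "ECG")


def accuracy_drop(udt, uit):
    """Percentage-point difference UDT - UIT per model and device."""
    return {
        model: tuple(round(a - b, 2) for a, b in zip(udt[model], uit[model]))
        for model in udt
    }


def best_model(results, device_index):
    """Model with the highest accuracy for one device (first one on ties)."""
    return max(results, key=lambda m: results[m][device_index])


drops = accuracy_drop(UDT, UIT)
for model, (d_wrist, d_ecg) in drops.items():
    print(f"{model:4s} wristband {d_wrist:+7.2f}  ECG {d_ecg:+7.2f}")
for i, device in enumerate(DEVICES):
    print(device, "best UDT:", best_model(UDT, i), "best UIT:", best_model(UIT, i))
```

By this arithmetic, KNN loses about 37 points on the wristband and RF about 29. DS loses only about 4 points (wristband) and 2.4 points (ECG), and it is the most accurate model for both devices in the UIT setting. SVM on the wristband is the only case where UIT accuracy exceeds UDT accuracy. For ECG, RF and RT tie at 97.37 in the UDT setting, and `best_model` reports the first of the two.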

| Participant | | 3 | | | 4 | | | 21 | | | 27 | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Model | Device | A | F1 | F2 | A | F1 | F2 | A | F1 | F2 | A | F1 | F2 |
| BN | Wristband | 59.90 | 0.75 | 0.00 | 20.45 | 0.32 | 0.04 | 52.30 | 0.65 | 0.27 | 18.02 | 0.17 | 0.20 |
| BN | ECG | 51.73 | 0.59 | 0.41 | 36.57 | 0.54 | 0.00 | 77.06 | 0.87 | 0.00 | 85.49 | 0.92 | 0.51 |
| KNN | Wristband | 58.17 | 0.73 | 0.05 | 22.30 | 0.37 | 0.00 | 62.20 | 0.77 | 0.00 | 42.55 | 0.60 | 0.01 |
| KNN | ECG | 63.41 | 0.68 | 0.58 | 42.93 | 0.59 | 0.07 | 74.56 | 0.85 | 0.04 | 78.65 | 0.86 | 0.26 |
| RF | Wristband | 57.37 | 0.73 | 0.02 | 53.16 | 0.14 | 0.68 | 63.65 | 0.78 | 0.00 | 47.43 | 0.64 | 0.04 |
| RF | ECG | 66.62 | 0.77 | 0.36 | 42.29 | 0.54 | 0.23 | 72.40 | 0.84 | 0.02 | 83.49 | 0.91 | 0.00 |
| RT | Wristband | 56.74 | 0.72 | 0.02 | 40.90 | 0.52 | 0.24 | 63.29 | 0.76 | 0.00 | 47.06 | 0.63 | 0.04 |
| RT | ECG | 67.27 | 0.78 | 0.36 | 42.29 | 0.54 | 0.23 | 74.81 | 0.86 | 0.02 | 87.49 | 0.93 | 0.00 |
| DT | Wristband | 49.60 | 0.66 | 0.00 | 34.20 | 0.04 | 0.50 | 58.09 | 0.74 | 0.00 | 33.29 | 0.43 | 0.19 |
| DT | ECG | 38.25 | 0.00 | 0.55 | 60.57 | 0.40 | 0.71 | 87.49 | 0.93 | 0.00 | 87.48 | 0.93 | 0.00 |
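The tables do not spell out what F1 and F2 denote. One reading is consistent with the reported numbers, although the text does not confirm it: F1 and F2 are the F1-scores of the non-drowsy and the drowsy class, respectively. For example, DT on ECG data for participant 3 reports A = 38.25, F1 = 0.00 and F2 = 0.55. That is exactly what a classifier labelling every window as drowsy would score if 38.25% of that participant's windows were drowsy. The sketch below checks this with synthetic labels: 153 drowsy and 247 non-drowsy windows, chosen only to reproduce that proportion.

```python
def accuracy(y_true, y_pred):
    """Percentage of windows whose predicted class matches the label."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return 100.0 * hits / len(y_true)


def f1_for_class(y_true, y_pred, cls):
    """F1-score of one class: 2TP / (2TP + FP + FN), or 0.0 if undefined."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p != cls)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Synthetic labels: 153 drowsy and 247 non-drowsy windows (38.25% drowsy).
y_true = ["drowsy"] * 153 + ["non-drowsy"] * 247
# Degenerate classifier that predicts "drowsy" for every window.
y_pred = ["drowsy"] * len(y_true)

print(accuracy(y_true, y_pred))                              # 38.25
print(round(f1_for_class(y_true, y_pred, "non-drowsy"), 2))  # 0.0
print(round(f1_for_class(y_true, y_pred, "drowsy"), 2))      # 0.55
```

The mirror case fits as well. Participant 21 with DT on ECG (A = 87.49, F1 = 0.93, F2 = 0.00) matches a classifier that labels every window non-drowsy, because 2 × 0.8749 / 1.8749 ≈ 0.93. Under this reading, such rows reflect single-class predictions, not genuine discrimination, despite their seemingly high accuracy.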

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kundinger, T.; Sofra, N.; Riener, A. Assessment of the Potential of Wrist-Worn Wearable Sensors for Driver Drowsiness Detection. Sensors 2020, 20, 1029. https://doi.org/10.3390/s20041029
