Product Inspection Methodology via Deep Learning: An Overview

Abstract

1. Introduction

- A series of steps to train and utilize deep learning models for the product inspection system in detail;

- Choosing proper deep learning models for the system;

- Connecting deep learning models to existing systems;

- User interface and maintenance schemes.

2. Related Work

2.1. Conventional Product Inspection Systems

2.2. Product Inspection with Deep Learning

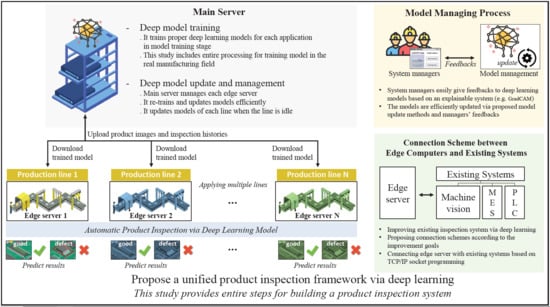

3. Proposed Automatic Product Inspection Framework via Deep Learning

3.1. Model Training Stage

3.1.1. Data Collection

3.1.2. Data Pre-Processing

- Image transformation is a traditional data augmentation method that creates new image data by transforming the given images in the following ways. (1) Perspective transformation changes the size, rotation, and perspective of an image. It has eight degrees of freedom (DOFs) and transforms the image as if the camera had observed it from a different viewpoint. (2) Color transformation changes the color distribution and the color space (e.g., RGB or HSV) of images. (3) Noise addition adds various kinds of noise to images, such as salt-and-pepper, Gaussian, and Poisson noise. These kinds of noise can be added using kernel filtering.

- Image generation creates new images based on the distribution of the acquired images, using a generative adversarial network (GAN) [36]. Specifically, CycleGAN [37] or ProgressiveGAN [38] can be used. If defect images are already available from other production lines, CycleGAN can create defect images for the target line from that line's non-defective images, which are usually very easy to collect. In addition, images created with a general GAN may contain distortions that cause them to be classified as defective; ProgressiveGAN can reduce this problem.
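The image transformations listed above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the nearest-neighbour resampling, the per-channel gain used as a simple color transformation, and the per-pixel noise sampling are all assumptions made for illustration. The eight DOFs of the perspective transformation appear as the eight unknown entries of the 3x3 homography, which are solved from four point correspondences.

```python
import numpy as np

def homography_from_points(src, dst):
    """Solve the 8 unknowns of a homography (H[2, 2] fixed to 1) from 4 point pairs."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)

def warp_perspective(image, H):
    """Warp an image with homography H using inverse mapping and nearest-neighbour sampling."""
    h, w = image.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    dst = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)])
    src = np.linalg.inv(H) @ dst                      # where each output pixel comes from
    sx = np.rint(src[0] / src[2]).astype(int)
    sy = np.rint(src[1] / src[2]).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    flat_in = image.reshape(h * w, -1)
    flat_out = np.zeros_like(flat_in)                 # pixels with no source stay black
    flat_out[valid] = flat_in[sy[valid] * w + sx[valid]]
    return flat_out.reshape(image.shape)

def random_perspective(image, max_shift, rng):
    """Move the four image corners by up to max_shift pixels and warp accordingly."""
    h, w = image.shape[:2]
    corners = np.array([[0, 0], [w - 1, 0], [w - 1, h - 1], [0, h - 1]], dtype=float)
    moved = corners + rng.uniform(-max_shift, max_shift, corners.shape)
    return warp_perspective(image, homography_from_points(corners, moved))

def scale_channels(image, gains):
    """Simple color transformation: multiply each color channel by its own gain."""
    return np.clip(image.astype(float) * np.asarray(gains), 0, 255).astype(np.uint8)

def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to every pixel."""
    noisy = image.astype(float) + rng.normal(0.0, sigma, image.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)

def add_salt_and_pepper(image, amount, rng):
    """Set roughly amount/2 of the pixels to black and amount/2 to white."""
    out = image.copy()
    r = rng.random(image.shape[:2])
    out[r < amount / 2] = 0
    out[r > 1 - amount / 2] = 255
    return out

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)  # stand-in for a product image
augmented = [
    random_perspective(image, 3.0, rng),
    scale_channels(image, [1.1, 0.9, 1.0]),
    add_gaussian_noise(image, 10.0, rng),
    add_salt_and_pepper(image, 0.05, rng),
]
```

In practice, a library such as imgaug [47] provides tested versions of these operations; the sketch only shows what each transformation does to the pixel data.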

3.1.3. Model Selection and Training: Classifier versus Detector

3.2. Model Applying Stage

3.2.1. Connecting Deep Learning Models to Existing Systems

- Reducing false-positive rates of the existing system;

- Improving true-positive rates of the existing system;

- Replacing the existing system with deep learning models.
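One plausible reading of these three connection goals is as three rules for combining the verdict of the existing machine vision with the verdict of the deep learning model. The sketch below is a hypothetical illustration, not the authors' system: the names `Mode` and `combine_results` are invented here, and it assumes that "positive" means a defective (NG) verdict.

```python
from enum import Enum

class Mode(Enum):
    REDUCE_FALSE_POSITIVES = "reduce_fp"   # deep model re-checks products the existing system rejected
    IMPROVE_TRUE_POSITIVES = "improve_tp"  # deep model re-checks products the existing system passed
    REPLACE = "replace"                    # deep model decides alone

def combine_results(mode, machine_vision_ng, deep_model_ng):
    """Return True if the final test result is NG (defective), False if OK."""
    if mode is Mode.REDUCE_FALSE_POSITIVES:
        # NG only when both agree, so a false alarm from machine vision can be overruled.
        return machine_vision_ng and deep_model_ng
    if mode is Mode.IMPROVE_TRUE_POSITIVES:
        # NG when either says NG, so a defect missed by machine vision can still be caught.
        return machine_vision_ng or deep_model_ng
    return deep_model_ng

# Machine vision raises an alarm, the deep model disagrees: the product passes.
final_is_ng = combine_results(Mode.REDUCE_FALSE_POSITIVES, True, False)
```

Under this assumption, the first two modes keep the existing system in the loop and only change which of its verdicts are double-checked, while the third bypasses it entirely.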

3.2.2. Model Expansion

3.3. Model Managing Stage

3.3.1. Explainable System: Grad-Cam
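
As a reminder of what Grad-CAM [7] computes, the following NumPy sketch builds the class activation map from the feature maps of a convolutional layer and the gradients of the class score with respect to them: each feature map is weighted by its spatially averaged gradient, the weighted maps are summed, and a ReLU keeps only the regions that support the class. The function name and the final scaling to [0, 1] (a common display convention) are assumptions for illustration; in a real system, the activations and gradients would come from the trained network.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Compute a Grad-CAM map.

    activations: array (K, H, W), feature maps A^k of the chosen layer.
    gradients:   array (K, H, W), gradients of the class score w.r.t. A^k.
    """
    weights = gradients.mean(axis=(1, 2))              # one weight per feature map
    cam = np.tensordot(weights, activations, axes=1)   # weighted sum over maps -> (H, W)
    cam = np.maximum(cam, 0.0)                         # ReLU
    peak = cam.max()
    return cam / peak if peak > 0 else cam             # scale to [0, 1] for display

# Toy example with two 2x2 feature maps.
activations = np.array([[[1.0, 0.0], [0.0, 0.0]],
                        [[0.0, 0.0], [0.0, 1.0]]])
gradients = np.stack([np.ones((2, 2)), -np.ones((2, 2))])
heatmap = grad_cam(activations, gradients)
```

In the toy example, the second feature map has a negative weight, so the region it activates is suppressed by the ReLU and only the top-left position remains highlighted.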

3.3.2. Model Update System

4. Datasets

- The Cylinder bonding dataset contains images of cylinders with bond applied. As shown in Figure 7, the bond should be evenly applied inside the cylinders; otherwise, the bonding between the parts will not work properly. If the bond is broken or lumped together, we consider the cylinder defective. We collected 651 non-defective and 651 defective images.

- The Navigation icon dataset is a set of icon images in the car navigation software. It includes 20 classes of icons. The size of each icon was normalized to pixels. This dataset is insufficient for training deep learning models. In Section 5.1, we present some classification results using the Navigation icon dataset.

5. Experimental Results

5.1. Data Augmentation

5.2. Defect Classification Results

5.2.1. PCB Parts Dataset

5.2.2. Cylinder Bonding Dataset

5.3. User Systems

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Product Manufacturing Systems

References

- Putera, S.I.; Ibrahim, Z. Printed circuit board defect detection using mathematical morphology and MATLAB image processing tools. In Proceedings of the 2010 2nd International Conference on Education Technology and Computer, Shanghai, China, 22–24 June 2010.

- Dave, N.; Tambade, V.; Pandhare, B.; Saurav, S. PCB defect detection using image processing and embedded system. Int. Res. J. Eng. Technol. (IRJET) 2016, 3, 1897–1901.

- Wei, P.; Liu, C.; Liu, M.; Gao, Y.; Liu, H. CNN-based reference comparison method for classifying bare PCB defects. J. Eng. 2018, 2018, 1528–1533.

- Jing, J.; Dong, A.; Li, P.; Zhang, K. Yarn-dyed fabric defect classification based on convolutional neural network. Opt. Eng. 2017, 56, 093104.

- Li, J.; Su, Z.; Geng, J.; Yin, Y. Real-time detection of steel strip surface defects based on improved yolo detection network. IFAC-PapersOnLine 2018, 51, 76–81.

- Li, X.; Zhou, Y.; Chen, H. Rail surface defect detection based on deep learning. In Proceedings of the Eleventh International Conference on Graphics and Image Processing (ICGIP 2019), Hangzhou, China, 12–14 October 2019.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Soini, A. Machine vision technology take-up in industrial applications. In Proceedings of the 2nd International Symposium on Image and Signal Processing and Analysis (ISPA 2001), Pula, Croatia, 19–21 June 2001; pp. 332–338.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Iglesias, C.; Martínez, J.; Taboada, J. Automated vision system for quality inspection of slate slabs. Comput. Ind. 2018, 99, 119–129.

- Zhang, X.; Zhang, J.; Ma, M.; Chen, Z.; Yue, S.; He, T.; Xu, X. A high precision quality inspection system for steel bars based on machine vision. Sensors 2018, 18, 2732.

- Chang, P.C.; Chen, L.Y.; Fan, C.Y. A case-based evolutionary model for defect classification of printed circuit board images. J. Intell. Manuf. 2008, 19, 203–214.

- Chaudhary, V.; Dave, I.R.; Upla, K.P. Automatic visual inspection of printed circuit board for defect detection and classification. In Proceedings of the 2017 International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), Chennai, India, 22–24 March 2017; pp. 732–737.

- Schmitt, J.; Bönig, J.; Borggräfe, T.; Beitinger, G.; Deuse, J. Predictive model-based quality inspection using Machine Learning and Edge Cloud Computing. Adv. Eng. Inform. 2020, 45, 101101.

- Benbarrad, T.; Salhaoui, M.; Kenitar, S.B.; Arioua, M. Intelligent Machine Vision Model for Defective Product Inspection Based on Machine Learning. J. Sens. Actuator Netw. 2021, 10.

- Yang, Y.; Pan, L.; Ma, J.; Yang, R.; Zhu, Y.; Yang, Y.; Zhang, L. A High-Performance Deep Learning Algorithm for the Automated Optical Inspection of Laser Welding. Appl. Sci. 2020, 10, 933.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Guan, S.; Lei, M.; Lu, H. A steel surface defect recognition algorithm based on improved deep learning network model using feature visualization and quality evaluation. IEEE Access 2020, 8, 49885–49895.

- Hao, R.; Lu, B.; Cheng, Y.; Li, X.; Huang, B. A steel surface defect inspection approach towards smart industrial monitoring. J. Intell. Manuf. 2020, 1–11.

- Wang, X.; Gao, Y., II; Dong, J.; Qin, X.; Qi, L.; Ma, H.; Liu, J. Surface defects detection of paper dish based on Mask R-CNN. In Proceedings of the Third International Workshop on Pattern Recognition, Beijing, China, 26–28 May 2018.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Yun, J.P.; Shin, W.C.; Koo, G.; Kim, M.S.; Lee, C.; Lee, S.J. Automated defect inspection system for metal surfaces based on deep learning and data augmentation. J. Manuf. Syst. 2020, 55, 317–324.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Dimitriou, N.; Leontaris, L.; Vafeiadis, T.; Ioannidis, D.; Wotherspoon, T.; Tinker, G.; Tzovaras, D. Fault diagnosis in microelectronics attachment via deep learning analysis of 3-D laser scans. IEEE Trans. Ind. Electron. 2019, 67, 5748–5757.

- Block, S.B.; da Silva, R.D.; Dorini, L.B.; Minetto, R. Inspection of Imprint Defects in Stamped Metal Surfaces Using Deep Learning and Tracking. IEEE Trans. Ind. Electron. 2021, 68, 4498–4507.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Kotsiopoulos, T.; Leontaris, L.; Dimitriou, N.; Ioannidis, D.; Oliveira, F.; Sacramento, J.; Amanatiadis, S.; Karagiannis, G.; Votis, K.; Tzovaras, D.; et al. Deep multi-sensorial data analysis for production monitoring in hard metal industry. Int. J. Adv. Manuf. Technol. 2020, 14.

- Song, K.; Yan, Y. A noise robust method based on completed local binary patterns for hot-rolled steel strip surface defects. Appl. Surf. Sci. 2013, 285, 858–864.

- Tabernik, D.; Šela, S.; Skvarč, J.; Skočaj, D. Segmentation-based deep-learning approach for surface-defect detection. J. Intell. Manuf. 2020, 31, 759–776.

- Bao, Y.; Song, K.; Liu, J.; Wang, Y.; Yan, Y.; Yu, H.; Li, X. Triplet-Graph Reasoning Network for Few-shot Metal Generic Surface Defect Segmentation. IEEE Trans. Instrum. Meas. 2021.

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 1–48.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. arXiv 2014, arXiv:1406.2661.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive growing of gans for improved quality, stability, and variation. arXiv 2017, arXiv:1710.10196.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Ben-David, S.; Blitzer, J.; Crammer, K.; Pereira, F. Analysis of representations for domain adaptation. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 3–6 December 2007; pp. 137–144.

- Kim, T.H.; Cho, Y.J.; Kim, H.R. A PCB Inspection with Semi-Supervised ADDA Networks. KIISE Trans. Comput. Pract. 2020, 26, 150–155.

- French, R.M. Catastrophic forgetting in connectionist networks. Trends Cogn. Sci. 1999, 3, 128–135.

- Tong, S.; Chang, E. Support vector machine active learning for image retrieval. In Proceedings of the Ninth ACM International Conference on Multimedia, Ottawa, ON, Canada, 30 September–5 October 2001; pp. 107–118.

- Jung, A.B.; Wada, K.; Crall, J.; Tanaka, S.; Graving, J.; Reinders, C.; Yadav, S.; Banerjee, J.; Vecsei, G.; Kraft, A.; et al. Imgaug. 2020. Available online: https://github.com/aleju/imgaug (accessed on 1 February 2020).

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Haniff, H.; Sulaiman, M.; Shah, H.; Teck, L. Shape-based matching: Defect inspection of glue process in vision system. In Proceedings of the 2011 IEEE Symposium on Industrial Electronics and Applications, Langkawi, Malaysia, 25–28 September 2011; pp. 53–57.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Kletti, J. Manufacturing Execution System-MES; Springer: Berlin/Heidelberg, Germany, 2007.

- Reis, R.A.; Webb John, W. Programmable Logic Controllers: Principles and Applications; Prentice Hall: Hoboken, NJ, USA, 1998; Volume 4.

| Category | Method |
|---|---|
| Image transformation | Projective transformation |
| | Color transformation |
| | Noise addition |
| Image generation | Generative Adversarial Network [36] |

| | Defect Classifiers | Defect Detectors |
|---|---|---|
| Type of defect | Overall and various types of defects | Local and similar forms |
| Output | Predict a class (OK/NG) of the image | Detect defects in the images |
| References | ResNet [39], GoogleNet [40], VggNet [18], and AlexNet [17] | Yolo v3 [22], Yolo v4 [23], and EfficientDet [41] |

| Terminology | Description |
|---|---|
| Shooting trigger | Requesting the machine vision to start the inspection |
| Image sending | Sending images taken by machine vision to edge server |
| Machine vision prediction | Product inspection by machine vision |
| Deep model prediction | Product inspection by deep learning model |
| Test result | Inspection result of whether the product is non-defective (OK) or defective (NG) |

| Parts Type | # of Defective | # of Non-Defective |
|---|---|---|
| Soldered pin | 1000 | 1000 |
| MCU | 2200 | 2200 |

| Number of Classes | Number of Images | Accuracy |
|---|---|---|
| 20 | 272 (Original data) | 0.632 |
| 20 | 2720 (Original data + augmented data) | 0.854 |

| Methods | Xception [48] | ResNet-50 [39] | vgg16 [18] | vgg19 [18] | GoogleNet [40] |
|---|---|---|---|---|---|
| Accuracy | 0.954 | 0.985 | 0.954 | 0.972 | 0.963 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, T.-H.; Kim, H.-R.; Cho, Y.-J. Product Inspection Methodology via Deep Learning: An Overview. Sensors 2021, 21, 5039. https://0-doi-org.brum.beds.ac.uk/10.3390/s21155039