Dynamic Pricing Based on Demand Response Using Actor–Critic Agent Reinforcement Learning

Abstract

1. Introduction

1.1. Motivation and Background

1.2. Contributions and Organization

1.3. Literature Review

2. Materials and Methods

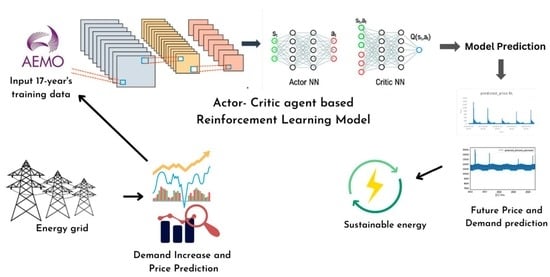

2.1. Proposed Dynamic-Pricing-Based Demand Response Approach

2.1.1. System Model

2.1.2. Actor–Critic Agent RL for Demand Response

3. Model and Simulation

3.1. Data Collection

3.2. Simulation Model

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Vardakas, J.S.; Zorba, N.; Verikoukis, C.V. A survey on demand response programs in smart grids: Pricing methods and optimization algorithms. IEEE Commun. Surv. Tutor. 2014, 17, 152–178.

2. Nolan, S.; O’Malley, M. Challenges and barriers to demand response deployment and evaluation. Appl. Energy 2015, 152, 1–10.

3. Qdr, Q. Benefits of Demand Response in Electricity Markets and Recommendations for Achieving Them; U.S. Department of Energy: Washington, DC, USA, 2006.

4. Shen, B.; Ghatikar, G.; Lei, Z.; Li, J.; Wikler, G.; Martin, P. The role of regulatory reforms, market changes, and technology development to make demand response a viable resource in meeting energy challenges. Appl. Energy 2014, 130, 814–823.

5. Siano, P. Demand response and smart grids—A survey. Renew. Sustain. Energy Rev. 2014, 30, 461–478.

6. Faria, P.; Vale, Z. Demand response in electrical energy supply: An optimal real time pricing approach. Energy 2011, 36, 5374–5384.

7. Yi, P.; Dong, X.; Iwayemi, A.; Zhou, C.; Li, S. Real-time opportunistic scheduling for residential demand response. IEEE Trans. Smart Grid 2013, 4, 227–234.

8. McKenna, K.; Keane, A. Residential load modeling of price-based demand response for network impact studies. IEEE Trans. Smart Grid 2015, 7, 2285–2294.

9. Aghaei, J.; Alizadeh, M.-I.; Siano, P.; Heidari, A. Contribution of emergency demand response programs in power system reliability. Energy 2016, 103, 688–696.

10. Aghaei, J.; Alizadeh, M.I. Critical peak pricing with load control demand response program in unit commitment problem. IET Gener. Transm. Distrib. 2013, 7, 681–690.

11. Borenstein, S.; Jaske, M.; Rosenfeld, A. Dynamic Pricing, Advanced Metering, and Demand Response in Electricity Markets; Center for the Study of Energy Markets: Berkeley, CA, USA, 2002.

12. Weisbrod, G.; Ford, E. Market segmentation and targeting for real time pricing. In Proceedings of the 1996 EPRI Conferences on Innovative Approaches to Electricity Pricing, La Jolla, CA, USA, 27–29 March 1996; pp. 14–111.

13. Vahedipour-Dahraie, M.; Najafi, H.R.; Anvari-Moghaddam, A.; Guerrero, J.M. Study of the effect of time-based rate demand response programs on stochastic day-ahead energy and reserve scheduling in islanded residential microgrids. Appl. Sci. 2017, 7, 378.

14. Nogales, F.J.; Contreras, J.; Conejo, A.J.; Espínola, R. Forecasting next-day electricity prices by time series models. IEEE Trans. Power Syst. 2002, 17, 342–348.

15. Contreras, J.; Espinola, R.; Nogales, F.J.; Conejo, A.J. ARIMA models to predict next-day electricity prices. IEEE Trans. Power Syst. 2003, 18, 1014–1020.

16. Mandal, P.; Senjyu, T.; Urasaki, N.; Funabashi, T.; Srivastava, A.K. Electricity price forecasting for PJM Day-ahead market. In Proceedings of the 2006 IEEE PES Power Systems Conference and Exposition, Atlanta, GA, USA, 29 October–1 November 2006; pp. 1321–1326.

17. Catalão, J.; Mariano, S.; Mendes, V.; Ferreira, L. Application of neural networks on next-day electricity prices forecasting. In Proceedings of the 41st International Universities Power Engineering Conference, Newcastle upon Tyne, UK, 6–8 September 2006; pp. 1072–1076.

18. Taylor, J.W. Short-term electricity demand forecasting using double seasonal exponential smoothing. J. Oper. Res. Soc. 2003, 54, 799–805.

19. Taylor, J.W. Triple seasonal methods for short-term electricity demand forecasting. Eur. J. Oper. Res. 2010, 204, 139–152.

20. Wang, J.; Zhu, W.; Zhang, W.; Sun, D. A trend fixed on firstly and seasonal adjustment model combined with the ε-SVR for short-term forecasting of electricity demand. Energy Policy 2009, 37, 4901–4909.

21. Mirasgedis, S.; Sarafidis, Y.; Georgopoulou, E.; Lalas, D.; Moschovits, M.; Karagiannis, F.; Papakonstantinou, D. Models for mid-term electricity demand forecasting incorporating weather influences. Energy 2006, 31, 208–227.

22. Zhou, P.; Ang, B.; Poh, K.L. A trigonometric grey prediction approach to forecasting electricity demand. Energy 2006, 31, 2839–2847.

23. Akay, D.; Atak, M. Grey prediction with rolling mechanism for electricity demand forecasting of Turkey. Energy 2007, 32, 1670–1675.

24. Hyndman, R.J.; Fan, S. Density forecasting for long-term peak electricity demand. IEEE Trans. Power Syst. 2009, 25, 1142–1153.

25. McSharry, P.E.; Bouwman, S.; Bloemhof, G. Probabilistic forecasts of the magnitude and timing of peak electricity demand. IEEE Trans. Power Syst. 2005, 20, 1166–1172.

26. Saravanan, S.; Kannan, S.; Thangaraj, C. India’s electricity demand forecast using regression analysis and artificial neural networks based on principal components. ICTACT J. Soft Comput. 2012, 2, 365–370.

27. Torriti, J. Price-based demand side management: Assessing the impacts of time-of-use tariffs on residential electricity demand and peak shifting in Northern Italy. Energy 2012, 44, 576–583.

28. Yang, P.; Tang, G.; Nehorai, A. A game-theoretic approach for optimal time-of-use electricity pricing. IEEE Trans. Power Syst. 2012, 28, 884–892.

29. Jessoe, K.; Rapson, D. Commercial and industrial demand response under mandatory time-of-use electricity pricing. J. Ind. Econ. 2015, 63, 397–421.

30. Jang, D.; Eom, J.; Kim, M.G.; Rho, J.J. Demand responses of Korean commercial and industrial businesses to critical peak pricing of electricity. J. Clean. Prod. 2015, 90, 275–290.

31. Zhou, Z.; Zhao, F.; Wang, J. Agent-based electricity market simulation with demand response from commercial buildings. IEEE Trans. Smart Grid 2011, 2, 580–588.

32. Li, X.H.; Hong, S.H. User-expected price-based demand response algorithm for a home-to-grid system. Energy 2014, 64, 437–449.

33. Gao, D.-C.; Sun, Y.; Lu, Y. A robust demand response control of commercial buildings for smart grid under load prediction uncertainty. Energy 2015, 93, 275–283.

34. Ding, Y.M.; Hong, S.H.; Li, X.H. A demand response energy management scheme for industrial facilities in smart grid. IEEE Trans. Ind. Inform. 2014, 10, 2257–2269.

35. Luo, Z.; Hong, S.-H.; Kim, J.-B. A price-based demand response scheme for discrete manufacturing in smart grids. Energies 2016, 9, 650.

36. Vanthournout, K.; Dupont, B.; Foubert, W.; Stuckens, C.; Claessens, S. An automated residential demand response pilot experiment, based on day-ahead dynamic pricing. Appl. Energy 2015, 155, 195–203.

37. Li, Y.-C.; Hong, S.H. Real-time demand bidding for energy management in discrete manufacturing facilities. IEEE Trans. Ind. Electron. 2016, 64, 739–749.

38. Yu, M.; Lu, R.; Hong, S.H. A real-time decision model for industrial load management in a smart grid. Appl. Energy 2016, 183, 1488–1497.

39. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602.

40. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

41. Wen, Z.; O’Neill, D.; Maei, H. Optimal demand response using device-based reinforcement learning. IEEE Trans. Smart Grid 2015, 6, 2312–2324.

42. Ruelens, F.; Claessens, B.J.; Vandael, S.; Iacovella, S.; Vingerhoets, P.; Belmans, R. Demand response of a heterogeneous cluster of electric water heaters using batch reinforcement learning. In Proceedings of the 2014 Power Systems Computation Conference, Wroclaw, Poland, 18–22 August 2014; pp. 1–7.

43. Ruelens, F.; Claessens, B.J.; Quaiyum, S.; De Schutter, B.; Babuška, R.; Belmans, R. Reinforcement learning applied to an electric water heater: From theory to practice. IEEE Trans. Smart Grid 2016, 9, 3792–3800.

44. Ruelens, F.; Claessens, B.J.; Vandael, S.; De Schutter, B.; Babuška, R.; Belmans, R. Residential demand response of thermostatically controlled loads using batch reinforcement learning. IEEE Trans. Smart Grid 2016, 8, 2149–2159.

45. Kofinas, P.; Vouros, G.; Dounis, A.I. Energy management in solar microgrid via reinforcement learning using fuzzy reward. Adv. Build. Energy Res. 2018, 12, 97–115.

46. Chiş, A.; Lundén, J.; Koivunen, V. Reinforcement learning-based plug-in electric vehicle charging with forecasted price. IEEE Trans. Veh. Technol. 2016, 66, 3674–3684.

47. Vandael, S.; Claessens, B.; Ernst, D.; Holvoet, T.; Deconinck, G. Reinforcement learning of heuristic EV fleet charging in a day-ahead electricity market. IEEE Trans. Smart Grid 2015, 6, 1795–1805.

48. Kuznetsova, E.; Li, Y.-F.; Ruiz, C.; Zio, E.; Ault, G.; Bell, K. Reinforcement learning for microgrid energy management. Energy 2013, 59, 133–146.

49. Xu, X.; Jia, Y.; Xu, Y.; Xu, Z.; Chai, S.; Lai, C.S. A multi-agent reinforcement learning-based data-driven method for home energy management. IEEE Trans. Smart Grid 2020, 11, 3201–3211.

50. Ji, Y.; Wang, J.; Xu, J.; Fang, X.; Zhang, H. Real-time energy management of a microgrid using deep reinforcement learning. Energies 2019, 12, 2291.

51. Lu, R.; Hong, S.H.; Zhang, X. A dynamic pricing demand response algorithm for smart grid: Reinforcement learning approach. Appl. Energy 2018, 220, 220–230.

52. Wolak, F.A. Do residential customers respond to hourly prices? Evidence from a dynamic pricing experiment. Am. Econ. Rev. 2011, 101, 83–87.

53. Ifland, M.; Exner, N.; Döring, N.; Westermann, D. Influencing domestic customers’ market behavior with time flexible tariffs. In Proceedings of the 2012 IEEE Power and Energy Society General Meeting, Berlin, Germany, 14–17 October 2012; pp. 1–7.

54. Zareipour, H.; Cañizares, C.A.; Bhattacharya, K.; Thomson, J. Application of public-domain market information to forecast Ontario’s wholesale electricity prices. IEEE Trans. Power Syst. 2006, 21, 1707–1717.

55. Khan, T.A.; Hafeez, G.; Khan, I.; Ullah, S.; Waseem, A.; Ullah, Z. Energy demand control under dynamic price-based demand response program in smart grid. In Proceedings of the 2020 International Conference on Electrical, Communication, and Computer Engineering (ICECCE), Istanbul, Turkey, 12–13 June 2020; pp. 1–6.

56. Miller, M.; Alberini, A. Sensitivity of price elasticity of demand to aggregation, unobserved heterogeneity, price trends, and price endogeneity: Evidence from US Data. Energy Policy 2016, 97, 235–249.

57. Hong, Y.; Zhou, Y.; Li, Q.; Xu, W.; Zheng, X. A deep learning method for short-term residential load forecasting in smart grid. IEEE Access 2020, 8, 55785–55797.

58. Nguyen, V.-B.; Duong, M.-T.; Le, M.-H. Electricity Demand Forecasting for Smart Grid Based on Deep Learning Approach. In Proceedings of the 2020 5th International Conference on Green Technology and Sustainable Development (GTSD), Ho Chi Minh City, Vietnam, 27–28 November 2020; pp. 353–357.

59. Jahangir, H.; Tayarani, H.; Gougheri, S.S.; Golkar, M.A.; Ahmadian, A.; Elkamel, A. Deep learning-based forecasting approach in smart grids with microclustering and bidirectional LSTM network. IEEE Trans. Ind. Electron. 2020, 68, 8298–8309.

60. Aurangzeb, K.; Alhussein, M.; Javaid, K.; Haider, S.I. A pyramid-CNN based deep learning model for power load forecasting of similar-profile energy customers based on clustering. IEEE Access 2021, 9, 14992–15003.

61. Taleb, I.; Guerard, G.; Fauberteau, F.; Nguyen, N. A Flexible Deep Learning Method for Energy Forecasting. Energies 2022, 15, 3926.

62. Souhe, F.G.Y.; Mbey, C.F.; Boum, A.T.; Ele, P.; Kakeu, V.J.F. A hybrid model for forecasting the consumption of electrical energy in a smart grid. J. Eng. 2022, 2022, 629–643.

| Reference | Model | Demand Prediction | Price Prediction | Elasticity | Data Source (Both Real Time and Historical) |
|---|---|---|---|---|---|
| [8] | Experimental Model | ✓ | ✓ | ✓ | × |
| [14] | Dynamic Regression Model | ✓ | × | × | × |
| [14] | Transfer Function Model | ✓ | × | × | × |
| [54] | ARIMA Model | ✓ | × | × | × |
| [18] | ARIMA Model | × | ✓ | × | × |
| [19] | ARIMA Model | × | ✓ | × | × |
| [20] | Support Vector Regression Model | × | ✓ | × | × |
| [21] | Autoregressive Model | ✓ | × | × | × |
| [22] | Trigonometric Gray Model | ✓ | × | × | × |
| [23] | Gray Model with Rolling Mechanism | ✓ | × | × | × |
| [24] | Semi-Parametric Model | × | ✓ | × | × |
| [26] | Linear Regression and ANNs | ✓ | × | × | × |
| [28] | Game-Theoretic Model | × | ✓ | × | × |
| [29] | Experimental Model | × | ✓ | × | × |
| [30] | Hourly Regression Model | ✓ | × | × | × |
| [33] | Reinforcement Q-Learning | ✓ | × | ✓ | × |
| [35] | Experimental Model | × | × | ✓ | × |
| [36] | Experimental Model | × | × | ✓ | × |
| [37] | Experimental Model | × | × | ✓ | × |
| [51] | Reinforcement Learning | ✓ | ✓ | ✓ | × |
| [55] | Experimental Model | ✓ | ✓ | ✓ | × |
| Proposed | Deep RL and LSTM | ✓ | ✓ | ✓ | ✓ |

| Parameter | Value |
|---|---|
| LSTM Units | 512 |
| Regularization | L2 (1 × 10−4) |
| Batch Size | 1000 |
| Activation Function | LeakyReLU |
| Optimizer | Adam |
| Learning Rate | 1 × 10−10 |
| Loss Function | Mean Squared Error (MSE) |
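The table lists hyperparameters but not the framework or the exact form of the regularized loss. The framework-free sketch below shows how the listed values could fit together, assuming the L2 term is added to the MSE as λ·Σw² with λ = 1 × 10−4, and assuming a LeakyReLU negative slope of 0.01 (the slope is not given in the table). `LSTMConfig`, `regularized_mse`, and `adam_step` are hypothetical names; `adam_step` is the standard Adam update with its usual default β1, β2, and ε, written for a single scalar parameter.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LSTMConfig:
    """Hypothetical container for the hyperparameters listed in the table."""
    lstm_units: int = 512
    l2_lambda: float = 1e-4        # L2 regularization strength
    batch_size: int = 1000
    learning_rate: float = 1e-10   # Adam learning rate as listed
    leaky_slope: float = 0.01      # ASSUMPTION: slope is not stated in the table


def leaky_relu(x: float, slope: float) -> float:
    """LeakyReLU: identity for positive inputs, slope * x otherwise."""
    return x if x > 0 else slope * x


def regularized_mse(y_true, y_pred, weights, l2_lambda):
    """MSE over the batch plus an L2 penalty l2_lambda * sum(w**2) (assumed form)."""
    n = len(y_true)
    data_term = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n
    return data_term + l2_lambda * sum(w * w for w in weights)


def adam_step(w, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One standard Adam update for a scalar parameter w at step t (t >= 1)."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias corrections
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


cfg = LSTMConfig()
loss = regularized_mse([1.0, 2.0], [1.0, 4.0], weights=[1.0, 2.0], l2_lambda=cfg.l2_lambda)
w, m, v = adam_step(0.5, grad=0.3, m=0.0, v=0.0, t=1, lr=cfg.learning_rate)
print(loss, w)
```

On the first Adam iteration the bias-corrected ratio m̂/√v̂ is close to ±1, so with the listed learning rate of 1 × 10−10 each weight moves by roughly 10−10 per step.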

| Date | Time | Actual Demand | Predicted Demand | Difference (∆Demand) | Mean Squared Error (MSE) |
|---|---|---|---|---|---|
| 1 May 2016 | 00:00–23:30 | 7462.67 | 7506.12 | 0.58% | - |
| 1 June 2016 | 00:00–23:30 | 7440.01 | 7493.94 | 0.72% | - |
| 1 July 2016 | 00:00–23:30 | 7325.68 | 7384.83 | 0.80% | - |
| 1 August 2016 | 00:00–23:30 | 7413.97 | 7463.65 | 0.67% | - |
| 1 September 2016 | 00:00–23:30 | 7163.77 | 7216.83 | 0.74% | - |
| 1 October 2016 | 00:00–23:30 | 7354.75 | 7370.56 | 0.21% | - |
| Average | | 7360.14 | 7405.98 | 0.62% | 9.07 |
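The table does not define ∆Demand. Its values are consistent, to within about 0.01 percentage points, with the absolute prediction error relative to the actual value, |predicted − actual| / actual × 100, which the sketch below assumes. It recomputes the per-month differences and the column averages from the six rows; `pct_difference` and `summarize` are hypothetical helper names.

```python
def pct_difference(actual: float, predicted: float) -> float:
    """Absolute prediction error relative to the actual value, in percent (assumed definition)."""
    return abs(predicted - actual) / actual * 100.0


def summarize(rows):
    """rows: (label, actual, predicted) tuples.

    Returns the per-row % differences and the column averages:
    (diffs, mean_actual, mean_predicted, mean_pct_difference).
    """
    n = len(rows)
    diffs = [pct_difference(a, p) for _, a, p in rows]
    mean_actual = sum(a for _, a, _ in rows) / n
    mean_predicted = sum(p for _, _, p in rows) / n
    return diffs, mean_actual, mean_predicted, sum(diffs) / n


# Values copied from the demand table above.
demand_rows = [
    ("1 May 2016", 7462.67, 7506.12),
    ("1 June 2016", 7440.01, 7493.94),
    ("1 July 2016", 7325.68, 7384.83),
    ("1 August 2016", 7413.97, 7463.65),
    ("1 September 2016", 7163.77, 7216.83),
    ("1 October 2016", 7354.75, 7370.56),
]

diffs, mean_a, mean_p, mean_d = summarize(demand_rows)
for (label, _, _), d in zip(demand_rows, diffs):
    print(f"{label}: {d:.2f}%")
print(f"Average: actual {mean_a:.2f}, predicted {mean_p:.2f}, difference {mean_d:.2f}%")
```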

| Date | Time | Actual Price | Predicted Price | Difference (∆Price) | Mean Squared Error (MSE) |
|---|---|---|---|---|---|
| 1 March 2019 | 00:00–23:30 | 94.10 | 91.27 | 3% | - |
| 1 April 2019 | 00:00–23:30 | 104.59 | 101.46 | 2.99% | - |
| 1 May 2019 | 00:00–23:30 | 93.21 | 89.71 | 3.75% | - |
| 1 June 2019 | 00:00–23:30 | 64.82 | 61.39 | 5.29% | - |
| 1 July 2019 | 00:00–23:30 | 70.32 | 68.70 | 2.30% | - |
| 1 August 2019 | 00:00–23:30 | 91.48 | 88.32 | 3.45% | - |
| Average | | 86.42 | 83.48 | 3.4% | 2304.4/9.79% |
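The MSE column is not explained in this section, and it is not stated whether the MSE was computed over the six monthly rows or over the underlying half-hourly intervals. As an illustration only, the sketch below defines MSE and its square root (RMSE, which is in the same units as the price) and applies them to the six monthly rows of the price table; `mse` and `rmse` are hypothetical helper names.

```python
import math


def mse(actual, predicted):
    """Mean squared error between two equal-length, non-empty sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("sequences must be non-empty and of equal length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error: same units as the inputs."""
    return math.sqrt(mse(actual, predicted))


# Monthly values from the price table (1 March to 1 August 2019).
actual_price = [94.10, 104.59, 93.21, 64.82, 70.32, 91.48]
predicted_price = [91.27, 101.46, 89.71, 61.39, 68.70, 88.32]

print(f"MSE = {mse(actual_price, predicted_price):.2f}")
print(f"RMSE = {rmse(actual_price, predicted_price):.2f}")
```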

| Method | Ξ (01–12 a.m.) | Ξ (01–23.30 p.m.) |
|---|---|---|
| Proposed RL | −1.574 | −0.2422 |
| LSTM | −1.405 | −0.7441 |
| Miller et al. [56] | −0.300 | −0.550 |
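This section does not describe how the Ξ values were estimated. Two standard ways to estimate a price elasticity of demand from observed (price, demand) pairs are sketched below: the midpoint (arc) elasticity between two observations, and the slope of a log-log least-squares fit, which assumes a constant elasticity over the sample. Treating Ξ as a price elasticity, and either estimator as the one the authors used, are assumptions; `arc_elasticity` and `loglog_elasticity` are hypothetical names, and the example data are synthetic.

```python
import math


def arc_elasticity(p1, d1, p2, d2):
    """Midpoint (arc) price elasticity of demand between two observations."""
    pct_change_demand = (d2 - d1) / ((d1 + d2) / 2)
    pct_change_price = (p2 - p1) / ((p1 + p2) / 2)
    return pct_change_demand / pct_change_price


def loglog_elasticity(prices, demands):
    """OLS slope of ln(demand) on ln(price), i.e. a constant-elasticity estimate."""
    xs = [math.log(p) for p in prices]
    ys = [math.log(d) for d in demands]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


# Synthetic example: demand generated with a true constant elasticity of -0.5.
prices = [40.0, 60.0, 80.0, 100.0, 120.0]
demands = [7000.0 * (p / 40.0) ** -0.5 for p in prices]

print(round(loglog_elasticity(prices, demands), 3))
print(round(arc_elasticity(prices[0], demands[0], prices[1], demands[1]), 3))
```

On data generated with a constant elasticity, the log-log slope recovers −0.5 exactly, while the arc estimate over a 50% price step is only approximately −0.5, because the midpoint formula approximates the curve over a finite interval.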

| Off-Peak | Mid-Peak | On-Peak |
|---|---|---|
| (1–12 a.m.) | (13–16 p.m., 22–24 p.m.) | (17–21 p.m.) |
| −0.3 | −0.5 | −0.7 |
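The table above gives time-of-use period boundaries and one value per period. A minimal sketch of how such a table could drive a demand-response calculation follows. It assumes (a) hours are numbered 1 to 24 with inclusive ranges, (b) the third row holds per-period price elasticities, and (c) demand responds with a constant-elasticity rule D′ = D·(P′/P)^ε. None of these three points is stated in this section, and `tou_period` and `adjusted_demand` are hypothetical names.

```python
# ASSUMPTION: the third table row gives the price elasticity of each period.
PERIOD_ELASTICITY = {"off-peak": -0.3, "mid-peak": -0.5, "on-peak": -0.7}


def tou_period(hour: int) -> str:
    """Map an hour numbered 1..24 to its time-of-use period (inclusive ranges)."""
    if not 1 <= hour <= 24:
        raise ValueError("hour must be in 1..24")
    if hour <= 12:
        return "off-peak"        # 1-12
    if 17 <= hour <= 21:
        return "on-peak"         # 17-21
    return "mid-peak"            # 13-16 and 22-24


def adjusted_demand(base_demand: float, base_price: float, new_price: float, hour: int) -> float:
    """Constant-elasticity response (assumed): D' = D * (P'/P) ** elasticity."""
    elasticity = PERIOD_ELASTICITY[tou_period(hour)]
    return base_demand * (new_price / base_price) ** elasticity


# Hypothetical example: a 20% price increase at hour 3 (off-peak) and hour 18 (on-peak).
for hour in (3, 18):
    print(hour, tou_period(hour), round(adjusted_demand(100.0, 50.0, 60.0, hour), 2))
```

Under these assumptions, the same 20% price increase reduces on-peak demand more than off-peak demand, because the on-peak elasticity has the larger magnitude.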

| Reference | Year | Models | Dataset | MAPE (%) |
|---|---|---|---|---|
| [22] | 2006 | Trigonometric Gray Model | Electricity demand data from 1981 to 2002 collected from the China Statistical Yearbook | 2.37 |
| [28] | 2012 | Support Vector Regression Model | Real electricity demand data from January 2004 to May 2008 | 3.799 |
| [30] | 2015 | Linear Regression and ANNs | Electricity consumption data of India | 0.430 |
| [57] | 2020 | Iterative-Resblock-Based Deep Neural Network (IRBDNN) | Household Appliance Consumption Dataset from March 2011 to July 2011, obtained from REDD | 0.6159 |
| [58] | 2020 | LSTM | Power consumption data from 2012 to 2017 obtained from Vietnam | |
| [59] | 2021 | B-LSTM | Three years of wind speed, load demand, and hourly electricity price data for Ontario | 18.6 (electricity price); 3.17 (load demand) |
| [60] | 2021 | Pyramid CNN | Australian Government’s Smart Grid Smart City (SGSC) project database, initiated in 2010, covering thousands of individual household energy customers with a hot water system installed | 39 |
| [61] | 2022 | CNN + LSTM + MLP | Open-access data from EDM (Electricity Demand of Mayotte) | 1.71 (30 min); 3.5 (1 day); 5.1 (1 week) |
| [62] | 2022 | Support Vector Regression (SVR) + Firefly Algorithm (FA) + Adaptive Neuro-Fuzzy Inference System (ANFIS) | Twenty-four years of smart meter consumption data (1994–2017), obtained from the World Bank, the Electricity Sector Regulatory Agency of Cameroon, and the Electricity Distribution Agency | 0.4124 |
| Proposed Model | 2023 | LSTM and RL | 17 years, AEMO dataset | 0.1548 (price); 0.0124 (demand) |
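The comparison above ranks models by MAPE. For reference, a minimal sketch of the standard definition, MAPE = (100/n)·Σ|(yᵢ − ŷᵢ)/yᵢ|, follows; `mape` is a hypothetical helper name, and the sketch makes no claim about how the individual studies in the table aggregated their errors.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Undefined when any actual value is zero, so that case raises an error.
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("sequences must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    total = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)


# Hypothetical values: relative errors of 10% and 5%.
print(round(mape([100.0, 200.0], [110.0, 190.0]), 4))
```

MAPE is scale-free, which is why it is often used to compare models evaluated on different datasets; it becomes unstable when actual values are close to zero.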


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ismail, A.; Baysal, M. Dynamic Pricing Based on Demand Response Using Actor–Critic Agent Reinforcement Learning. Energies 2023, 16, 5469. https://doi.org/10.3390/en16145469
