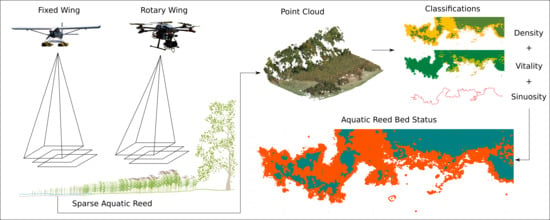

Quantification of Extent, Density, and Status of Aquatic Reed Beds Using Point Clouds Derived from UAV–RGB Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Description of UAV Point Clouds

2.3. Reference and Validation Data

2.4. Classification of Reed Extent and Density in UAV Point Clouds

2.5. Estimation of Vegetation Status

2.6. Validation of Classification Results

2.6.1. Reed Bed Extent Quantification and Frontline Assessment

2.6.2. Accuracy Assessment of Density and Vegetation Status Classification

3. Results

3.1. Point Cloud Classification

3.2. Extent Quantification and Frontline Assessment

3.3. Accuracy Assessments of Density and Status of Aquatic Reeds

4. Discussion

4.1. Frontline Allocation and Extent Quantification

4.2. Density and Status Assessment of Aquatic Reed Beds

5. Conclusion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Platform | Point Density [points/m²] | Flying Altitude [m] | Ground Resolution [cm/pixel] | Tie Points | Projections | Reprojection Error [pixel] |
|---|---|---|---|---|---|---|
| Rotary-wing | 1230 | 46 | 2.1 | 328,377 | 781,900 | 0.28 |
| Fixed-wing | 2260 | 146 | 2.9 | 97,842 | 210,185 | 0.33 |

| Category | General Description |
|---|---|
| Stressed Reed | Sparse, parallel stripes along the reed bed edge (caused by floods, wind storms, or driftwood accumulation); lanes or aisles perpendicular to the shore (for docks, boat traffic, bathing, or fish traps); dissolution of reed beds through decreasing stem density; frayed, ripped, unzoned reed edges and single clumps (through erosion or flooding); and seaward stubble fields of former reed beds. |
| Unstressed Reed | A closed, evenly growing stand. The seaward stand limit is evenly curved and uninterrupted, with a gradual decline in stand density and mean stem height. The reed forms stands over large areas, without gaps in the interior. |

| Classified data | Sparse reed (reference) | Dense reed (reference) | Water (reference) | Totals | User’s accuracy (%) |
|---|---|---|---|---|---|
| Sparse reed | 23 | 3 | 0 | 26 | 88.46 |
| Dense reed | 0 | 4 | 0 | 4 | 100.00 |
| Water | 3 | 0 | 0 | 3 | 0.00 |
| Totals | 26 | 7 | 0 | 33 | |
| Producer’s accuracy (%) | 88.46 | 57.14 | 0.00 | | |
| Total accuracy: | 81.82% | Kappa statistic: | 0.48 | | |

| Classified data | Sparse reed (reference) | Dense reed (reference) | Water (reference) | Totals | User’s accuracy (%) |
|---|---|---|---|---|---|
| Sparse reed | 219 | 19 | 10 | 248 | 88.31 |
| Dense reed | 9 | 41 | 0 | 50 | 82.00 |
| Water | 40 | 0 | 344 | 384 | 89.58 |
| Totals | 268 | 60 | 354 | 682 | |
| Producer’s accuracy (%) | 81.72 | 68.33 | 97.18 | | |
| Total accuracy: | 88.56% | Kappa statistic: | 0.795 | | |
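The accuracy figures in the table above follow from the raw counts by standard confusion-matrix arithmetic. The sketch below is illustrative only and is not the authors' code: the function name `accuracy_report` and the variable `density_matrix` are hypothetical, and the kappa formula is the usual Cohen's kappa, assumed here to be the statistic reported. Rows hold classified data and columns hold reference data, as in the table.

```python
def accuracy_report(matrix):
    """Summarise a square confusion matrix (rows: classified, columns: reference)."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[i][i] for i in range(n)]

    # User's accuracy: correct points divided by all points assigned to the class.
    users = [d / r if r else 0.0 for d, r in zip(diagonal, row_totals)]
    # Producer's accuracy: correct points divided by all reference points of the class.
    producers = [d / c if c else 0.0 for d, c in zip(diagonal, col_totals)]

    overall = sum(diagonal) / total
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (overall - expected) / (1 - expected)
    return {"users": users, "producers": producers,
            "overall": overall, "kappa": kappa}


# Counts copied from the table above (class order: sparse reed, dense reed, water).
density_matrix = [
    [219, 19, 10],
    [9, 41, 0],
    [40, 0, 344],
]

report = accuracy_report(density_matrix)
print(f"Total accuracy: {report['overall'] * 100:.2f}%")  # 88.56%
print(f"Kappa statistic: {report['kappa']:.3f}")          # 0.795
```

Classes with an empty row or column are given an accuracy of 0.0 rather than raising a division error, which matches how the water class with no reference points is reported in the smaller matrix.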

| Classified data | Stressed reed (reference) | Unstressed reed (reference) | Water (reference) | Totals | User’s accuracy (%) |
|---|---|---|---|---|---|
| Stressed reed | 129 | 8 | 14 | 151 | 85.43 |
| Unstressed reed | 2 | 18 | 0 | 20 | 90.00 |
| Water | 40 | 0 | 173 | 213 | 81.22 |
| Totals | 171 | 26 | 187 | 384 | |
| Producer’s accuracy (%) | 75.44 | 69.23 | 92.51 | | |
| Total accuracy: | 83.33% | Kappa statistic: | 0.691 | | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Corti Meneses, N.; Brunner, F.; Baier, S.; Geist, J.; Schneider, T. Quantification of Extent, Density, and Status of Aquatic Reed Beds Using Point Clouds Derived from UAV–RGB Imagery. Remote Sens. 2018, 10, 1869. https://0-doi-org.brum.beds.ac.uk/10.3390/rs10121869