Extraction of Buildings from Multiple-View Aerial Images Using a Feature-Level-Fusion Strategy

Abstract

1. Introduction

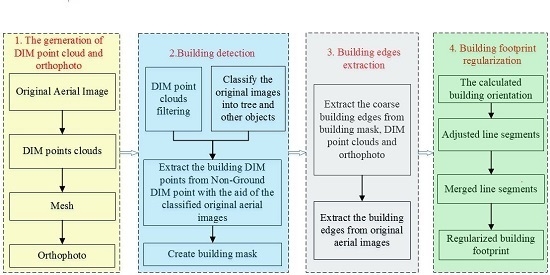

- Filter the DIM point clouds to get the non-ground points.

- Apply the object-oriented classification method to detect vegetation with the aid of the features from the original aerial images.

- Use back-projection to remove the tree points from the non-ground points according to the classified original aerial images, which yields the building DIM points.

- Create the building mask using the building DIM points.

- Extract the coarse building edges from the DIM points, orthophoto and the blob of the individual building.

- Extract the building edge from the original aerial images and match these straight-line segments with the help of the coarse building edges.

- Buildings are detected from aerial images alone by combining the features from the original aerial images and the DIM point clouds.

- A new straight-line segment matching strategy based on three images is proposed with the help of the coarse building edge from the DIM points, orthophoto and the blob of the individual building.

- In the regularization stage, a new strategy is proposed for the generation of a building footprint.

2. Our Proposed Method

2.1. Generation of DIM Point Clouds and Orthophoto from Original Aerial Images

- Add the original images, the positioning and orientation system (POS) data and the camera calibration parameters into this software.

- Align photos. In this step, point features are detected and matched, and the accurate camera location of each aerial image is estimated.

- Build the dense point clouds. According to a previous report [21], a stereo semi-global-matching-like (SGM-like) method is used to generate the DIM point clouds.

- Use the DIM points to generate a mesh. In essence, the mesh is the digital surface model (DSM) of the survey area.

- Build the orthophoto using the generated mesh and the original aerial images.

2.2. Building Detection from DIM Point Clouds with the Aid of the Original Aerial Image

2.2.1. Filtering of DIM Point Clouds

2.2.2. Object-Oriented Classification of Original Aerial Images

- Multi-resolution segmentation of the aerial image. This technique is used to extract reasonable image objects. In the segmentation stage, several parameters, such as layer weight, compactness, shape, and scale, must be determined in advance. These algorithms and related parameters are described in detail in [47]. Generally, the parameters are determined through visual assessment and trial and error. We set the scale factor to 90 and the weights of the red, green, blue and straight-line layers to 1, 1, 1, and 2, respectively. The shape and compactness parameters are 0.3 and 0.7, respectively.

- Feature selection. The normalized difference vegetation index (NDVI) has been used extensively to detect vegetation [48]. However, relying on the NDVI alone to detect vegetation is not accurate because of the influence of shadows and colored buildings in aerial images [16]. Therefore, besides the NDVI, texture information in the form of entropy [49] is also used, based on the observation that trees are rich in texture and have higher surface roughness than building roofs [16]. Moreover, R, G, B, and brightness are also selected as features in our proposed method. Notably, if the near-infrared band is not available, color vegetation indices can be calculated from color aerial images. In this paper, we apply the green leaf index (GLI) [50,51] in place of the NDVI. The GLI is expressed in Equation (1), $\mathrm{GLI} = \frac{2G - R - B}{2G + R + B}$, where R, G and B represent the values of the red, green and blue bands of each pixel of the original aerial image, respectively.

- Supervised classification of segments using a Random Forest (RF) [52]. The reference labels are created by an operator. The computed feature vector of each segment is fed into the RF learning scheme. To monitor the quality of learning, training and prediction are performed several times.
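As a minimal sketch of the color vegetation feature described above, the following computes the GLI per pixel, assuming the commonly used definition GLI = (2G - R - B) / (2G + R + B). The function names `green_leaf_index` and `gli_image`, and the choice to return 0 for a black pixel, are illustrative assumptions rather than part of the published method.

```python
def green_leaf_index(r, g, b):
    """Green leaf index of one pixel: (2G - R - B) / (2G + R + B)."""
    denominator = 2 * g + r + b
    if denominator == 0:
        # Black pixel: the ratio is undefined, so treat it as neutral
        # (an assumption made for this sketch).
        return 0.0
    return (2 * g - r - b) / denominator


def gli_image(rgb_rows):
    """Apply the index to an image given as rows of (R, G, B) tuples."""
    return [[green_leaf_index(r, g, b) for (r, g, b) in row] for row in rgb_rows]
```

The index lies in [-1, 1]; green-dominated pixels give positive values, while grey pixels give values near zero. In an object-oriented workflow, the per-pixel values would typically be averaged over each segment before being passed to the classifier.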

2.2.3. Removal of Tree Points from Non-Ground DIM Points

- First, define a vector L whose size equals the number of non-ground DIM points. Each element of this vector marks the category of the corresponding non-ground DIM point. Initially, every element is set to 0, which indicates that the corresponding DIM point is unclassified.

- Then, select a classified original aerial image and use it to update the elements of the vector L. This step is based on back-projection: if the projected point of a non-ground DIM point falls within a region labelled as tree in the selected image, the corresponding element of L is increased by 1; otherwise, it is decreased by 1.

- Repeat the second step until all classified original aerial images have been traversed.

- If the value of an element within the vector L is greater than 0, the corresponding non-ground DIM point is regarded as a tree point; otherwise, the DIM point is a building point.
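The voting scheme above can be sketched as follows. This is an illustrative outline only: the `project` and `is_tree` callables stand in for the back-projection and for a lookup in the classified image, and both are hypothetical helpers. Skipping points that do not project into an image is an additional assumption, since the text does not say how such points are handled.

```python
def label_non_ground_points(points, classified_images):
    """Vote over all classified images and label each point.

    `classified_images` is a list of (project, is_tree) pairs (hypothetical):
      project(point)    -> (row, col) pixel, or None if the point is not imaged
      is_tree(row, col) -> True if that pixel was classified as tree
    """
    votes = [0] * len(points)  # the vector L; 0 means unclassified
    for project, is_tree in classified_images:
        for idx, point in enumerate(points):
            pixel = project(point)
            if pixel is None:
                continue  # assumption: no vote when the point is outside the image
            votes[idx] += 1 if is_tree(*pixel) else -1
    # A positive total marks a tree point; everything else is a building point.
    return ["tree" if v > 0 else "building" for v in votes]
```

Because each image contributes one vote per point, a misclassification in a single view can be outvoted by the other views, which is the practical benefit of accumulating over all images.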

2.2.4. Extraction of Individual Building from the DIM Points

2.3. Building Edge Detection Using a Feature-Level Fusion Strategy

2.3.1. Detection of Coarse Building Edges from the DIM Points, Orthophoto and Building Mask

- Detection of building edges from DIM points. In DIM point clouds, the building façades correspond to the building edges, so extracting building edges from DIM points reduces to detecting building façades. The density of DIM points at building façades is higher than at other locations. Based on this observation, a method named the density of projected points (DoPP) [55] is used to obtain the building façades: if the number of points located in a grid cell exceeds the threshold thr, the cell is labelled 255. After these steps, the generated façade outlines still have a width of 2–3 pixels. Subsequently, a skeletonization algorithm [56] is applied to thin the façade outlines. Finally, a straight-line detector based on the Freeman chain code [57] is used to generate building edges.

- Detection of building edges from the orthophoto. The process is divided into three steps. First, a straight-line segment detector [58] is used to extract straight-line segments from the orthophoto. Second, a buffer region is defined around a specified individual building blob obtained in Section 2.2. If an extracted straight-line segment intersects the buffer region, it is considered a candidate building edge. Finally, the candidate edge is discretized into t points, of which i are located in the buffer region. The ratio i/t represents the proportion of the candidate edge falling into the buffer area. The larger this ratio, the greater the probability that the candidate edge is a building edge. In this paper, a candidate edge is considered a building edge if this ratio is greater than 0.6.

- Detection of building edges from the building blob. The boundaries of buildings are estimated using the Moore Neighborhood Tracing algorithm [59], which provides an organized list of points for an object in a binary image. To convert the raster images of individual buildings into a vector, the Douglas-Peucker algorithm [60] is used to generate the building edges.
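Two of the tests described above, the DoPP cell threshold and the buffer-ratio check for orthophoto segments, can be sketched as follows. The function names, the `cell_size` parameter, the default `t=50`, and the `in_buffer` callable are assumptions made for illustration; only the threshold thr, the label 255, and the ratio limit 0.6 come from the text.

```python
from collections import Counter


def dopp_mask(points, cell_size, thr):
    """Density of projected points: mark grid cells holding more than thr points.

    Points are (x, y, z) tuples projected onto the horizontal grid.
    Returns a dict {(col, row): 255} for the marked cells.
    """
    counts = Counter(
        (int(x // cell_size), int(y // cell_size)) for x, y, _z in points
    )
    return {cell: 255 for cell, n in counts.items() if n > thr}


def is_building_edge(p0, p1, in_buffer, t=50, min_ratio=0.6):
    """Sample t points (t >= 2) on segment p0-p1 and compare i / t with min_ratio."""
    inside = 0
    for k in range(t):
        s = k / (t - 1)
        x = p0[0] + s * (p1[0] - p0[0])
        y = p0[1] + s * (p1[1] - p0[1])
        if in_buffer(x, y):
            inside += 1
    return inside / t > min_ratio
```

For example, `is_building_edge((0, 0), (10, 0), lambda x, y: x <= 7.5, t=11)` counts 8 of 11 samples inside the buffer and accepts the segment, whereas a buffer covering less than 60% of the samples rejects it.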

2.3.2. Detection of the Building Boundary Line Segments from the Original Aerial Images by Matching Line Segments

- Obtain the associated straight-line segments from multiple original aerial images and DIM points of an individual building

- Select an individual building detected in Section 2.2.4.

- According to the planar coordinate values of the individual building, obtain the corresponding DIM points.

- Project the DIM points onto the original aerial images, and obtain the corresponding regions of interest (ROIs) of the selected individual building from multiple aerial images. Employ the straight-line segment detector [58] to extract straight-line segments from the corresponding ROIs. The extracted straight-line segments are shown in Figure 7.

- Choose a coarse building edge and discretize this straight-line segment into 2D points at a given interval. Use the nearest neighbor interpolation algorithm [61] to convert the discretized 2D points into 3D points by fusing the height information from the DIM points. Notably, the façade points detected by the DoPP method should be removed before interpolation so that the exact coordinates of each 3D point can be obtained.

- Project the 3D points onto the selected original image according to the collinearity equations. Occlusion detection is necessary in this step.

- Fit the projected 2D points to a straight-line segment on the selected aerial image, and create a buffer region of a given size around it. Check whether the associated line segments from the aerial image intersect the buffer region. If a straight-line segment from the aerial image intersects the buffer, it is labelled as an alternative line.

- Select the two longest line segments from the alternative matching line pool. As shown in Figure 10a, the camera clearly does not lie on the straight-line segment Line 1, and likewise the other cameras do not lie on their corresponding straight-line segments. Hence, one 3D plane is generated by a camera and its straight-line segment, and another plane by a second camera and its line segment. The two planes intersect in a 3D line segment.

- Project the 3D line segment onto IMG-3. If the projected line and the corresponding line segment in IMG-3 overlap, calculate the angle and the normal distance between them; otherwise, return to the first step. Occlusion detection is necessary in this process. The normal distance is expressed in Equation (2), and the quantities involved are shown in Figure 10b.

- To ensure the robustness and accuracy of line matching, the three homonymous lines should be cross-checked. Following the method described in the previous step, use camera 1 and its line segment to generate a 3D plane, and camera 3 and its line segment to generate another 3D plane. The two planes intersect in a 3D line. Project this 3D line onto IMG-2, and check whether the normal distance and the angle between Line 2 and the projected line satisfy the given thresholds. Similarly, check the normal distance and angle between the remaining line and the projected line generated from the other two lines. If the normal distance and angle still satisfy the given thresholds, the three lines can be considered homonymous lines; otherwise, return to the first step.

- Use the three homonymous lines to create a 3D line, and project it onto the other aerial images. Search for homonymous line segments according to the given thresholds. The results are shown in Figure 11a. Use the homonymous lines to create a 3D line, and project this 3D line onto the plane to create a new building edge.
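The geometric core of the matching steps above (a plane spanned by a camera centre and a line segment, the intersection of two such planes, and the angle and distance check in the image) can be sketched as follows. This is an illustrative outline under stated assumptions: segment endpoints are taken to be already expressed as 3D points on their viewing rays, projection and occlusion detection are omitted, and the normal distance is assumed to be the mean perpendicular distance of one segment's endpoints to the other line, because Equation (2) is not reproduced here. All function names are hypothetical.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_through(camera, p, q):
    """Plane (n, d), with n . x = d, through a camera centre and the 3D segment p-q."""
    n = cross(sub(p, camera), sub(q, camera))
    return n, dot(n, camera)


def intersect_planes(plane_a, plane_b):
    """Return (point, direction) of the intersection line, or None if the planes are parallel."""
    (n1, d1), (n2, d2) = plane_a, plane_b
    direction = cross(n1, n2)
    denom = dot(direction, direction)
    if denom < 1e-12:
        return None
    # Point on both planes: ((d1 * n2 - d2 * n1) x direction) / |direction|^2
    w = tuple(d1 * b - d2 * a for a, b in zip(n1, n2))
    point = tuple(c / denom for c in cross(w, direction))
    return point, direction


def angle_and_normal_distance(seg, ref):
    """Angle in degrees between two 2D segments, and the mean distance of
    seg's endpoints to the line through ref (assumed distance definition)."""
    (a, b), (c, d) = seg, ref
    u = (b[0] - a[0], b[1] - a[1])
    v = (d[0] - c[0], d[1] - c[1])
    cos_t = abs(u[0] * v[0] + u[1] * v[1]) / (math.hypot(*u) * math.hypot(*v))
    angle = math.degrees(math.acos(min(1.0, cos_t)))

    def dist(p):
        return abs(v[0] * (p[1] - c[1]) - v[1] * (p[0] - c[0])) / math.hypot(*v)

    return angle, 0.5 * (dist(a) + dist(b))
```

In a three-image check, the line returned by `intersect_planes` for two views would be projected into the third view, and the match accepted only if both values returned by `angle_and_normal_distance` fall below the chosen thresholds.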

2.4. Regularization of Building Boundaries by the Fusion of Matched Lines and Boundary Lines

2.4.1. Adjustment of Straight-Line Segments

2.4.2. Merging of Straight-Line Segments

2.4.3. Building Footprint Generation

- First, calculate the distances from the point B to the other line segments in three directions—along the direction and two vertical directions of line segment AB. As shown in Figure 12c, the distance from the point B to the line segment CD is BK; the distance from the point B to the line segment EF is BM + ME; and the distance from the point B to the line segment HG is BN + NG.

- Find the minimum among the calculated distances from the point B to the other line segments. In this example the minimum is BK, so the line segment BK is the gap between the line segments AB and CD.

- Repeat the above two steps until all the gaps are filled. The results are shown in Figure 12d.

- First, the endpoints are coded again, and the line segments are labelled. If the line segment is located on the external contour of the entire polygon, the straight-line segment is labelled as 1; otherwise, the line segment is labelled as 2, as shown in Figure 14a.

- Choose a line arbitrarily from the lines dataset as the starting edge. Here, the line segment is selected.

- Search for the line segments that share an endpoint with the current line segment. If only one straight-line segment is found, it becomes the next line segment. If two or more straight-line segments are found, calculate the clockwise angle between each alternative line segment and the current line segment (EF in the example), and choose the alternative with the smaller angle as the target line segment. In the example, one of the calculated angles is 90°.

- Repeat the search until all the straight-line segments have been removed. The result is shown in Figure 14f.
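The selection of the next line segment by clockwise angle can be sketched as follows. This is only an illustration: the text does not state the reference direction for the angle, so the sketch assumes it is measured at the shared endpoint, from the current segment towards each alternative, in a coordinate system with the y axis pointing up. The names `clockwise_angle` and `next_segment` are hypothetical.

```python
import math


def clockwise_angle(prev_pt, pivot, next_pt):
    """Clockwise angle in degrees, in [0, 360), from ray pivot->prev_pt
    to ray pivot->next_pt (y axis pointing up)."""
    a1 = math.atan2(prev_pt[1] - pivot[1], prev_pt[0] - pivot[0])
    a2 = math.atan2(next_pt[1] - pivot[1], next_pt[0] - pivot[0])
    return math.degrees(a1 - a2) % 360.0


def next_segment(current, candidates):
    """current is (start, end); each candidate is (end, other_endpoint).

    Returns the candidate with the smallest clockwise angle at the shared endpoint.
    """
    start, end = current
    return min(candidates, key=lambda seg: clockwise_angle(start, end, seg[1]))
```

With image coordinates, where the y axis points down, the sense of rotation flips, so the sign convention would need to be adapted to the data at hand.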

3. Experiments and Performance Evaluation

3.1. Data Description and Study Area

- VH. The VH dataset is published by the International Society for Photogrammetry and Remote Sensing (ISPRS) and includes ground reference data. The object coordinate system of this dataset is the system of the Land Survey of the German federal state of Baden-Württemberg, based on a Transverse Mercator projection. The dataset contains three test areas, shown in Figure 15a–c. The orientation parameters are used to produce DIM points, and the derived DIM points of the three areas have almost the same point density of approximately 30 points/m².

- VH 1. This test area is situated in the center of the city of Vaihingen. It is characterized by dense development consisting of historic buildings having rather complex shapes, but also has some trees. There are 37 buildings in this area and the buildings are located on the hillsides.

- VH 2. This area is flat and is characterized by a few high-rise residential buildings surrounded by trees. There are 14 buildings in this test area.

- VH 3. This is a purely residential area with small detached houses and contains 56 buildings. The surface morphology of this area is relatively flat.

- Potsdam. The dataset including 210 images was collected by TrimbleAOS on 5 May 2008 in Potsdam, Germany and has a GSD of about . The reference coordinate system of this dataset is the WGS84 coordinate system with UTM zone 33N. Figure 15d shows the selected test area, which contains 4 patches according to the website (http://www2.isprs.org/commissions/comm3/wg4/2d-sem-label-potsdam.html): 6_11, 6_12, 7_11 and 7_12. The selected test area contains 54 buildings and is characterized by a typical historic city with large building blocks, narrow streets and a dense settlement structure. Because the collection times between the ISPRS VH benchmark dataset and the Potsdam dataset differ, the reference data are slightly modified by an operator.

- LN. This dataset including 170 images was collected by the Quattro DigiCAM Oblique system on 1 May 2011 in Lunen, Germany and has a GSD of . The object coordinate system of this dataset is the European Terrestrial Reference System 1989. Three patches with flat surface morphology were selected as the test areas. The reference data are obtained by a trained operator. Figure 15e–f shows the selected areas.

- LN 1. This is a purely residential area. In this area, there are 57 buildings and some vegetation.

- LN 2. In this area, there are 36 buildings, several of which are occluded by trees.

- LN 3. This area is characterized by a few high-rise buildings with complex structures. There are 47 buildings in this area.

3.2. Evaluation Criterion

3.3. Results and Discussion

3.3.1. Vaihingen Results

3.3.2. Potsdam and LN Results

- First, the filtering threshold may be larger than the height of low buildings, so some low buildings are excluded in the filtering process. Fortunately, most buildings in the Potsdam and Lunen test areas are higher than the given threshold; only one building, marked in Figure 18b, is removed.

- Second, the misclassification of trees and other objects during the object-oriented classification of the original aerial images is the main factor affecting the accuracy of building detection. Some buildings are removed because of similar spectral features and shadows. Figure 18f,g,i and Figure 19h,i show examples of missed buildings. Similarly, some trees are classified as buildings, as shown in Figure 18f.

- Third, the noise of DIM points degrades the accuracy of building detection. In addition to the over-segmentation error, under-segmentation and many-to-many segmentation errors are reported in Table 4. Figure 19g shows an example of a many-to-many segmentation error: the shadow on the surface of the building leads to over-segmentation of the building, while the adjacent buildings are merged with it because of the small connecting regions between these two buildings.

3.4. Comparative Analysis

- Relative to RMA and Hand, our proposed method obtains similar object-based completeness and accuracy in VH1 and VH3. The object-based accuracy of RMA and Hand in VH2 is 52% and 78%, respectively, which is significantly lower than that of the proposed method because NDVI is the main feature in RMA and Hand. Wrong NDVI estimates caused by shadow pixels decrease the object-based accuracy in VH2. The results show that our proposed method is more robust than RMA and Hand owing to the use of additional features.

- The DLR not only divides the objects into buildings and vegetation but also takes shadowed vegetation into account when separating buildings from other objects. Therefore, the pixel-based completeness and accuracy of DLR are slightly higher than those of our proposed method in VH1, VH2 and VH3, and also higher than those of the other four methods. The object-based completeness of DLR is lower than that of our proposed method in VH2 and VH3, mainly because the features from the original aerial images are used to detect buildings and because the small buildings can be easily detected in these two areas. In fact, few buildings are occluded in the three test areas of Vaihingen, so the advantages of our proposed method are not fully demonstrated there. The buildings labelled as k in Figure 18d and Figure 19e are partially or completely occluded, and DLR cannot detect the occluded buildings, whereas our proposed method can. Notably, because the noise of DIM points is higher than that of the LiDAR point cloud, the object-based accuracy of the proposed method is slightly lower than that of DLR in VH3. Large buildings (over 50 m²) were extracted with 100% accuracy and completeness.

- The proposed method offers better object-based completeness and accuracy than ITCR, mainly because ITCR needs to segment the LiDAR point clouds into 3D segments, which are then treated as the processing units. This process produces segmentation errors that degrade the building detection results. Furthermore, the segmentation of DIM points is more challenging because the quality of DIM points is lower than that of the LiDAR point cloud. Therefore, in our proposed method, we use the aerial images to detect buildings from non-ground points instead of segmenting the DIM point cloud.

- Fed_2 and our proposed method obtain better performance than ITCR and IIST because segmentation errors are avoided in the process of building detection. The performance of Fed_2, MON4 and our proposed method is approximately the same.

3.5. Performances of Our Proposed Building Regularization

4. Conclusions

- In the building detection stage, the results rely on the object-oriented classification of the original aerial images. Shadows are an important factor influencing this classification. In the classification process, we categorize the objects into only two classes (trees and other objects) and do not train and classify shadows as a separate class. As a result, the classification results are imperfect. In future work, we will use intensity and chromaticity to detect shadows and thereby improve performance.

- The noise of the DIM point cloud produced by dense matching is higher than that of LiDAR point clouds. Both the building detection results and the footprint regularization are affected by this noise.

- A threshold is required in the process of DIM point cloud filtering, and it cannot ensure that all buildings are preserved. In fact, low buildings may be removed, while some other objects that are higher than the threshold are preserved. An improper threshold therefore causes detection errors.

- At the stage of building footprint regularization, only straight lines are used. Therefore, for a building with a circular boundary, our proposed method cannot provide satisfactory performance.

- Finally, the morphology of buildings also influences the accuracy of building detection results. In fact, the more complex the building morphology is, the more difficult the building boundary regularization is.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Krüger, A.; Kolbe, T.H. Building Analysis for Urban Energy Planning Using Key Indicators on Virtual 3d City Models—The Energy Atlas of Berlin. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B2, 145–150.

- Arinah, R.; Yunos, M.Y.; Mydin, M.O.; Isa, N.K.; Ariffin, N.F.; Ismail, N.A. Building the Safe City Planning Concept: An Analysis of Preceding Studies. J. Teknol. 2015, 75, 95–100.

- Murtiyoso, A.; Remondino, F.; Rupnik, E.; Nex, F.; Grussenmeyer, P. Oblique Aerial Photography Tool for Building Inspection and Damage Assessment. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL-1, 309–313.

- Vetrivel, A.; Gerke, M.; Kerle, N.; Vosselman, G. Identification of damage in buildings based on gaps in 3D point clouds from very high resolution oblique airborne images. ISPRS J. Photogramm. Remote Sens. 2015, 105, 61–78.

- Karimzadeh, S.; Matsuoka, M. Building Damage Assessment Using Multisensor Dual-Polarized Synthetic Aperture Radar Data for the 2016 M 6.2 Amatrice Earthquake, Italy. Remote Sens. 2017, 9, 330.

- Stefanov, W.L.; Ramsey, M.S.; Christensen, P.R. Monitoring urban land cover change: An expert system approach to land cover classification of semiarid to arid urban centers. Remote Sens. Environ. 2001, 77, 173–185.

- Antonarakis, A.S.; Richards, K.S.; Brasington, J. Object-based land cover classification using airborne LiDAR. Remote Sens. Environ. 2008, 112, 2988–2998.

- Rottensteiner, F.; Sohn, G.; Gerke, M.; Wegner, J.D.; Breitkopf, U.; Jung, J. Results of the ISPRS benchmark on urban object detection and 3D building reconstruction. ISPRS J. Photogramm. Remote Sens. 2014, 93, 256–271.

- Poullis, C. A Framework for Automatic Modeling from Point Cloud Data. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2563–2575.

- Sun, S.; Salvaggio, C. Aerial 3D Building Detection and Modeling from Airborne LiDAR Point Clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1440–1449.

- Mongus, D.; Lukač, N.; Žalik, B. Ground and building extraction from LiDAR data based on differential morphological profiles and locally fitted surfaces. ISPRS J. Photogramm. Remote Sens. 2014, 93, 145–156.

- Liu, C.; Shi, B.; Yang, X.; Li, N. Legion Segmentation for Building Extraction from LIDAR Based Dsm Data. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B3, 291–296.

- Dahlke, D.; Linkiewicz, M.; Meissner, H. True 3D building reconstruction: Façade, roof and overhang modelling from oblique and vertical aerial imagery. Int. J. Image Data Fusion 2015, 6, 314–329.

- Nex, F.; Rupnik, E.; Remondino, F. Building Footprints Extraction from Oblique Imagery. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, II-3/W3, 61–66.

- Arefi, H.; Reinartz, P. Building Reconstruction Using DSM and Orthorectified Images. Remote Sens. 2013, 5, 1681–1703.

- Grigillo, D.; Kanjir, U. Urban object extraction from digital surface model and digital aerial images. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, I-3, 215–220.

- Gerke, M.; Xiao, J. Fusion of airborne laser scanning point clouds and images for supervised and unsupervised scene classification. ISPRS J. Photogramm. Remote Sens. 2014, 87, 78–92.

- Awrangjeb, M.; Zhang, C.; Fraser, C.S. Automatic Reconstruction of Building Roofs through Effective Integration of Lidar and Multispectral Imagery. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, I-3, 203–208.

- Rottensteiner, F.; Trinder, J.; Clode, S.; Kubik, K. Using the Dempster-Shafer method for the fusion of LiDAR data and multi-spectral images for building detection. Inf. Fusion 2005, 6, 283–300.

- Chen, L.; Zhao, S.; Han, W.; Li, Y. Building detection in an urban area using LiDAR data and QuickBird imagery. Int. J. Remote Sens. 2012, 33, 5135–5148.

- Remondino, F.; Spera, M.G.; Nocerino, E.; Menna, F.; Nex, F. State of the art in high density image matching. Photogramm. Rec. 2014, 29, 144–166.

- Myint, S.W.; Gober, P.; Brazel, A.; Grossman-Clarke, S.; Weng, Q. Per-pixel vs. object-based classification of urban land cover extraction using high spatial resolution imagery. Remote Sens. Environ. 2011, 115, 1145–1161.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Salehi, B.; Zhang, Y.; Zhong, M.; Dey, V. Object-Based Classification of Urban Areas Using VHR Imagery and Height Points Ancillary Data. Remote Sens. 2012, 4, 2256–2276.

- Pu, R.; Landry, S.; Yu, Q. Object-Based Urban Detailed Land Cover Classification with High Spatial Resolution IKONOS Imagery. Int. J. Remote Sens. 2011, 32, 3285–3308.

- Burnett, C.; Blaschke, T. A multi-scale segmentation/object relationship modeling methodology for landscape analysis. Ecol. Model. 2003, 168, 233–249.

- Rottensteiner, F.; Trinder, J.; Clode, S.; Kubik, K. Building detection by fusion of airborne laserscanner data and multi-spectral images: Performance evaluation and sensitivity analysis. ISPRS J. Photogramm. Remote Sens. 2007, 62, 135–149.

- ISPRS. ISPRS Test Project on Urban Classification, 3D Building Reconstruction and Semantic Labeling. 2013. Available online: http://www2.isprs.org/commissions/comm3/wg4/tests.html (accessed on 20 May 2018).

- Tarantino, E.; Figorito, B. Extracting Buildings from True Color Stereo Aerial Images Using a Decision Making Strategy. Remote Sens. 2011, 3, 1553–1567.

- Xiao, J.; Gerke, M.; Vosselman, G. Building extraction from oblique airborne imagery based on robust façade detection. ISPRS J. Photogramm. Remote Sens. 2012, 68, 56–68.

- Rau, J.Y.; Jhan, J.P.; Hsu, Y.C. Analysis of Oblique Aerial Images for Land Cover and Point Cloud Classification in an Urban Environment. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1304–1319.

- Jwa, Y.; Sohn, G.; Tao, V.; Cho, W. An implicit geometric regularization of 3D building shape using airborne LiDAR data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, XXXVII, 69–76.

- Brunn, A.; Weidner, U. Model-Based 2D-Shape Recovery. In Proceedings of the DAGM-Symposium, Bielefeld, Germany, 13–15 September 1995; Springer: Berlin, Germany, 1995; pp. 260–268.

- Ameri, B. Feature based model verification (FBMV): A new concept for hypothesis validation in building reconstruction. Int. Arch. Photogramm. Remote Sens. 2000, 33, 24–35.

- Sampath, A.; Shan, J. Building Boundary Tracing and Regularization from Airborne LiDAR Point Clouds. Photogramm. Eng. Remote Sens. 2007, 73, 805–812.

- Jarzabek-Rychard, M. Reconstruction of Building Outlines in Dense Urban Areas Based on LIDAR Data and Address Points. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 121–126.

- Rottensteiner, F. Automatic generation of high-quality building models from LiDAR data. Comput. Graph. Appl. IEEE 2003, 23, 42–50.

- Sohn, G.D.I. Data fusion of high-resolution satellite imagery and LiDAR data for automatic building extraction. ISPRS J. Photogramm. Remote Sens. 2007, 62, 43–63.

- Gilani, S.; Awrangjeb, M.; Lu, G. An Automatic Building Extraction and Regularization Technique Using LiDAR Point Cloud Data and Ortho-image. Remote Sens. 2016, 8, 258.

- Jakobsson, M.; Rosenberg, N.A. CLUMPP: A cluster matching and permutation program for dealing with label switching and multimodality in analysis of population structure. Bioinformatics 2007, 23, 1801–1806.

- Habib, A.F.; Zhai, R.; Kim, C. Generation of Complex Polyhedral Building Models by Integrating Stereo-Aerial Imagery and LiDAR Data. Photogramm. Eng. Remote Sens. 2010, 76, 609–623.

- Agisoft. Agisoft PhotoScan. 2018. Available online: http://www.agisoft.ru/products/photoscan/professional/ (accessed on 25 May 2018).

- Sithole, G.; Vosselman, G. Experimental comparison of filter algorithms for bare-Earth extraction from airborne laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2004, 59, 85–101.

- Zhang, W.; Qi, J.; Wan, P.; Wang, H.; Xie, D.; Wang, X.; Yan, G. An Easy-to-Use Airborne LiDAR Data Filtering Method Based on Cloth Simulation. Remote Sens. 2016, 8, 501.

- Axelsson, P. DEM generation from laser scanner data using adaptive TIN models. Int. Arch. Photogramm. Remote Sens. 2000, 33 Pt B4, 110–117.

- Dong, Y.; Cui, X.; Zhang, L.; Ai, H. An Improved Progressive TIN Densification Filtering Method Considering the Density and Standard Variance of Point Clouds. ISPRS Int. J. Geo-Inf. 2018, 7, 409.

- Devereux, B.J.; Amable, G.S.; Posada, C.C. An efficient image segmentation algorithm for landscape analysis. Int. J. Appl. Earth Obs. Geo-Inf. 2004, 6, 47–61.

- Defries, R.S.; Townshend, J.R.G. NDVI-derived land cover classifications at a global scale. Int. J. Remote Sens. 1994, 15, 3567–3586.

- Awrangjeb, M.; Ravanbakhsh, M.; Fraser, C.S. Automatic detection of residential buildings using LIDAR data and multispectral imagery. ISPRS J. Photogramm. Remote Sens. 2010, 65, 457–467.

- Tomimatsu, H.; Itano, S.; Tsutsumi, M.; Nakamura, T.; Maeda, S. Seasonal change in statistics for the floristic composition of Miscanthus- and Zoysia-dominated grasslands. Jpn. J. Grassl. Sci. 2009, 55, 48–53.

- Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

- Pal, M. Random forest classifier for remote sensing classification. Int. J. Remote Sens. 2005, 26, 217–222.

- Habib, A.F.; Kim, E.M.; Kim, C.J. New Methodologies for True Orthophoto Generation. Photogramm. Eng. Remote Sens. 2007, 73, 25–36.

- Wu, K.; Otoo, E.; Suzuki, K. Optimizing two-pass connected-component labeling algorithms. Pattern Anal. Appl. 2009, 12, 117–135.

- Zhong, S.W.; Bi, J.L.; Quan, L.Q. A Method for Segmentation of Range Image Captured by Vehicle-borne Laser scanning Based on the Density of Projected Points. Acta Geod. Cartogr. Sin. 2005, 34, 95–100.

- Iwanowski, M.; Soille, P. Fast Algorithm for Order Independent Binary Homotopic Thinning. In Proceedings of the International Conference on Adaptive and Natural Computing Algorithms, Warsaw, Poland, 11–14 April 2007; Springer: Berlin, Germany, 2007; pp. 606–615.

- Li, C.; Wang, Z.; Li, L. An Improved HT Algorithm on Straight Line Detection Based on Freeman Chain Code. In Proceedings of the 2009 2nd International Congress on Image and Signal Processing, Tianjin, China, 17–19 October 2009; pp. 1–4.

- Lu, X.; Yao, J.; Li, K.; Li, L. CannyLines: A parameter-free line segment detector. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 507–511.

- Cen, A.; Wang, C.; Hama, H. A fast algorithm of neighbourhood coding and operations in neighbourhood coding image. Mem. Fac. Eng. Osaka City Univ. 1995, 36, 77–84.

- Visvalingam, M.; Whyatt, J.D. The Douglas-Peucker Algorithm for Line Simplification: Re-evaluation through Visualization. Comput. Graph. Forum 1990, 9, 213–225.

- Maeland, E. On the comparison of interpolation methods. IEEE Trans. Med. Imaging 1988, 7, 213–217.

- Rutzinger, M.; Rottensteiner, F.; Pfeifer, N. A comparison of evaluation techniques for building extraction from airborne laser scanning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2009, 2, 11–20. [Google Scholar] [CrossRef]

| Symbols | Description |
|---|---|
| Cm, Cm,50 | Completeness for all the buildings, over 50 m² and over 2.5 m², object-based detection |
| Cr, Cr,50 | Correctness for all the buildings, over 50 m² and over 2.5 m², object-based detection |
| Ql, Ql,50 | Quality for all the buildings, over 50 m² and over 2.5 m², object-based detection |
| Cmp, Cmp,50 | Completeness for all the buildings, over 50 m² and over 2.5 m², pixel-based detection |
| Crp, Crp,50 | Correctness for all the buildings, over 50 m² and over 2.5 m², pixel-based detection |
| Qlp | Quality of all buildings, pixel-based detection |
| RMSE | Planimetric accuracy in meters |
| N1:M | M detected buildings correspond to one building in the reference (over-segmented) |
| NN:1 | A detected building corresponds to N buildings in the reference (under-segmented) |
| NN:M | Both over- and under-segmentation in the number of buildings |
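
The completeness, correctness and quality measures listed above are usually computed from counts of true positives, false positives and false negatives, as in the evaluation literature cited in the reference list (Rutzinger et al.). The following is a minimal sketch under that assumption; the function names and the example counts are hypothetical and are not taken from the paper. It also shows that, under these definitions, quality can be recovered from completeness and correctness alone, which gives a quick way to sanity-check a table row.

```python
def detection_metrics(tp, fp, fn):
    """Return (completeness, correctness, quality) in percent.

    Assumes the standard definitions (not spelled out in this section):
      completeness = TP / (TP + FN)
      correctness  = TP / (TP + FP)
      quality      = TP / (TP + FP + FN)
    The counts may be buildings (object-based) or pixels (pixel-based).
    """
    if tp <= 0:
        raise ValueError("at least one true positive is required")
    completeness = tp / (tp + fn)
    correctness = tp / (tp + fp)
    quality = tp / (tp + fp + fn)
    return 100.0 * completeness, 100.0 * correctness, 100.0 * quality


def quality_from_rates(completeness_pct, correctness_pct):
    """Quality implied by completeness and correctness (all in percent).

    Follows from the definitions above: 1/Q = 1/C + 1/R - 1.
    """
    c = completeness_pct / 100.0
    r = correctness_pct / 100.0
    return 100.0 / (1.0 / c + 1.0 / r - 1.0)


if __name__ == "__main__":
    # Hypothetical counts: 81 correct detections, 0 false alarms, 19 misses.
    print(detection_metrics(81, 0, 19))
    # Cross-check of a reported pixel-based row (Cmp = 90.38, Crp = 92.29).
    print(round(quality_from_rates(90.38, 92.29), 2))
```

Because the reported rates are themselves rounded, the recomputed quality can differ from a tabulated value in the last decimal place, so such a check is best done with a small tolerance.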

| Method | Areas | Cm | Cr | Ql | Cm,50 | Cr,50 | Ql,50 | N1:M | NN:1 | NN:M |
|---|---|---|---|---|---|---|---|---|---|---|
| Before regularization | VH 1 | 81.00 | 100.00 | 81.00 | 100.00 | 100.00 | 100.00 | 0 | 2 | 1 |
| | VH 2 | 83.30 | 100.00 | 83.30 | 100.00 | 100.00 | 100.00 | 0 | 0 | 0 |
| | VH 3 | 87.20 | 95.23 | 81.60 | 100.00 | 100.00 | 100.00 | 0 | 2 | 0 |
| | Average | 83.83 | 98.41 | 81.97 | 100.00 | 100.00 | 100.00 | 0 | 1.33 | 0.33 |
| After regularization | VH 1 | 81.00 | 100.00 | 81.00 | 100.00 | 100.00 | 100.00 | 0 | 2 | 1 |
| | VH 2 | 83.30 | 100.00 | 83.30 | 100.00 | 100.00 | 100.00 | 0 | 0 | 0 |
| | VH 3 | 87.20 | 95.23 | 81.60 | 100.00 | 100.00 | 100.00 | 0 | 2 | 0 |
| | Average | 83.83 | 98.41 | 81.97 | 100.00 | 100.00 | 100.00 | 0 | 1.33 | 0.33 |

| Proposed Detection | Areas | Cmp | Crp | Qlp | RMSE |
|---|---|---|---|---|---|
| Before regularization | VH 1 | 90.70 | 91.20 | 83.45 | 0.853 |
| | VH 2 | 89.14 | 95.47 | 88.55 | 0.612 |
| | VH 3 | 90.42 | 95.22 | 86.50 | 0.727 |
| | Average | 90.09 | 93.96 | 86.17 | 0.731 |
| After regularization | VH 1 | 90.38 | 92.29 | 84.04 | 0.844 |
| | VH 2 | 92.37 | 96.75 | 89.59 | 0.591 |
| | VH 3 | 90.70 | 95.68 | 87.13 | 0.632 |
| | Average | 91.15 | 94.91 | 86.92 | 0.690 |

| Method | Areas | Cm | Cr | Ql | Cm,50 | Cr,50 | Ql,50 | N1:M | NN:1 | NN:M |
|---|---|---|---|---|---|---|---|---|---|---|
| Before regularization | Potsdam | 90.74 | 100 | 90.74 | 100 | 100 | 100 | 1 | 4 | 0 |
| | | 87.71 | 96.15 | 84.75 | 97.06 | 100 | 97.06 | 0 | 8 | 0 |
| | | 94.4 | 97.14 | 91.89 | 100 | 100 | 100 | 0 | 3 | 0 |
| | | 86.05 | 94.59 | 82.22 | 100 | 100 | 100 | 0 | 2 | 0 |
| | Average | 89.73 | 96.97 | 87.4 | 99.27 | 100 | 99.27 | 0.25 | 4.25 | 0.25 |
| After regularization | Potsdam | 90.74 | 100 | 90.74 | 100 | 100 | 100 | 1 | 3 | 0 |
| | | 87.71 | 96.15 | 84.75 | 97.06 | 100 | 97.06 | 0 | 8 | 0 |
| | | 94.4 | 97.14 | 91.89 | 100 | 100 | 100 | 0 | 2 | 1 |
| | | 86.05 | 94.59 | 82.22 | 100 | 100 | 100 | 0 | 2 | 0 |
| | Average | 89.73 | 96.97 | 87.4 | 99.27 | 100 | 99.27 | 0.25 | 4.25 | 0.25 |

| Proposed Detection | Areas | Cmp | Crp | Qlp | RMSE |
|---|---|---|---|---|---|
| Before regularization | Potsdam | 94.9 | 95.1 | 90.5 | 0.913 |
| | | 93.15 | 89.33 | 83.82 | 0.882 |
| | | 91.81 | 93.05 | 85.92 | 0.816 |
| | | 95.72 | 93.78 | 90 | 0.754 |
| | Average | 93.9 | 92.82 | 87.56 | 0.841 |
| After regularization | Potsdam | 94.61 | 95.7 | 90.76 | 0.843 |
| | | 93.32 | 90.02 | 84.56 | 0.813 |
| | | 92.3 | 94.12 | 87.27 | 0.742 |
| | | 96.4 | 93.69 | 90.52 | 0.715 |
| | Average | 94.16 | 93.38 | 88.28 | 0.778 |

| Benchmark Data Set | Methods | Data Type | Processing Strategy | Reference |
|---|---|---|---|---|
| VH | DLR | Image | supervised | [8] |
| | RMA | Image | data-driven | [8] |
| | Hand | Image | Dempster-Shafer | [8] |
| | Fed_2 | LiDAR + image | data-driven | [39] |
| | ITCR | LiDAR + image | supervised | [17] |
| | MON4 | LiDAR + image | data-driven | [28] |

| Area | Method | Cm | Cr | Cm,50 | Cr,50 | Cmp | Crp | RMSE | N1:M | NN:1 | NN:M |
|---|---|---|---|---|---|---|---|---|---|---|---|
| VH1 | DLR | 83.8 | 96.9 | 100 | 100 | 91.9 | 95.4 | 0.9 | - | - | - |
| | RMA | 83.8 | 96.9 | 100 | 100 | 91.6 | 92.4 | 1.0 | - | - | - |
| | Hand | 83.8 | 93.9 | 100 | 100 | 93.8 | 90.5 | 0.9 | - | - | - |
| | Fed_2 | 83.8 | 100 | 100 | 100 | 85.4 | 86.6 | 1.0 | 0 | 6 | 0 |
| | ITCR | 86.5 | 91.4 | 100 | 100 | 91.2 | 90.3 | 1.1 | - | - | - |
| | MON4 | 89.2 | 93.9 | 100 | 100 | 92.1 | 83.9 | 1.3 | - | - | - |
| | PM | 81 | 100 | 100 | 100 | 90.38 | 92.29 | 0.8 | 0 | 2 | 1 |
| VH2 | DLR | 78.6 | 100 | 100 | 100 | 94.3 | 97 | 0.6 | - | - | - |
| | RMA | 85.7 | 52.2 | 100 | 100 | 95.4 | 85.9 | 0.9 | - | - | - |
| | Hand | 78.6 | 78.6 | 100 | 100 | 95.1 | 89.8 | 0.8 | - | - | - |
| | Fed_2 | 85.7 | 100 | 100 | 100 | 88.8 | 84.5 | 0.9 | 0 | 2 | 0 |
| | ITCR | 78.6 | 42.3 | 100 | 100 | 94 | 89 | 0.8 | - | - | - |
| | MON4 | 85.7 | 91.7 | 100 | 100 | 97.2 | 83.5 | 1.1 | - | - | - |
| | PM | 83.8 | 100 | 100 | 100 | 92.37 | 96.75 | 0.6 | 0 | 0 | 0 |
| VH3 | DLR | 78.6 | 100 | 100 | 100 | 93.7 | 95.5 | 0.7 | - | - | - |
| | RMA | 78.6 | 93.9 | 100 | 100 | 91.3 | 92.4 | 0.8 | - | - | - |
| | Hand | 78.6 | 92.8 | 92.1 | 100 | 91.9 | 90.6 | 0.8 | - | - | - |
| | Fed_2 | 82.1 | 95.7 | 100 | 100 | 89.9 | 84.7 | 1.1 | 0 | 5 | 0 |
| | ITCR | 75 | 78.2 | 94.7 | 100 | 89.1 | 92.5 | 0.8 | - | - | - |
| | MON4 | 76.8 | 95.7 | 97.4 | 100 | 93.7 | 81.3 | 1.0 | - | - | - |
| | PM | 85.11 | 95.23 | 100 | 100 | 90.7 | 95.68 | 0.6 | 0 | 2 | 0 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Dong, Y.; Zhang, L.; Cui, X.; Ai, H.; Xu, B. Extraction of Buildings from Multiple-View Aerial Images Using a Feature-Level-Fusion Strategy. Remote Sens. 2018, 10, 1947. https://0-doi-org.brum.beds.ac.uk/10.3390/rs10121947