Can Multispectral Information Improve Remotely Sensed Estimates of Total Suspended Solids? A Statistical Study in Chesapeake Bay

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Description

2.1.1. Satellite Data Processing

2.1.2. In situ Measurements

2.1.3. Satellite-in Situ Matchups

2.2. Methods

2.2.1. Statistical Methodology

2.2.2. Statistical and Machine Learning Models

2.2.3. Geographic and Temporal Cross-Validation

3. Results and Discussion

3.1. Model Comparison

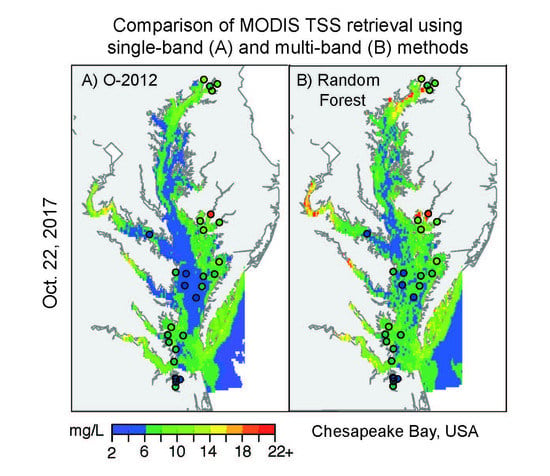

3.2. Comparison with Single-Band TSS Algorithm

3.3. Daily Satellite, in situ Mapped Comparisons

3.4. Geographic and Temporal Cross-Validation

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Caburlotto, G.; Haley, B.J.; Lleò, M.M.; Huq, A.; Colwell, R.R. Serodiversity and ecological distribution of Vibrio parahaemolyticus in the Venetian Lagoon, Northeast Italy. Environ. Microbiol. Rep. 2010, 2, 151–157.

- Johnson, C.N.; Bowers, J.C.; Griffith, K.J.; Molina, V.; Clostio, R.W.; Pei, S.; Laws, E.; Paranjpye, R.N.; Strom, M.S.; Chen, A.; et al. Ecology of Vibrio parahaemolyticus and Vibrio vulnificus in the Coastal and Estuarine Waters of Louisiana, Maryland, Mississippi, and Washington (United States). Appl. Environ. Microbiol. 2012, 78, 7249–7257.

- Davis, B.J.K.; Jacobs, J.M.; Davis, M.F.; Schwab, K.J.; DePaola, A.; Curriero, F.C. Environmental determinants of Vibrio parahaemolyticus in the Chesapeake Bay. Appl. Environ. Microbiol. 2017, 83, e01147-17.

- Stumpf, R.P. Sediment transport in Chesapeake Bay during floods: Analysis using satellite and surface observations. J. Coastal Res. 1988, 4, 1–15.

- Hu, C.; Chen, Z.; Clayton, T.; Swarzenski, P.; Brock, J.; Muller-Karger, F. Assessment of estuarine water-quality indicators using MODIS medium-resolution bands: Initial results from Tampa Bay, FL. Remote Sens. Environ. 2004, 93, 423–441.

- Doxaran, D.; Ehn, J.; Bélanger, S.; Matsuoka, A.; Hooker, S.; Babin, M. Optical characterisation of suspended particles in the Mackenzie River plume (Canadian Arctic Ocean) and implications for ocean colour remote sensing. Biogeosciences 2012, 9, 3213–3229.

- Wang, H.; Hladik, C.M.; Huang, W.; Milla, K.; Edmiston, L.; Harwell, M.A.; Schalles, J.F. Detecting the spatial and temporal variability of chlorophyll-a concentration and total suspended solids in Apalachicola Bay, Florida using MODIS imagery. Int. J. Remote Sens. 2010, 31, 439–453.

- Chen, S.; Huang, W.; Chen, W.; Chen, X. An enhanced MODIS remote sensing model for detecting rainfall effects on sediment plume in the coastal waters of Apalachicola Bay. Mar. Environ. Res. 2011, 72, 265–272.

- Zhao, H.; Chen, Q.; Walker, N.D.; Zheng, Q.; MacIntyre, H.L. A study of sediment transport in a shallow estuary using MODIS imagery and particle tracking simulation. Int. J. Remote Sens. 2011, 32, 6653–6671.

- Ondrusek, M.; Stengel, E.; Kinkade, C.S.; Vogel, R.L.; Keegstra, P.; Hunter, C.; Kim, C. The development of a new optical total suspended material algorithm for the Chesapeake Bay. Remote Sens. Environ. 2012, 119, 243–254.

- Feng, L.; Hu, C.; Chen, X.; Song, Q. Influence of the three gorges dam on total suspended matters in the Yangtze estuary and its adjacent coastal waters: Observations from MODIS. Remote Sens. Environ. 2014, 140, 779–788.

- Shen, F.; Zhou, Y.; Peng, X.; Chen, Y. Satellite multi-sensor mapping of suspended particulate matter in turbid estuarine and coastal ocean, China. Int. J. Remote Sens. 2014, 35, 4173–4192.

- Dogliotti, A.I.; Ruddick, K.G.; Nechad, B.; Doxaran, D.; Knaeps, E. A single algorithm to retrieve turbidity from remotely-sensed data in all coastal and estuarine waters. Remote Sens. Environ. 2015, 156, 157–168.

- Han, B.; Loisel, H.; Vantrepotte, V.; Mériaux, X.; Bryére, P.; Ouillon, S.; Dessailly, D.; Xing, Q.; Zhu, J. Development of a semi-analytical algorithm for the retrieval of suspended particulate matter from remote sensing over clear to very turbid waters. Remote Sens. 2016, 8, 211.

- Hasan, M.; Benninger, L. Resiliency of the western Chesapeake Bay to total suspended solid concentrations following storms and accounting for land-cover. Estuar. Coast. Shelf Sci. 2017, 191, 136–149.

- Chen, J.; D’Sa, E.; Cui, T.; Zhang, X. A semi-analytical total suspended sediment retrieval model in turbid coastal waters: A case study in Changjiang River Estuary. Opt. Express 2013, 21, 13018–13031.

- Stumpf, R.P.; Pennock, J.R. Calibration of a general optical equation for remote sensing of suspended sediments in a moderately turbid estuary. J. Geophys. Res. 1989, 94, 14363–14371.

- Tzortziou, M.; Herman, J.; Gallegos, C.; Neale, P.; Subramaniam, A.; Harding, L. Bio-optics of the Chesapeake Bay from measurements and radiative transfer closure. Estuar. Coast. Shelf Sci. 2006, 68, 348–362.

- Mitchell, C.; Hu, C.; Bowler, B.; Drapeau, D.; Balch, W.M. Estimating particulate inorganic carbon concentrations of the global ocean from ocean color measurements using a reflectance difference approach. J. Geophys. Res. Oceans 2017, 122, 8707–8720.

- Qiu, Z. A simple optical model to estimate suspended particulate matter in Yellow River Estuary. Opt. Express 2013, 21, 27891–27904.

- Sokoletsky, L.; Shen, F.; Yang, X.; Wei, X. Evaluation of Empirical and Semianalytical Spectral Reflectance Models for Surface Suspended Sediment Concentration in the Highly Variable Estuarine and Coastal Waters of East China. IEEE J. Sel. Top. Appl. 2016, 9, 1–11.

- Smith, R.C.; Baker, K.S. Optical classification of natural waters. Limnol. Oceanogr. 1978, 23, 260–267.

- Bukata, R.P.; Jerome, J.H.; Kondratyev, K.Y.; Pozdnyakov, D.V. Optical Properties and Remote Sensing of Inland and Coastal Waters; CRC Press: Boca Raton, FL, USA, 1995.

- Kemp, W.; Boynton, W.; Adolf, J.; Boesch, D.; Boicourt, W.; Brush, G.; Cornwell, J.; Fisher, T.; Glibert, P.; Hagy, J.; et al. Eutrophication of Chesapeake Bay: Historical trends and ecological interactions. Mar. Ecol. Prog. Ser. 2005, 303, 1–29.

- Brush, G.S. Rates and patterns of estuarine sediment accumulation. Limnol. Oceanogr. 1989, 34, 1235–1246.

- Clean Water Act Section 303(d): Notice for the Establishment of the Total Maximum Daily Load (TMDL) for the Chesapeake Bay (EPA). Available online: https://www.gpo.gov/fdsys/pkg/FR-2011-01-05/pdf/2010-33280.pdf (accessed on 12 December 2017).

- NASA’s OceanColor Web. Available online: https://oceancolor.gsfc.nasa.gov (accessed on 16 August 2017).

- Bailey, S.W.; Franz, B.A.; Werdell, P.J. Estimation of near-infrared water-leaving reflectance for satellite ocean color data processing. Opt. Express 2010, 18, 7521–7527.

- Aurin, D.; Mannino, A.; Franz, B. Spatially resolving ocean color and sediment dispersion in river plumes, coastal systems, and continental shelf waters. Remote Sens. Environ. 2013, 137, 212–225.

- Chesapeake Bay Program Water Quality Database (1984-present). Available online: http://www.chesapeakebay.net/what/downloads/cbp_water_quality_database_1984_present (accessed on 16 August 2017).

- Urquhart, E.A.; Zaitchik, B.F.; Hoffman, M.J.; Guikema, S.D.; Geiger, E.F. Remotely sensed estimates of surface salinity in the Chesapeake Bay: A statistical approach. Remote Sens. Environ. 2012, 123, 522–531.

- Nychka, D.; Furrer, R.; Paige, J.; Sain, S. Fields: Tools for Spatial Data. R package version 9.0. Available online: https://cran.r-project.org/web/packages/fields/fields.pdf (accessed on 1 September 2018).

- R Core Team. R: A Language and Environment for Statistical Computing. Available online: http://www.R-project.org (accessed on 16 August 2017).

- Breiman, L.; Friedman, J.; Olshen, R.; Stone, C. Classification and Regression Trees; Chapman and Hall/CRC: Boca Raton, FL, USA, 1984.

- Chipman, H.; George, E.I.; McCulloch, R. BART: Bayesian additive regression trees. Ann. Appl. Stat. 2010, 4, 266–298.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Nelder, J.; Wedderburn, R. Generalized linear models. J. R. Stat. Soc. Ser. A. 1972, 135, 370–384.

- Hastie, T.; Tibshirani, R. Generalized additive models. Stat. Sci. 1986, 1, 297–310.

- Friedman, J. Multivariate adaptive regression splines. Ann. Stat. 1991, 19, 1–141.

- Lee, K.Y.; Cha, Y.T.; Park, J.H. Short-term load forecasting using an artificial neural network. IEEE Trans. Power Syst. 1992, 7, 124–132.

- James, G.; Witten, D.; Hastie, T.; Tibshirani, R. An Introduction to Statistical Learning with Applications in R; Springer: New York, NY, USA, 2013.

- Holm, S. A simple sequentially rejective multiple test procedure. Scand. J. Stat. 1979, 6, 65–70.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Dorji, P.; Fearns, P. A quantitative comparison of total suspended sediment algorithms: A case study of the last decade for MODIS and Landsat-based sensors. Remote Sens. 2016, 8, 810.

| Variable | Mean | Standard Deviation | Maximum | Minimum |
|---|---|---|---|---|
| In situ TSS (mg/L) | 8.50 | 5.41 | 47.00 | 2.18 |
| nLw(645) (μW/cm²/nm/sr) | 0.7127 | 0.5240 | 3.4386 | 0.0683 |
| Rrs_412 (sr⁻¹) | 0.0021 | 0.0021 | 0.0239 | 8.71 × 10⁻¹⁰ |
| Rrs_443 (sr⁻¹) | 0.0030 | 0.0019 | 0.0223 | 0.0002 |
| Rrs_469 (sr⁻¹) | 0.0039 | 0.0020 | 0.0215 | 0.0002 |
| Rrs_488 (sr⁻¹) | 0.0044 | 0.0021 | 0.0192 | 0.0004 |
| Rrs_531 (sr⁻¹) | 0.0068 | 0.0029 | 0.0189 | 0.0013 |
| Rrs_547 (sr⁻¹) | 0.0075 | 0.0032 | 0.0212 | 0.0017 |
| Rrs_555 (sr⁻¹) | 0.0074 | 0.0031 | 0.0209 | 0.0016 |
| Rrs_645 (sr⁻¹) | 0.0045 | 0.0033 | 0.0217 | 0.0004 |
| Rrs_667 (sr⁻¹) | 0.0036 | 0.0030 | 0.0205 | 0.0002 |
| Rrs_678 (sr⁻¹) | 0.0036 | 0.0029 | 0.0205 | 0.0001 |
| Rrs_859 (sr⁻¹) | 0.0009 | 0.0009 | 0.0079 | 2.00 × 10⁻⁶ |

| | RF | GAM | GLM | NN | MARS | CART | BART | SVM | Mean |
|---|---|---|---|---|---|---|---|---|---|
| MAE | 2.42 | 2.64 | 2.69 | 2.76 | 2.74 | 2.87 | 2.73 | 2.57 | 4.01 |
| MSE | 19.04 | 20.49 | 22.43 | 24.32 | 21.98 | 23.22 | 21.73 | 22.19 | 35.36 |
| RMSE | 4.36 | 4.53 | 4.74 | 4.93 | 4.69 | 4.82 | 4.66 | 4.71 | 5.95 |
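
The three error metrics reported in the table above (MAE, MSE, RMSE) can be illustrated with a minimal Python sketch. It assumes the standard definitions of these metrics and assumes that the "Mean" column is a mean-only baseline; neither is confirmed by the surviving text. The function name `error_metrics` and all TSS values are hypothetical and invented for illustration, not taken from the study's data.

```python
import math


def error_metrics(observed, predicted):
    """Return (MAE, MSE, RMSE) for paired observations and predictions."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    residuals = [p - o for o, p in zip(observed, predicted)]
    mae = sum(abs(r) for r in residuals) / len(residuals)
    mse = sum(r * r for r in residuals) / len(residuals)
    return mae, mse, math.sqrt(mse)


# Hypothetical in situ TSS values (mg/L), invented for illustration only.
tss_obs = [4.0, 6.0, 8.0, 10.0, 22.0]
# Hypothetical model predictions for the same samples.
tss_pred = [5.0, 6.0, 7.0, 12.0, 18.0]
# Assumed mean-only baseline: predict the sample mean for every sample.
baseline = [sum(tss_obs) / len(tss_obs)] * len(tss_obs)

model_mae, model_mse, model_rmse = error_metrics(tss_obs, tss_pred)
base_mae, base_mse, base_rmse = error_metrics(tss_obs, baseline)

print(f"model:    MAE={model_mae:.2f} MSE={model_mse:.2f} RMSE={model_rmse:.2f}")
print(f"baseline: MAE={base_mae:.2f} MSE={base_mse:.2f} RMSE={base_rmse:.2f}")
```

Because MSE squares each residual, a few large misses on high-TSS samples raise MSE and RMSE much more than MAE, which may explain why models can rank differently across the three rows. In a cross-validated setting the baseline mean would normally come from the training folds only; the sketch uses the full sample for brevity.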

| Model | MAE | MSE | RMSE |
|---|---|---|---|
| RF | 2.38 * | 18.46 * | 4.30 |
| RF(645) | 2.76 | 20.81 | 4.56 |
| O-2012 | 2.97 | 31.44 | 5.61 |
| O-2012(fit) | 2.71 | 21.77 | 4.67 |

| | RF (Above 80th Percentile) | O-2012 (Above 80th Percentile) | RF (Below 80th Percentile) | O-2012 (Below 80th Percentile) |
|---|---|---|---|---|
| MAE | 5.16 * | 8.33 | 1.67 | 1.62 |
| MSE | 67.97 * | 137.07 | 5.92 | 4.67 |
| RMSE | 8.24 | 11.71 | 2.43 | 2.16 |

| Metric | Model | East for West (n = 604) | West for East (n = 756) | North for South (n = 581) | South for North (n = 779) | Mainstem for Tributary (n = 878) | Tributary for Mainstem (n = 482) | High for Low (n = 556) | Low for High (n = 804) |
|---|---|---|---|---|---|---|---|---|---|
| MAE | RF | 3.32 * | 2.41 * | 2.70 | 3.66 * | 3.65 * | 2.58 * | 2.62 | 2.90 |
| MAE | O-2012 | 3.50 | 2.08 | 2.68 | 3.12 | 3.89 | 2.31 | 2.75 | 3.04 |
| MSE | RF | 26.95 * | 14.37 | 21.71 | 25.37 | 33.24 * | 15.23 | 17.00 * | 21.50 * |
| MSE | O-2012 | 35.26 | 11.94 | 24.31 | 25.70 | 40.34 | 16.43 | 22.82 | 27.91 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

DeLuca, N.M.; Zaitchik, B.F.; Curriero, F.C. Can Multispectral Information Improve Remotely Sensed Estimates of Total Suspended Solids? A Statistical Study in Chesapeake Bay. Remote Sens. 2018, 10, 1393. https://doi.org/10.3390/rs10091393
