Reconstructing Cloud Contaminated Pixels Using Spatiotemporal Covariance Functions and Multitemporal Hyperspectral Imagery

Abstract

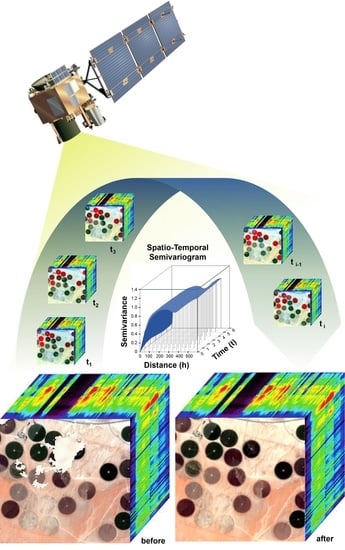

1. Introduction

2. Materials and Methods

2.1. Study Site

2.2. Hyperion Data Pre-Processing

2.3. Spatiotemporal Statistics Approach

2.4. Block Sampling Scheme

2.5. Spatiotemporal Covariance Function

2.6. Exploratory Data Analysis

2.7. Spatiotemporal Covariance Model Fitting and Kriging

2.8. Evaluation of Results

3. Results

3.1. Spatiotemporal Covariance Model

3.2. Reflectance Predictions

3.3. Evaluation of Cloud-Free Imagery

4. Discussion

4.1. Spatiotemporal Statistical Model for Predicting Reflectance

4.2. Assessment of the Spectral Consistency

4.3. Block Sampling Strategy for Predictions in Heterogeneous Areas

4.4. Computational Efficiency

5. Conclusions

Author Contributions

Funding

Acknowledgments

References

- Gamon, J.A.; Qiu, H.; Sanchez-Azofeifa, A. Ecological applications of remote sensing at multiple scales. In Functional Plant Ecology, 2nd ed.; Pugnaire, F., Valladares, F., Eds.; CRC Press: Boca Raton, FL, USA, 2007; Volume 1, pp. 655–675.

- Ustin, S.L.; Gamon, J.A. Remote sensing of plant functional types. New Phytol. 2010, 186, 795–816.

- Malenovský, Z.; Mishra, K.; Zemek, F.; Rascher, U.; Nedbal, L. Scientific and technical challenges in remote sensing of plant canopy reflectance and fluorescence. J. Exp. Bot. 2009, 60, 2987–3004.

- Dale, L.; Thewis, A.; Boudry, C.; Rotar, L.; Dardenne, P.; Baeten, V.; Fernández, J.A. Hyperspectral imaging applications in agriculture and agro-food product quality and safety control: A review. Appl. Spectrosc. Rev. 2013, 48, 142–159.

- Preliminary Assessment of the Value of Landsat 7 ETM+ SLC-off Data. Available online: https://landsat.usgs.gov/sites/default/files/documents/SLC_off_Scientific_Usability.pdf (accessed on 8 April 2019).

- Tanre, D.; Deschamps, P.Y.; Devaux, C.; Herman, M. Estimation of Saharan aerosol optical thickness from blurring effects in thematic mapper data. J. Geophys. Res. 1988, 93, 15955–15964.

- Adler-Golden, S.M.; Robertson, D.C.; Richtsmeier, S.C.; Ratkowski, A.J. Cloud effects in hyperspectral imagery from first-principles scene simulations. In Proceedings of the SPIE Defense, Security, and Sensing, Orlando, FL, USA, 27 April 2009.

- Validation of On-Board Cloud Cover Assessment Using EO-1. Available online: https://eo1.gsfc.nasa.gov/new/extended/sensorWeb/EO-1_Validation On-board Cloud Assessment_Rpt.pdf (accessed on 8 April 2019).

- Ju, J.; Roy, D.P. The availability of cloud-free Landsat ETM Plus data over the conterminous United States and globally. Remote Sens. Environ. 2007, 112, 1196–1211.

- Lin, C.H.; Tsai, P.H.; Lai, K.H.; Chen, J.Y. Cloud removal from multitemporal satellite images using information cloning. IEEE Trans. Geosci. Remote Sens. 2013, 51, 232–241.

- Shen, H.; Li, X.; Cheng, Q.; Zeng, C.; Yang, G.; Li, H.; Zhang, L. Missing Information Reconstruction of Remote Sensing Data: A Technical Review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 61–85.

- Helmer, E.; Ruefenacht, B. Cloud-free satellite image mosaics with regression trees and histogram matching. Photogramm. Eng. Remote Sens. 2005, 71, 1079–1089.

- Benabdelkader, S.; Melgani, F. Contextual spatiospectral postreconstruction of cloud-contaminated images. IEEE Geosci. Remote Sens. Lett. 2008, 5, 204–208.

- Melgani, F. Contextual reconstruction of cloud-contaminated multitemporal multispectral images. IEEE Trans. Geosci. Remote Sens. 2006, 44, 442–455.

- Shen, H.; Wu, J.; Cheng, Q.; Aihemaiti, M.; Zhang, C.; Li, Z. A spatiotemporal fusion based cloud removal method for remote sensing images with land cover changes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 862–874.

- Chang, N.B.; Bai, K.; Chen, C.F. Smart information reconstruction via time-space-spectrum continuum for cloud removal in satellite images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1898–1912.

- Wang, B.; Ono, A.; Muramatsu, K.; Fujiwara, N. Automated detection and removal of clouds and their shadows from Landsat TM images. IEICE Trans. Inf. Syst. 1999, 82, 453–460.

- Gabarda, S.; Cristóbal, G. Cloud covering denoising through image fusion. Image Vis. Comput. 2007, 25, 523–530.

- Roerink, G.J.; Menenti, M.; Verhoef, W. Reconstructing cloudfree NDVI composites using Fourier analysis of time series. Int. J. Remote Sens. 2000, 21, 1911–1917.

- Mariethoz, G.; McCabe, M.F.; Renard, P. Spatiotemporal reconstruction of gaps in multivariate fields using the direct sampling approach. Water Resour. Res. 2012, 48, W10507.

- Jha, S.K.; Mariethoz, G.; Evans, J.P.; McCabe, M.F. Demonstration of a geostatistical approach to physically consistent downscaling of climate modeling simulations. Water Resour. Res. 2013, 49, 245–259.

- Zhang, C.; Li, W.; Travis, D.J. Restoration of clouded pixels in multispectral remotely sensed imagery with cokriging. Int. J. Remote Sens. 2009, 30, 2173–2195.

- Meng, Q.M.; Borders, B.E.; Cieszewski, C.J.; Madden, M. Closest spectral fit for removing clouds and cloud shadows. Photogramm. Eng. Remote Sens. 2009, 75, 569–576.

- Cheng, Q.; Shen, H.; Zhang, L.; Yuan, Q.; Zeng, C. Cloud removal for remotely sensed images by similar pixel replacement guided with a spatio-temporal MRF model. ISPRS J. Photogramm. Remote Sens. 2014, 92, 54–68.

- Cerra, D.; Müller, R.; Reinartz, P. Cloud removal in image time series through unmixing. In Proceedings of the 8th International Workshop on the Analysis of Multitemporal Remote Sensing Images, Annecy, France, 22–24 July 2015.

- Xu, M.; Pickering, M.; Plaza, A.J.; Jia, X. Thin cloud removal based on signal transmission principles and spectral mixture analysis. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1659–1669.

- Feng, W.; Chen, Q.; He, W.; Gu, G.; Zhuang, J.; Xu, S. A defogging method based on hyperspectral unmixing. Acta Opt. Sin. 2015, 35, 115–122.

- Yin, G.; Mariethoz, G.; McCabe, M.F. Gap-filling of Landsat 7 imagery using the direct sampling method. Remote Sens. 2017, 9, 12.

- Shekhar, S.; Jiang, Z.; Ali, R.Y.; Eftelioglu, E.; Tang, X.; Gunturi, V.M.V.; Zhou, X. Spatiotemporal data mining: A computational perspective. ISPRS Int. J. Geo-Inf. 2015, 4, 2306–2338.

- Gneiting, T.; Genton, M.G.; Guttorp, P. Geostatistical space-time models, stationarity, separability and full symmetry. In Statistical Methods for Spatio-Temporal Systems, 1st ed.; Finkenstadt, B., Held, L., Isham, V., Eds.; Chapman and Hall/CRC: New York, NY, USA, 2006; Volume 1, pp. 151–175.

- De Iaco, S.; Palma, M.; Posa, D. A general procedure for selecting a class of fully symmetric space-time covariance functions. Environmetrics 2016, 27, 212–224.

- Omidi, M.; Mohammadzadeh, M. A new method to build spatio-temporal covariance functions: Analysis of ozone data. Stat. Pap. 2015, 57, 689–703.

- De Iaco, S.; Myers, D.E.; Posa, D. Nonseparable space-time covariance models: Some parametric families. Math. Geol. 2002, 34, 23–42.

- Historical Weather for 2015 in Al-Kharj Prince Sultan Air Base, Saudi Arabia. WeatherSpark, 2015. Available online: http://weatherspark.com/history/32768/2015/Al-Kharj-Riyadh-Saudi-Arabia (accessed on 8 April 2019).

- Houborg, R.; McCabe, M.F. Adapting a regularized canopy reflectance model (REGFLEC) for the retrieval challenges of dryland agricultural systems. Remote Sens. Environ. 2016, 186, 105–112.

- El Kenawy, A.M.; McCabe, M.F. A multi-decadal assessment of the performance of gauge and model based rainfall products over Saudi Arabia: Climatology, anomalies and trends. Int. J. Climatol. 2016, 36, 656–674.

- Folkman, M.A.; Pearlman, J.; Liao, L.B.; Jarecke, P.J. EO-1/Hyperion hyperspectral imager design, development, characterization, and calibration. Proc. SPIE 2001, 4151, 40–51.

- Perkins, T.; Adler-Golden, S.M.; Matthew, M.W. Speed and accuracy improvements in FLAASH atmospheric correction of hyperspectral imagery. Opt. Eng. 2012, 51, 111707.

- Felde, G.W.; Anderson, G.P.; Gardner, J.A.; Adler-Golden, S.M.; Matthew, M.W.; Berk, A. Water vapor retrieval using the FLAASH atmospheric correction algorithm. In Proceedings of the SPIE Defense and Security, Orlando, FL, USA, 12 August 2004.

- Rochford, P.; Acharya, P.; Adler-Golden, S.M. Validation and refinement of hyperspectral/multispectral atmospheric compensation using shadowband radiometers. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2898–2907.

- Griffin, M.K.; Burke, H.K. Compensation of hyperspectral data for atmospheric effects. Linc. Lab. J. 2003, 14, 29–54.

- Houborg, R.; McCabe, M.F. Impacts of dust aerosol and adjacency effects on the accuracy of Landsat 8 and RapidEye surface reflectances. Remote Sens. Environ. 2017, 194, 127–145.

- Adler-Golden, S.M.; Matthew, M.W.; Berk, A.; Fox, M.J.; Ratkowski, A.J. Improvements in aerosol retrieval for atmospheric correction. In Proceedings of the IGARSS 2008—2008 IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 7–11 July 2008.

- Stamnes, K.; Tsay, S.C.; Wiscombe, W.; Jayaweera, K. Numerically stable algorithm for Discrete-Ordinate-Method Radiative Transfer in multiple scattering and emitting layered media. Appl. Opt. 1988, 27, 2502–2509.

- Staenz, K.; Neville, R.A.; Clavette, S.; Landry, R.; White, H.P. Retrieval of surface reflectance from Hyperion radiance data. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 24–28 June 2002.

- Pearlman, J.S.; Barry, P.S.; Segal, C.C.; Shepanski, J.; Beiso, D.; Carman, S.L. Hyperion, a space-based imaging spectrometer. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1160–1173.

- Matthew, M.W.; Adler-Golden, S.M.; Berk, A.; Felde, G.; Anderson, G.P.; Gorodetzkey, D.; Paswaters, S.; Shippert, M. Atmospheric correction of spectral imagery: Evaluation of the FLAASH algorithm with AVIRIS data. In Proceedings of the Applied Imagery Pattern Recognition Workshop, Washington, DC, USA, 17–23 October 2003.

- Green, A.A.; Berman, M.; Switzer, P.; Craig, M.D. A Transform for ordering multispectral data in terms of image quality with implications for noise removal. IEEE Trans. Geosci. Remote Sens. 1988, 26, 65–74.

- Matthew, M.W.; Adler-Golden, S.M.; Berk, A.; Richtsmeier, S.C.; Levine, R.Y.; Bernstein, L.S.; Acharya, P.K.; Anderson, G.P.; Felde, G.W.; Hoke, M.P.; et al. Status of atmospheric correction using a MODTRAN4-based algorithm. In Proceedings of the SPIE AeroSense 2000, Orlando, FL, USA, 23 August 2000.

- Cochran, W.G. Sampling Techniques, 3rd ed.; John Wiley & Sons: New York, NY, USA, 1977.

- Wang, J.F.; Haining, R.P.; Cao, Z.D. Sample surveying to estimate the mean of a heterogeneous surface: Reducing the error variance through zoning. Int. J. Geogr. Inf. Sci. 2010, 24, 523–543.

- Wang, J.F.; Stein, A.; Gao, B.; Ge, Y. A review of spatial sampling. Spat. Stat. 2012, 2, 1–14.

- Montero, J.M.; Fernández, G.; Mateu, J. Spatial and Spatio-Temporal Geostatistical Modeling and Kriging, 1st ed.; John Wiley & Sons: Chichester, UK, 2015; pp. 178–265.

- Cressie, N.; Huang, H. Classes of nonseparable, spatio-temporal stationary covariance functions. J. Am. Stat. Assoc. 1999, 94, 1330–1340.

- Gneiting, T. Nonseparable, stationary covariance functions for space-time data. J. Am. Stat. Assoc. 2002, 97, 590–600.

- Tukey, J. On the comparative anatomy of transformations. Ann. Math. Stat. 1957, 28, 602–632.

- Package CompRandFld. R Package Version 1.0.3-4. Available online: https://cran.r-project.org/package=CompRandFld (accessed on 8 April 2019).

- R Core Team. R: A Language and Environment for Statistical Computing. Version 3.2.2. Available online: http://www.R-project.org (accessed on 8 April 2019).

- Zimmerman, D.L.; Zimmerman, M.B. A comparison of spatial semivariogram estimators and corresponding ordinary kriging predictors. Technometrics 1991, 33, 77–91.

- Curriero, F.; Lele, S. A Composite Likelihood Approach to Semivariogram Estimation. J. Agric. Biol. Environ. Stat. 1999, 4, 9–28.

- Padoan, S.; Bevilacqua, M. Analysis of random fields using CompRandFld. J. Stat. Softw. 2015, 63, 1–27.

- Cressie, N.; Wikle, C. Statistics for Spatio-Temporal Data, 1st ed.; John Wiley & Sons: Chichester, UK, 2011.

- Zhang, C.; Li, W.; Travis, D. Gaps-fill of SLC-off Landsat ETM+ satellite image using a geostatistical approach. Int. J. Remote Sens. 2007, 28, 5103–5122.

- Pringle, M.J.; Schmidt, M.; Muir, J.S. Geostatistical interpolation of SLC-off Landsat ETM+ images. ISPRS J. Photogramm. Remote Sens. 2009, 64, 654–664.

- Webster, R.; Oliver, M.A. Geostatistics for Environmental Scientists, 1st ed.; John Wiley & Sons: Chichester, UK, 2007.

- Porcu, E.; Bevilacqua, M.; Genton, M. Spatio-temporal covariance and cross-covariance functions of the great circle distance on a sphere. J. Am. Stat. Assoc. 2016, 111, 888–898.

- Genton, M.; Kleiber, W. Cross-covariance functions for multivariate geostatistics. Stat. Sci. 2015, 30, 147–163.

- Jun, M. Non-stationary cross-covariance models for multivariate processes on a globe. Scand. J. Stat. 2011, 38, 726–747.

- Thome, K.J.; Biggar, S.F.; Wisniewski, W. Cross comparison of EO-1 sensors and other Earth resources sensors to Landsat-7 ETM+ using Railroad Valley Playa. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1180–1188.

- Datt, B.; Jupp, D.L.B. Hyperion Data Processing Workshop, Hands-On Processing Instructions; CSIRO Office of Space Science & Applications Earth Observation Centre: Canberra, Australia, 2004.

- Cressie, N.; Johannesson, G. Fixed rank kriging for very large spatial data sets. J. R. Stat. Soc. Ser. B Stat. Methodol. 2008, 70, 209–226.

| Time (t) | Date | DOY | Acquisition Time (UTC+3) | Cloud Cover (%) | AOD (τ550) | Visibility (km) | Sensor Azimuth (°) | Sensor Zenith (°) | Sensor Look Angle (°) |
|---|---|---|---|---|---|---|---|---|---|
| t1 | 18-Sep-15 | 261 | 8:54:05 | 2 | 0.405 | 18 | 112.475 | 153.778 | 26.222 |
| t2 | 26-Sep-15 | 269 | 8:48:59 | 2 | 0.194 | 36 | 115.518 | 162.033 | 17.967 |
| t3 | 4-Oct-15 | 277 | 8:43:00 | 2 | 0.366 | 20 | 118.321 | 171.426 | 8.5741 |
| t4 | 12-Oct-15 | 285 | 8:37:22 | 4 | 0.460 | 16 | 120.731 | 181.554 | -1.554 |
| t5 | 20-Oct-15 | 293 | 8:31:39 | 4 | 0.564 | 13 | 122.827 | 168.255 | 11.745 |
| t6 | 28-Oct-15 | 301 | 8:25:51 | 9 | 0.530 | 14 | 124.538 | 158.672 | 21.328 |
| t7 | 3-Nov-15 | 307 | 8:45:51 | 4 | 0.490 | 15 | 130.147 | 159.946 | 20.054 |
| t8 | 11-Nov-15 | 315 | 8:39:59 | 20 | 0.587 | 13 | 130.944 | 169.782 | 10.218 |
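
In a space-time covariance model, the temporal lag between two acquisitions enters alongside their spatial distance. As a small illustration, the sketch below derives pairwise temporal lags in days from the DOY column of the acquisition table. It is an illustrative helper, not the authors' code, and the excerpt does not state whether the fitted model measures time in days or in acquisition index (t1 to t8).

```python
import numpy as np

# Day-of-year (DOY) of the eight Hyperion acquisitions, taken from the table.
doy = np.array([261, 269, 277, 285, 293, 301, 307, 315])

# Pairwise absolute temporal lags |t_i - t_j| in days (8 x 8, symmetric, zero diagonal).
temporal_lags = np.abs(doy[:, None] - doy[None, :])

# Gaps between consecutive acquisitions: mostly 8 days, with one 6-day gap (t6 to t7).
consecutive_gaps = np.diff(doy)

print(consecutive_gaps)     # [8 8 8 8 8 6 8]
print(temporal_lags.max())  # 54 (t1 vs t8)
```

The irregular 6-day gap is one reason to work with explicit lags rather than assuming equally spaced time steps.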

| Block | Cloud Cover (%) | Block | Cloud Cover (%) |
|---|---|---|---|
| A1 | 22 | A9 | 25 |
| A2 | 30 | A10 | 28 |
| A3 | 23 | A11 | 90 |
| A4 | 17 | A12 | 18 |
| A5 | 20 | A13 | 20 |
| A6 | 95 | A14 | 32 |
| A7 | 83 | A15 | 5 |
| A8 | 10 | | |
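
Per-block cloud percentages like those above can be computed from a binary cloud mask. The excerpt does not describe how the mask or the block grid was produced, so the following is a minimal sketch that assumes non-overlapping square blocks over a boolean mask. The function `block_cloud_percent` and the toy mask are hypothetical.

```python
import numpy as np


def block_cloud_percent(cloud_mask: np.ndarray, block_size: int) -> np.ndarray:
    """Percentage of cloudy pixels in each non-overlapping square block.

    cloud_mask: 2D boolean array, True where a pixel is flagged as cloud (assumed input).
    block_size: side length of each square block, in pixels (hypothetical parameter).
    Rows/columns at the edges that do not fill a whole block are ignored.
    """
    rows = cloud_mask.shape[0] // block_size
    cols = cloud_mask.shape[1] // block_size
    trimmed = cloud_mask[: rows * block_size, : cols * block_size]
    # Reshape to (rows, block, cols, block) so each block's pixels can be averaged.
    blocks = trimmed.reshape(rows, block_size, cols, block_size)
    return 100.0 * blocks.mean(axis=(1, 3))


# Toy 4 x 4 mask split into four 2 x 2 blocks (hypothetical data).
mask = np.array([
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
], dtype=bool)
percent = block_cloud_percent(mask, 2)  # top-left 75 %, bottom-right 100 %, others 0 %
```

Averaging a boolean array gives the cloudy fraction directly, so no explicit loop over blocks is needed.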

| Block | Size | Cloud Cover (%) |
|---|---|---|
| Area 1 | 30 × 30 | 95 |
| Area 2 | 60 × 60 | 75 |
| Area 3 | 90 × 90 | 55 |

| Separability | Power Space | Power Time | Scale Space | Scale Time | Spatial Sill | Temporal Sill | AIC |
|---|---|---|---|---|---|---|---|
| 0 | 0.25 | 2 | 400 | 6 | 0.96 | 0.16 | 6330.44 |
| 0.5 | 1.50 | 1 | 290 | 4 | 0.93 | 0.11 | 6311.78 |
| 1 | 1.50 | 0.25 | 200 | 2 | 0.91 | 0.09 | 6305.72 |
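
The parameter names in this table (separability, power and scale in space and time) correspond to the Gneiting (2002) class of nonseparable space-time covariance functions. The sketch below evaluates one parametrization of that class, assumed to follow the form used for the `gneiting` model in CompRandFld. The exact form, the unit-sill correlation (the table's sill columns are not modeled), and the units of distance and lag are all assumptions here. The sketch also selects the row with the lowest AIC (separability 1, AIC 6305.72).

```python
import numpy as np


def gneiting_correlation(h, u, sep, power_s, power_t, scale_s, scale_t):
    """Gneiting-type nonseparable space-time correlation (assumed parametrization).

    rho(h, u) = exp(-(h/scale_s)**power_s / psi**(0.5*sep*power_s)) / psi,
    with psi = 1 + (u/scale_t)**power_t.
    sep in [0, 1]: 0 gives a separable model; larger values add space-time interaction.
    power_s, power_t in (0, 2]. The 1/psi prefactor matches Gneiting's validity
    condition for two spatial dimensions.
    """
    hs = np.abs(np.asarray(h, dtype=float)) / scale_s
    ut = np.abs(np.asarray(u, dtype=float)) / scale_t
    psi = 1.0 + ut ** power_t
    return np.exp(-(hs ** power_s) / psi ** (0.5 * sep * power_s)) / psi


# Rows of the fitted-parameter table: (sep, power_s, power_t, scale_s, scale_t, AIC).
fits = [
    (0.0, 0.25, 2.0, 400.0, 6.0, 6330.44),
    (0.5, 1.50, 1.0, 290.0, 4.0, 6311.78),
    (1.0, 1.50, 0.25, 200.0, 2.0, 6305.72),
]
best = min(fits, key=lambda row: row[-1])  # lower AIC is preferred
sep, power_s, power_t, scale_s, scale_t, aic = best
print(sep, aic)  # 1.0 6305.72

# Correlation at zero lag and at a hypothetical (h=100, u=8) separation.
rho = gneiting_correlation([0.0, 100.0], [0.0, 8.0], sep, power_s, power_t, scale_s, scale_t)
```

With `sep = 0` the spatial term no longer depends on the lag, so the model factorizes into a purely spatial and a purely temporal correlation. With `sep > 0` the spatial decay is stretched as the time lag grows, which is what makes the model nonseparable.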

| Author | Covariance Function | Domains | Fitting Method | Kriging |
|---|---|---|---|---|
| Zhang et al. [61] | Exponential | Spatial (2D) + Temporal (1D) | Full likelihood | Ordinary, Co-Kriging |
| Pringle et al. [62] | Double spherical | Spatial (2D) + Temporal (1D) | Simulated annealing | Co-Kriging |
| Here proposed | Gneiting | Spatio-temporal (3D) | Composite marginal likelihood | Simple |
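
The proposed approach pairs a Gneiting covariance with simple kriging. A minimal sketch of simple kriging in space-time follows. It assumes a known constant mean, Euclidean distance between pixel coordinates (x, y), absolute lag between acquisition times t, and a covariance function supplied by the caller. The separable exponential `toy_cov` and all data are hypothetical stand-ins; any valid space-time covariance, such as a Gneiting-type model, could be passed instead. This is not the authors' R implementation.

```python
import numpy as np


def simple_kriging(coords_obs, z_obs, coords_new, mean, cov):
    """Simple kriging (known mean) at space-time target points.

    coords_obs: (n, 3) array of (x, y, t) for observed, cloud-free pixels.
    z_obs: (n,) observed values (e.g. reflectance in one band).
    coords_new: (m, 3) array of (x, y, t) for pixels to predict.
    cov: callable cov(h, u) giving covariance for spatial distance h and time lag u.
    Returns (predictions, kriging variances), each of shape (m,).
    """
    def cov_matrix(a, b):
        h = np.linalg.norm(a[:, None, :2] - b[None, :, :2], axis=-1)  # spatial distance
        u = np.abs(a[:, None, 2] - b[None, :, 2])                      # temporal lag
        return cov(h, u)

    C = cov_matrix(coords_obs, coords_obs)   # n x n covariance among observations
    c0 = cov_matrix(coords_obs, coords_new)  # n x m covariance between obs and targets
    weights = np.linalg.solve(C, c0)         # kriging weights, solved without inverting C
    pred = mean + weights.T @ (z_obs - mean)
    var = cov(0.0, 0.0) - np.sum(weights * c0, axis=0)
    return pred, var


def toy_cov(h, u):
    # Hypothetical separable exponential stand-in (unit sill, ranges 50 and 8).
    return np.exp(-np.asarray(h) / 50.0) * np.exp(-np.asarray(u) / 8.0)


rng = np.random.default_rng(0)
n = 20
obs = np.column_stack([rng.uniform(0, 100, n), rng.uniform(0, 100, n), rng.choice([0.0, 8.0, 16.0], n)])
z = 0.3 + 0.05 * rng.standard_normal(n)
pred, var = simple_kriging(obs, z, obs[:3], mean=0.3, cov=toy_cov)
```

Without a nugget, simple kriging is an exact interpolator: predicting at observed points returns the observed values with zero variance, while far from all data the prediction reverts to the mean. Solving one system over every observed pixel scales cubically with their number, so large scenes are usually processed in local neighbourhoods or blocks.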

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Angel, Y.; Houborg, R.; McCabe, M.F. Reconstructing Cloud Contaminated Pixels Using Spatiotemporal Covariance Functions and Multitemporal Hyperspectral Imagery. Remote Sens. 2019, 11, 1145. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11101145
