Domain Adversarial Neural Networks for Large-Scale Land Cover Classification

Abstract

1. Introduction

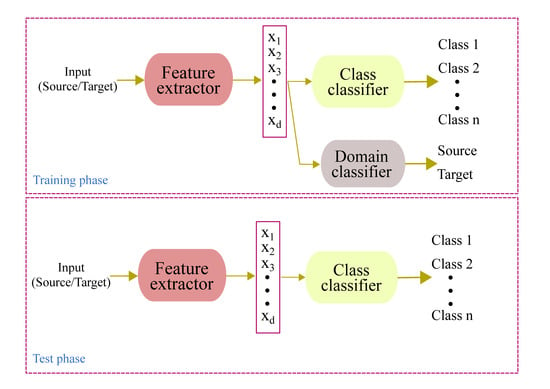

2. Methodology

3. Experimental Results

3.1. Dataset Description

3.2. Experimental Setup

3.3. Experimental Results

3.3.1. Single-Target Domain Adaptation

3.3.2. Multi-Target Domain Adaptation

3.4. Comparison

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Domain | Vegetation | Non-Vegetation |
|---|---|---|
| CE Spring (CESP) | 7668 | 7405 |
| CE Summer (CESU) | 7531 | 7161 |
| CE Winter (CEWI) | 6995 | 6857 |
| NE Spring (NESP) | 6315 | 6081 |
| NE Summer (NESU) | 6529 | 6869 |
| NE Winter (NEWI) | 7210 | 7061 |
| SE Spring (SESP) | 7356 | 7380 |
| SE Summer (SESU) | 7102 | 7343 |
| SE Winter (SEWI) | 7346 | 7343 |
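The sketch below treats the table entries as per-class sample counts, although the unit is not stated here. A few lines of Python then show that every domain is close to balanced. The vegetation share stays within about 1.3 percentage points of 50%, and the nine domains hold 127,552 samples in total. `COUNTS` and `domain_summary` are illustrative names, not part of the original work.

```python
# Vegetation / non-vegetation counts per domain, copied from the table above.
COUNTS = {
    "CESP": (7668, 7405),
    "CESU": (7531, 7161),
    "CEWI": (6995, 6857),
    "NESP": (6315, 6081),
    "NESU": (6529, 6869),
    "NEWI": (7210, 7061),
    "SESP": (7356, 7380),
    "SESU": (7102, 7343),
    "SEWI": (7346, 7343),
}


def domain_summary(counts):
    """Map each domain to (total samples, fraction labelled vegetation)."""
    return {dom: (veg + non, veg / (veg + non)) for dom, (veg, non) in counts.items()}


summary = domain_summary(COUNTS)
grand_total = sum(total for total, _ in summary.values())
# Domain whose vegetation share deviates most from a 50/50 split.
most_skewed = max(summary, key=lambda dom: abs(summary[dom][1] - 0.5))
```

Here `most_skewed` evaluates to NESU, whose vegetation share is about 48.7%.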

| Source Domain | Learning Rate | Number of Neurons | Mini-Batch Size |
|---|---|---|---|
| CESP | 10⁻² | 64 | 256 |
| CESU | 10⁻² | 32 | 32 |
| CEWI | 10⁻¹ | 32 | 32 |
| NESP | 10⁻¹ | 4 | 128 |
| NESU | 10⁻² | 64 | 128 |
| NEWI | 10⁻² | 16 | 512 |
| SESP | 10⁻² | 32 | 32 |
| SESU | 10⁻² | 32 | 256 |
| SEWI | 10⁻² | 64 | 64 |
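The table gives one learning rate, hidden-layer size and mini-batch size for each source domain. The following minimal pure-Python sketch shows how such settings plug into a shallow domain-adversarial network trained with the gradient-reversal update of Ganin et al. It rests on several assumptions that this section does not specify: a single sigmoid hidden layer, binary vegetation/non-vegetation labels, plain per-sample SGD over mini-batches drawn from each domain, and a hand-picked adaptation weight `lam`. `ShallowDANN`, `train` and the toy data are hypothetical, so read this as an illustration rather than the authors' implementation.

```python
import math
import random


def sigmoid(z):
    # Clamp the logit so math.exp cannot overflow.
    z = max(min(z, 30.0), -30.0)
    return 1.0 / (1.0 + math.exp(-z))


class ShallowDANN:
    """Hypothetical one-hidden-layer DANN: shared sigmoid features, label head, domain head."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.W = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b = [0.0] * n_hidden
        self.v = [rng.gauss(0.0, 0.1) for _ in range(n_hidden)]  # label-head weights
        self.c = 0.0                                             # label-head bias
        self.u = [rng.gauss(0.0, 0.1) for _ in range(n_hidden)]  # domain-head weights
        self.d = 0.0                                             # domain-head bias

    def features(self, x):
        return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj)
                for row, bj in zip(self.W, self.b)]

    def predict_proba(self, x):
        """Probability of the positive class (e.g. vegetation) for one input vector."""
        h = self.features(x)
        return sigmoid(sum(vj * hj for vj, hj in zip(self.v, h)) + self.c)

    def step(self, x, y_label, y_domain, lr, lam):
        """One SGD step on one sample; pass y_label=None for unlabelled target samples."""
        h = self.features(x)
        # Cross-entropy gradient w.r.t. the domain logit (0 = source, 1 = target).
        e_d = sigmoid(sum(uj * hj for uj, hj in zip(self.u, h)) + self.d) - y_domain
        # Gradient reversal: the features receive -lam times the domain gradient.
        g_h = [-lam * e_d * uj for uj in self.u]
        if y_label is not None:
            e_y = sigmoid(sum(vj * hj for vj, hj in zip(self.v, h)) + self.c) - y_label
            for j, hj in enumerate(h):
                g_h[j] += e_y * self.v[j]  # uses the label head before its update
                self.v[j] -= lr * e_y * hj
            self.c -= lr * e_y
        # The domain head itself still descends its lam-weighted loss.
        for j, hj in enumerate(h):
            self.u[j] -= lr * lam * e_d * hj
        self.d -= lr * lam * e_d
        # Back-propagate through the sigmoid hidden layer into W and b.
        for j, hj in enumerate(h):
            g_z = g_h[j] * hj * (1.0 - hj)
            self.W[j] = [w - lr * g_z * xi for w, xi in zip(self.W[j], x)]
            self.b[j] -= lr * g_z


def train(model, source, target, lr, lam, batch_size, n_batches, seed=0):
    """source: list of (x, label) pairs; target: list of unlabelled x vectors."""
    rng = random.Random(seed)
    for _ in range(n_batches):
        for x, y in rng.sample(source, min(batch_size, len(source))):
            model.step(x, y, 0, lr, lam)
        for x in rng.sample(target, min(batch_size, len(target))):
            model.step(x, None, 1, lr, lam)
    return model


# Toy usage with the CESP row (lr 1e-2, 64 neurons, mini-batch 256); lam=0.1 is a guess.
rng = random.Random(1)
src = [(x, int(x[0] > 0)) for x in ([rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(200))]
tgt = [[rng.uniform(-1, 1) + 0.5, rng.uniform(-1, 1)] for _ in range(200)]
model = train(ShallowDANN(n_in=2, n_hidden=64), src, tgt, lr=1e-2, lam=0.1,
              batch_size=256, n_batches=5)
p_target = model.predict_proba(tgt[0])
```

The sign flip in `g_h` is what makes the training adversarial. With `lam=0` the model reduces to an ordinary classifier fitted on the source data. Larger values push the hidden features toward being indistinguishable between source and target.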

| Source Domain \ Target Domain | NESP (99.9, 0.003) | CESP (1.0, 0.0) | SESP (99.5, 0.005) |
|---|---|---|---|
| NESP | | 99.3, 0.013 | 81.5, 0.033 |
| | | 98.1, 0.022 | 71.7, 0.051 |
| CESP | 96.7, 0.011 | | 83.8, 0.062 |
| | 88.7, 0.078 | | 69.9, 0.065 |
| SESP | 61.5, 0.011 | 98.1, 0.011 | |
| | 64.3, 0.042 | 95.8, 0.068 | |

| Source Domain \ Target Domain | NESU (98.8, 0.004) | CESU (1.0, 0.0) | SESU (99.0, 0.0) |
|---|---|---|---|
| NESU | | 98.0, 0.017 | 61.6, 0.026 |
| | | 83.9, 0.010 | 53.4, 0.020 |
| CESU | 95.6, 0.005 | | 72.0, 0.023 |
| | 95.8, 0.004 | | 66.9, 0.020 |
| SESU | 68.0, 0.173 | 83.8, 0.059 | |
| | 87.1, 0.087 | 95.4, 0.016 | |

| Source Domain \ Target Domain | NEWI (1.0, 0.0) | CEWI (99.6, 0.009) | SEWI (1.0, 0.0) |
|---|---|---|---|
| NEWI | | 71.7, 0.026 | 83.3, 0.093 |
| | | 81.4, 0.062 | 95.2, 0.014 |
| CEWI | 73.2, 0.045 | | 87.6, 0.024 |
| | 63.2, 0.042 | | 88.4, 0.038 |
| SEWI | 89.1, 0.085 | 74.2, 0.009 | |
| | 91.1, 0.045 | 71.5, 0.022 | |

| Source Domain \ Target Domain | NESP (99.9, 0.003) | NESU (98.8, 0.004) | NEWI (1.0, 0.0) |
|---|---|---|---|
| NESP | | 94.4, 0.005 | 83.9, 0.120 |
| | | 95.0, 0.004 | 86.0, 0.132 |
| NESU | 93.8, 0.027 | | 73.7, 0.175 |
| | 61.3, 0.028 | | 82.2, 0.059 |
| NEWI | 73.1, 0.096 | 34.1, 0.228 | |
| | 94.5, 0.036 | 74.8, 0.130 | |

| Source Domain \ Target Domain | CESP (1.0, 0.0) | CESU (1.0, 0.0) | CEWI (99.6, 0.009) |
|---|---|---|---|
| CESP | | 99.0, 0.0 | 77.3, 0.125 |
| | | 98.4, 0.008 | 51.0, 0.0 |
| CESU | 95.3, 0.009 | | 65.3, 0.078 |
| | 94.4, 0.022 | | 93.2, 0.066 |
| CEWI | 95.9, 0.064 | 98.6, 0.007 | |
| | 78.5, 0.102 | 91.8, 0.097 | |

| Source Domain \ Target Domain | SESP (99.5, 0.005) | SESU (99.0, 0.0) | SEWI (1.0, 0.0) |
|---|---|---|---|
| SESP | | 98.0, 0.0 | 87.3, 0.080 |
| | | 97.8, 0.007 | 70.3, 0.061 |
| SESU | 98.6, 0.005 | | 69.9, 0.077 |
| | 96.1, 0.005 | | 76.8, 0.043 |
| SEWI | 89.8, 0.087 | 85.5, 0.088 | |
| | 61.6, 0.037 | 69.2, 0.037 | |

| Source Domain \ Target Domain | CESP (1.0, 0.0) | CESU (1.0, 0.0) | CEWI (99.6, 0.009) | SESP (99.5, 0.005) | SESU (99.0, 0.0) | SEWI (1.0, 0.0) |
|---|---|---|---|---|---|---|
| NESP | | 99.0, 0.0 | 88.0, 0.074 | | 75.5, 0.011 | 95.7, 0.015 |
| | | 98.1, 0.022 | 84.2, 0.106 | | 72.7, 0.026 | 97.3, 0.013 |
| NESU | 93.1, 0.029 | | 86.9, 0.088 | 63.3, 0.041 | | 95.5, 0.034 |
| | 64.3, 0.067 | | 62.1, 0.034 | 50.8, 0.026 | | 82.9, 0.030 |
| NEWI | 82.1, 0.161 | 84.6, 0.087 | | 66.8, 0.156 | 65.9, 0.126 | |
| | 99.2, 0.007 | 99.0, 0.0 | | 76.7, 0.073 | 72.7, 0.032 | |

| Source Domain \ Target Domain | NESP (99.9, 0.003) | NESU (98.8, 0.004) | NEWI (1.0, 0.0) | SESP (99.5, 0.005) | SESU (99.0, 0.0) | SEWI (1.0, 0.0) |
|---|---|---|---|---|---|---|
| CESP | | 93.9, 0.003 | 90.3, 0.128 | | 81.8, 0.056 | 94.4, 0.061 |
| | | 59.7, 0.077 | 96.4, 0.005 | | 89.8, 0.028 | 72.2, 0.044 |
| CESU | 80.8, 0.046 | | 78.3, 0.160 | 71.7, 0.047 | | 84.7, 0.018 |
| | 77.2, 0.042 | | 82.9, 0.133 | 75.7, 0.024 | | 86.0, 0.038 |
| CEWI | 94.9, 0.051 | 95.1, 0.003 | | 68.8, 0.055 | 70.4, 0.030 | |
| | 57.8, 0.054 | 93.9, 0.025 | | 62.3, 0.062 | 61.7, 0.032 | |

| Source Domain \ Target Domain | CESP (1.0, 0.0) | CESU (1.0, 0.0) | CEWI (99.6, 0.009) | NESP (99.9, 0.003) | NESU (98.8, 0.004) | NEWI (1.0, 0.0) |
|---|---|---|---|---|---|---|
| SESP | | 81.8, 0.008 | 59.6, 0.104 | | 57.7, 0.123 | 82.3, 0.117 |
| | | 91.0, 0.073 | 51.1, 0.003 | | 50.8, 0.007 | 86.1, 0.062 |
| SESU | 94.6, 0.067 | | 67.7, 0.127 | 68.3, 0.076 | | 84.5, 0.086 |
| | 99.0, 0.0 | | 62.5, 0.091 | 96.0, 0.0 | | 96.7, 0.019 |
| SEWI | 98.4, 0.015 | 97.9, 0.025 | | 97.1, 0.008 | 95.2, 0.004 | |
| | 92.7, 0.013 | 98.7, 0.009 | | 80.9, 0.024 | 95.0, 0.0 | |
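Each domain code combines a region prefix (NE, CE, SE) with a season suffix (SP, SU, WI). The three-column tables pair domains that share either a season or a region. The six-column tables give four results per source row. That layout is consistent with pairing each source only with targets that differ in both region and season, but this is our reading of the table layout rather than something stated in the text. The sketch below enumerates the three kinds of ordered pairs under that reading. `pair_kind` and `pairs_by_kind` are hypothetical helper names.

```python
from itertools import product

# Region prefixes and season suffixes used in the domain codes (e.g. CESP = CE + SP).
REGIONS = ("NE", "CE", "SE")
SEASONS = ("SP", "SU", "WI")
DOMAINS = [region + season for region, season in product(REGIONS, SEASONS)]


def pair_kind(source, target):
    """Label an ordered (source, target) pair by what differs between the two domains."""
    same_region = source[:2] == target[:2]
    same_season = source[2:] == target[2:]
    if same_region and same_season:
        return "same"
    if same_season:
        return "spatial"   # same season, different region
    if same_region:
        return "temporal"  # same region, different season
    return "cross"         # region and season both differ


def pairs_by_kind(domains=DOMAINS):
    """Group every ordered domain pair by pair_kind."""
    groups = {}
    for source, target in product(domains, repeat=2):
        groups.setdefault(pair_kind(source, target), []).append((source, target))
    return groups


groups = pairs_by_kind()
cesp_cross_targets = [t for s, t in groups["cross"] if s == "CESP"]
```

The enumeration yields 18 spatial pairs, 18 temporal pairs and 36 cross pairs. For CESP, the cross targets are NESU, NEWI, SESU and SEWI.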

| Source Domain \ Target Domain | NESU (98.8, 0.004) | SEWI (1.0, 0.0) | SESU (99.0, 0.0) | CEWI (99.6, 0.009) |
|---|---|---|---|---|
| CESP | 89.3, 0.112 | 92.4, 0.075 | | |
| | 59.7, 0.077 | 72.2, 0.44 | | |
| NEWI | | | 70.3, 0.163 | 54.0, 0.034 |
| | | | 72.7, 0.032 | 81.4, 0.062 |

| Source Domain \ Target Domain | NESU (98.8, 0.004) | SEWI (1.0, 0.0) | CEWI (99.6, 0.009) | SESU (99.0, 0.0) | SESP (99.5, 0.005) |
|---|---|---|---|---|---|
| CESP | 90.3, 0.111 | 93.7, 0.044 | 83.7, 0.105 | | |
| | 59.7, 0.077 | 72.2, 0.44 | 51.0, 0.0 | | |
| NEWI | | | 53.7, 0.036 | 71.4, 0.133 | 70.4, 0.142 |
| | | | 81.4, 0.062 | 72.7, 0.032 | 76.7, 0.073 |

| Source Domain \ Target Domain | NESU (98.8, 0.004) | SEWI (1.0, 0.0) | CEWI (99.6, 0.009) | SESU (99.0, 0.0) | SESP (99.5, 0.005) |
|---|---|---|---|---|---|
| CESP | 88.6, 0.099 | 97.0, 0.025 | 74.9, 0.101 | | 84.7, 0.028 |
| | 59.7, 0.077 | 72.2, 0.44 | 51.0, 0.0 | | 69.9, 0.065 |
| NEWI | | 94.1, 0.065 | 70.3, 0.052 | 76.5, 0.118 | 78.6, 0.142 |
| | | 95.2, 0.014 | 81.4, 0.062 | 72.7, 0.032 | 76.7, 0.073 |

| Source Domain \ Target Domain | NESU (98.8, 0.004) | SEWI (1.0, 0.0) | CEWI (99.6, 0.009) | SESP (99.5, 0.005) | NESP (99.9, 0.003) | SESU (99.0, 0.0) | CESU (1.0, 0.0) |
|---|---|---|---|---|---|---|---|
| CESP | 93.0, 0.034 | 94.0, 0.056 | 77.9, 0.073 | 83.5, 0.035 | 95.6, 0.025 | | |
| | 59.7, 0.077 | 72.2, 0.44 | 51.0, 0.0 | 69.9, 0.065 | 88.7, 0.078 | | |
| NEWI | | 94.9, 0.040 | 74.8, 0.028 | 72.3, 0.072 | | 70.4, 0.048 | 98.3, 0.021 |
| | | 95.2, 0.014 | 81.4, 0.062 | 76.7, 0.073 | | 72.7, 0.032 | 99.0, 0.0 |

| Target Domain \ Source Domain | CESP | NEWI |
|---|---|---|
| CESP (1.0, 0.0) | | 95.4, 0.078 |
| | | 99.2, 0.007 |
| NESU (98.8, 0.004) | 93.8, 0.020 | |
| | 59.7, 0.077 | |
| SEWI (1.0, 0.0) | 93.7, 0.045 | 92.3, 0.075 |
| | 72.2, 0.440 | 95.2, 0.014 |
| CEWI (99.6, 0.009) | 83.5, 0.079 | 77.1, 0.032 |
| | 51.0, 0.0 | 81.4, 0.062 |
| SESP (99.5, 0.005) | 83.0, 0.032 | 74.5, 0.102 |
| | 69.9, 0.065 | 76.7, 0.073 |
| NESP (99.9, 0.003) | 95.0, 0.029 | |
| | 88.7, 0.078 | |
| SESU (99.0, 0.0) | | 71.8, 0.089 |
| | | 72.7, 0.032 |
| CESU (1.0, 0.0) | 99.0, 0.0 | 96.9, 0.050 |
| | 98.4, 0.008 | 99.0, 0.0 |

| Target Domain \ Source Domain | CESP | NEWI |
|---|---|---|
| CESP (1.0, 0.0) | | 99.0, 0.012 |
| | | 99.2, 0.007 |
| NESU (98.8, 0.004) | 94.6, 0.005 | |
| | 59.7, 0.077 | |
| SEWI (1.0, 0.0) | 93.6, 0.045 | 94.7, 0.039 |
| | 72.2, 0.44 | 95.2, 0.014 |
| CEWI (99.6, 0.009) | 86.4, 0.094 | 78.5, 0.043 |
| | 81.4, 0.062 | 81.4, 0.062 |
| SESP (99.5, 0.005) | 82.4, 0.019 | 78.3, 0.076 |
| | 69.9, 0.065 | 76.7, 0.073 |
| NESP (99.9, 0.003) | 95.7, 0.035 | 94.6, 0.045 |
| | 88.7, 0.078 | 94.5, 0.036 |
| SESU (99.0, 0.0) | | 73.6, 0.043 |
| | | 72.7, 0.032 |
| CESU (1.0, 0.0) | 99.0, 0.0 | 99.0, 0.0 |
| | 98.4, 0.008 | 99.0, 0.0 |
| NEWI (1.0, 0.0) | 72.8, 0.197 | |
| | 96.4, 0.005 | |

| Target Domain \ Source Domain | CESP | NEWI |
|---|---|---|
| CESP (1.0, 0.0) | | 97.0, 0.057 |
| | | 99.2, 0.007 |
| NESU (98.8, 0.004) | 92.5, 0.014 | 94.6, 0.008 |
| | 59.7, 0.077 | 74.8, 0.130 |
| SEWI (1.0, 0.0) | 91.2, 0.077 | 92.4, 0.065 |
| | 72.2, 0.440 | 95.2, 0.014 |
| CEWI (99.6, 0.009) | 70.6, 0.084 | 79.5, 0.055 |
| | 51.0, 0.0 | 81.4, 0.062 |
| SESP (99.5, 0.005) | 85.5, 0.046 | 75.5, 0.085 |
| | 69.9, 0.065 | 76.7, 0.073 |
| NESP (99.9, 0.003) | 95.9, 0.013 | 90.8, 0.119 |
| | 88.7, 0.078 | 94.5, 0.036 |
| SESU (99.0, 0.0) | 79.6, 0.027 | 71.7, 0.058 |
| | 89.8, 0.028 | 72.7, 0.032 |
| CESU (1.0, 0.0) | 99.0, 0.0 | 98.3, 0.021 |
| | 98.4, 0.008 | 99.0, 0.0 |
| NEWI (1.0, 0.0) | 74.5, 0.229 | |
| | 96.4, 0.005 | |

| Domain Pair | DAE (1-DOM) | DAE (2-DOM) | Ours |
|---|---|---|---|
| NESU–CESU (98.8, 0.004) | 98.8, 0.157 | 98.7, 0.006 | 98.0, 0.017 |
| SESU–NESU (1.0, 0.0) | 52.1, 0.003 | 52.1, 0.003 | 68.0, 0.017 |
| NESU–NESP (99.9, 0.003) | 69.2, 0.063 | 77.0, 0.074 | 93.8, 0.027 |
| NEWI–NESU (98.8, 0.004) | 44.2, 0.148 | 40.8, 0.186 | 34.1, 0.228 |
| CESP–NESU (98.8, 0.004) | 69.9, 0.157 | 77.3, 0.149 | 93.9, 0.003 |
| SESU–NESP (99.9, 0.003) | 62.8, 0.015 | 63.4, 0.010 | 97.1, 0.008 |
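This sketch reads the first number in each cell as mean accuracy (%) and the second as its standard deviation. Under that reading, it computes the margin of "Ours" over the stronger of the two DAE baselines for each pair, using only the means. The margin is positive for four of the six pairs and negative for NESU–CESU and NEWI–NESU. `RESULTS` and `gain_over_best_dae` are illustrative names.

```python
# Mean accuracies from the comparison table: (DAE (1-DOM), DAE (2-DOM), Ours).
RESULTS = {
    ("NESU", "CESU"): (98.8, 98.7, 98.0),
    ("SESU", "NESU"): (52.1, 52.1, 68.0),
    ("NESU", "NESP"): (69.2, 77.0, 93.8),
    ("NEWI", "NESU"): (44.2, 40.8, 34.1),
    ("CESP", "NESU"): (69.9, 77.3, 93.9),
    ("SESU", "NESP"): (62.8, 63.4, 97.1),
}


def gain_over_best_dae(results):
    """Percentage-point difference between 'Ours' and the better DAE variant for each pair."""
    return {pair: round(ours - max(dae1, dae2), 1)
            for pair, (dae1, dae2, ours) in results.items()}


gains = gain_over_best_dae(RESULTS)
```

For example, `gains[("NESU", "NESP")]` is 16.8 and `gains[("NEWI", "NESU")]` is -10.1.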

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bejiga, M.B.; Melgani, F.; Beraldini, P. Domain Adversarial Neural Networks for Large-Scale Land Cover Classification. Remote Sens. 2019, 11, 1153. https://doi.org/10.3390/rs11101153
