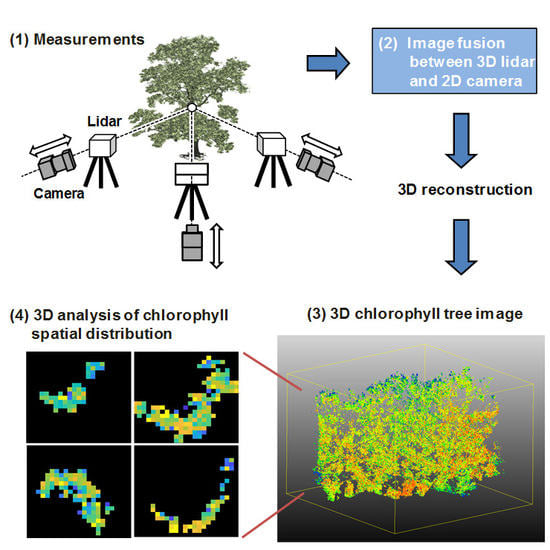

Estimating 3D Chlorophyll Content Distribution of Trees Using an Image Fusion Method Between 2D Camera and 3D Portable Scanning Lidar

Abstract

1. Introduction

2. Materials and Methods

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

Registration error, in pixels and in actual dimension (m):

| Sample | Mean (pixel) | Max. (pixel) | Min. (pixel) | Mean (m) | Max. (m) | Min. (m) |
|---|---|---|---|---|---|---|
| A | 1.6 | 5.1 | 0.0 | 0.06 | 0.19 | 0.00 |
| B | 1.8 | 3.2 | 0.0 | 0.02 | 0.03 | 0.00 |
| C | 4.3 (4.8) | 12.0 (8.1) | 1.0 (2.2) | 0.16 (0.18) | 0.46 (0.30) | 0.04 (0.08) |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hosoi, F.; Umeyama, S.; Kuo, K. Estimating 3D Chlorophyll Content Distribution of Trees Using an Image Fusion Method Between 2D Camera and 3D Portable Scanning Lidar. Remote Sens. 2019, 11, 2134. https://doi.org/10.3390/rs11182134
