Author Contributions

Conceptualization, Y.C. and Q.L.; methodology, Y.C., Q.L. and Z.D.; software, Y.C. and Z.D.; validation, Y.C. and Q.L.; formal analysis, Y.C. and Z.D.; investigation, Y.C. and Z.D.; resources, Q.L.; writing—original draft preparation, Y.C., Q.L. and Z.D.; writing—review and editing, Y.C., Q.L. and Z.D.; visualization, Y.C. and Z.D.; supervision, Q.L. and Z.D.; project administration, Q.L. and Z.D.

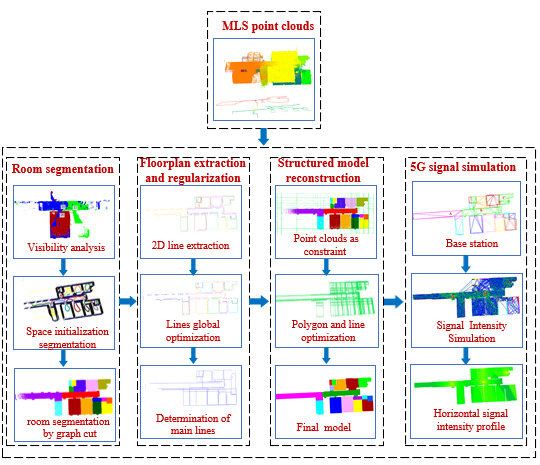

Figure 1.

Flowchart of the proposed method.

Figure 2.

Detection of openings. (a) Extracted wall surfaces. (b) Wall surfaces converted into a binary image. (c) Template matching. (d) Detected doors.
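
Panels (b) to (d) rasterize a wall surface and match a door template against it. The sketch below shows one minimal way to do the matching step, under assumptions this caption does not state: the wall image is a 0/1 NumPy array with occupied pixels set to 1, row 0 lies on the floor, and a door candidate is any all-empty window of the regularized door size (in pixels; see Table 3) that touches the floor. The names `find_door_candidates` and `merge_runs` are hypothetical, and the exhaustive window scan stands in for whatever matching score the authors use.

```python
import numpy as np


def find_door_candidates(wall: np.ndarray, door_w: int, door_h: int) -> list:
    """Column offsets where an empty door_w x door_h window sits on the floor.

    Hypothetical helper: `wall` is a 0/1 image (1 = occupied) whose row 0 is the floor.
    """
    rows, cols = wall.shape
    if door_h > rows or door_w > cols:
        return []
    # Summed-area table: sat[i, j] = number of occupied pixels in wall[:i, :j].
    sat = np.zeros((rows + 1, cols + 1), dtype=np.int64)
    sat[1:, 1:] = wall.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    hits = []
    for c in range(cols - door_w + 1):
        # Occupied pixels in rows [0, door_h) and columns [c, c + door_w);
        # the sat[0, :] terms of the usual four-corner formula are all zero.
        occupied = sat[door_h, c + door_w] - sat[door_h, c]
        if occupied == 0:
            hits.append(c)
    return hits


def merge_runs(offsets: list, door_w: int) -> list:
    """Merge consecutive offsets into (start, stop) column spans of one opening."""
    spans = []
    for c in offsets:
        if spans and c == spans[-1][1] - door_w + 1:
            spans[-1][1] = c + door_w  # extend the current opening by one column
        else:
            spans.append([c, c + door_w])
    return [tuple(s) for s in spans]
```

For example, a gap 3 pixels wide and 4 pixels tall at columns 3 to 5 yields offsets 3 and 4 for a 2 × 4 template, which `merge_runs` joins into the single span (3, 6). The summed-area table keeps each window test constant-time regardless of the template size.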

Figure 3.

Diagram of the visible point clouds of simulated trajectory points. (a) Original point clouds. (b) Original point clouds divided into a uniform grid. (c) Sampled trajectory points. (d) Line-of-sight visibility analysis.
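
Panels (b) to (d) grid the point cloud and test line of sight from each sampled trajectory point. A minimal 2D sketch follows, assuming that occupied grid cells are the cells containing points and that an occupied cell is visible from a trajectory cell when no other occupied cell lies on the Bresenham line between them. The 2D simplification and the helper names `bresenham` and `visible_cells` are hypothetical; the caption does not specify the grid dimension or the ray traversal used.

```python
def bresenham(x0, y0, x1, y1):
    """Yield the integer grid cells on the line from (x0, y0) to (x1, y1), inclusive."""
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        yield x0, y0
        if x0 == x1 and y0 == y1:
            return
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy


def visible_cells(occupied, viewer):
    """Occupied cells seen from `viewer` with no other occupied cell on the ray."""
    seen = set()
    for target in occupied:
        ray = list(bresenham(*viewer, *target))
        # ray[0] is the viewer and ray[-1] the target; only cells in between can block.
        if not any(cell in occupied for cell in ray[1:-1]):
            seen.add(target)
    return seen
```

With wall cells at column 5 and a viewer at (0, 1), the cell (8, 1) behind the wall is hidden, while the three wall cells facing the viewer are returned as visible.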

Figure 4.

Simulated visible point clouds of sampled trajectory points. (a) Visible points of trajectory points 26, 30, and 33. (b) Visible points limited by a door.

Figure 5.

Trajectory segments subdivided by door positions. (a) Sampled trajectory points. (b) Partition into trajectory segments.

Figure 6.

Point clouds before and after space labeling. (a) Original point clouds. (b) Point clouds after space labeling.

Figure 7.

Floor plan line extraction. (a) Labeled point clouds sliced at a given height. (b) Conversion of the projected points into a binary image. (c) Extraction of line elements with label information.
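
Panels (a) and (b) slice the labeled cloud at a given height and rasterize the projected points, with the pixel size listed in Table 3. A minimal sketch, assuming an N × 3 NumPy array of x, y, z coordinates and a slab of hypothetical `thickness` centred on `z_center`; the label information of panel (c) would be carried in a parallel label image, which is omitted here. The function name is illustrative.

```python
import numpy as np


def slice_to_binary_image(points, z_center, thickness, pixel_size):
    """Rasterize the points of a horizontal slab into a 0/1 image (1 = occupied).

    Returns the image (row index from y, column index from x) and the XY origin.
    """
    points = np.asarray(points, dtype=float)
    # Keep points whose height lies within thickness / 2 of the slice height.
    slab = points[np.abs(points[:, 2] - z_center) <= thickness / 2.0]
    if slab.shape[0] == 0:
        return np.zeros((0, 0), dtype=np.uint8), (0.0, 0.0)
    origin = slab[:, :2].min(axis=0)
    # Integer pixel coordinates relative to the slab's lower-left corner.
    ij = np.floor((slab[:, :2] - origin) / pixel_size).astype(int)
    n_cols, n_rows = ij[:, 0].max() + 1, ij[:, 1].max() + 1
    image = np.zeros((n_rows, n_cols), dtype=np.uint8)
    image[ij[:, 1], ij[:, 0]] = 1
    return image, (float(origin[0]), float(origin[1]))
```

Returning the origin lets pixel coordinates of extracted lines be mapped back to metric XY coordinates as origin + pixel_size × (column, row).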

Figure 8.

Global optimization of lines. (a) Angle correction. (b) Distance correction. (c) Global optimization results.
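
Panel (a) corrects line angles. The paper's global optimization also involves distance correction, k-nearest lines, and a weight parameter (Table 3), none of which this sketch reproduces. As a simpler, hypothetical stand-in, it takes the longest segment's orientation as the dominant direction and rotates each segment about its midpoint onto that direction, or onto its perpendicular, when the segment lies within the angle threshold.

```python
import math


def _orientation(seg):
    """Undirected orientation of a segment in [0, pi)."""
    (x0, y0), (x1, y1) = seg
    return math.atan2(y1 - y0, x1 - x0) % math.pi


def _length(seg):
    (x0, y0), (x1, y1) = seg
    return math.hypot(x1 - x0, y1 - y0)


def snap_to_dominant(segments, angle_thr_deg):
    """Rotate near-parallel or near-perpendicular segments onto the dominant axes."""
    # Assumption for this sketch: the longest segment defines the dominant direction.
    dominant = _orientation(max(segments, key=_length))
    thr = math.radians(angle_thr_deg)
    out = []
    for seg in segments:
        a = _orientation(seg)
        snapped = seg
        for target in (dominant, (dominant + math.pi / 2) % math.pi):
            # Signed angular difference wrapped into [-pi/2, pi/2).
            d = (a - target + math.pi / 2) % math.pi - math.pi / 2
            if abs(d) <= thr:
                (x0, y0), (x1, y1) = seg
                cx, cy, half = (x0 + x1) / 2, (y0 + y1) / 2, _length(seg) / 2
                dx, dy = math.cos(target) * half, math.sin(target) * half
                snapped = ((cx - dx, cy - dy), (cx + dx, cy + dy))
                break
        out.append(snapped)
    return out
```

Rotating about the midpoint preserves each segment's length and position, so a subsequent distance correction only has to shift lines perpendicular to their (now shared) direction.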

Figure 9.

Clustering of similar lines. (a) Line groups projected onto the cluster line. (b) Final main lines.
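
Figure 9 merges similar lines using the angle and distance thresholds listed in Table 3. A greedy sketch, assuming 2D segments given as endpoint pairs: a segment joins the first cluster whose reference segment (its first member) is within the angle threshold and whose supporting line passes within the distance threshold of the segment's midpoint; each cluster is then replaced by the span of all its endpoints projected onto the reference line. Using the first member as the cluster line and the function name `merge_similar_lines` are assumptions made for brevity.

```python
import math


def _angle(seg):
    (x0, y0), (x1, y1) = seg
    return math.atan2(y1 - y0, x1 - x0) % math.pi  # undirected orientation in [0, pi)


def _angle_diff(a, b):
    d = abs(a - b) % math.pi
    return min(d, math.pi - d)


def _perp_dist(point, seg):
    """Distance from `point` to the infinite line through `seg`."""
    (x0, y0), (x1, y1) = seg
    px, py = point
    return abs((x1 - x0) * (py - y0) - (y1 - y0) * (px - x0)) / math.hypot(x1 - x0, y1 - y0)


def merge_similar_lines(segments, angle_thr_deg, dist_thr):
    clusters = []  # each cluster is a list of segments; cl[0] is the reference line
    for seg in segments:
        mid = ((seg[0][0] + seg[1][0]) / 2, (seg[0][1] + seg[1][1]) / 2)
        for cl in clusters:
            ref = cl[0]
            if (_angle_diff(_angle(seg), _angle(ref)) <= math.radians(angle_thr_deg)
                    and _perp_dist(mid, ref) <= dist_thr):
                cl.append(seg)
                break
        else:
            clusters.append([seg])
    merged = []
    for cl in clusters:
        (x0, y0), (x1, y1) = cl[0]
        length = math.hypot(x1 - x0, y1 - y0)
        ux, uy = (x1 - x0) / length, (y1 - y0) / length
        # Project every endpoint onto the reference line; keep the extreme projections.
        ts = [(px - x0) * ux + (py - y0) * uy for s in cl for (px, py) in s]
        merged.append(((x0 + min(ts) * ux, y0 + min(ts) * uy),
                       (x0 + max(ts) * ux, y0 + max(ts) * uy)))
    return merged
```

With a 5° angle threshold and a 0.1 m distance threshold, the overlapping segments (0, 0)–(4, 0) and (3, 0.05)–(7, 0.05) collapse into one main line from (0, 0) to (7, 0), while a parallel segment 3 m away stays separate.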

Figure 10.

Line segments and point clouds as constraint conditions.

Figure 11.

The reconstructed room models.

Figure 12.

The structured model with openings.

Figure 13.

Rooms with their topological relationships (solid lines denote rooms connected through doors; dotted lines denote rooms connected through adjacent walls).

Figure 14.

Principle of signal propagation (signal intensity from strong to weak is shown by colors from red to blue).
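
The caption does not state the propagation model. Table 3 lists the signal propagation distance and the frequency of the electromagnetic wave as parameters, so, as an assumed stand-in, the sketch below uses the Friis free-space path loss, FSPL = 20 log10(4πdf/c) dB, which grows with both distance d and frequency f. Wall penetration and the reflections of multipath propagation are not modeled, and the 3.5 GHz value in the example is illustrative, not taken from the paper.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Friis free-space path loss in dB: 20 * log10(4 * pi * d * f / c)."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


def received_power_dbm(tx_power_dbm: float, distance_m: float, freq_hz: float) -> float:
    """Received power with free-space loss only (no walls, no reflections)."""
    return tx_power_dbm - fspl_db(distance_m, freq_hz)
```

Doubling the distance adds 20 log10 2 ≈ 6.02 dB of loss at any frequency, and at 3.5 GHz the loss at 1 m is about 43.3 dB, which matches the strong-to-weak (red-to-blue) falloff the figure depicts away from a base station.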

Figure 15.

Signal intensity simulation. (a) Placement of three base stations. (b) Multipath signal propagation. (c) Horizontal profile of signal intensity.
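
Panel (c) shows a horizontal intensity profile, and Table 3 lists the power exponent of IDW (inverse distance weighting) interpolation. The standard Shepard form estimates the value at a query point as Σ w_i z_i / Σ w_i with w_i = 1 / d_i^p, where d_i is the distance to sample i and p is the power exponent. A minimal NumPy sketch follows; the function name and the sample positions and dBm values in the example are hypothetical.

```python
import numpy as np


def idw(sample_xy, sample_values, query_xy, power=2.0):
    """Shepard inverse distance weighting with weights w_i = 1 / d_i**power."""
    sample_xy = np.asarray(sample_xy, dtype=float)
    sample_values = np.asarray(sample_values, dtype=float)
    estimates = []
    for q in np.atleast_2d(np.asarray(query_xy, dtype=float)):
        d = np.linalg.norm(sample_xy - q, axis=1)
        if np.any(d == 0.0):
            # The query coincides with a sample: return that sample's value.
            estimates.append(sample_values[np.argmin(d)])
            continue
        w = 1.0 / d ** power
        estimates.append(np.sum(w * sample_values) / np.sum(w))
    return np.array(estimates)
```

With samples of −40 dBm at (0, 0) and −60 dBm at (2, 0) and p = 2, the midpoint receives −50 dBm, and the point (0.5, 0) receives (4 · (−40) + (4/9) · (−60)) / (4 + 4/9) = −42 dBm. A larger p makes the profile follow the nearest sample more closely.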

Figure 16.

The experimental data. (a) Benchmark point clouds and trajectories acquired by handheld laser scanning (HLS) with the ZEB-REVO. (b) Point clouds acquired by the BLS system of Shenzhen University. (c) A closed-loop corridor acquired by the BLS system of Xiamen University. (d) A parking lot acquired by the BLS system of Xiamen University.

Figure 17.

Opening extraction, room segmentation, structural model, and wireframe model results for the benchmark point clouds. (a) Doors (green) and windows (yellow) on the first and second floors. (b) Segmented rooms on the first and second floors. (c) Structural models with doors and windows on the first and second floors. (d) Wireframe models with doors and windows on the first and second floors. (e) Matching between the point clouds and the structural models on the first and second floors.

Figure 18.

Structural model results for the corridor at Shenzhen University. (a) The structural model. (b) Vector model of walls and pillars. (c) Matching between the point clouds and the structural model. (d) Matching between the point clouds and the vector model of walls and pillars.

Figure 19.

Structural model results for the corridor at Xiamen University. (a) The structural model. (b) Vector model of walls. (c) Matching between the point clouds and the structural model. (d) Matching between the point clouds and the vector model of walls.

Figure 20.

Structural model results for the parking lot at Xiamen University. (a) The structural model with slanted floor and ceiling. (b) Vector model of walls and pillars. (c) Matching between the point clouds and the structural model. (d) Matching between the point clouds and the vector model of walls and pillars.

Figure 21.

Close-up views of selected details. (a) Benchmark point clouds and reconstructed model. (b) Point clouds and reconstructed model of the corridor at Shenzhen University. (c) Point clouds and reconstructed model of the corridor at Xiamen University. (d) Point clouds and reconstructed model of the parking lot at Xiamen University.

Figure 22.

5G signal intensity simulation based on the structural model reconstructed from the benchmark data. (a) Multipath signal propagation on the first floor. (b) Horizontal profile of signal intensity on the first floor. (c) Multipath signal propagation on the second floor. (d) Horizontal profile of signal intensity on the second floor. (e) Received energy values.

Figure 23.

Euclidean distance deviation distribution map.

Table 1.

Technical specifications of the laser scanning systems.

| Sensor | ZEB-REVO | BLS (Shenzhen University) | BLS (Xiamen University) |
|---|---|---|---|
| Max range | 30 m | 100 m | 100 m |
| Speed (points/s) | 43 × 10³ | 300 × 10³ | 300 × 10³ |
| Horizontal angular resolution | 0.625° | 0.1–0.4° | 0.1–0.4° |
| Vertical angular resolution | 1.8° | 2.0° | 2.0° |
| Angular FOV | 270° × 360° | 30° × 360° | 2 × 30° × 360° |

Table 2.

Specifications of the point clouds.

| Dataset | Benchmark Data | Corridor (Shenzhen University) | Corridor (Xiamen University) | Parking lot (Xiamen University) |
|---|---|---|---|---|
| Number of points | 21,560,263 | 1,980,911 | 2,098,634 | 7,683,766 |
| Clutter | Low | High | Low | High |

Table 3.

Parameters of the proposed indoor structural modeling method.

| Parameters | Values | Descriptions |
|---|---|---|
| Extracting Openings | | |
| | | Pixel size (for converting point clouds into an image) |
| | | Width and height of the regularized door |
| | | Width and height of the regularized window |
| Segmentation of Rooms | | |
| | | Size of the 3D grid (for converting point clouds into a 3D grid) |
| | | Parameters of the data term and smoothness term of the energy function |
| Line Global Optimization | | |
| | | Angle correction of lines |
| | | Distance correction of lines |
| | | Number k of nearest neighboring lines |
| | | Weight parameter of the line global optimization |
| Clustering Similar Lines | | |
| | | Angle threshold for merging similar lines |
| | | Distance threshold for merging similar lines |
| 5G Signal Intensity Simulation | | |
| | | Signal propagation distance |
| | | Frequency of the electromagnetic wave |
| | | Power exponent of the inverse distance weighting (IDW) interpolation |

Table 4.

Results of basic element extraction.

| Description | Number of Points | Actual/Detected Doors | Actual/Detected Windows | Actual/Detected Rooms | Actual/Detected Pillars |
|---|---|---|---|---|---|
| Benchmark data | 11,628,186 | 51/42 | 21/8 | 25/25 | 0/0 |
| Corridor (Shenzhen University) | 1,980,911 | 4/4 | 0/0 | 1/1 | 6/6 |
| Corridor (Xiamen University) | 7,683,766 | 8/8 | 11/11 | 1/1 | 0/0 |
| Parking Lot (Xiamen University) | 2,098,634 | 0/0 | 0/0 | 1/1 | 23/18 |

Table 5.

Running time for different scenes.

| Description | Surface Extraction (s) | Opening Detection (s) | Room Segmentation (s) | Line Regularization and Model Reconstruction (s) | Total Time (s) |
|---|---|---|---|---|---|
| Benchmark data | 80 | 19 | 287 | 49 | 435 |
| Corridor (Shenzhen University) | 9 | 4 | 0 | 24 | 37 |
| Corridor (Xiamen University) | 7 | 6 | 0 | 20 | 33 |
| Parking Lot (Xiamen University) | 28 | 0 | 0 | 32 | 60 |

Table 6.

Euclidean distance deviation for different scenes.

| Error (m) | 0.05 | 0.10 | 0.15 | 0.20 | 0.25 | 0.30 | 0.35 | 0.40 | 0.45 | 0.50 | 0.55 | 0.60 | 0.65 | 0.70 | 0.75 | 0.80 | 0.85 | 0.90 | 0.95 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Benchmark first floor (%) | 51.50 | 27.68 | 12.92 | 3.26 | 1.73 | 1.61 | 0.28 | 0.21 | 0.20 | 0.11 | 0.10 | 0.09 | 0.07 | 0.07 | 0.08 | 0.05 | 0.02 | 0.01 | 0.01 |
| Benchmark second floor (%) | 52.31 | 30.09 | 9.36 | 3.20 | 2.41 | 2.11 | 0.31 | 0.07 | 0.01 | 0.02 | 0.02 | 0.01 | 0.01 | 0.02 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 |
| Corridor, Shenzhen University (%) | 25.10 | 25.81 | 22.02 | 7.45 | 5.51 | 3.81 | 3.02 | 2.55 | 1.10 | 0.81 | 0.82 | 0.40 | 0.51 | 0.50 | 0.14 | 0.12 | 0.21 | 0.01 | 0.11 |
| Corridor, Xiamen University (%) | 75.83 | 15.49 | 4.81 | 1.75 | 0.62 | 0.60 | 0.11 | 0.11 | 0.41 | 0.10 | 0.02 | 0.01 | 0.02 | 0.05 | 0.02 | 0.02 | 0.01 | 0.01 | 0.01 |
| Parking lot, Xiamen University (%) | 32.82 | 20.87 | 15.71 | 10.92 | 5.38 | 3.30 | 2.62 | 2.01 | 1.37 | 1.23 | 1.06 | 0.91 | 0.44 | 0.26 | 0.27 | 0.23 | 0.20 | 0.25 | 0.15 |
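
Table 6 lists, for each 0.05 m interval, the percentage of points whose Euclidean distance to the reconstructed model falls in that interval. A sketch of the binning, assuming the point-to-model distances are already computed and that each column heading is the upper bound of a half-open interval ((k − 1) · 0.05, k · 0.05] m; this interval convention and the function names are assumptions.

```python
import numpy as np


def deviation_histogram(distances, bin_width=0.05, n_bins=19):
    """Percentage of distances in each bin ((k - 1) * w, k * w], for k = 1..n_bins.

    Distances beyond n_bins * w still count in the denominator, so the
    percentages can sum to less than 100.
    """
    d = np.asarray(distances, dtype=float)
    # Bin index k = ceil(d / w); a distance of exactly 0 is placed in bin 1.
    k = np.clip(np.ceil(d / bin_width).astype(int), 1, None)
    counts = np.bincount(k, minlength=n_bins + 1)[1:n_bins + 1]
    return 100.0 * counts / d.size


def cumulative_percentages(percentages):
    """Running total: percentage of points within each bin's upper bound."""
    return np.cumsum(percentages)
```

Summing the first three columns of the benchmark first-floor row gives 51.50 + 27.68 + 12.92 = 92.10%, so, under the same interval convention, about 92% of those points lie within 0.15 m of the model.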