Hyperspectral Image Classification Using Similarity Measurements-Based Deep Recurrent Neural Networks

Abstract

1. Introduction

2. Background: RNN and LSTM

2.1. RNN
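
As a minimal illustration of the recurrence in a simple (Elman-type) RNN, the sketch below updates a hidden state h_t = tanh(W_x·x_t + W_h·h_(t-1) + b) once for each element of an input sequence, starting from h_0 = 0. The weight names `W_x`, `W_h`, `b` and the helper functions are hypothetical and chosen for illustration. This is a generic textbook RNN in plain Python, not the authors' implementation.

```python
import math


def matvec(W, v):
    """Multiply a matrix W (list of rows) by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_forward(xs, W_x, W_h, b):
    """Run a simple Elman-style RNN over the sequence xs.

    Implements h_t = tanh(W_x x_t + W_h h_{t-1} + b) with h_0 = 0 and
    returns the list of hidden states h_1, ..., h_T.
    """
    h = [0.0] * len(b)
    states = []
    for x in xs:
        pre = [a + r + c for a, r, c in zip(matvec(W_x, x), matvec(W_h, h), b)]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states


# Toy example: a 3-step sequence of 1-D inputs and a 2-unit hidden state.
states = rnn_forward(
    xs=[[1.0], [0.5], [-1.0]],
    W_x=[[0.5], [-0.3]],
    W_h=[[0.1, 0.0], [0.0, 0.1]],
    b=[0.0, 0.0],
)
```

Because the same weights are reused at every step, the hidden state carries information from earlier elements of the sequence into later ones.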

2.2. LSTM

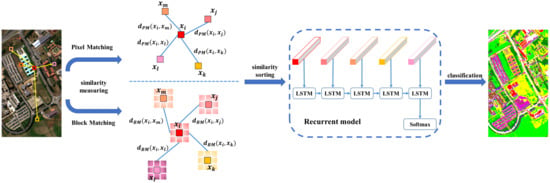
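
The gating mechanism of a standard LSTM cell can be sketched as follows. Each gate applies an affine map to the current input x_t and the previous hidden state h_(t-1). The input gate i, forget gate f, and output gate o use a logistic sigmoid, and the candidate values g use tanh. The cell then updates c_t = f ⊙ c_(t-1) + i ⊙ g and h_t = o ⊙ tanh(c_t). The sketch follows this common formulation without peephole connections. The `params` layout and the function names are assumptions made for this example, and the code is written in plain Python for readability rather than speed.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, U, b, x, h):
    """Return W x + U h + b for list-of-rows matrices W, U and bias list b."""
    return [
        sum(w * xi for w, xi in zip(W_row, x)) + sum(u * hi for u, hi in zip(U_row, h)) + bias
        for W_row, U_row, bias in zip(W, U, b)
    ]


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a standard LSTM cell (no peephole connections).

    params maps each gate name 'i', 'f', 'o', 'g' to a tuple (W, U, b).
    Returns the new hidden state h and cell state c.
    """
    i = [sigmoid(z) for z in affine(*params["i"], x, h_prev)]    # input gate
    f = [sigmoid(z) for z in affine(*params["f"], x, h_prev)]    # forget gate
    o = [sigmoid(z) for z in affine(*params["o"], x, h_prev)]    # output gate
    g = [math.tanh(z) for z in affine(*params["g"], x, h_prev)]  # candidate cell values
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]


# With all-zero weights every gate outputs 0.5 and g = 0, so the cell state is halved.
params = {gate: (zeros(2, 3), zeros(2, 2), [0.0, 0.0]) for gate in "ifog"}
h, c = lstm_step([0.1, 0.2, 0.3], [0.0, 0.0], [1.0, -2.0], params)
```

The additive update of the cell state c is what allows an LSTM to preserve information over longer sequences than the plain RNN recurrence.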

3. Spatial Similarity Measurements in LSTM

3.1. Pixel Matching
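
The following is a minimal sketch of pixel matching. It assumes that pixel matching ranks the pixels in a local search window by how similar each pixel's spectrum is to the spectrum of the center pixel. The `EU` and `SAM` suffixes in the method names of the results tables are assumed here to denote Euclidean distance and the spectral angle mapper. The window radius and all function names are hypothetical. For both measures, a smaller value means more similar spectra. The spectral angle is insensitive to a uniform scaling of a spectrum (for example, a change in illumination), whereas the Euclidean distance is not.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two spectra."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def spectral_angle(a, b):
    """Spectral angle (radians) between two non-zero spectra."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos = dot / (norm_a * norm_b)
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp to guard against rounding error


def pixel_matching(image, row, col, radius, distance):
    """Rank the pixels in a (2*radius+1) x (2*radius+1) window by distance to the center.

    image[r][c] is the spectrum (list of band values) of pixel (r, c). Returns a list of
    (distance, (r, c)) pairs sorted from most to least similar; windows are cropped at
    the image border.
    """
    center = image[row][col]
    n_rows, n_cols = len(image), len(image[0])
    ranked = []
    for r in range(max(0, row - radius), min(n_rows, row + radius + 1)):
        for c in range(max(0, col - radius), min(n_cols, col + radius + 1)):
            ranked.append((distance(center, image[r][c]), (r, c)))
    ranked.sort()
    return ranked


# Toy 3 x 3 image with two bands per pixel.
image = [
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    [[2.0, 0.0], [1.0, 0.1], [0.0, 2.0]],
    [[5.0, 5.0], [1.0, 0.0], [3.0, 0.0]],
]
by_eu = pixel_matching(image, 1, 1, radius=1, distance=euclidean)
by_sam = pixel_matching(image, 1, 1, radius=1, distance=spectral_angle)
```

In this toy image, the spectra [2.0, 0.0] and [3.0, 0.0] have the same spectral angle to the center pixel as [1.0, 0.0], but they are farther away in Euclidean distance. This shows how the two measures can produce different rankings.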

3.2. Block Matching
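
The following is a sketch of block matching. It assumes that the neighborhood (block) around the center pixel is compared with the block around each candidate pixel, so that a candidate is judged by its local context rather than by its own spectrum alone. The sketch also assumes two further details. First, the block distance is the mean of the distances between corresponding pixels of the two blocks. Second, border pixels are replicated wherever a block extends past the image edge. Both choices, like the function names, are assumptions made for illustration and are not taken from the paper. Any spectral distance, such as a spectral angle, can be passed in place of the default Euclidean distance.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two spectra."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def get_pixel(image, r, c):
    """Return the spectrum at (r, c), clamping coordinates to the image border."""
    r = min(max(r, 0), len(image) - 1)
    c = min(max(c, 0), len(image[0]) - 1)
    return image[r][c]


def block_distance(image, p, q, half, distance):
    """Mean distance between corresponding pixels of the blocks centered at p and q."""
    total, count = 0.0, 0
    for dr in range(-half, half + 1):
        for dc in range(-half, half + 1):
            a = get_pixel(image, p[0] + dr, p[1] + dc)
            b = get_pixel(image, q[0] + dr, q[1] + dc)
            total += distance(a, b)
            count += 1
    return total / count


def block_matching(image, row, col, radius, half, distance=euclidean):
    """Rank pixels in the search window by block distance to the center pixel's block."""
    n_rows, n_cols = len(image), len(image[0])
    ranked = []
    for r in range(max(0, row - radius), min(n_rows, row + radius + 1)):
        for c in range(max(0, col - radius), min(n_cols, col + radius + 1)):
            d = block_distance(image, (row, col), (r, c), half, distance)
            ranked.append((d, (r, c)))
    ranked.sort()
    return ranked


# Toy 5 x 5 single-band image whose values increase across the scene.
image = [[[float(5 * r + c)] for c in range(5)] for r in range(5)]
ranked = block_matching(image, 2, 2, radius=1, half=1)
```

Compared with matching single pixels, averaging over a block makes the ranking less sensitive to noise in any one spectrum. The cost is more distance evaluations per candidate: (2·half+1)² instead of one.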

3.3. Sequential Feature Extraction
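
Given a similarity ranking, one plausible way to form the sequential feature is as follows. Take the spectra of the L most similar pixels, order them from most to least similar, and feed one spectrum to the LSTM per time step. Under this reading, L corresponds to the sequential feature length listed in the tables (10 to 50). The sketch below assumes this reading. It also includes a hypothetical helper, `min_window_side`, that reports the smallest odd square window holding at least L pixels, because a window that is too small cannot supply a sequence of the requested length. The function names and the ranking format (pairs of a distance and pixel coordinates, as a pixel- or block-matching step might return) are assumptions.

```python
import math


def min_window_side(length):
    """Smallest odd window side s such that an s x s window holds at least `length` pixels."""
    s = math.isqrt(length)
    if s * s < length:
        s += 1
    return s if s % 2 == 1 else s + 1


def to_sequence(image, ranked, length):
    """Build an LSTM input sequence from a similarity ranking.

    ranked: (distance, (r, c)) pairs sorted from most to least similar.
    Returns `length` spectra, one per time step, most similar pixel first.
    """
    if len(ranked) < length:
        raise ValueError("search window holds fewer pixels than the requested length")
    return [image[r][c] for _, (r, c) in ranked[:length]]


# Toy 2 x 2 image with two bands and a precomputed ranking around pixel (0, 0).
image = [[[0.1, 0.2], [0.3, 0.4]], [[0.5, 0.6], [0.7, 0.8]]]
ranked = [(0.0, (0, 0)), (0.2, (1, 1)), (0.3, (0, 1)), (0.9, (1, 0))]
sequence = to_sequence(image, ranked, length=3)
windows = {L: min_window_side(L) for L in (10, 20, 30, 40, 50)}
```

A 3 x 3 window, for instance, holds only 9 pixels. The helper therefore returns 5 for L = 10, and 9 for L = 50 (since 7 x 7 = 49 < 50).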

4. Experimental Setup, Results, and Discussion

4.1. Datasets

4.2. Experimental Design

4.3. Classification Results: Pavia University Image

4.4. Classification Results: Salinas Image

4.5. Parameter Sensitivity Analysis

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Pavia University Class No. | Pavia University Class Name | Salinas Class No. | Salinas Class Name |
|---|---|---|---|
| 1 | Asphalt | 1 | Brocoli_green_weeds_1 |
| 2 | Meadow | 2 | Brocoli_green_weeds_2 |
| 3 | Gravel | 3 | Fallow |
| 4 | Trees | 4 | Fallow_rough_plow |
| 5 | Painted Metal Sheets | 5 | Fallow_smooth |
| 6 | Bare Soil | 6 | Stubble |
| 7 | Bitumen | 7 | Celery |
| 8 | Self-Blocking Bricks | 8 | Grapes_untrained |
| 9 | Shadows | 9 | Soil_vinyard_develop |
| | | 10 | Corn_senesced_green_weeds |
| | | 11 | Lettuce_romaine_4wk |
| | | 12 | Lettuce_romaine_5wk |
| | | 13 | Lettuce_romaine_6wk |
| | | 14 | Lettuce_romaine_7wk |
| | | 15 | Vinyard_untrained |
| | | 16 | Vinyard_vertical_trellis |

| Pavia University 1DCNN | Pavia University 1DLSTM | Salinas 1DCNN | Salinas 1DLSTM |
|---|---|---|---|
| Conv(10)-8 | LSTM-32 | Conv(8)-12 | LSTM-32 |
| Maxpooling-2 | LSTM-64 | Maxpooling-2 | LSTM-64 |
| Conv(10)-8 | LSTM-128 | Conv(8)-12 | LSTM-128 |
| Maxpooling-2 | | Maxpooling-2 | |
| FC layer-9 | FC layer-9 | FC layer-16 | FC layer-16 |
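
To make the layer specifications above more concrete, the sketch below counts the trainable parameters of the 1DLSTM branch and traces the feature length through the 1DCNN branch. It rests on several assumptions that the table does not state:

- LSTM-n denotes an LSTM layer with n hidden units and one bias vector per gate. This is the Keras convention; PyTorch stores two bias vectors, which adds 4n parameters per layer.
- Only the last hidden state of LSTM-128 is passed to the fully connected layer.
- The 1DLSTM receives one scalar per time step (input dimension 1).
- Conv(k)-m is read as a 1-D convolution with kernel size k, m filters, stride 1, and no padding.
- The input spectrum has 103 bands, the band count commonly used for the Pavia University scene.

Under a different reading of the notation, the numbers change.

```python
def lstm_params(input_dim, hidden):
    """Parameters of one LSTM layer: 4 gates x (input weights + recurrent weights + bias)."""
    return 4 * (input_dim * hidden + hidden * hidden + hidden)


def dense_params(input_dim, units):
    """Weights plus biases of a fully connected layer."""
    return input_dim * units + units


def stacked_lstm_params(input_dim, hidden_sizes, n_classes):
    """Total parameters of stacked LSTM layers followed by one fully connected layer."""
    total, d = 0, input_dim
    for h in hidden_sizes:
        total += lstm_params(d, h)
        d = h  # the next layer consumes this layer's hidden state
    return total + dense_params(d, n_classes)


def conv1d_out(length, kernel, stride=1):
    """Output length of a 1-D convolution without padding."""
    return (length - kernel) // stride + 1


def pool_out(length, size=2):
    """Output length of non-overlapping max pooling."""
    return length // size


pavia_lstm = stacked_lstm_params(1, [32, 64, 128], n_classes=9)
salinas_lstm = stacked_lstm_params(1, [32, 64, 128], n_classes=16)

# Conv(10)-8 -> Maxpooling-2 -> Conv(10)-8 -> Maxpooling-2 on a 103-band spectrum.
length = 103
for _ in range(2):
    length = pool_out(conv1d_out(length, kernel=10))
flattened = length * 8  # 8 filters in the last convolutional layer
```

Under these assumptions, the 1DLSTM has about 0.13 million parameters for either scene, roughly three quarters of them in the LSTM-128 layer. The 1DCNN passes a 19 x 8 feature map (152 values) to its fully connected layer for Pavia University.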

Classification results for the Pavia University image (per-class accuracy, OA, AA, and Kappa):

| Class No. | SVM | 1DCNN | 1DLSTM | LSTM_PEU | LSTM_PSAM | LSTM_BEU | LSTM_BSAM |
|---|---|---|---|---|---|---|---|
| 1 | 97.52 ± 0.21 | 96.53 ± 0.57 | 95.76 ± 0.65 | 85.51 ± 3.06 | 94.06 ± 0.72 | 98.76 ± 0.44 | 98.78 ± 0.39 |
| 2 | 95.77 ± 0.30 | 94.78 ± 1.55 | 94.13 ± 0.93 | 94.11 ± 1.40 | 96.01 ± 1.08 | 99.08 ± 0.25 | 98.84 ± 0.29 |
| 3 | 65.57 ± 3.41 | 68.93 ± 3.65 | 64.29 ± 3.02 | 70.41 ± 2.27 | 66.61 ± 3.68 | 90.22 ± 2.28 | 89.22 ± 2.63 |
| 4 | 71.27 ± 7.38 | 76.62 ± 7.92 | 75.71 ± 4.95 | 62.69 ± 6.28 | 81.58 ± 4.77 | 92.97 ± 2.19 | 94.70 ± 1.71 |
| 5 | 95.50 ± 1.55 | 98.50 ± 0.86 | 97.73 ± 1.31 | 98.51 ± 0.67 | 95.57 ± 2.80 | 98.99 ± 1.18 | 99.42 ± 0.64 |
| 6 | 59.51 ± 6.74 | 63.62 ± 11.03 | 61.12 ± 7.62 | 69.71 ± 8.18 | 64.39 ± 8.23 | 88.65 ± 4.93 | 90.65 ± 3.92 |
| 7 | 52.10 ± 0.93 | 66.87 ± 4.86 | 65.74 ± 3.62 | 63.76 ± 4.45 | 56.69 ± 4.42 | 89.72 ± 4.25 | 88.47 ± 6.60 |
| 8 | 84.27 ± 1.13 | 83.54 ± 1.73 | 81.48 ± 1.70 | 82.37 ± 1.59 | 80.50 ± 1.01 | 93.32 ± 2.46 | 93.27 ± 1.28 |
| 9 | 99.92 ± 0.11 | 99.65 ± 0.31 | 99.72 ± 0.35 | 93.74 ± 2.05 | 95.99 ± 5.06 | 99.09 ± 0.62 | 98.49 ± 1.75 |
| OA | 82.12 ± 1.79 | 84.45 ± 3.01 | 83.41 ± 2.66 | 82.70 ± 1.73 | 84.56 ± 2.41 | 95.96 ± 1.01 | 96.20 ± 0.57 |
| AA | 80.16 ± 0.96 | 83.23 ± 1.71 | 81.74 ± 1.47 | 80.09 ± 1.19 | 81.27 ± 1.72 | 94.53 ± 0.98 | 94.65 ± 0.64 |
| Kappa | 76.98 ± 2.12 | 79.79 ± 3.53 | 78.42 ± 3.13 | 77.40 ± 2.05 | 79.86 ± 2.89 | 94.60 ± 1.31 | 94.91 ± 0.75 |
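
The OA, AA, and Kappa rows summarize a confusion matrix in the usual way:

- Overall accuracy (OA) is the fraction of test pixels labeled correctly.
- Average accuracy (AA) is the mean of the per-class accuracies.
- Cohen's kappa corrects the overall agreement for the agreement expected by chance.

A minimal sketch of these standard definitions follows. The function name and the toy matrix are hypothetical. Per-class accuracy is taken to be the fraction of each reference class that is labeled correctly (producer's accuracy). The tables appear to report all three quantities multiplied by 100.

```python
def accuracy_metrics(cm):
    """Overall accuracy, average accuracy and Cohen's kappa from a confusion matrix.

    cm[i][j] counts test pixels of reference class i assigned to class j.
    Returns (oa, aa, kappa, per_class) as fractions (kappa may be negative).
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    oa = sum(cm[i][i] for i in range(k)) / n
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    per_class = [cm[i][i] / row_totals[i] for i in range(k)]  # producer's accuracy
    aa = sum(per_class) / k
    p_chance = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    kappa = (oa - p_chance) / (1 - p_chance)
    return oa, aa, kappa, per_class


cm = [[50, 10],
      [5, 35]]
oa, aa, kappa, per_class = accuracy_metrics(cm)
```

For this toy matrix, OA is 0.85 while kappa is about 0.69. Kappa falls below OA because part of the observed agreement (0.51 here) would be expected by chance alone.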

Classification results for the Salinas image (per-class accuracy, OA, AA, and Kappa):

| Class No. | SVM | 1DCNN | 1DLSTM | LSTM_PEU | LSTM_PSAM | LSTM_BEU | LSTM_BSAM |
|---|---|---|---|---|---|---|---|
| 1 | 96.84 ± 1.18 | 99.12 ± 1.52 | 94.18 ± 10.04 | 98.61 ± 3.93 | 99.40 ± 0.89 | 95.78 ± 11.30 | 99.86 ± 0.22 |
| 2 | 98.79 ± 0.13 | 98.79 ± 0.69 | 98.69 ± 0.78 | 98.75 ± 1.08 | 99.39 ± 0.28 | 99.60 ± 0.16 | 99.34 ± 0.51 |
| 3 | 85.11 ± 1.38 | 95.53 ± 1.32 | 88.68 ± 7.32 | 92.89 ± 3.68 | 95.70 ± 1.07 | 96.11 ± 1.25 | 96.24 ± 1.10 |
| 4 | 97.44 ± 0.18 | 97.94 ± 0.67 | 97.20 ± 1.02 | 98.33 ± 0.74 | 98.45 ± 0.68 | 98.66 ± 0.95 | 97.90 ± 1.70 |
| 5 | 95.03 ± 0.85 | 97.20 ± 2.76 | 98.07 ± 1.65 | 96.26 ± 7.50 | 97.63 ± 1.57 | 98.75 ± 1.65 | 98.83 ± 0.68 |
| 6 | 99.79 ± 0.11 | 99.77 ± 0.21 | 98.98 ± 1.16 | 99.64 ± 0.44 | 99.45 ± 0.87 | 99.69 ± 0.36 | 99.71 ± 0.33 |
| 7 | 98.63 ± 0.44 | 99.35 ± 0.62 | 98.81 ± 0.91 | 99.37 ± 0.53 | 99.11 ± 0.76 | 99.40 ± 0.43 | 99.38 ± 0.51 |
| 8 | 76.70 ± 1.31 | 83.99 ± 4.02 | 76.24 ± 5.90 | 76.97 ± 2.91 | 76.50 ± 3.48 | 83.04 ± 1.37 | 85.66 ± 3.00 |
| 9 | 99.12 ± 0.04 | 99.02 ± 0.29 | 98.03 ± 1.46 | 98.78 ± 0.28 | 98.66 ± 0.33 | 99.20 ± 0.41 | 99.43 ± 0.27 |
| 10 | 81.91 ± 1.58 | 85.89 ± 2.09 | 84.90 ± 1.94 | 85.94 ± 4.19 | 88.65 ± 1.20 | 94.16 ± 1.58 | 91.00 ± 2.13 |
| 11 | 69.51 ± 1.00 | 82.23 ± 6.71 | 81.23 ± 13.79 | 76.81 ± 8.06 | 83.24 ± 4.33 | 86.00 ± 3.27 | 83.49 ± 4.40 |
| 12 | 93.33 ± 0.34 | 96.82 ± 1.05 | 87.58 ± 9.57 | 97.09 ± 1.33 | 97.26 ± 1.03 | 98.31 ± 1.36 | 98.26 ± 1.67 |
| 13 | 92.67 ± 0.65 | 94.15 ± 2.62 | 90.12 ± 4.05 | 96.07 ± 2.23 | 95.27 ± 2.27 | 95.89 ± 2.78 | 97.33 ± 1.98 |
| 14 | 89.68 ± 2.19 | 89.80 ± 3.78 | 84.77 ± 12.61 | 87.66 ± 5.95 | 88.47 ± 5.34 | 92.50 ± 3.95 | 90.75 ± 3.77 |
| 15 | 56.78 ± 2.39 | 59.34 ± 12.38 | 56.88 ± 8.13 | 60.79 ± 6.72 | 63.62 ± 5.58 | 67.33 ± 2.82 | 69.85 ± 3.21 |
| 16 | 95.59 ± 1.18 | 98.06 ± 0.44 | 93.84 ± 1.59 | 96.53 ± 2.14 | 96.63 ± 1.12 | 98.03 ± 1.07 | 96.19 ± 3.79 |
| OA | 84.75 ± 0.62 | 85.99 ± 4.14 | 84.07 ± 2.90 | 86.35 ± 2.07 | 87.53 ± 0.95 | 89.90 ± 0.43 | 90.63 ± 0.61 |
| AA | 89.18 ± 0.23 | 92.31 ± 0.96 | 89.26 ± 3.02 | 91.28 ± 1.40 | 92.34 ± 0.56 | 93.90 ± 0.71 | 93.95 ± 0.55 |
| Kappa | 76.98 ± 2.12 | 84.45 ± 4.49 | 82.26 ± 3.19 | 84.78 ± 2.26 | 86.07 ± 1.05 | 88.72 ± 0.48 | 89.55 ± 0.68 |

LSTM parameter (sequential feature length), Pavia University image:

| Sequential Feature Length | LSTM_PM_EU | LSTM_PM_SAM | LSTM_BM_EU | LSTM_BM_SAM |
|---|---|---|---|---|
| 10 | 30.88 | 34.01 | 29.11 | 32.00 |
| 20 | 51.14 | 53.25 | 49.92 | 53.73 |
| 30 | 83.39 | 87.43 | 77.52 | 81.63 |
| 40 | 112.49 | 115.03 | 105.31 | 110.75 |
| 50 | 145.29 | 144.98 | 132.31 | 142.07 |

LSTM parameter (sequential feature length), Salinas image:

| Sequential Feature Length | LSTM_PM_EU | LSTM_PM_SAM | LSTM_BM_EU | LSTM_BM_SAM |
|---|---|---|---|---|
| 10 | 29.08 | 34.19 | 28.47 | 28.00 |
| 20 | 46.27 | 49.02 | 44.96 | 47.64 |
| 30 | 76.94 | 85.61 | 75.83 | 75.41 |
| 40 | 110.57 | 117.78 | 101.10 | 102.07 |
| 50 | 124.06 | 147.91 | 128.13 | 128.90 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ma, A.; Filippi, A.M.; Wang, Z.; Yin, Z. Hyperspectral Image Classification Using Similarity Measurements-Based Deep Recurrent Neural Networks. Remote Sens. 2019, 11, 194. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11020194

Ma, Andong, Anthony M. Filippi, Zhangyang Wang, and Zhengcong Yin. 2019. "Hyperspectral Image Classification Using Similarity Measurements-Based Deep Recurrent Neural Networks" Remote Sensing 11, no. 2: 194. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11020194