1. Introduction

The past few years have witnessed a dramatic leap in remotely sensed imaging. Nonetheless, it is sometimes difficult to obtain high-resolution satellite images because of hardware limitations [1]. Pansharpening is a technique that merges a high-resolution panchromatic (PAN) image with a low-resolution multispectral image (MSI) to synthesize a new high-resolution multispectral image (HRMSI). With the desired resolution, the fused image supports better interpretation in applications such as land-use classification [2,3], target recognition [4], detailed land monitoring [5], and change detection [6].

A number of pansharpening methods have been proposed, and they can generally be divided into four classes: component substitution (CS), multiresolution analysis (MRA), hybrid algorithms, and learning-based methods. The CS class includes principal component analysis (PCA) [7], the intensity-hue-saturation (IHS) transform [8], the Gram–Schmidt (GS) transform [9], the Brovey transform [10], etc. These algorithms are efficient in execution time and render the spatial details of the PAN with high fidelity. However, this class may lead to serious spectral distortion. The MRA class includes the decimated wavelet transform (DWT) [11], the “à-trous” wavelet transform (ATWT) [12], the Laplacian pyramid [13], contourlets [14], etc. This category is good at preserving spectral information, but the fusion result suffers from spatial misalignments. Hybrid methods combine the advantages of the two classes above, so that the fusion results balance the trade-off between spatial and spectral information. This class contains methods such as the guided filter in the PCA domain (GF-PCA) [15] and the non-separable wavelet frame transform (NWFT) [16]. Learning-based models search for the relationships between the MSI, the PAN, and the corresponding HRMSI. Since pansharpening is an ill-posed problem, the selection of prior constraints is critical to obtaining a reliable solution [17,18]. As such, pansharpening can be intrinsically viewed as an optimization problem. Representative methods of this category include matrix factorization [19], dictionary learning [20], the Bayesian model [21], etc. However, the performance of these methods depends heavily on the prior assumptions and the learning abilities, which can make them difficult to apply. Recently, deep learning (DL) has shown much potential in various image-processing applications such as scene classification [22], super-resolution [23,24], and face recognition [25], and it has become a thriving area in image pansharpening over the last four years.
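To make the component-substitution idea above concrete, the following is a minimal sketch of a Brovey-style fusion in NumPy. It is an illustration only, not the implementation used in the comparison experiments: the function name `brovey_pansharpen`, the equal-weight band mean used as the intensity component, and the assumption that the MSI has already been upsampled to the PAN grid are all choices made here for brevity.

```python
import numpy as np

def brovey_pansharpen(ms_up, pan, eps=1e-12):
    """Brovey-style component substitution (illustrative sketch).

    ms_up : (H, W, B) multispectral image, assumed already upsampled to the PAN grid.
    pan   : (H, W) panchromatic image on the same grid.
    """
    # Assumption: the intensity component is the unweighted mean of the bands.
    intensity = ms_up.mean(axis=2)
    # Per-pixel gain that replaces the MSI intensity with the PAN intensity.
    gain = pan / (intensity + eps)
    # Every band is scaled by the same gain, so band ratios are kept per pixel.
    return ms_up * gain[..., None]

if __name__ == "__main__":
    ms = np.ones((4, 4, 3)) * np.array([0.2, 0.4, 0.6])
    pan = np.full((4, 4), 0.8)
    fused = brovey_pansharpen(ms, pan)
    print(fused[0, 0])  # band values after injecting the PAN intensity
```

Because every band receives the same multiplicative gain, the spatial detail of the PAN is injected directly, which also hints at why CS methods can distort the spectrum when the PAN does not match the MSI intensity.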

The basic idea of DL-based methods is to find the image priors via an end-to-end mapping learned from training samples of MSI, PAN, and HRMSI, by means of several convolutional and activation layers [26]. The earliest DL-based pansharpening work can be traced back to the sparse auto-encoder (SAE) method of [27]. In [28,29], to resolve the super-resolution (SR) problem, the authors propose the SRCNN model and open the door to image restoration based on convolutional neural networks (CNNs). Since then, CNNs have been extensively adopted for image fusion tasks. For instance, [30] uses SRCNN for nonlinear pansharpening, demonstrating the superiority of the CNN approach over traditional algorithms. Variant structures such as the residual network [31,32], the very deep CNN [33], and the generative adversarial model [34] have also been designed to solve the pansharpening problem. To obtain spatial enhancement and spectral preservation simultaneously, [35] proposes a 3D-CNN framework for image fusion and [36] designs a two-branch pansharpening network in which the spatial and spectral information is processed separately. Apart from developments in network architecture, some methods exploit other characteristics of the image to improve the fusion performance. In [30], some radiometric indices from the MSI are extracted as the input. In [37], the authors train the model with the high-pass elements of the PAN to reduce the training burden and mitigate the quantitative deviation between different satellites. In [38], image patches are clustered according to their geometric attributes and processed via multiple fusion networks, which significantly improves the pansharpening accuracy. Combining DL methods with traditional pansharpening algorithms is also a research topic. For instance, [39] combines the CNN with the MRA framework, and [40] combines DL with the GS approach. To sum up, DL is a powerful tool for image pansharpening and achieves state-of-the-art results [41]. The advantages of the DL model manifest mainly in three aspects. First, it adaptively extracts effective features to obtain the best performance, instead of relying on handcrafted design. Second, it automatically models the nonlinear relationship from the training set, rather than relying on complex prior assumptions. Third, it focuses on the local similarities between the input and the target, so the reconstruction result is able to preserve the spatial details.

However, despite the superiority of DL-based pansharpening, some problems that are not discussed in the abovementioned DL methods can still be obstacles in practice. First, because external high-resolution examples are unavailable, DL methods tend to construct sufficient training samples by downscaling the MSI and PAN themselves. However, most existing data-generation methods simply adopt a default blur (i.e., a fixed Gaussian kernel) rather than obeying the true point spread function (PSF) of the MSI. Training on samples derived from the wrong blur kernel leads to serious deterioration of the pansharpening results [42]. Some methods adopt the PSF directly from the parameters of the satellite, which is not feasible for different or even unknown sources [43]. Second, DL-based image pansharpening is sensitive to local shifts, and an inaccurate overlap between the original MSI and PAN may have devastating effects on the fusion results. Nevertheless, traditional coregistration methods rely on the orthorectification provided by a digital surface model and on accurate ground control-point selection [44], which require expensive manual work.

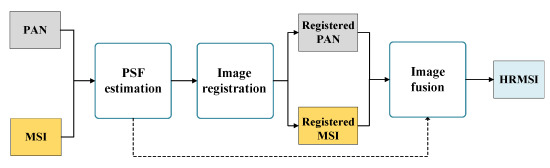

To solve the abovementioned problems and improve the adaptability of DL methods, in this paper we propose a deep self-learning (DSL) model for pansharpening. The DSL algorithm can be divided into three steps. First, to adaptively estimate the PSF of the MSI, which is the kernel that degrades its higher-resolution counterpart, we propose a learning-based PSF estimation technique to predict the true blur kernel of the MSI. We design a deep neural network named CNN-1 to learn the relationship between the MSI and its PSF. Second, we develop an image alignment method to obtain the correct pixel-to-pixel overlap, where the spatial attributes used by the registration metrics are extracted by edge detection. It is an unsupervised technique that does not require any manual labor. Finally, we construct a simple and flexible network named CNN-2 to learn the prior relationship between the training samples. We establish the training set by downscaling the original MSI and PAN with the estimated PSF and train CNN-2 in the down-sampled domain. With the trained CNN-2, we obtain the pansharpening result from the original MSI and PAN. Compared with the existing literature, the contributions of this paper are threefold:

We propose a CNN-1 for the PSF calculation, which can directly estimate the blur kernel of the MSI without its higher-resolution counterpart.

We develop an edge-detection-based algorithm for unsupervised image registration with the pixel shifts. The method ensures the same overlaps between the MSI and PAN at the pixel level.

We construct the training set using the estimated PSF and present a CNN-2 to learn the end-to-end pansharpening. This enables the model to adaptively learn the mapping for any dataset.
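The edge-based, pixel-shift registration named in the second contribution can be sketched as follows. This is a simplified stand-in rather than the method detailed in Section 2: the gradient-magnitude edge detector, the correlation score, the brute-force search over circular integer shifts, and the names `edge_map` and `estimate_shift` are all assumptions introduced here for illustration.

```python
import numpy as np

def edge_map(img):
    """Gradient-magnitude edge strength (assumed stand-in for the edge detector)."""
    gy, gx = np.gradient(img.astype(float))
    return np.hypot(gx, gy)

def estimate_shift(reference, moving, max_shift=5):
    """Return the integer (dy, dx) that best aligns `moving` to `reference`.

    The shift is applied as np.roll(moving, (dy, dx), axis=(0, 1)); the score is
    the correlation between the two edge maps (an assumed registration metric).
    """
    ref_e = edge_map(reference)
    mov_e = edge_map(moving)
    best, best_score = (0, 0), -np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            shifted = np.roll(mov_e, (dy, dx), axis=(0, 1))
            score = np.corrcoef(ref_e.ravel(), shifted.ravel())[0, 1]
            if score > best_score:
                best, best_score = (dy, dx), score
    return best

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.random((64, 64))
    mov = np.roll(ref, (2, -3), axis=(0, 1))  # synthetic misalignment
    print(estimate_shift(ref, mov))
```

No ground control points or manual labels enter the search, which is the sense in which such a procedure is unsupervised; in practice both images would first be brought to a common grid before comparing edge maps.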

The remainder of this paper is organized as follows. Section 2 introduces the proposed DSL framework, where the PSF estimation, the image registration, and the image fusion are described in detail. Section 3 reports the experimental results and the corresponding analyses on the three remote sensing images. Section 4 discusses the essential parameters, and the conclusion is drawn in Section 5.

3. Experiments

3.1. Data Description

Three remotely sensed datasets are used in our experiments, including the GF-2, the GF-1, and the JL-1A satellite images. The details of the three images are described as follows.

GF-2 is a high-resolution optical earth observation satellite independently developed by China. It has two PAN/MSI cameras, with spatial resolutions of 1 m and 4 m, respectively. The MSI has four spectral channels: blue, green, red, and near-infrared (NIR). The data used in this paper cover the urban area of Guangzhou city, China, and were collected on 4 November 2016. Figure 5a,d depict the PAN and MSI of this data, respectively.

GF-1 is configured with two PAN/MSI cameras with spatial resolutions of 2 m and 8 m, respectively, and another four MSI cameras with 16 m resolution. The 8 m MSI has four spectral bands: blue, green, red, and NIR. In this paper, we adopt the 2 m PAN and the 8 m MSI as the experimental data, and the research region is Guangzhou city, China. The data were acquired on 24 October 2015. The PAN and MSI of this data are displayed in Figure 5b,e, respectively.

JL-1A was independently developed by China and launched in 2015. The satellite provides a PAN at 0.72 m and an MSI at 2.88 m. The MSI has three optical bands: blue, green, and red. The data in this paper cover the region of Qi’ao island in Zhuhai City, China, and were collected on 3 January 2017. Figure 5c,f show the PAN and MSI of the data, respectively.

3.2. Experimental Setup

To validate the performance of the proposed DSL framework, 10 state-of-the-art methods are used for comparison. The comparison algorithms are described in Table 1. The Matlab code is downloaded directly from public websites (https://github.com/sjtrny/FuseBox, http://openremotesensing.net/). The DL-based models are reproduced in Python according to the corresponding literature.

Two hardware environments, CPU and GPU, are used to conduct the experiments. The CPU environment consists of an Intel Core i7-6700 @ 3.40 GHz with 24 GB of DDR4 RAM, running Matlab 2017a on the Windows 10 operating system. The GPU environment consists of an NVIDIA Tesla K80 with 12 GB of RAM, running Python 3.6. The comparison methods that do not belong to the DL family are run on the CPU, and the DL methods are run on the GPU in the Keras (https://keras.io/) framework with the TensorFlow (https://www.tensorflow.org/) backend.

For the three satellite data in the experiments, the size of the MSI and PAN are set as and , respectively. However, we can not evaluate the pansharpening results of them since the high-resolution ground-truth is not available in practice. Therefore, we adopt the original multispectral satellite images as the ground-truth and downscale the original multispectral and panchromatic images to the lower-resolution with the predefined “true” to synthesize the MSI and PAN. To simulate different degradations, the “true” is predefined as 1.5, 2, and 4.5, respectively, for the three datasets. For convenience, the blur region is fixed as 21, because it is enough to contain most of the energy for the three kernels.
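The simulation protocol above (blur with a predefined kernel on a fixed 21-pixel support, then downscale) can be sketched in NumPy as follows. Several points are assumptions made for illustration: the kernel is taken to be an isotropic Gaussian whose width parameter is the predefined value (the symbol is lost in the text, so it is called `sigma` here), the decimation ratio of 4 follows from the PAN/MSI resolution ratios quoted in Section 3.1, and the names `gaussian_kernel`, `energy_inside`, and `degrade` are hypothetical.

```python
import numpy as np

def gaussian_kernel(size=21, sigma=2.0):
    """Normalized isotropic Gaussian kernel on a size x size support (assumed form)."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()

def energy_inside(size, sigma, big=201):
    """Fraction of a nearly untruncated Gaussian's mass inside the central window."""
    ax = np.arange(big) - (big - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    g /= g.sum()
    half, c = (size - 1) // 2, (big - 1) // 2
    frac_1d = g[c - half:c + half + 1].sum()
    return float(frac_1d ** 2)  # the 2D kernel is separable

def degrade(img, kernel, ratio=4):
    """Blur every band of an (H, W, B) image, then keep every `ratio`-th pixel."""
    pad = kernel.shape[0] // 2
    padded = np.pad(img, ((pad, pad), (pad, pad), (0, 0)), mode="reflect")
    # Window view has shape (H, W, B, k, k); the kernel is symmetric, so
    # correlation and convolution coincide.
    windows = np.lib.stride_tricks.sliding_window_view(padded, kernel.shape, axis=(0, 1))
    blurred = np.einsum("hwbij,ij->hwb", windows, kernel)
    return blurred[::ratio, ::ratio, :]

if __name__ == "__main__":
    for sigma in (1.5, 2.0, 4.5):
        print(sigma, energy_inside(21, sigma))
    rng = np.random.default_rng(0)
    msi = rng.random((64, 64, 4))
    print(degrade(msi, gaussian_kernel(21, 2.0)).shape)
```

Under the Gaussian assumption, `energy_inside` gives a quick way to check the statement that a support of 21 captures most of the energy for all three kernels, with the widest kernel being the limiting case.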

For the CNN-1-based PSF estimation, a series of blur kernels are predefined to generate the training set. For GF-2 and GF-1, n is set as 8 and is set as . For JL-1A, n is set as 6 and is set as , because this dataset is visually more blurry than the other two images and is intuitively less sensitive to slight kernel variations. To train CNN-1, the images are sliced into patches of pixels and 7688 training samples are generated. 20% of the samples are used to validate the network. We use the stochastic gradient descent (SGD) optimizer with a learning rate of 0.01 and a momentum of 0.9. The number of training epochs is set to 1000.

For the CNN-2 model, the training images are not sliced into small patches because the images are not large. Therefore, there is only one training sample and the model converges quickly. We use the Adam optimizer with the initial learning rate of 5 × 10, which is reduced by a factor of 2 if the loss does not decrease within 25 epochs. The number of training epochs is set to 1000.
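The learning-rate rule described for CNN-2 (halve the rate when the loss has not decreased for 25 epochs) can be written as a small framework-independent function. This is a sketch of the rule only: `plateau_schedule` is a hypothetical helper, and `initial_lr` is left as a parameter because the exponent of the initial rate is not legible in the text. In Keras, the `ReduceLROnPlateau` callback with `factor=0.5` and `patience=25` expresses a comparable policy.

```python
def plateau_schedule(losses, initial_lr, factor=0.5, patience=25):
    """Return the learning rate in effect after each epoch's loss is observed.

    The rate is multiplied by `factor` whenever `patience` consecutive epochs
    pass without the loss dropping below the best value seen so far.
    """
    lr = initial_lr
    best = float("inf")
    wait = 0
    history = []
    for loss in losses:
        if loss < best:
            best = loss   # improvement: remember it and reset the counter
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                lr *= factor  # plateau detected: reduce by a factor of 1/factor
                wait = 0
        history.append(lr)
    return history

if __name__ == "__main__":
    # Hypothetical loss curve: one improvement followed by a long plateau.
    losses = [1.0] + [1.0] * 60
    lrs = plateau_schedule(losses, initial_lr=1.0)
    print(lrs[24], lrs[25], lrs[50])
```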

To evaluate the quality of the obtained results, six quantitative indexes are used in this paper: root-mean-square error (RMSE), peak signal-to-noise ratio (PSNR), structural similarity (SSIM), spectral angle mapper (SAM), cross correlation (CC), and erreur relative globale adimensionnelle de synthèse (ERGAS) [17]. Additionally, we report the execution time of each method; for the DL-based methods, it denotes the training time. RMSE and PSNR measure the quantitative similarity between the generated and the reference images, SSIM reveals the structural similarity, CC indicates the correlation, and SAM and ERGAS measure the spectral preservation between the two signals. For PSNR, SSIM, and CC, a larger value indicates better performance; for RMSE, SAM, and ERGAS, a smaller value is better. The Matlab code for these metrics is publicly available (http://openremotesensing.net/). All pansharpening results are normalized before quantitative evaluation.
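For readers who want to reproduce the indexes outside Matlab, the following NumPy sketch gives common textbook forms of five of them (SSIM is omitted because it needs a windowed implementation). These are not the publicly available Matlab routines referenced above: the function names, the peak value of 1.0 for normalized images, the band-averaged CC, and the resolution ratio of 4 in ERGAS are assumptions made here.

```python
import numpy as np

def rmse(ref, est):
    """Root-mean-square error over all pixels and bands."""
    return float(np.sqrt(np.mean((ref - est) ** 2)))

def psnr(ref, est, peak=1.0):
    """Peak signal-to-noise ratio in dB; `peak` assumes images normalized to [0, 1]."""
    return float(20.0 * np.log10(peak / rmse(ref, est)))

def sam_degrees(ref, est, eps=1e-12):
    """Mean spectral angle (degrees) between per-pixel spectra of (H, W, B) images."""
    dot = np.sum(ref * est, axis=2)
    norm = np.linalg.norm(ref, axis=2) * np.linalg.norm(est, axis=2)
    cos = np.clip(dot / (norm + eps), -1.0, 1.0)
    return float(np.degrees(np.mean(np.arccos(cos))))

def cc(ref, est):
    """Pearson correlation per band, averaged over bands (assumed convention)."""
    vals = [np.corrcoef(ref[..., b].ravel(), est[..., b].ravel())[0, 1]
            for b in range(ref.shape[2])]
    return float(np.mean(vals))

def ergas(ref, est, ratio=4):
    """ERGAS = 100/ratio * sqrt(mean_b (RMSE_b / mean_b)^2), ratio = MSI/PAN pixel size."""
    band_rmse = np.sqrt(np.mean((ref - est) ** 2, axis=(0, 1)))
    band_mean = np.mean(ref, axis=(0, 1))
    return float(100.0 / ratio * np.sqrt(np.mean((band_rmse / band_mean) ** 2)))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.uniform(0.1, 0.9, size=(32, 32, 4))
    est = ref + 0.01  # hypothetical fused result with a constant offset
    print(rmse(ref, est), psnr(ref, est), sam_degrees(ref, est), cc(ref, est), ergas(ref, est))
```

Note that `psnr` is undefined for identical images (zero RMSE), so a practical evaluation script would guard that case.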

3.3. Experimental Results

3.3.1. Comparison with Pansharpening Methods

A group of state-of-the-art methods is compared with the proposed method. For a fair comparison, the images for those methods are all locally registered. For the DL-based methods, the training samples are generated using the degradation with the default blur-kernel parameter of 2.5. The quantitative results of the different methods for the three datasets are displayed in Table 2, Table 3 and Table 4. Figure 6, Figure 7 and Figure 8 show the corresponding visual results. Additionally, we display a zoomed-in subregion in the top-left corner for better legibility.

From Figure 6, Figure 7 and Figure 8, we can observe that the image quality of all results is significantly higher than that of bicubic interpolation, illustrating the effectiveness of the pansharpening procedure. However, a lack of spatial and spectral fidelity can still be observed among them. First, among the non-DL methods, PCA, GS, and CNMF suffer from serious spectral distortion, while Wavelet is inferior in the spatial domain. For instance, in Figure 6, the color of PCA and CNMF is much darker than the reference. CNMF tends to be blurry, while Wavelet can lead to some artifacts. Second, for the DL-based methods, PNN, DRPNN, and DSL obtain reasonable visual results in terms of spectral preservation. However, the comparison methods yield deteriorated spatial reconstruction. For example, unrealistic details can be observed for PANNET in Figure 6, where black patches are broadly distributed over the buildings. In Figure 6 and Figure 7, PNN and DRPNN also produce many artifacts, while in Figure 8 their results are more blurry than the reference. This is because, for those competitors, the blur kernels used for constructing the training samples deviate from the true one, and the wrong kernels significantly damage the fusion performance. If the kernels are larger than the true one (as for GF-2 and GF-1), the trained networks tend to over-sharpen the results and generate artifacts, while the results for smaller kernels (as for JL-1A) can be blurry. In contrast, the proposed DSL exhibits good performance on the three datasets in terms of both spectral restoration and spatial resolution. The reason is that DSL is adaptively trained with the true blur kernel of the given data, obtained using the proposed PSF estimation technique.

Table 2, Table 3 and Table 4 display the quantitative results of the comparison methods, with the best performances shown in bold. From the results, two observations can be drawn. First, the proposed DSL method obtains the best evaluation results on most of the indexes. For instance, for the three datasets, the PSNRs of DSL are 0.45, 0.93, and 0.30 higher than those of the second-best approach, respectively, demonstrating the high quantitative similarity achieved by the proposed method. In addition, the SSIM of the proposed DSL is significantly higher than that of the competitors, illustrating its superior spatial consistency. Moreover, although the SAM of the DSL scheme is slightly higher than that of PANNET for the JL-1A dataset, the ERGAS of DSL is lower than that of the competitors over all three images, demonstrating its effectiveness in spectral preservation. Furthermore, the spectral curves of four pixels for the GF-1 results are displayed in Figure 9. They are located at (512, 768), (727, 752), (127, 49), and (391, 496) in (x, y) coordinates, and they correspond to vegetation, water, bare soil, and concrete, respectively. From the spectral signatures, we find that the proposed DSL obtains the spectral curves most similar to the ground truth, whatever the object type. Moreover, the spectral similarities for water and bare soil are higher than those for vegetation and concrete. The above analyses indicate the effectiveness of DSL in both the spatial and the spectral domain. Second, the DL-based methods show deteriorated performance when they are trained on the default, wrong kernels. In Table 2, their results are even worse than those of several traditional CS approaches (e.g., PCA and GS), demonstrating the significance of using the right PSF for DL methods. In addition, the execution time of the DL algorithms is much longer than that of the others, even in the GPU environment. DSL costs less training time than DRPNN and PANNET, because it adopts a simpler network structure to learn the nonlinear mapping rather than a burdensome architecture.

3.3.2. Effectiveness of PSF Estimation Method

As the above analysis shows, the PSF exerts a significant influence on the performance of the fusion model. If the estimated kernel deviates from the true one, the fusion result can deteriorate severely. Thus, before comparing the pansharpening results, we should assess the effectiveness of the kernel prediction. However, it is difficult to compare the proposed algorithm directly with other PSF estimation methods. On the one hand, the proposed method can be regarded as an unsupervised technique that does not use a clearer MSI counterpart, and the number of unsupervised kernel estimation methods in the literature is limited. On the other hand, the code of most unsupervised methods, such as [57,58], is unfortunately not publicly available.

Therefore, the effectiveness of the proposed PSF method is evaluated from two aspects. First, we report the overall accuracy of the kernel prediction results for the three datasets. To this end, we slice the MSI into small patches and use the trained CNN-1 to predict the kernel of each of them. Then, we use the overall accuracy (OA) metric to validate the result. Notice that, with the adopted strategy, the true PSF can be obtained as long as more than 50% of the patches are correctly estimated. The OAs of the prediction results for the three datasets are displayed in Table 5. From the results, we can observe that the method achieves an OA of 92.86%, 90.84%, and 56.17% for the GF-2, GF-1, and JL-1A datasets, respectively. Since all three values exceed 50%, the proposed algorithm obtains the true kernels for all three datasets. This result indicates the effectiveness of CNN-1 in kernel estimation.
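The patch-level evaluation above can be illustrated with a short sketch. The name of the aggregation strategy is missing from the text, so a plurality vote over the per-patch predictions is assumed here, consistent with the statement that the true PSF is recovered once more than 50% of the patches are correct; `overall_accuracy` and `aggregate_kernel` are hypothetical helper names and the label values are made up for the example.

```python
import numpy as np

def overall_accuracy(pred_labels, true_label):
    """Fraction of patches whose predicted kernel class equals the true class."""
    pred_labels = np.asarray(pred_labels)
    return float(np.mean(pred_labels == true_label))

def aggregate_kernel(pred_labels):
    """Assumed aggregation: return the kernel class predicted by the most patches."""
    labels, counts = np.unique(np.asarray(pred_labels), return_counts=True)
    return labels[np.argmax(counts)]

if __name__ == "__main__":
    # Hypothetical per-patch predictions for an image whose true kernel class is 3.
    preds = [3] * 57 + [2] * 23 + [4] * 20
    print(overall_accuracy(preds, true_label=3))
    print(aggregate_kernel(preds))
```

Under this assumption, an OA only slightly above one half, as reported for JL-1A, is still sufficient for the image-level estimate to be correct, because no other class can collect more votes.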

Second, the effectiveness of the PSF estimation is also demonstrated by comparing against the DSL results obtained with the default kernel. The experimental setting is the same as for our DSL model, except for the adopted blur kernel. The results are displayed in Table 6. It can be observed that the results obtained with our PSF estimation significantly surpass those obtained with the default kernel. For instance, the PSNRs of the three datasets using our algorithm are 4.00, 1.85, and 1.19 higher than those of the method using the default kernel, respectively. Therefore, we conclude that our PSF estimation method plays an essential role in image pansharpening.

3.3.3. Comparison with Image Registration Methods

To investigate the effectiveness of our edge-based image registration method, we compare it with other local registration algorithms. Since it is difficult to compare registration performance directly, we use the pansharpening results of the DSL model to illustrate the efficacy of the different methods. The comparison method is taken from [44] (we denote it as Aiazzi’s). It is beneficial for recovering local misalignments in CS- and MTF-based algorithms; however, it has not been used in the DL domain. Furthermore, we also run the experiments without local registration (using the original coarse centroid).

Table 7, Table 8 and Table 9 show the results of the different local registration methods for the three datasets. It can be observed that the proposed edge-based method obtains the best performance, which illustrates the effectiveness of our registration algorithm. For the GF-2 and GF-1 datasets, our method achieves slightly better results than Aiazzi’s. For the JL-1A dataset, however, Aiazzi’s method deteriorates seriously, because it is not suitable for seriously blurred images. In contrast, our method provides satisfactory results on all three datasets.