Wetland Classification Based on a New Efficient Generative Adversarial Network and Jilin-1 Satellite Image

Abstract

1. Introduction

- We propose a new GAN architecture, ShuffleGAN, for wetland classification. ShuffleGAN is a semi-supervised method that requires only a small number of labeled samples. Moreover, it is more efficient than the traditional GAN because it adopts lightweight ShuffleNet units.

- We perform species-level identification rather than coarse classification (e.g., wetland versus non-wetland). Different tree species located in the wetland (e.g., Ficus, Alstonia scholaris and Delonix) are separated using ShuffleGAN and a Jilin-1 satellite image. Species-level classification yields a detailed distribution of the wetland vegetation, thus providing more valuable information for fine-grained analysis of the spatiotemporal distribution patterns of the various tree species.
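ShuffleNet units rely on a channel shuffle operation to mix information between channel groups. The following is a minimal NumPy sketch of that single operation, not the authors' implementation; the function name `channel_shuffle`, the channels-last layout and the group count of 2 are assumptions made for illustration.

```python
import numpy as np

def channel_shuffle(x: np.ndarray, groups: int = 2) -> np.ndarray:
    """Interleave channels across groups for an (H, W, C) feature map."""
    h, w, c = x.shape
    if c % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    # View channels as (groups, channels_per_group), swap those two axes,
    # then flatten back so channels from different groups alternate.
    x = x.reshape(h, w, groups, c // groups)
    x = x.transpose(0, 1, 3, 2)
    return x.reshape(h, w, c)

# Toy feature map whose channel values equal their channel index.
feature_map = np.broadcast_to(np.arange(8), (16, 16, 8)).copy()
shuffled = channel_shuffle(feature_map, groups=2)
channel_order = shuffled[0, 0].tolist()
```

With two groups, the channels of the first group (0 to 3) end up interleaved with those of the second group (4 to 7), so a following grouped or split operation sees features from both.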

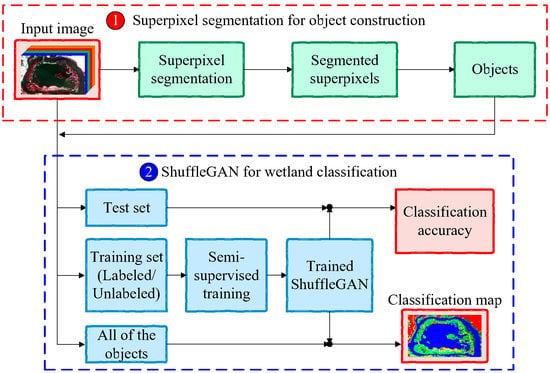

2. The Proposed Method

2.1. Superpixel Segmentation for Object Construction

2.2. ShuffleGAN for Wetland Classification

3. Experiments and Analysis

3.1. Study Area and Dataset

3.2. Experimental Settings

- SVM: the classical SVM with a radial basis function (RBF) kernel, using spectral features;

- SVM-s: the object-based SVM, in which objects are generated by SLIC and the spectral-spatial features of each object are computed as the mean, standard deviation and entropy;

- LapSVM: the classical graph-based semi-supervised learning method, using spectral features;

- LapSVM-s: the object-based LapSVM, in which objects are generated by SLIC and the spectral-spatial features of each object are computed as the mean, standard deviation and entropy;

- CNN: the CNN classifier, which consists of Conv, BN, ReLU, pooling, FC and softmax layers;

- GAN-or: the original semi-supervised GAN, which contains neither ShuffleNet units nor depthwise separable convolutions;

- GAN-dw: the semi-supervised GAN, which uses depthwise separable convolutions.
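The object-based baselines (SVM-s and LapSVM-s) describe each SLIC object by the mean, standard deviation and entropy of its pixels. A minimal NumPy sketch of such a feature extraction follows. The function names `band_entropy` and `object_features`, the 16-bin histogram used for the entropy, and the toy label map are assumptions; in practice the label map would come from a SLIC implementation.

```python
import numpy as np

def band_entropy(values: np.ndarray, bins: int = 16) -> float:
    """Shannon entropy (bits) of a histogram of pixel values in [0, 1]."""
    counts, _ = np.histogram(values, bins=bins, range=(0.0, 1.0))
    probs = counts[counts > 0] / counts.sum()
    return float(-(probs * np.log2(probs)).sum())

def object_features(image: np.ndarray, segments: np.ndarray) -> np.ndarray:
    """Return one row per object: [means, stds, entropies] over all bands.

    image:    (H, W, B) array scaled to [0, 1]
    segments: (H, W) integer label map, e.g. produced by SLIC
    """
    rows = []
    for label in np.unique(segments):
        pixels = image[segments == label]  # (n_pixels, B)
        means = pixels.mean(axis=0)
        stds = pixels.std(axis=0)
        ents = np.array([band_entropy(pixels[:, b])
                         for b in range(pixels.shape[1])])
        rows.append(np.concatenate([means, stds, ents]))
    return np.vstack(rows)

# Toy example: a 4-band 8 x 8 image split into two rectangular "objects".
rng = np.random.default_rng(0)
image = rng.random((8, 8, 4))
segments = np.zeros((8, 8), dtype=int)
segments[:, 4:] = 1
features = object_features(image, segments)
```

Each object yields a vector of length 3 × B (here 12), which could then be passed to a classifier in place of the raw per-pixel spectra.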

3.3. Experimental Results

4. Discussions

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

| Layer | Output Size | Kernel Size | Stride | Output Channel |
|---|---|---|---|---|
| Input | 100 × 1 | – | – | 1 |
| FC | 512 × 1 | – | – | 1 |
| Resize | 8 × 8 | – | – | 8 |
| BN | 8 × 8 | – | – | 8 |
| Upsampling | 16 × 16 | – | – | 8 |
| ShuffleNet unit-1 | 16 × 16 | 3 × 3 | 1 | 8 |
| Upsampling | 32 × 32 | – | – | 8 |
| ShuffleNet unit-1 | 32 × 32 | 3 × 3 | 1 | 8 |
| Conv | 32 × 32 | 3 × 3 | 1 | 4 |
| Tanh | 32 × 32 | – | – | 4 |
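The layer sizes in the table above (a 100-dimensional input mapped to a 32 × 32 × 4 output) can be traced with a short NumPy sketch. It only follows tensor shapes: the weights are random stand-ins, BN and the ShuffleNet units are treated as shape-preserving and skipped, the final 3 × 3 convolution is replaced by a per-pixel channel projection, and nearest-neighbour upsampling is an assumption because the table does not state the interpolation mode.

```python
import numpy as np

rng = np.random.default_rng(0)

def upsample2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of an (H, W, C) array (assumed mode)."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

z = rng.standard_normal(100)             # Input: 100 x 1
w_fc = rng.standard_normal((100, 512))   # FC: 100 -> 512 (random stand-in)
x = z @ w_fc                             # 512 x 1
x = x.reshape(8, 8, 8)                   # Resize: 8 x 8 with 8 channels
x = upsample2x(x)                        # 16 x 16 x 8; ShuffleNet unit-1 keeps shape
x = upsample2x(x)                        # 32 x 32 x 8; ShuffleNet unit-1 keeps shape
w_out = rng.standard_normal((8, 4))      # stand-in for the conv: 8 -> 4 channels
x = np.tanh(x @ w_out)                   # Tanh keeps values in [-1, 1]
output_shape = x.shape
```

The 512 outputs of the FC layer are exactly 8 × 8 × 8, which is why the resize step needs no extra parameters.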

| Layer | Output Size | Kernel Size | Stride | Output Channel |
|---|---|---|---|---|
| Input | 32 × 32 | – | – | 4 |
| Conv | 16 × 16 | 3 × 3 | 2 | 16 |
| ShuffleNet unit-2 | 8 × 8 | 3 × 3 | 2 | 32 |
| ShuffleNet unit-1 | 8 × 8 | 3 × 3 | 1 | 32 |
| ShuffleNet unit-2 | 4 × 4 | 3 × 3 | 2 | 64 |
| ShuffleNet unit-1 | 4 × 4 | 3 × 3 | 1 | 64 |
| Conv | 4 × 4 | 1 × 1 | 1 | 128 |
| Average pooling | 1 × 1 | 4 × 4 | – | 128 |
| FC | 1 × 1 | – | – | 128 |
| Sigmoid | 1 × 1 | – | – | 1 |
| Softmax | 1 × 1 | – | – | 10 |
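The last rows of the table above suggest two outputs computed from the same 128-dimensional feature: a single sigmoid value and a 10-way softmax. A minimal NumPy sketch of such a two-head output follows. The weight matrices are random stand-ins, and reading the sigmoid as a real/fake score and the softmax as class probabilities is an interpretation of the layer list, not something the table states explicitly.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(a: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-a))

def softmax(a: np.ndarray) -> np.ndarray:
    e = np.exp(a - a.max())  # subtract the max for numerical stability
    return e / e.sum()

features = rng.standard_normal(128)           # 1 x 1 x 128 feature after FC
w_adv = rng.standard_normal((128, 1)) * 0.1   # hypothetical real/fake head
w_cls = rng.standard_normal((128, 10)) * 0.1  # hypothetical 10-class head

real_score = sigmoid(features @ w_adv)        # shape (1,), value in (0, 1)
class_probs = softmax(features @ w_cls)       # shape (10,), sums to 1
```

Sharing the feature extractor between the two heads is what lets unlabeled and generated samples influence the classifier in a semi-supervised GAN.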

| Class | Name | Number of samples (NoS) | Training | Validation | Test |
|---|---|---|---|---|---|
| 1 | Ficus | 417 | 42 | 25 | 350 |
| 2 | Alstonia scholaris | 138 | 14 | 9 | 115 |
| 3 | Delonix | 154 | 16 | 6 | 132 |
| 4 | Bauhinia | 484 | 49 | 24 | 411 |
| 5 | Camphor tree | 135 | 14 | 9 | 112 |
| 6 | Metasequoia | 431 | 44 | 11 | 376 |
| 7 | Palm tree | 260 | 26 | 4 | 230 |
| 8 | Lake/River | 1200 | 120 | 87 | 993 |
| 9 | Road | 833 | 84 | 31 | 718 |
| 10 | Building | 1403 | 141 | 36 | 1226 |
| Total | | 5455 | 550 | 242 | 4663 |
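The split in the table above can be checked with a few lines of Python. The sketch assumes that every sample not used for training or validation is used for testing, which is consistent with the totals in the table; the variable names are illustrative.

```python
# Per-class counts from the dataset table: (name, NoS, training, validation).
classes = [
    ("Ficus", 417, 42, 25),
    ("Alstonia scholaris", 138, 14, 9),
    ("Delonix", 154, 16, 6),
    ("Bauhinia", 484, 49, 24),
    ("Camphor tree", 135, 14, 9),
    ("Metasequoia", 431, 44, 11),
    ("Palm tree", 260, 26, 4),
    ("Lake/River", 1200, 120, 87),
    ("Road", 833, 84, 31),
    ("Building", 1403, 141, 36),
]

# Assumption: test = NoS - training - validation for every class.
test_counts = {name: nos - train - val for name, nos, train, val in classes}

total_nos = sum(nos for _, nos, _, _ in classes)
total_train = sum(train for _, _, train, _ in classes)
total_val = sum(val for _, _, _, val in classes)
total_test = sum(test_counts.values())
train_fraction = total_train / total_nos

print(total_nos, total_train, total_val, total_test)
print(f"training fraction: {train_fraction:.1%}")
```

Under that assumption the derived test counts sum to 4663, with 1226 test samples for the Building class, and roughly 10% of the labeled samples are used for training.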

| Class | SVM | SVM-s | LapSVM | LapSVM-s | CNN | GAN-or | GAN-dw | ShuffleGAN |
|---|---|---|---|---|---|---|---|---|
| 1 | 17.43 | 56.00 | 0.86 | 64.57 | 73.14 | 78.57 | 81.43 | 83.43 |
| 2 | 28.70 | 59.13 | 17.39 | 63.48 | 65.22 | 73.04 | 71.30 | 80.00 |
| 3 | 43.18 | 62.12 | 40.91 | 62.12 | 67.42 | 68.94 | 41.67 | 76.52 |
| 4 | 37.71 | 71.05 | 53.77 | 75.67 | 71.53 | 88.32 | 93.67 | 82.24 |
| 5 | 10.71 | 29.46 | 4.46 | 33.93 | 35.71 | 47.32 | 34.82 | 51.79 |
| 6 | 49.47 | 69.41 | 53.19 | 64.63 | 73.94 | 72.34 | 63.30 | 80.59 |
| 7 | 52.61 | 18.26 | 42.61 | 35.22 | 49.13 | 68.26 | 53.04 | 62.17 |
| 8 | 86.40 | 96.68 | 95.87 | 95.27 | 92.45 | 98.29 | 97.99 | 98.79 |
| 9 | 74.79 | 58.08 | 80.08 | 60.72 | 63.79 | 55.57 | 58.77 | 60.17 |
| 10 | 87.03 | 88.09 | 88.58 | 88.50 | 93.15 | 92.01 | 89.40 | 94.29 |
| OA (%) | 66.20 | 73.58 | 68.93 | 75.51 | 78.55 | 81.45 | 79.28 | 83.55 |
| AA (%) | 48.80 | 60.83 | 47.77 | 64.41 | 68.55 | 74.27 | 68.54 | 77.00 |
| κ (×100) | 59.31 | 68.45 | 62.14 | 70.83 | 74.54 | 77.95 | 75.35 | 80.40 |
| Time (s) | 1.03 | 2.97 | 3.54 | 4.86 | 752.14 | 818.41 | 684.55 | 717.45 |
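As a consistency check on the table above, the average accuracy (AA) is the unweighted mean of the per-class accuracies, and the overall accuracy (OA) can be approximated by weighting each class accuracy by its number of test samples. The sketch below does this for the ShuffleGAN column. The per-class test counts are taken as NoS minus training minus validation samples, which is an assumption; small deviations from the reported OA are possible because the per-class values are rounded.

```python
# Per-class accuracy (%) of ShuffleGAN for classes 1-10, from the results table.
acc = [83.43, 80.00, 76.52, 82.24, 51.79, 80.59, 62.17, 98.79, 60.17, 94.29]

# Test samples per class, assumed to be NoS - training - validation
# (e.g. Building: 1403 - 141 - 36 = 1226).
n_test = [350, 115, 132, 411, 112, 376, 230, 993, 718, 1226]

# AA: every class counts equally, regardless of its size.
aa = sum(acc) / len(acc)

# OA: classes are weighted by how many test samples they contain.
oa = sum(a * n for a, n in zip(acc, n_test)) / sum(n_test)

print(f"AA = {aa:.2f}, OA = {oa:.2f}")
```

The gap between OA and AA reflects class imbalance: large, easy classes such as Lake/River and Building raise OA, while small classes such as Camphor tree pull AA down.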

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

He, Z.; He, D.; Mei, X.; Hu, S. Wetland Classification Based on a New Efficient Generative Adversarial Network and Jilin-1 Satellite Image. Remote Sens. 2019, 11, 2455. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11202455