A Method for Dehazing Images Obtained from Low Altitudes during High-Pressure Fronts

Abstract

1. Introduction

- Errors in quantitative analyses (e.g., determination of albedo value, surface temperature and vegetation indices);

- Difficulties in comparing multi-temporal data series;

- Challenges in comparing radiometric in situ measurements with values from satellite, aerial or low-altitude imagery;

- Problems in comparative analyses of spectral signatures in time and/or space;

- A decrease in the accuracy of multispectral imagery classification [17].

1.1. Related Works

1.2. Research Purpose

2. Materials

2.1. Test Area

2.2. Data Acquisition

2.3. Meteorological Conditions

2.3.1. The Synoptic Situation in the Mieruniszki Test Area

2.3.2. The Synoptic Situation in the Kościelisko Test Area

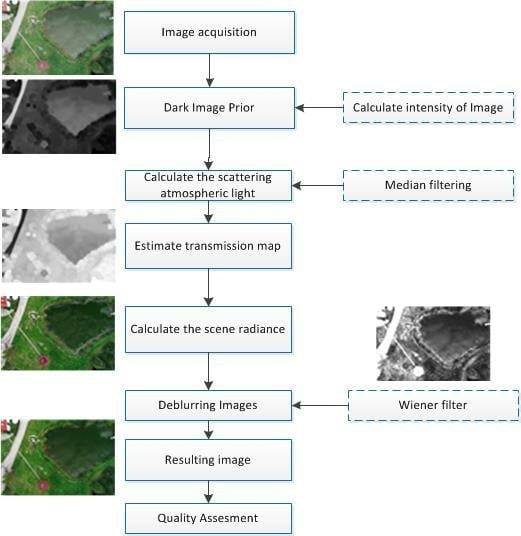

3. Methodology

3.1. Dark Image Prior

3.2. Calculate the Atmospheric Scattering Light

3.3. Estimating Transmission Map

- In the first step, Equation (3) was normalised by the atmospheric light. In this way, each R, G, B band of the image can be normalised independently.

- Under the adopted assumption that the transmission is constant within a local patch (block) and that the atmospheric light value is known, the dark channel can be determined using the min operator [29].

- In the next step, it is assumed that the dark channel of the dehazed image tends to zero and that the atmospheric light value is always positive.

- Based on the above, the transmission value for the block (patch) can be determined.
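The steps above follow the dark channel prior of He et al. [29]. As a minimal sketch, assuming that Equation (3) is the standard haze model $I(x) = J(x)\,t(x) + A\,(1 - t(x))$, the patch transmission would take the form $\tilde{t}(x) = 1 - \min_{y \in \Omega(x)} \min_{c} I^{c}(y) / A^{c}$. The symbols $I$, $J$, $t$, $A$ and the patch $\Omega(x)$ come from that formulation rather than from this text, and the function names, the 15 × 15 default patch and the edge padding below are illustrative assumptions only.

```python
import numpy as np


def local_min(channel, patch):
    """Minimum over a patch x patch window (odd patch), with edge padding."""
    r = patch // 2
    padded = np.pad(channel, r, mode="edge")
    h, w = channel.shape
    out = np.full((h, w), np.inf)
    # Slide the window by taking the element-wise minimum of shifted views.
    for dy in range(patch):
        for dx in range(patch):
            out = np.minimum(out, padded[dy:dy + h, dx:dx + w])
    return out


def estimate_transmission(image, atmospheric_light, patch=15):
    """Patch-wise transmission from the dark channel of the normalised image.

    image: H x W x 3 float array (R, G, B), same scale as atmospheric_light.
    atmospheric_light: three positive values, one per band (assumed known).
    """
    a = np.asarray(atmospheric_light, dtype=float)
    if np.any(a <= 0):
        raise ValueError("atmospheric light must be positive in every band")
    # Step 1: normalise each band independently by its atmospheric light.
    normalised = np.asarray(image, dtype=float) / a
    # Step 2: dark channel = min over bands, then min over the local patch.
    dark = local_min(normalised.min(axis=2), patch)
    # Steps 3-4: with the haze-free dark channel taken as zero, t = 1 - dark.
    return np.clip(1.0 - dark, 0.0, 1.0)
```

A patch that contains at least one very dark pixel yields a transmission close to 1 (little haze), whereas a patch whose every pixel approaches the atmospheric light yields a transmission close to 0.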

3.4. Denoising Images

- In the first step, the filter kernel size was set empirically to 3 × 3 pixels. The mean and variance were determined for the neighbourhood of each pixel of the image, where the neighbourhood is the N × M local neighbourhood of each point (pixel) of the image.

- In the second step, the noise variance is determined for the identified noise waveforms or, in the absence of such data, the mean of the variances from all local neighbourhoods of pixels in the filtered channel (image) is calculated.
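The two steps above match the local-statistics stage of an adaptive Wiener-type filter, as described by Lim and implemented in the MATLAB Image Processing Toolbox (both appear in the reference list). The following is a minimal sketch under that assumption. The final per-pixel update, the edge padding and all function names are assumptions, because the corresponding formulas are not reproduced in the text.

```python
import numpy as np


def box_mean(channel, size=3):
    """Mean over a size x size neighbourhood (odd size), with edge padding."""
    channel = np.asarray(channel, dtype=float)
    r = size // 2
    padded = np.pad(channel, r, mode="edge")
    h, w = channel.shape
    total = np.zeros((h, w), dtype=float)
    for dy in range(size):
        for dx in range(size):
            total += padded[dy:dy + h, dx:dx + w]
    return total / (size * size)


def adaptive_wiener(channel, size=3, noise_var=None):
    """Adaptive local-statistics filter for a single image channel."""
    channel = np.asarray(channel, dtype=float)
    # Step 1: local mean and local variance around every pixel.
    mu = box_mean(channel, size)
    var = np.maximum(box_mean(channel ** 2, size) - mu ** 2, 0.0)
    # Step 2: if the noise variance is unknown, use the mean local variance.
    if noise_var is None:
        noise_var = var.mean()
    # Assumed final step: shrink each pixel towards its local mean, strongly
    # in flat regions (var <= noise) and weakly near edges (var >> noise).
    num = np.maximum(var - noise_var, 0.0)
    den = np.maximum(var, noise_var)
    gain = np.divide(num, den, out=np.zeros_like(num), where=den > 0)
    return mu + gain * (channel - mu)
```

With a very large `noise_var` the output collapses to the local mean, and with `noise_var=0` textured pixels pass through unchanged, which brackets the behaviour expected of the 3 × 3 kernel.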

4. Results and Quality Assessment

4.1. Quality Assessment

4.1.1. Visual Analysis

4.1.2. Quality Metrics Assessment

PSNR Assessment

RMSE Assessment

SSIM Assessment

Universal Quality Index Assessment

Correlation Assessment

Entropy Comparison Analysis

A Statistical Significance Test of Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Dalla Mura, M.; Benediktsson, J.A.; Waske, B.; Bruzzone, L. Morphological attribute profiles for the analysis of very high resolution images. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3747–3762.

- Woloszyn, E. An Overview of Meteorology and Climatology; Gdansk University of Technology: Gdańsk, Poland, 2009; p. 26. ISBN 978-83-7775-237-1.

- Mazur, A.; Kacprzak, M.; Kubiak, K.; Kotlarz, J.; Skocki, K. The influence of atmospheric light scattering on reflectance measurements during photogrammetric survey flights at low altitudes over forest areas. Leśne Prace Badawcze 2018, 79.

- Shao, S.; Guo, Y.; Zhang, Z.; Yuan, H. Single Remote Sensing Multispectral Image Dehazing Based on a Learning Framework. Math. Probl. Eng. 2019.

- Chavez, P.S. Atmospheric, solar, and M.T.F. corrections for ERTS digital imagery. Proc. Am. Soc. Photogramm. 1975, 69, 459–479.

- Chavez, P.S., Jr. An Improved Dark-Object Subtraction Technique for Atmospheric Scattering Correction of Multispectral Data. Remote Sens. Environ. 1988, 24, 459–479.

- Zarco-Tejada, P.J.; Morales, A.; Testi, L.; Villalobos, F.J. Spatio-temporal patterns of chlorophyll fluorescence and physiological and structural indices acquired from hyperspectral imagery as compared with carbon fluxes measured with eddy covariance. Remote Sens. Environ. 2013, 133, 102–115.

- Huang, Y.; Ding, W.; Li, H. Haze removal for UAV reconnaissance images using layered scattering model. Chin. J. Aeronaut. 2016, 29, 502–511.

- Tagle Casapia, M.X. Study of Radiometric Variations in Unmanned Aerial Vehicle Remote Sensing Imagery for Vegetation Mapping. Master’s Thesis, Lund University, Lund, Sweden, 2017.

- Richter, R. A spatially-adaptive fast atmospheric correction algorithm. Int. J. Remote Sens. 1996, 17, 1201–1214.

- Richter, R. Atmospheric correction of DAIS hyperspectral image data. Comput. Geosci. 1996, 22, 785–793.

- Osińska-Skotak, K. Wpływ korekcji atmosferycznej na wyniki cyfrowej klasyfikacji. Acta Scientiarum Polonorum Geodesia et Descriptio Terrarum 2005, 4, 41–53.

- Jakomulska, A.; Sobczak, M. Radiometric correction of satellite images—Methodology and exemplification. Teledetekcja Srodowiska 2001, 32, 152–171.

- Jones, S.; Reinke, K. (Eds.) Innovations in Remote Sensing and Photogrammetry; Springer Science & Business Media: Berlin, Germany, 2009.

- Richards, J.A. Remote Sensing Digital Image Analysis; Springer: Berlin, Germany, 2006; p. 437.

- Osinska-Skotak, K. The importance of radiometric correction in satellite images processing. Arch. Photogramm. Cartogr. Remote Sens. 2007, 17, 577–590.

- Gao, B.C.; Heidebrecht, K.B.; Goetz, A.F. Derivation of scaled surface reflectances from AVIRIS data. Remote Sens. Environ. 1993, 44, 165–178.

- Qu, Z.; Kindel, B.; Goetz, A.F.H. The High Accuracy Atmospheric Correction for Hyperspectral Data (HATCH) model. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1223–1231.

- Matthew, M.; Adler-Golden, S.; Berk, A.; Felde, G.; Anderson, G.; Gorodetzky, D.; Paswaters, S.; Shippert, M. Atmospheric Correction of Spectral Imagery: Evaluation of the FLAASH Algorithm with AVIRIS Data. In Proceedings of the 31st Applied Imagery Pattern Recognition Workshop, Washington, DC, USA, 16–18 October 2002; pp. 157–163.

- Black, M.; Fleming, A.; Riley, T.; Ferrier, G.; Fretwell, P.; McFee, J.; Achal, S.; Diaz, A.U. On the atmospheric correction of Antarctic airborne hyperspectral data. Remote Sens. 2014, 6, 4498–4514.

- Berk, A.; Anderson, G.P.; Acharya, P.K.; Bernstein, L.S.; Muratov, L.; Lee, J.; Fox, M.J.; Adler-Golden, S.M.; Chetwynd, J.H.; Hoke, M.L.; et al. MODTRAN5: A reformulated atmospheric band model with auxiliary species and practical multiple scattering options. Proc. SPIE 2005.

- Głowienka, E. Comparison of atmospheric correction methods for hyperspectral sensor data. Arch. Photogramm. Cartogr. Remote Sens. 2008, 18a, 121–130.

- Makarau, A.; Richter, R.; Muller, R.; Reinartz, P. Haze detection and removal in remotely sensed multispectral imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 5895–5905.

- Zhang, Y.; Guindon, B.; Cihlar, J. An image transform to characterize and compensate for spatial variations in thin cloud contamination of Landsat images. Remote Sens. Environ. 2002, 82, 173–187.

- Seow, M.J.; Asari, V.K. Ratio rule and homomorphic filter for enhancement of digital colour image. Neurocomputing 2006, 69, 954–958.

- Kedzierski, M.; Wierzbicki, D. Methodology of improvement of radiometric quality of images acquired from low altitudes. Measurement 2016, 92, 70–78.

- Zhou, J.; Zhou, F. Single image dehazing motivated by Retinex theory. In Proceedings of the 2013 2nd International Symposium on Instrumentation and Measurement, Sensor Network and Automation (IMSNA), Toronto, ON, Canada, 23–24 December 2013; pp. 243–247.

- Wang, W.; Guan, W.; Li, Q.; Qi, M. Multiscale single image dehazing based on adaptive wavelet fusion. Math. Probl. Eng. 2015, 2015, 131082.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–26 June 2009; pp. 1956–1963.

- Xie, B.; Guo, F.; Cai, Z. Universal strategy for surveillance video defogging. Opt. Eng. 2012, 51, 1–7.

- Park, D.; Han, D.; Ko, H. Single image haze removal with WLS-based edge-preserving smoothing filter. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 2469–2473.

- Tan, R.T. Visibility in bad weather from a single image. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Yeh, C.; Kang, L.; Lee, M.; Lin, C. Haze effect removal from image via haze density estimation in optical model. Opt. Express 2013, 21, 27127–27141.

- Fattal, R. Single image dehazing. ACM Trans. Graph. 2008, 72, 72:1–72:9.

- Berman, D.; Treibitz, T.; Avidan, S. Non-local image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1674–1682.

- Tarel, J.P.; Hautiere, N. Fast visibility restoration from a single color or gray level image. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2201–2208.

- Oakley, J.P.; Satherley, B.L. Improving image quality in poor visibility conditions using a physical model for contrast degradation. IEEE Trans. Image Process. 1998, 7, 167–179.

- Kedzierski, M.; Wierzbicki, D.; Sekrecka, A.; Fryskowska, A.; Walczykowski, P.; Siewert, J. Influence of Lower Atmosphere on the Radiometric Quality of Unmanned Aerial Vehicle Imagery. Remote Sens. 2019, 11, 1214.

- Synoptic Maps. Available online: http://www.pogodynka.pl/polska/mapa_synoptyczna (accessed on 20 May 2019).

- Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

- Lim, J.S. Two-Dimensional Signal and Image Processing; Prentice Hall: Englewood Cliffs, NJ, USA, 1990; pp. 536–540.

- MATLAB Image Processing Toolbox User’s Guide; Version 2; The Math Works, Inc.: Natick, MA, USA, 1999.

- Wang, Z.; Bovik, A.C. A Universal Image Quality Index. IEEE Signal Process. Lett. 2002, 9, 81–84.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Markelin, L.; Honkavaara, E.; Schlaepfer, D.; Bovet, S.T.; Korpela, I. Assessment of radiometric correction methods for ADS40 imagery. Photogramm. Fernerkund. Geoinform. 2012, 3, 251–266.

- Carper, W.; Lillesand, T.; Kiefer, R. The use of intensity-hue-saturation transformations for merging SPOT panchromatic and multispectral image data. Photogramm. Eng. Remote Sens. 1990, 56, 459–467.

- Gibbons, J.D.; Chakraborti, S. Nonparametric statistical inference. In International Encyclopedia of Statistical Science; Springer: Berlin/Heidelberg, Germany, 2011; pp. 977–979.

- Liu, K.; He, L.; Ma, S.; Gao, S.; Bi, D. A Sensor Image Dehazing Algorithm Based on Feature Learning. Sensors 2018, 18, 2606.

| | Our Method | He et al. | Berman et al. | Tarel and Hautiere |
|---|---|---|---|---|
| Time [s] | 2.43 | 2.36 | 1.75 | 2.45 |

| PSNR | Our Method | He et al. | Berman et al. | Tarel and Hautiere |
|---|---|---|---|---|
| Mean | 26.44 | 23.58 | 17.40 | 21.38 |
| Std | 7.41 | 7.24 | 2.60 | 4.39 |
| Min | 12.62 | 8.55 | 9.64 | 10.80 |
| Max | 44.03 | 45.01 | 24.28 | 33.55 |

| RMSE [%] | Our Method | He et al. | Berman et al. | Tarel and Hautiere |
|---|---|---|---|---|
| Mean | 11.4 | 16.1 | 24.5 | 16.7 |
| Std | 8.7 | 14.0 | 7.7 | 8.1 |
| Min | 1.1 | 1.1 | 10.6 | 3.6 |
| Max | 40.5 | 64.7 | 57.1 | 50.0 |

| SSIM | Our Method | He et al. | Berman et al. | Tarel and Hautiere |
|---|---|---|---|---|
| Mean | 0.890 | 0.843 | 0.564 | 0.746 |
| Std | 0.071 | 0.122 | 0.160 | 0.084 |
| Min | 0.385 | 0.409 | 0.042 | 0.419 |
| Max | 0.986 | 0.987 | 0.874 | 0.905 |

| Q Index | Our Method | He et al. | Berman et al. | Tarel and Hautiere |
|---|---|---|---|---|
| Mean | 0.881 | 0.828 | 0.546 | 0.642 |
| Std | 0.081 | 0.167 | 0.192 | 0.128 |
| Min | 0.533 | 0.275 | 0.019 | 0.265 |
| Max | 0.991 | 0.990 | 0.850 | 0.896 |

| Cross-Correlation | Our Method | He et al. | Berman et al. | Tarel and Hautiere |
|---|---|---|---|---|
| Mean | 0.930 | 0.933 | 0.932 | 0.751 |
| Std | 0.058 | 0.047 | 0.051 | 0.160 |
| Min | 0.711 | 0.678 | 0.660 | 0.112 |
| Max | 0.997 | 0.998 | 0.995 | 0.984 |

| Metric | Statistic | Our Method vs. He et al. | Our Method vs. Berman et al. | Our Method vs. Tarel and Hautiere |
|---|---|---|---|---|
| PSNR | p-value | 0.032 | <0.0001 | 0.033 |
| | z-score | −2.134 | −3.509 | −2.133 |
| RMSE | p-value | 0.048 | <0.0001 | <0.0001 |
| | z-score | −1.974 | −4.132 | −5.719 |
| SSIM | p-value | 0.039 | <0.0001 | <0.0001 |
| | z-score | −2.056 | −6.153 | −6.155 |
| Q | p-value | 0.028 | <0.0001 | <0.0001 |
| | z-score | −2.987 | −6.144 | −6.1539 |
| CC | p-value | <0.0001 | 0.379 | <0.0001 |
| | z-score | −4.508 | −0.878 | −6.144 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wierzbicki, D.; Kedzierski, M.; Sekrecka, A. A Method for Dehazing Images Obtained from Low Altitudes during High-Pressure Fronts. Remote Sens. 2020, 12, 25. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12010025
