Reconstruction of High-Temporal- and High-Spatial-Resolution Reflectance Datasets Using Difference Construction and Bayesian Unmixing

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.1.1. Study Area of Huailai

2.1.2. Study Area in Hebei

2.2. Data and Processing

2.2.1. Data Preparation

2.2.2. Data Preprocessing

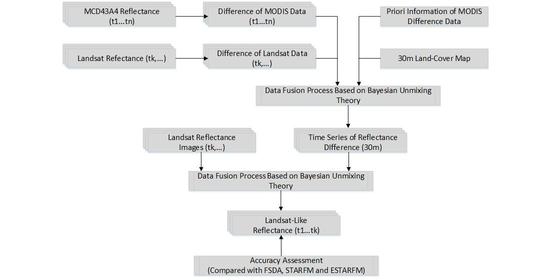

2.3. Ref-BSFM Method

2.3.1. MODIS Data Temporal Change Detection

2.3.2. Building the Difference of Landsat Data

2.3.3. Obtaining Prior Information

2.3.4. Bayesian Pixel Unmixing

2.3.5. Prediction of Reflectance Difference Datasets

3. Results

3.1. Performance Comparison over a Small Area in Huailai

3.1.1. Performance Based on Cloudless MODIS Data

3.1.2. Performance Based on MODIS Data with Clouds

3.2. Performance Comparison over a Large Area in Hebei

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Input | Method | Band | r | RMSE | AAD | AARD | SSIM | PSNR |
|---|---|---|---|---|---|---|---|---|
| One pair of images | FSDAF | NIR | 0.9180 | 0.0626 | 0.0877 | 0.6974 | 0.5236 | 38.2108 |
| One pair of images | FSDAF | Red | 0.9274 | 0.0217 | 0.0101 | 0.1468 | 0.7477 | 50.1605 |
| One pair of images | FSDAF | Green | 0.9193 | 0.0617 | 0.0842 | 0.6514 | 0.5461 | 39.4421 |
| One pair of images | STARFM | NIR | 0.8430 | 0.0821 | 0.0210 | 0.5288 | 0.7304 | 22.2600 |
| One pair of images | STARFM | Red | 0.9304 | 0.0215 | 0.0091 | 0.1315 | 0.8005 | 50.1605 |
| One pair of images | STARFM | Green | 0.9259 | 0.0347 | 0.0141 | 0.1467 | 0.7648 | 48.7742 |
| One pair of images | Ref-BSFM | NIR | 0.9239 | 0.0251 | 0.0142 | 0.1111 | 0.8183 | 44.6935 |
| One pair of images | Ref-BSFM | Red | 0.9369 | 0.0204 | 0.0083 | 0.1228 | 0.8052 | 52.3408 |
| One pair of images | Ref-BSFM | Green | 0.9356 | 0.0198 | 0.0097 | 0.1214 | 0.8142 | 50.1069 |
| Two pairs of images | ESTARFM | NIR | 0.9405 | 0.0224 | 0.1139 | 0.0818 | 0.8320 | 54.9317 |
| Two pairs of images | ESTARFM | Red | 0.9353 | 0.0217 | 0.0108 | 0.1212 | 0.7913 | 53.1247 |
| Two pairs of images | ESTARFM | Green | 0.9388 | 0.0208 | 0.0106 | 0.1193 | 0.8042 | 54.4223 |
| Two pairs of images | Ref-BSFM | NIR | 0.9601 | 0.0212 | 0.0119 | 0.0722 | 0.8972 | 54.8263 |
| Two pairs of images | Ref-BSFM | Red | 0.9410 | 0.0176 | 0.0121 | 0.1237 | 0.8452 | 56.7885 |
| Two pairs of images | Ref-BSFM | Green | 0.9497 | 0.0162 | 0.0119 | 0.1200 | 0.8525 | 56.0023 |

| Input | Method | Band | r | RMSE | AAD | AARD | SSIM | PSNR |
|---|---|---|---|---|---|---|---|---|
| One pair of images | FSDAF | NIR | 0.6880 | 0.1853 | 0.0620 | 0.7675 | 0.1968 | 13.9891 |
| One pair of images | FSDAF | Red | 0.7422 | 0.1756 | 0.0618 | 0.7224 | 0.1410 | 12.4437 |
| One pair of images | FSDAF | Green | 0.7370 | 0.1784 | 0.0618 | 0.7341 | 0.1520 | 12.3158 |
| One pair of images | STARFM | NIR | 0.1584 | 0.1872 | 0.1503 | 0.6531 | 0.1132 | 21.5352 |
| One pair of images | STARFM | Red | 0.4041 | 0.1895 | 0.0598 | 0.6198 | 0.1680 | 15.1226 |
| One pair of images | STARFM | Green | 0.4247 | 0.1642 | 0.0502 | 0.5744 | 0.1967 | 18.7642 |
| One pair of images | Ref-BSFM | NIR | 0.9360 | 0.0247 | 0.0200 | 0.0881 | 0.8209 | 45.1331 |
| One pair of images | Ref-BSFM | Red | 0.9641 | 0.0200 | 0.0078 | 0.1200 | 0.8483 | 53.7812 |
| One pair of images | Ref-BSFM | Green | 0.9605 | 0.0221 | 0.0143 | 0.1347 | 0.8421 | 50.1140 |
| Two pairs of images | ESTARFM | NIR | 0.5904 | 0.0930 | 0.0725 | 0.2708 | 0.3314 | 22.7283 |
| Two pairs of images | ESTARFM | Red | 0.7481 | 0.0283 | 0.0227 | 0.5511 | 0.5164 | 40.6181 |
| Two pairs of images | ESTARFM | Green | 0.4249 | 0.1677 | 0.0598 | 0.5679 | 0.2021 | 21.4413 |
| Two pairs of images | Ref-BSFM | NIR | 0.9412 | 0.0272 | 0.0174 | 0.0733 | 0.8751 | 54.1654 |
| Two pairs of images | Ref-BSFM | Red | 0.9651 | 0.0165 | 0.0069 | 0.1186 | 0.8558 | 56.2208 |
| Two pairs of images | Ref-BSFM | Green | 0.9609 | 0.0173 | 0.0061 | 0.1243 | 0.8872 | 55.4791 |

| Method | Band | r | RMSE | AAD | AARD | SSIM | PSNR |
|---|---|---|---|---|---|---|---|
| Ref-BSFM | NIR | 0.6972 | 0.0898 | 0.0611 | 0.3864 | 0.8533 | 55.0437 |
| Ref-BSFM | Red | 0.8202 | 0.0336 | 0.0203 | 0.3459 | 0.9013 | 65.0556 |
| STARFM | NIR | 0.6069 | 0.0988 | 0.0771 | 0.3768 | 0.8310 | 26.5557 |
| STARFM | Red | 0.7819 | 0.0337 | 0.0242 | 0.3823 | 0.8922 | 34.4263 |
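The metric columns in the tables above (r, RMSE, AAD, AARD, PSNR) can be illustrated with a minimal sketch. This section does not define the metrics, so the code assumes the common definitions: Pearson correlation, root-mean-square error, average absolute difference, average absolute relative difference, and peak signal-to-noise ratio. The function names, the `peak` parameter, and the sample reflectance values are hypothetical and are not taken from the authors' implementation. SSIM is omitted because it requires a windowed image computation.

```python
import math


def pearson_r(pred, ref):
    """Pearson correlation coefficient between predicted and reference values."""
    n = len(ref)
    mean_p = sum(pred) / n
    mean_r = sum(ref) / n
    cov = sum((p - mean_p) * (q - mean_r) for p, q in zip(pred, ref))
    var_p = sum((p - mean_p) ** 2 for p in pred)
    var_r = sum((q - mean_r) ** 2 for q in ref)
    if var_p == 0 or var_r == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_p * var_r)


def mse(pred, ref):
    """Mean squared error."""
    return sum((p - q) ** 2 for p, q in zip(pred, ref)) / len(ref)


def rmse(pred, ref):
    """Root-mean-square error."""
    return math.sqrt(mse(pred, ref))


def aad(pred, ref):
    """Average absolute difference (assumed meaning of AAD)."""
    return sum(abs(p - q) for p, q in zip(pred, ref)) / len(ref)


def aard(pred, ref):
    """Average absolute relative difference (assumed meaning of AARD).

    Assumes every reference value is nonzero.
    """
    return sum(abs(p - q) / abs(q) for p, q in zip(pred, ref)) / len(ref)


def psnr(pred, ref, peak=1.0):
    """Peak signal-to-noise ratio in dB.

    `peak` is the assumed maximum signal value; the value used in the
    paper is not stated in this section.
    """
    err = mse(pred, ref)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / err)


if __name__ == "__main__":
    # Hypothetical flattened reflectance values for one band.
    predicted = [0.10, 0.20, 0.30, 0.40]
    reference = [0.12, 0.18, 0.33, 0.37]
    for name, fn in [("r", pearson_r), ("RMSE", rmse), ("AAD", aad),
                     ("AARD", aard), ("PSNR", psnr)]:
        print(name, round(fn(predicted, reference), 4))
```

In practice each function would be applied per band to the flattened predicted and observed fine-resolution images, with cloud-masked pixels removed first.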

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, L.; Song, J.; Han, L.; Wang, X.; Wang, J. Reconstruction of High-Temporal- and High-Spatial-Resolution Reflectance Datasets Using Difference Construction and Bayesian Unmixing. Remote Sens. 2020, 12, 3952. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12233952
