Himawari-8 Aerosol Optical Depth (AOD) Retrieval Using a Deep Neural Network Trained Using AERONET Observations

Abstract

1. Introduction

2. Data

2.1. Himawari-8 TOA Reflectance and AOD, and Auxiliary Data

2.2. AERONET 500 nm AOD

2.3. Collocated AHI TOA and AERONET AOD Observations

3. Method

3.1. Seventeen Predictors

3.2. Deep Neural Network (DNN)

3.3. K-Fold Cross-Validation and Leave-One-Station-Out Validation

4. Results

4.1. Descriptive Statistics

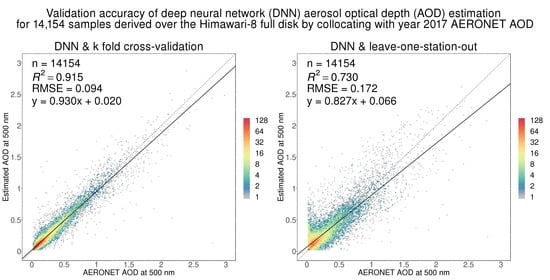

4.2. K-Fold Cross-Validation

4.3. Leave-One-Station-Out Validation

4.4. Comparison with the JMA AOD Product

5. Discussion

5.1. Differences between the K-Fold Cross-Validation and Leave-One-Station-Out Validation

5.2. The DNN Machine Learning Advantage

5.3. The Contribution of the Dark-Target (DT) Derived TOA Ratio Predictors

5.4. Sensitivity to the DNN Structure

6. Conclusions

- (1) The leave-one-station-out validation demonstrates the capability of the DNN algorithm for systematic AOD retrieval over large areas using AHI data (RMSE = 0.172, R² = 0.730).

- (2) The k-fold cross-validation, with RMSE = 0.094 and R² = 0.915, overestimates the accuracy for large-area applications.

- (3) The DNN-estimated AOD agrees better with AERONET measurements than the random forest AOD estimates and the official JMA AOD product.

- (4) Some variables that are important for physical model-based AOD estimation can also improve the DNN AOD estimation. This highlights the importance of domain knowledge and expertise when using data-driven models for aerosol estimation.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| AERONET Site | (Latitude (°), Longitude (°)) | Number of Collocated Samples | Number of Days in 2017 with Collocated Samples |

|---|---|---|---|

| Anmyon | (36.539, 126.330) | 48 | 18 |

| ARM_Macquarie_Is | (−54.500, 158.935) | 25 | 19 |

| Bac_Lieu | (9.280, 105.730) | 87 | 47 |

| Bamboo | (25.187, 121.535) | 2 | 2 |

| Bandung | (−6.888, 107.610) | 59 | 53 |

| Banqiao | (24.998, 121.442) | 66 | 41 |

| Beijing | (39.977, 116.381) | 351 | 121 |

| Beijing-CAMS | (39.933, 116.317) | 596 | 207 |

| Bhola | (22.227, 90.756) | 194 | 80 |

| Birdsville | (−25.899, 139.346) | 822 | 230 |

| BMKG_GAW_PALU | (−1.650, 120.183) | 1 | 1 |

| Bukit_Kototabang | (−0.202, 100.318) | 28 | 19 |

| Canberra | (−35.271, 149.111) | 217 | 108 |

| Cape_Fuguei_Station | (25.298, 121.538) | 110 | 65 |

| Chen-Kung_Univ | (22.993, 120.205) | 313 | 136 |

| Chiang_Mai_Met_Sta | (18.771, 98.973) | 240 | 52 |

| Chiayi | (23.496, 120.496) | 250 | 132 |

| Chiba_University | (35.625, 140.104) | 216 | 102 |

| Dalanzadgad | (43.577, 104.419) | 367 | 166 |

| Dhaka_University | (23.728, 90.398) | 183 | 91 |

| Doi_Inthanon | (18.590, 98.486) | 87 | 45 |

| Dongsha_Island | (20.699, 116.729) | 58 | 31 |

| Douliu | (23.712, 120.545) | 175 | 91 |

| EPA-NCU | (24.968, 121.185) | 200 | 78 |

| Fowlers_Gap | (−31.086, 141.701) | 805 | 230 |

| Fukuoka | (33.524, 130.475) | 77 | 67 |

| Gandhi_College | (25.871, 84.128) | 37 | 19 |

| Gangneung_WNU | (37.771, 128.867) | 519 | 185 |

| Gwangju_GIST | (35.228, 126.843) | 210 | 85 |

| Hankuk_UFS | (37.339, 127.266) | 580 | 194 |

| Hokkaido_University | (43.076, 141.341) | 136 | 63 |

| Hong_Kong_PolyU | (22.303, 114.180) | 4 | 4 |

| Irkutsk | (51.800, 103.087) | 166 | 79 |

| Jabiru | (−12.661, 132.893) | 193 | 85 |

| Jambi | (−1.632, 103.642) | 2 | 2 |

| Kanpur | (26.513, 80.232) | 349 | 168 |

| KORUS_Kyungpook_NU | (35.890, 128.606) | 64 | 32 |

| KORUS_Mokpo_NU | (34.913, 126.437) | 2 | 2 |

| KORUS_UNIST_Ulsan | (35.582, 129.190) | 69 | 19 |

| Kuching | (1.491, 110.349) | 13 | 11 |

| Lake_Argyle | (−16.108, 128.749) | 138 | 65 |

| Lake_Lefroy | (−31.255, 121.705) | 52 | 38 |

| Learmonth | (−22.241, 114.097) | 2 | 2 |

| Luang_Namtha | (20.931, 101.416) | 341 | 144 |

| Lulin | (23.469, 120.874) | 64 | 34 |

| Lumbini | (27.490, 83.280) | 194 | 73 |

| Makassar | (−4.998, 119.572) | 235 | 100 |

| Mandalay_MTU | (21.973, 96.186) | 404 | 111 |

| Manila_Observatory | (14.635, 121.078) | 13 | 9 |

| ND_Marbel_Univ | (6.496, 124.843) | 41 | 21 |

| NhaTrang | (12.205, 109.206) | 4 | 2 |

| Niigata | (37.846, 138.942) | 283 | 106 |

| Nong_Khai | (17.877, 102.717) | 116 | 48 |

| Noto | (37.334, 137.137) | 76 | 33 |

| Omkoi | (17.798, 98.432) | 368 | 117 |

| Osaka | (34.651, 135.591) | 93 | 69 |

| Palangkaraya | (−2.228, 113.946) | 59 | 37 |

| Pioneer_JC | (1.384, 103.755) | 84 | 48 |

| Pokhara | (28.1867, 83.975) | 717 | 246 |

| Pontianak | (0.075, 109.191) | 7 | 5 |

| Pusan_NU | (35.235, 129.082) | 71 | 25 |

| QOMS_CAS | (28.365, 86.948) | 15 | 12 |

| Seoul_SNU | (37.458, 126.951) | 455 | 158 |

| Shirahama | (33.694, 135.357) | 10 | 7 |

| Silpakorn_Univ | (13.819, 100.041) | 309 | 95 |

| Singapore | (1.2977, 103.780) | 164 | 93 |

| Son_La | (21.332, 103.905) | 63 | 33 |

| Songkhla_Met_Sta | (7.184, 100.605) | 213 | 95 |

| Tai_Ping | (10.376, 114.362) | 331 | 109 |

| Taipei_CWB | (25.015, 121.538) | 110 | 61 |

| Tomsk | (56.475, 85.048) | 11 | 6 |

| Tomsk_22 | (56.417, 84.074) | 52 | 22 |

| USM_Penang | (5.358, 100.302) | 38 | 24 |

| Ussuriysk | (43.700, 132.163) | 227 | 109 |

| XiangHe | (39.754, 116.962) | 274 | 87 |

| Yonsei_University | (37.564, 126.935) | 599 | 195 |


| Band Number | Central Wavelength (μm) | Band Name | Spatial Resolution (km) |

|---|---|---|---|

| 1 | 0.47 | blue | 2 |

| 2 | 0.51 | green | 2 |

| 3 | 0.64 | red | 2 |

| 4 | 0.86 | near infrared (NIR) | 2 |

| 5 | 1.61 | shortwave infrared (SWIR) | 2 |

| 6 | 2.25 | SWIR | 2 |

| Variable | Mean | Std | Min | Max |

|---|---|---|---|---|

| AERONET AOD 500 nm | 0.30 | 0.32 | 0.00 | 2.98 |

| TOA band 1 | 0.22 | 0.06 | 0.08 | 0.45 |

| TOA band 2 | 0.19 | 0.06 | 0.06 | 0.43 |

| TOA band 3 | 0.17 | 0.06 | 0.03 | 0.46 |

| TOA band 4 | 0.28 | 0.08 | 0.02 | 0.71 |

| TOA band 5 | 0.24 | 0.10 | 0.01 | 0.55 |

| TOA band 6 | 0.14 | 0.07 | 0.00 | 0.38 |

| View zenith angle | 46.47 | 11.95 | 17.36 | 69.60 |

| View azimuth angle | 109.39 | 55.81 | −179.03 | 179.11 |

| Solar zenith angle | 44.59 | 15.31 | 1.71 | 70.12 |

| Solar azimuth angle | −0.23 | 125.49 | −179.97 | 179.93 |

| Water vapor content | 22.91 | 16.31 | 0.51 | 66.95 |

| Ozone content | 6.30 × 10⁻³ | 9.79 × 10⁻⁴ | 4.80 × 10⁻³ | 1.07 × 10⁻² |

| Number of Hidden Layers | Number of Neurons in Each Hidden Layer |

|---|---|

| 1-hidden-layer | 256 |

| 2-hidden-layer | 256, 512 |

| 3-hidden-layer (Section 3) | 256, 512, 512 |

| 7-hidden-layer | 256, 256, 256, 512, 512, 1024, 1024 |

| 18-hidden-layer | 64, 64, 128, 128, 256, 256, 256, 256, 512, 512, 512, 512, 512, 512, 512, 512, 1024, 1024 |
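To give a sense of how the structures in the table above differ in size, the following sketch counts trainable parameters for each one. It rests on assumptions that the table does not state: plain fully connected layers with biases, 17 inputs (taken from the "Seventeen Predictors" heading), a single AOD output, and no additional normalization parameters. The function name `count_dense_parameters` is hypothetical.

```python
def count_dense_parameters(n_inputs, hidden_sizes, n_outputs=1):
    """Count weights and biases of a fully connected network.

    Assumes every layer is dense with a bias term; any normalization
    or other extra layers are ignored.
    """
    sizes = [n_inputs, *hidden_sizes, n_outputs]
    return sum(fan_in * fan_out + fan_out for fan_in, fan_out in zip(sizes, sizes[1:]))


# Hidden-layer widths copied from the table; the 17 inputs and 1 output are assumptions.
STRUCTURES = {
    "1-hidden-layer": [256],
    "2-hidden-layer": [256, 512],
    "3-hidden-layer": [256, 512, 512],
    "7-hidden-layer": [256, 256, 256, 512, 512, 1024, 1024],
    "18-hidden-layer": [64, 64, 128, 128, 256, 256, 256, 256,
                        512, 512, 512, 512, 512, 512, 512, 512, 1024, 1024],
}

if __name__ == "__main__":
    for name, hidden in STRUCTURES.items():
        print(f"{name}: {count_dense_parameters(17, hidden):,} parameters")
```

Under these assumptions the parameter count grows by several orders of magnitude from the shallowest to the deepest structure, which is one reason a deeper network does not automatically generalize better on a training set of limited size.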

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


She, L.; Zhang, H.K.; Li, Z.; de Leeuw, G.; Huang, B. Himawari-8 Aerosol Optical Depth (AOD) Retrieval Using a Deep Neural Network Trained Using AERONET Observations. Remote Sens. 2020, 12, 4125. https://doi.org/10.3390/rs12244125
