1. Introduction

At present, around 11% of the global land surface (13.4 billion ha) is used in crop production (arable land and land under permanent crops) [1]. According to data from the World Factbook [2], in 2015 agriculture accounted for 5.9% of the gross domestic product (GDP) worldwide. However, even though other economic sectors are more profitable for national economies, the entire world population depends on agriculture and farming. Investment in this sector carries a high risk, given the unpredictable factors that can affect yield, such as weather conditions, natural disturbances and market fluctuations. Furthermore, the growing season coincides with the peak of disturbances: fires, summer storms, breeding of herbivores, outbreaks of pests and diseases, etc. [3,4,5].

Under many climate change scenarios, authors point out that human systems developed during the Holocene (such as agriculture) are at risk of disappearing, or at least of being compromised, in the Anthropocene era that we have entered, and this situation is expected to worsen in the coming years [6,7,8]. While total world precipitation has not changed significantly in the last decade, the distribution of rainfall over the year and across space has changed, with relevant effects on biomes and agricultural lands [9].

Farmers and agronomists are aware of the risks of their business and keep an eye on possible changes in climate. Thus, the literature contains many studies on alternative management practices to face climate change [10,11]. Some events, unfortunately, cannot be prevented, such as floods, storms and fires. For this reason, an accurate evaluation and prediction of damage is of major importance for farmers and stakeholders in the agriculture business, including insurance companies. Many farmers and agriculture corporations invest in insurance in case they lose part or all of the yield. Traditional crop-loss assessment methods are slow, labour intensive, biased by restricted field access, and subjective from adjuster to adjuster. The subjectivity of damage estimation leads in many cases to disagreements between farmers and adjusters about how many hectares are insurable. Both crop insurers and farmers are looking for ways to improve resource management and claims reporting. The current paper proposes more objective and accurate methods to calculate crop damage by means of Unmanned Aerial Vehicle (UAV) remote sensing.

Precision agriculture is a farming management concept based on observing, measuring and responding to inter- and intra-field variability in crops using remote sensing tools, such as satellites, planes or UAVs [12,13,14,15]. A review of precision agriculture companies reveals that they focus on very-high-resolution sensors [16,17,18], multispectral cameras [19,20,21], hyperspectral sensors [22,23,24], thermal sensing [25] and wireless sensor networks [25,26,27,28] to measure plant and soil properties such as plant nutrient and water content and soil humidity. Initially, precision agriculture made use of lightweight remotely controlled airplanes, but in recent years UAVs have been used more and more in this field because of their versatility [18]. Some authors have developed remote sensing methods for the evaluation of crop damage, such as the effects of droughts [29], the detection of fungal infection [30], crop losses in floods [31], and the detection of crops affected by fires [32]. Nevertheless, to our knowledge, few precision agriculture algorithms account for digital canopy models of plants and damage quantification. Moreover, the existing literature only shows case studies of one or a few crop types and/or damage types.

In the last decade, low-cost UAV technology has developed significantly, encouraging the use of UAVs for commercial applications such as precision agriculture, oil and gas, urban planning, etc. [18,33]. It is now possible to find low-cost UAVs, cameras and software on the market that collect high-resolution georeferenced imagery, which can later be used to generate Digital Surface Models (DSM) and 3D point clouds by means of Structure from Motion (SfM) and Multi-View Stereopsis (MVS) [34,35,36,37]. Specifically, low-cost light UAVs equipped with RGB cameras are the Unmanned Aerial System (UAS) preferred by many users, farmers among them. Whereas RGB cameras are limited in the development of spectral products beyond scouting and monitoring, DSMs avoid artefacts that often appear in UAV imagery, such as shadows projected from objects on the surface or from clouds, light differences during the flight time, or different colours caused by soil moisture or wet vegetation [38,39,40]. These challenges can be addressed with deep learning, but it needs a large labelled database to feed the algorithm [41]. While UAV-derived DSMs from commercial UAVs have been widely used for small-scale terrain mapping and 3D modelling of urban areas [42,43,44], they have also proved useful for agricultural applications [45,46,47,48,49,50].

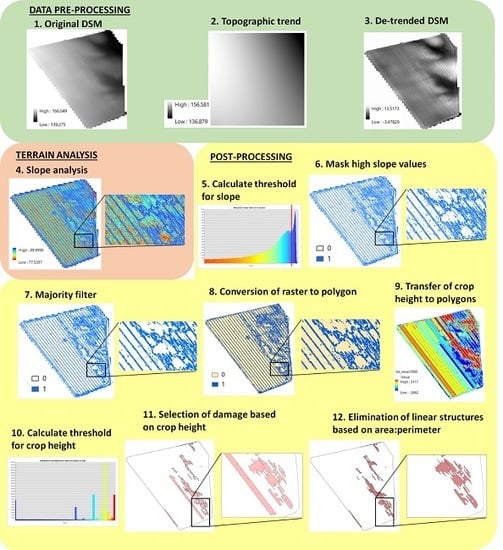

As such, this study explored four terrain analysis tools, based on point cloud data or DSMs, for the delineation and quantification, in hectares, of damage in field crops due to insurable causes, such as weather events and wildlife attacks. For this study, we focused on events that cause severe physical damage to the plant structure, generating abrupt depressions in the surface of the canopy, from which the plant rarely recovers. This type of damage is the most distressing for farmers and is the type that adjusters assess for monetary compensation. Through the digital 3D reconstruction of the crop canopy, it is possible to detect differences in crop height or dramatic changes in slope that are related to damage. The accuracy of four different analysis methods is evaluated here, and a workflow is proposed to deliver a digital product that can be translated into the number of hectares of damaged crop. The proposed workflow can be easily applied to any type of UAS equipped with snapshot cameras or video recorders, from which SfM- and MVS-based DSMs are later produced. Our contribution to the field of precision agriculture is the assembly of a tool from existing methods that proved successful as a generic crop damage estimator, independent of the crop type, damage type or growth stage.

4. Discussion

Agriculture is among the most important economic sectors worldwide. At the same time, it is a risky activity due to many factors that are outside human control or difficult to predict, such as weather and market fluctuations. The incorporation of remote sensing into agricultural management is greatly improving the efficiency of agribusiness and facilitating the work of farmers and other stakeholders.

Farmers and agronomists are incorporating UAVs into their daily crop management. However, most users use only some UAV functions, such as videos or single-shots of the entire cropland at a high elevation and low pixel resolution. Also, most users use basic RGB cameras and light low-cost UAS that do not allow sophisticated spectral analysis, but provide DSMs.

Numerous studies use DSM analysis for precision agriculture [45,46,47,49]; however, the use of DSMs for crop damage estimation has scarcely been explored [29,30,31,32,40,73,74,75,76]. Michez et al. [77] proposed a method to detect damage in corn fields caused by wildlife, using UAV-based DSMs and height thresholds. In a similar fashion, Kuzelka and Surovy [78] used classification algorithms on crop heights in UAV DSMs to locate damage in wheat caused by wild boars, validating their results with accurate height measurements taken in the field with GNSS (Global Navigation Satellite System). Stanton et al. [79] reported that crop height extracted from SfM DSMs and NDVI from UAV products were related to stress caused by aphid infestations. Similarly, Yang et al. [80] used a hybrid method of spectral and DSM data to classify lodging damage in rice fields. As we see, the existing literature only evaluates case studies or uses DSM data as a proxy. Our contribution to the sector is a workflow that can potentially be used for any case of structural crop damage, crop type and growth stage, in field crops that present significant damage intensity.

The goal of the present study is to provide a versatile and unsupervised tool, based on DSM products and Geographic Information Systems analysis, for assessing crop damage after a disturbance. These events (e.g., wildlife, windstorms, fires) bend the vegetation or, in the case of a very intense event, remove plant stands from the terrain. As a result, the damaged vegetation is lower than the healthy vegetation, and the damage appears as a depression or discontinuity in the crop canopy. These depressions can be modelled in DSMs of the crop canopy and used to detect damage through terrain model analysis. The presented workflow delivers an estimation of damage in area units. If the height of the crop is known, the damage can also be estimated as a volume metric. If the yield and plant metrics from other years are known, yield loss can be inferred by regression analysis.
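To make the idea of detecting canopy discontinuities and reporting them in area units concrete, the following is a minimal sketch in plain Python. It is not the implementation used in this study: the function names, the finite-difference slope estimate, the toy grid and the 45-degree threshold are all assumptions chosen for illustration, and the DSM is assumed to be a small regular grid of canopy elevations in metres.

```python
import math

def slope_degrees(dsm, cell_size):
    """Slope (degrees) per cell of a 2D elevation grid given as a list of rows.

    Central differences are used in the interior and one-sided differences
    at the edges. cell_size is the ground size of one pixel in metres.
    """
    rows, cols = len(dsm), len(dsm[0])
    slope = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            r0, r1 = max(r - 1, 0), min(r + 1, rows - 1)
            c0, c1 = max(c - 1, 0), min(c + 1, cols - 1)
            dz_dy = (dsm[r1][c] - dsm[r0][c]) / ((r1 - r0) * cell_size) if r1 > r0 else 0.0
            dz_dx = (dsm[r][c1] - dsm[r][c0]) / ((c1 - c0) * cell_size) if c1 > c0 else 0.0
            slope[r][c] = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    return slope

def threshold_mask(grid, threshold):
    """Boolean mask of cells whose value exceeds the threshold."""
    return [[value > threshold for value in row] for row in grid]

def area_hectares(mask, cell_size):
    """Area covered by flagged cells, in hectares (1 ha = 10,000 m^2)."""
    flagged = sum(1 for row in mask for value in row if value)
    return flagged * cell_size ** 2 / 10_000.0

# Toy canopy: 2 m tall crop with a single lowered cell (hypothetical values).
dsm = [
    [2.0, 2.0, 2.0, 2.0],
    [2.0, 2.0, 0.5, 2.0],
    [2.0, 2.0, 2.0, 2.0],
]
mask = threshold_mask(slope_degrees(dsm, cell_size=0.5), threshold=45.0)  # threshold is a placeholder
print(area_hectares(mask, cell_size=0.5))
```

In this toy grid the slope threshold flags the cells around the lowered cell rather than the lowered cell itself, since the slope there is zero; a real workflow would need a further step to fill or refine the delineated patches.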

Since we did not find any terrain analysis developed specifically for agriculture, we used algorithms designed for other applications (such as hydrology, topography and forestry) for the analysis of crop canopies. Four terrain analysis methods were tested: Slope detection, Variance analysis, Geomorphology classification and Cloth Simulation Filter. Six croplands in different locations in America and Europe were used, representing different crop types (C: corn, W: wheat and B: barley), damage types (W: wind, A: animals, L: lodging, M: man-made) and growth stages, in order to evaluate the influence of those parameters on the accuracy of the tested terrain analysis methods. The results of this study revealed that all tested methods are very accurate at detecting crop damage from UAV DSMs. The lowest accuracy was above 77% (for Geomorphology classification), while some methods reached almost 100% (98% for Slope and Variance analyses). Our results also showed that the workflow is independent of environmental and geographic factors, such as topography, soil type and irrigation management.
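Accuracy figures such as those above are typically obtained by comparing a predicted damage mask with a reference mask. The sketch below shows one common way to compute overall accuracy from the four confusion-matrix counts; the function name and the assumption that a reference mask of the same shape is available (for example, from manual delineation) are illustrative and not taken from this study.

```python
def overall_accuracy(predicted, reference):
    """Overall accuracy and confusion counts for two binary masks.

    Both masks are lists of rows with truthy values marking damaged cells.
    Overall accuracy = (true positives + true negatives) / all cells.
    """
    counts = {"tp": 0, "tn": 0, "fp": 0, "fn": 0}
    for pred_row, ref_row in zip(predicted, reference):
        for pred, ref in zip(pred_row, ref_row):
            if pred and ref:
                counts["tp"] += 1
            elif not pred and not ref:
                counts["tn"] += 1
            elif pred:
                counts["fp"] += 1
            else:
                counts["fn"] += 1
    total = sum(counts.values())
    if total == 0:
        raise ValueError("masks are empty")
    return (counts["tp"] + counts["tn"]) / total, counts

# Hypothetical 2 x 2 masks: one false positive out of four cells.
accuracy, counts = overall_accuracy([[1, 0], [1, 1]], [[1, 0], [0, 1]])
print(accuracy, counts)
```

Overall accuracy can look high when damaged cells are a small share of the field, so the individual counts are returned alongside it.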

One of the challenges of this project was to create a tool able to analyze any generic DSM, independently of the UAV and camera types, in order to support a broad audience of farmers, agronomists and other agribusiness stakeholders. We also wanted our workflow to be independent of the observed target, covering a large number of situations, such as different crop types, damage types and growth stages. From a technical point of view, one important achievement is the use of unsupervised methods. Other authors, such as Li et al. [41], successfully used deep learning techniques to detect tree plantations in UAV images. Deep learning overcomes problems such as projected shadows and colour differences in UAV imagery, at the cost of a large labelled database. A limitation of this study is that the workflow only works on field crops (e.g., wheat, barley, corn or canola). Damage in row crops would require different methods [74], which are based on image or 3D artificial intelligence algorithms [41]. The proposed workflow has proved to be a versatile tool that delivers accurate crop damage estimations independently of the crop type, damage type and growth stage.

A major limitation of our study is that the presented workflow can only detect severe damage in crops. In this study, damage intensity has not been specifically defined, among other reasons because of the challenge of defining the term from a technical perspective. However, the dataset showed that the damage was more evident in some cases than in others. The orthomosaics showed that the workflow was more effective in crops where the vegetation was completely bent than in cases where only a few plant stands were broken or partially bent. For instance, only Slope values above 89.9 degrees produced a good separation between damaged and undamaged vegetation. The same was observed for the rest of the methods: for Variance, only large variances in elevation; for Geomorphology tools, only large; and for CSF, only points in the cloud that were clearly separated from the surrounding points were interpreted as damaged vegetation. This implies that damage at leaf level, such as that caused by hail, frost or diseases, cannot be detected with this method. For damage that does not affect the plant structure, other methods based on the spectral properties of the plant (e.g., using multispectral or hyperspectral sensors) can be useful [74,75]. However, this is less of a limitation than it may seem: minor damage is unlikely to cause yield losses, and the most significant (structural) damage is detected with our method.
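As an illustration of what "large variances in elevation" can mean in practice, the sketch below computes the population variance of elevation in a square moving window around each cell. It is a simplified stand-in, not the Variance analysis tool used in this study; the function name, the window radius and the toy grid are assumptions.

```python
def local_variance(dsm, radius=1):
    """Population variance of elevation in a (2*radius+1)^2 moving window.

    dsm is a list of rows of elevations. Windows are clipped at the grid
    edges, so border cells use fewer neighbours.
    """
    rows, cols = len(dsm), len(dsm[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                dsm[i][j]
                for i in range(max(r - radius, 0), min(r + radius + 1, rows))
                for j in range(max(c - radius, 0), min(c + radius + 1, cols))
            ]
            mean = sum(window) / len(window)
            out[r][c] = sum((z - mean) ** 2 for z in window) / len(window)
    return out

# Hypothetical canopy with one lowered cell in the middle.
canopy = [
    [2.0, 2.0, 2.0],
    [2.0, 1.0, 2.0],
    [2.0, 2.0, 2.0],
]
variance = local_variance(canopy)
print(variance[1][1])
```

A uniform canopy yields zero variance everywhere, while cells whose window contains an abrupt height change yield larger values that a threshold could then separate.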

A significant constraint of the workflow is the requirement for a high-quality DSM as input data. Originally, around 20 farmers signed up as volunteers for this study. However, most DSMs had to be discarded because of low quality, which produced artefacts that were wrongly interpreted as damage.

Since all terrain analysis methods performed very well, the best method was selected by also taking into account technical factors, such as data volume, processing time, the number of processing steps and the possibility of a statistical selection of thresholds and parameters. The data volume generated was similar for all methods except Variance, for which it was more than 3 times higher. CSF requires more preprocessing and postprocessing steps; in addition, it was not possible to select appropriate parameters in an unsupervised way. Therefore, Slope analysis was selected as the most accurate and efficient method for the detection of crop damage.

5. Conclusions

The present study intends to help farmers, agronomists and agribusiness professionals improve their field management and obtain accurate and objective estimations of damage for use in insurance claims. In a changing climate, where a rise in pests, fires, floods, droughts and storms is expected, unsupervised, accurate and fast estimations of crop damage will simplify the task of adjusters and farmers.

The current study explored existing terrain analysis methods to detect crop damage from Unmanned Aerial Vehicle (UAV) Digital Surface Models (DSMs). Datasets from different locations in Europe and America, corresponding to different crop types, crop damage types, growth stages and damage intensities, were tested. This method was designed for field crops (e.g., barley, corn, wheat, rice) and not for row crops. Four existing terrain analysis methods were tested: Slope detection, Variance analysis, Cloth Simulation Filter and Geomorphology classification. The proposed workflow required neither training data nor expert knowledge, but a posteriori refinement of the results was needed to remove machinery tracks and other sources of noise. The results of our study revealed that all methods are able to detect crop damage in our tested dataset with an accuracy above 90%, and that Slope and Variance were the methods with the highest overall accuracy, around 98%.

The presented workflow proved successful across different crop types, growth stages and damage types. However, it is only effective for field crops and for severe damage.

Future research will focus on the implementation of the workflow in a programming language and on the estimation of crop damage as biomass volume or yield, by intersecting the information retrieved from this study (hectares of damaged crops) with information about the absolute height of the observed crops.
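As a rough indication of how damaged area and crop height might be combined into a volume, the sketch below sums, over the cells flagged as damaged, the drop between an assumed healthy canopy elevation and the DSM elevation, multiplied by the cell area. This is only one possible formulation and not a result of this study; the function name, the single constant reference elevation and the toy values are assumptions.

```python
def damaged_volume_m3(dsm, mask, reference_elevation, cell_size):
    """Volume (m^3) between an assumed healthy canopy surface and the DSM.

    dsm: list of rows of canopy elevations in metres.
    mask: same shape, truthy where a cell is flagged as damaged.
    reference_elevation: assumed elevation of the healthy canopy top (m),
        taken here as one constant for the whole field (a simplification).
    cell_size: ground size of one pixel in metres.
    """
    cell_area = cell_size ** 2
    volume = 0.0
    for dsm_row, mask_row in zip(dsm, mask):
        for elevation, damaged in zip(dsm_row, mask_row):
            if damaged:
                # Cells above the reference contribute nothing.
                volume += max(reference_elevation - elevation, 0.0) * cell_area
    return volume

# Hypothetical 2 x 2 field with 2 m pixels and two damaged cells.
dsm = [[2.0, 1.0], [2.0, 0.5]]
mask = [[False, True], [False, True]]
print(damaged_volume_m3(dsm, mask, reference_elevation=2.0, cell_size=2.0))
```

On sloping terrain a constant reference elevation would not hold, and a per-cell reference surface would be needed instead.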