1. Introduction

By capturing digital images in several contiguous narrow spectral bands, remote sensors can generate three-dimensional multispectral images that contain rich spectral and spatial information [1]. This abundant information has been employed in various applications, such as military reconnaissance, target surveillance, crop condition assessment, surface resource survey, environmental research, and marine applications. However, with the rapid development of multispectral imaging technology, the spectral–spatial resolution of multispectral data keeps increasing, resulting in rapid growth of the data volume. The huge amount of data is not conducive to image transmission, storage, and application, which hinders the development of related technologies. Therefore, it is necessary to find an effective multispectral image compression method to process images before use.

Research on multispectral image compression methods has long received widespread attention. After decades of effort, various multispectral image compression algorithms for different application needs have been developed, which can be grouped into three frameworks: predictive coding [2], vector quantization coding [3], and transform coding [4,5]. Predictive coding is mainly applied to lossless compression. Its rationale is to use the correlation between pixels to predict unknown data from its neighbors, and then to encode the residual between the real value and the predicted value. In [6], Slyz et al. proposed a block-based inter-band lossless multispectral image compression method, in which every image is divided into blocks and the current block is predicted from the corresponding block in the adjacent band. In vector quantization coding, several scalar data are grouped into a vector, and the data are then quantized as a whole in vector space, so that they are compressed without losing much information. As the performance of vector quantization coding is closely tied to the codebook, Qian proposed a fast codebook search method in [7] to improve time efficiency: in the full search process of the generalized Lloyd algorithm (GLA), if the distance to the partition is better than that of the previous iteration, no search for the minimum distance partition is required. Transform coding is an important method in multispectral image compression and is widely used in lossy compression. It reduces the correlation between pixels by converting the data to a transform-domain representation, so that the information is concentrated and can then be quantized and encoded. The Karhunen–Loève transform (KLT) [8], the discrete cosine transform (DCT) [9] and the discrete wavelet transform [10] are all commonly used in transform coding. As deeper insight into multispectral images has been obtained, more and more improved algorithms have been developed, such as 3D-SPECK [11] and 3D-SPIHT [12].
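To make the predictive-coding idea described above concrete, the following minimal Python sketch predicts each pixel of a band from the co-located pixel of the adjacent band and keeps only the residual. It is an illustration under simplifying assumptions, not the block-based scheme of [6]: the function names `interband_residual` and `interband_reconstruct` and the toy pixel values are hypothetical.

```python
def interband_residual(prev_band, cur_band):
    """Residual of cur_band when the co-located pixel of prev_band is the predictor."""
    return [[cur - prev for cur, prev in zip(cur_row, prev_row)]
            for cur_row, prev_row in zip(cur_band, prev_band)]


def interband_reconstruct(prev_band, residual):
    """Invert the prediction: predictor plus residual restores the band exactly."""
    return [[prev + res for prev, res in zip(prev_row, res_row)]
            for prev_row, res_row in zip(prev_band, residual)]


# Two adjacent bands of a toy 2 x 2 image (hypothetical values).
band_1 = [[100, 102], [101, 105]]
band_2 = [[103, 104], [104, 109]]

residual = interband_residual(band_1, band_2)      # [[3, 2], [3, 4]]
restored = interband_reconstruct(band_1, residual)  # identical to band_2
```

Because adjacent bands are strongly correlated, the residual values are much smaller than the pixel values themselves, which is what makes them cheaper to entropy-code, while the reconstruction remains lossless.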

The traditional compression methods mentioned above are all effective, but they also have shortcomings. For instance, predictive coding is simple to implement, but its compression ratio is relatively low. Vector quantization coding can achieve better results, but its computational complexity makes it hard to implement. To overcome the shortcomings of traditional compression methods while maintaining compression performance, many multispectral image compression algorithms based on deep learning have been developed in recent years. Among them, the convolutional neural network (CNN) has emerged as one of the main tools for image compression. The history of CNNs started with LeNet-style models, which consist of simple stacks of convolution layers for feature extraction and max-pooling layers for downsampling [13]. In order to extract more features at different scales, AlexNet [14], proposed in 2012, followed this idea and improved on it by adding several convolutional layers between every two max-pooling layers. To obtain better performance, it is necessary to increase the depth of the network. As a result, VGG [15], GoogLeNet [16], ResNet [17] and other excellent network architectures emerged one after another. These network frameworks are milestones in CNN-based image processing and achieved excellent results in past ILSVRC and other competitions.

Inspired by these remarkable network frameworks, many CNN-based compression methods have appeared and shown their applicability to visible images. In [18], Ballé et al. proposed an end-to-end optimized image compression method based on a CNN with a generalized divisive normalization (GDN) joint nonlinearity. Through the flexible use of linear convolution and nonlinear transformation, the proposed network achieved performance comparable with JPEG2000. To further improve the quality of the reconstructed images, Jiang et al. [19] added CNNs to both the encoder and the decoder for joint training. The CNN in the encoder produces a compact representation for encoding, and the CNN in the decoder restores the decoded image with high quality, which significantly reduces blocking artifacts. Multispectral images are three-dimensional data, in which two dimensions are spatial and one is spectral. As RGB images also consist of three bands, they can be seen as a special case of multispectral data. Consequently, many compression methods for visible images can also be applied to multispectral images. In [20], an end-to-end compression framework for multispectral images with an optimized residual unit is presented. It is also based on a CNN, and the default ResNet architecture adopted in the network was adjusted to better fit multispectral images. This algorithm has been shown to be effective and obtains a PSNR about 2 dB higher than that of JPEG2000. Even so, the methods mentioned above do not focus on the strong correlation between the spectral bands of multispectral images, because this correlation is less important for RGB images. For multispectral image compression, however, ignoring spectral correlation may lead to information loss after compression. Hence, in this paper, we propose a novel multispectral image compression method based on partitioned extraction of spectral–spatial features.

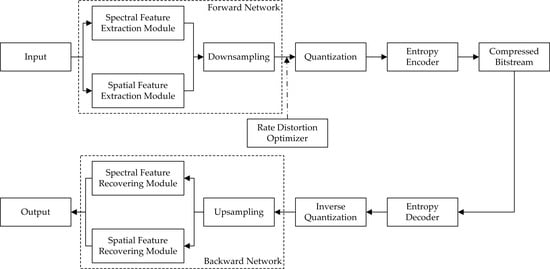

The network is an end-to-end framework based on a CNN and is composed of an encoder and a decoder. The encoder has two parts, for spectral feature extraction and spatial feature extraction, respectively. In the first part, consecutive spectral feature extraction modules extract spectral features independently, without involving any fusion of spatial information. The second part performs spatial feature extraction and contains several residual blocks. We use group convolution to separate the channels, so that only spatial features are extracted and no spectral information is mixed into them. Afterwards, all features are fused together and downsampling is employed to reduce the size of the feature map. Additionally, to make the data more compact, a rate-distortion optimizer is used in the network. After the intermediate feature data are obtained, quantization and lossless entropy encoding are carried out to produce the compressed binary bit stream. In the decoder, the bit stream first goes through entropy decoding and inverse quantization, and then upsampling restores the image size. Finally, spectral and spatial features are recovered by the corresponding deconvolution operations, and the joint feature is used to reconstruct the image. Experimental results demonstrate that our network surpasses JPEG2000 and 3D-SPIHT.

The remainder of this paper is organized as follows. Section 2 introduces the proposed network framework and its principles, Section 3 describes the experimental parameter settings and the training process, and Section 4 presents the results and a comparison with JPEG2000, 3D-SPIHT and the method in [20] at the same bit rate, which demonstrates the performance of our network.

2. Proposed Method

In this section, we introduce the proposed multispectral image compression network framework in detail and describe the training flow diagram. We elaborate on several key operations, such as the spectral feature extraction module, the spatial feature extraction module, and the rate-distortion optimizer.

2.1. Spectral Feature Extraction Module

2D convolution has shown great promise and has been successfully applied to many aspects of image vision and processing, such as target detection, image classification and image compression. However, multispectral images are three-dimensional and therefore more complex, and their rich spectral information is especially important, so information loss is inevitable when 2D convolution is used to process them. Deep learning has already been applied to multispectral image compression many times, with performance exceeding that of some traditional compression methods such as JPEG and JPEG2000. In the feature extraction process, however, the convolution kernel is two-dimensional, so the spectral redundancy in the third dimension cannot be removed effectively, which limits the performance of the network.

To deal with this problem, we extract spectral and spatial features separately. The idea for extracting spectral features derives from [21], which uses three-dimensional kernels for the convolution operation and can thus maintain the integrity of the spectral features in multispectral image data. To avoid the data volume becoming too large, we use a $1 \times 1 \times R$ convolution kernel, where $R$ is the kernel size in the spectral dimension. We call this operation 1D spectral convolution, and it extracts spectral features independently. Figure 1 shows the differences between 2D convolution and 1D spectral convolution.

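As shown in Figure 1a, the image is convolved by 2D convolution, whose kernel is two-dimensional, generally followed by an activation function such as the rectified linear unit (ReLU) [14] or the parametric rectified linear unit (PReLU) [22]. This operation can be expressed as follows:

$$v_{i,j}^{x,y} = f\left( \sum_{m=1}^{M} \sum_{p=0}^{P-1} \sum_{q=0}^{Q-1} w_{i,j,m}^{p,q} \, v_{i-1,m}^{x+p,\,y+q} + b_{i,j} \right) \tag{1}$$

where $i$ indicates the current layer, $j$ indicates the current feature map of this layer, $v_{i,j}^{x,y}$ is the output value at $(x, y)$ of the $j$-th feature map in the $i$-th layer, $f(\cdot)$ represents the activation function, $w_{i,j,m}^{p,q}$ denotes the weight of the convolution kernel at position $(p, q)$ connected to the $m$-th feature map ($m$ indexes over the set of feature maps in the $(i-1)$-th layer connected to the current feature map), $b_{i,j}$ is the bias of the $j$-th feature map in the $i$-th layer, $M$ is the number of feature maps in the $(i-1)$-th layer, and $P$ and $Q$ are the height and width of the convolution kernel, respectively.

Similarly, considering the dimension of the spectrum, 1D spectral convolution operated on 3D images can be formulated as follows:

$$v_{i,j}^{x,y,z} = f\left( \sum_{m=1}^{M} \sum_{p=0}^{P-1} \sum_{q=0}^{Q-1} \sum_{r=0}^{R-1} w_{i,j,m}^{p,q,r} \, v_{i-1,m}^{x+p,\,y+q,\,z+r} + b_{i,j} \right) \tag{2}$$

where $R$ is the size of the convolution kernel in the spectral dimension, $v_{i,j}^{x,y,z}$ is the output value at $(x, y, z)$ of the $j$-th feature map in the $i$-th layer, and $w_{i,j,m}^{p,q,r}$ is the weight of the kernel at position $(p, q, r)$ connected to the $m$-th feature map. As the size of the kernel is $1 \times 1 \times R$, that is, the kernel height $P$ and width $Q$ are set to 1, Equation (2) can be written as:

$$v_{i,j}^{x,y,z} = f\left( \sum_{m=1}^{M} \sum_{r=0}^{R-1} w_{i,j,m}^{r} \, v_{i-1,m}^{x,\,y,\,z+r} + b_{i,j} \right) \tag{3}$$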

For the activation function, we adopt ReLU as our first choice. Its gradient is constant for positive inputs during back propagation, which alleviates the vanishing gradient problem in deep network training and contributes to network convergence. Additionally, ReLU is much cheaper to compute than other functions (e.g., sigmoid). ReLU also sets the output of some neurons to zero, which makes the network sparse and alleviates the overfitting problem. The ReLU function can be formulated as below:

$$f(x) = \max(0, x) \tag{4}$$

In summary, when 2D convolution is operated on three-dimensional images, the output is always two-dimensional, which may cause a large amount of spectral information loss. Therefore, we adopt 1D spectral convolution to retain more feature data of the multispectral image.
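The behavior of 1D spectral convolution can be sketched in a few lines of plain Python. The example below is only an illustration under stated assumptions: it handles a single input and a single output feature map, uses "valid" boundaries and stride 1, and stores the data as `volume[x][y][z]` with `z` as the band index. The names `spectral_conv1d` and `diff_kernel` and the toy values are hypothetical.

```python
def relu(value):
    """Rectified linear unit: negative responses are set to zero."""
    return max(0.0, value)


def spectral_conv1d(volume, kernel, bias=0.0):
    """Slide a 1 x 1 x R kernel along the band axis of volume[x][y][z].

    Each pixel's spectrum is filtered on its own, so no spatial neighbors
    are mixed in and the spatial size of the output is unchanged.
    """
    r_len = len(kernel)
    output = []
    for row in volume:
        out_row = []
        for spectrum in row:
            n_out = len(spectrum) - r_len + 1
            out_row.append([
                relu(sum(kernel[r] * spectrum[z + r] for r in range(r_len)) + bias)
                for z in range(n_out)
            ])
        output.append(out_row)
    return output


# Toy cube: 2 x 2 pixels with 4 bands each (hypothetical values).
volume = [
    [[1.0, 2.0, 4.0, 7.0], [0.0, 0.0, 0.0, 0.0]],
    [[5.0, 5.0, 5.0, 5.0], [3.0, 2.0, 1.0, 0.0]],
]
diff_kernel = [-1.0, 1.0]  # responds to band-to-band increases
features = spectral_conv1d(volume, diff_kernel)
# features[0][0] == [1.0, 2.0, 3.0]; the output is still a 2 x 2 grid of spectra
```

The output keeps a spectral axis (here of length 3), in contrast to a 2D convolution that sums over all bands and yields a single two-dimensional map per kernel.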

2.2. Spatial Feature Extraction Module

In order to ensure the spatial information does not mingle with the spectral features, we use group convolution instead of normal 2D convolution in spatial dimensions. Group convolution first appeared in AlexNet, in order to solve the problem of limited hardware resources at that time. Feature maps were distributed to several GPUs for simultaneous processing, and finally concatenated together.

Figure 2 shows the differences between normal convolution and group convolution. As shown in Figure 2a, the size of the input data is $C \times W \times H$, representing the number of channels, width, and height of the feature map, respectively. The size of the convolution kernel is $C \times K \times K$, and the number of kernels is $N$. At this point, the size of the output feature map is $N \times W' \times H'$, where $W'$ and $H'$ are the output width and height. The number of parameters of the $N$ convolution kernels is:

$$N \times C \times K \times K \tag{5}$$

In group convolution, as its name implies, the input feature maps are divided into several groups and then convolved separately. Assume that the size of the input is still $C \times W \times H$ and that the number of output feature maps is $N$. If the input is divided into $G$ groups, the number of input feature maps in each group is $C/G$, the number of output feature maps in each group is $N/G$, and the size of each convolution kernel is $\frac{C}{G} \times K \times K$. That is, the total number of convolution kernels remains unchanged, and the number of kernels in each group is $N/G$. Since the feature maps are only convolved by the convolution kernels of the same group, the total number of parameters can be calculated as:

$$N \times \frac{C}{G} \times K \times K \tag{6}$$

Comparing Equations (5) and (6) shows that group convolution greatly reduces the number of parameters, precisely to $\frac{1}{G}$ of the original number. Moreover, as group convolution can increase the diagonal correlation between filters according to [14], filter relationships become sparse after grouping.
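The parameter saving can be checked numerically. The sketch below counts the weights of a convolution layer with $N$ kernels of spatial size $K \times K$ over $C$ input channels split into $G$ groups; these symbol names, the function name `conv_param_count` and the example sizes are choices made for this illustration, and bias terms are ignored.

```python
def conv_param_count(in_channels, out_channels, kernel_size, groups=1):
    """Number of weights in a (group) convolution layer, biases excluded.

    Each of the out_channels kernels only sees in_channels // groups
    input feature maps, so the count shrinks by a factor of `groups`.
    """
    if in_channels % groups or out_channels % groups:
        raise ValueError("channel counts must be divisible by groups")
    return out_channels * (in_channels // groups) * kernel_size * kernel_size


standard = conv_param_count(8, 8, 3)            # 8 * 8 * 3 * 3 = 576
grouped = conv_param_count(8, 8, 3, groups=8)   # 8 * 1 * 3 * 3 = 72
```

With eight groups the weight count drops from 576 to 72, that is, to one eighth of the standard convolution.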

Figure 3 shows the correlation matrix between filters of adjacent layers [23]. Highly correlated filters are brighter, while less correlated filters are darker. The role of filter groups, namely group convolution, is to take advantage of the block-diagonal sparsity to learn information about the channel dimension. Weakly correlated filters do not need to be learned; that is to say, they do not need to be given parameters. What is more, as seen in Figure 3, the highly correlated filters can be trained in a more structured way when using group convolution. Therefore, with structured sparsity, group convolution can not only reduce the number of parameters, but also learn more accurately, making the network more efficient.
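The separation of channels achieved by group convolution can be illustrated with the limiting case in which the number of groups equals the number of bands, so that every band is filtered only by its own kernel. The following plain-Python sketch assumes "valid" boundaries, stride 1 and the cross-correlation form customary in CNNs; the function names, the 2 x 2 box kernel and the toy bands are hypothetical.

```python
def conv2d_valid(plane, kernel):
    """2D cross-correlation of one band with one kernel ("valid" boundaries)."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(plane) - k_h + 1
    out_w = len(plane[0]) - k_w + 1
    return [[sum(kernel[p][q] * plane[x + p][y + q]
                 for p in range(k_h) for q in range(k_w))
             for y in range(out_w)]
            for x in range(out_h)]


def channelwise_group_conv(bands, kernels):
    """Group convolution with groups == number of bands: band c meets only kernel c."""
    if len(bands) != len(kernels):
        raise ValueError("one kernel per band is required")
    return [conv2d_valid(band, kernel) for band, kernel in zip(bands, kernels)]


band_a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
band_b = [[9, 9, 9], [9, 9, 9], [9, 9, 9]]
zeros = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
box = [[1, 1], [1, 1]]

out = channelwise_group_conv([band_a, band_b], [box, box])
out_changed = channelwise_group_conv([band_a, zeros], [box, box])
# out[0] == [[12, 16], [24, 28]] in both cases: band_b never influences band_a
```

Replacing the second band leaves the first output map untouched, which is the property used here to extract spatial features without mixing spectral information.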

2.3. Framework of the Proposed Network

The whole framework of the proposed compression network is illustrated in Figure 4. The multispectral images are first fed into the forward network. After feature extraction, the data are compressed and converted to a bit stream through quantization and entropy encoding in succession. The structure of the decoder is symmetrical to that of the encoder. Accordingly, for decoding, the bit stream goes through entropy decoding, inverse quantization, and the backward network in turn to restore the images. The detailed architecture of the forward and backward networks is described in Section 2.3.1.

2.3.1. The Forward Network and the Backward Network

The architecture of the forward and backward networks is shown in Figure 5, and the spectral block and the spatial block are shown in Figure 6. Figure 5 illustrates the detailed process of our network. First of all, the input multispectral images are fed simultaneously into the spectral feature extraction network and the spatial feature extraction network, which consist of the corresponding function modules. In the spectral part, there are several spectral blocks (Figure 6a), which are based on the residual block structure. We replace the convolution layers with 1D spectral convolution to suit our purpose, and the size of the kernel is

. Likewise, the spatial part is composed of several spatial blocks with a similar structure, as shown in Figure 6b, and group convolution is used so that the channels do not interact with each other. To be specific, GROUP is set to 7 or 8, as the input multispectral images have seven or eight bands. Additionally, some convolution layers are added to enhance the ability to learn features, whose kernel size is

. After extraction, the two parts of the features are fused together, and downsampling is then carried out to reduce the size of the feature maps. At the end of the forward network, the sigmoid function limits the value range of the intermediate output. In addition, like ReLU, it introduces nonlinearity, which makes the network more expressive.

Symmetric to the forward network, the backward network consists of upsampling layers, some convolution layers, and the partitioned extraction part. In particular, upsampling is implemented with PixelShuffle [24], which turns low-resolution images into high-resolution images using a sub-pixel operation.
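The sub-pixel rearrangement behind PixelShuffle can be sketched in plain Python. The example assumes the common channel ordering in which input channel $c r^2 + i r + j$ supplies output pixel $(h r + i, w r + j)$ of output channel $c$ for upscale factor $r$ (the convention used, for instance, by PyTorch's `torch.nn.PixelShuffle`). The function below is an illustrative re-implementation with hypothetical names, not the layer used in the network.

```python
def pixel_shuffle(feature_maps, upscale):
    """Rearrange (C * r^2, H, W) feature maps into (C, H * r, W * r)."""
    r2 = upscale * upscale
    if len(feature_maps) % r2:
        raise ValueError("channel count must be divisible by upscale ** 2")
    out_channels = len(feature_maps) // r2
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    out = [[[0] * (width * upscale) for _ in range(height * upscale)]
           for _ in range(out_channels)]
    for c in range(out_channels):
        for i in range(upscale):
            for j in range(upscale):
                # Each input channel fills one sub-pixel position of the output grid.
                source = feature_maps[c * r2 + i * upscale + j]
                for h in range(height):
                    for w in range(width):
                        out[c][h * upscale + i][w * upscale + j] = source[h][w]
    return out


low_res = [[[1]], [[2]], [[3]], [[4]]]   # 4 channels of size 1 x 1
high_res = pixel_shuffle(low_res, 2)     # 1 channel of size 2 x 2: [[[1, 2], [3, 4]]]
```

No values are interpolated: the operation only moves channel data into spatial positions, so the preceding convolution layers learn the upsampling filters.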

2.3.2. Quantization and Entropy Coding

After the forward network, the intermediate data are first quantized into a succession of discrete integers by the quantizer. Since gradient descent is used in backward propagation to update the parameters when training the network, the gradient needs to be passed down. However, the rounding function is not differentiable [25], which hinders the optimization of the network. Therefore, we relax the function, and it is calculated as:

where

is the quantization level,

is the intermediate datum after sigmoid activation,

is the rounding function, and

is the quantized data. The function rounds the data in the forward network and is skipped during backward propagation, to pass the gradient directly to the previous layer.

Then, we adopt ZPAQ as the lossless entropy coder and select “Method-6” as the compression mode to further process the quantized data and generate the binary bit stream. In the decoder, the bit stream goes through the entropy decoder and de-quantization, and the data are finally fed into the backward network to recover the image.
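The relaxed quantizer described in this subsection, which rounds in the forward pass and is skipped in the backward pass, can be sketched as follows. This is a minimal illustration, not the network's implementation: the scaling factor $2^Q - 1$ that maps sigmoid outputs in $(0, 1)$ to integer levels is an assumption of this sketch, and the function names `quantize_forward`, `quantize_backward` and `dequantize` are hypothetical.

```python
import math


def quantize_forward(x_sigmoid, q_bits):
    """Forward pass: scale sigmoid outputs and round to the nearest integer level."""
    scale = (1 << q_bits) - 1  # ASSUMPTION: 2**Q - 1 levels above zero
    return [math.floor(scale * x + 0.5) for x in x_sigmoid]


def quantize_backward(grad_output):
    """Backward pass: rounding is skipped, so the gradient is passed on unchanged."""
    return list(grad_output)


def dequantize(x_quantized, q_bits):
    """Inverse quantization in the decoder under the same assumed scaling."""
    scale = (1 << q_bits) - 1
    return [q / scale for q in x_quantized]


codes = quantize_forward([0.0, 0.26, 0.5, 1.0], q_bits=2)   # [0, 1, 2, 3]
grads = quantize_backward([0.1, -0.2])                      # [0.1, -0.2]
```

Treating the quantizer as the identity in the backward pass keeps the whole network trainable by gradient descent even though rounding itself has zero gradient almost everywhere.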

2.4. Rate-Distortion Optimizer

There are two criteria for evaluating a compression method: one is the bit rate, and the other is the quality of the recovered image. To enhance the performance of the network, it is vital to strike a balance between these two criteria. Consequently, rate-distortion optimization is introduced:

$$L = L_D + \lambda L_R \tag{8}$$

where $L$ is the loss function that should be minimized during training, $L_D$ indicates the distortion loss, and $L_R$ represents the rate loss, which can be controlled by the penalty $\lambda$. As we use MSE to measure the distortion loss of the recovered image, $L_D$ can be expressed as follows:

$$L_D = \frac{1}{N} \sum_{n=1}^{N} \frac{1}{H W C} \left\| X_n - \hat{X}_n \right\|_2^2 \tag{9}$$

where $N$ denotes the batch size, $X_n$ represents the $n$-th original multispectral image in the batch and $\hat{X}_n$ is the corresponding recovered image, and $H$, $W$ and $C$ are, respectively, the height, width, and number of spectral bands of the image.
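As a small worked example of the rate-distortion trade-off, the sketch below computes an MSE distortion over a batch and combines it with a given rate term through a penalty weight. It assumes that images are stored as nested lists indexed `[height][width][band]` and that all images in the batch have the same size; the function names and the numeric values are hypothetical, and the rate value is simply passed in rather than estimated.

```python
def mse_distortion(originals, recovered):
    """Mean squared error over all pixels and bands of a batch of images."""
    total, count = 0.0, 0
    for image, rec_image in zip(originals, recovered):
        for row, rec_row in zip(image, rec_image):
            for pixel, rec_pixel in zip(row, rec_row):
                for value, rec_value in zip(pixel, rec_pixel):
                    total += (value - rec_value) ** 2
                    count += 1
    return total / count


def rate_distortion_loss(distortion, rate, penalty):
    """Joint loss: distortion plus the rate term weighted by the penalty."""
    return distortion + penalty * rate


# Batch of one image with a single pixel and two bands (toy values).
originals = [[[[1.0, 2.0]]]]
recovered = [[[[1.0, 4.0]]]]

distortion = mse_distortion(originals, recovered)                 # (0 + 4) / 2 = 2.0
loss = rate_distortion_loss(distortion, rate=0.5, penalty=0.5)    # 2.0 + 0.25 = 2.25
```

A larger penalty pushes training toward lower bit rates at the cost of higher distortion, and a smaller penalty does the opposite.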

In order to estimate the rate loss, we adopt an Importance-Net to replace the entropy computation with a continuous approximation of the code length. The importance network is used to generate an importance map

learning from the input images [26]. The intention is to assign the bit rate according to the importance of the image content: more bits are assigned to complex regions, and fewer bits are assigned to smooth regions. The importance-net is simply composed of four layers, two

convolution layers and a residual block that consists of two

convolution layers, as shown in Figure 7.

The activation function used in the importance-net is Mish [27], which has been shown to be smoother than ReLU and to achieve better results. Nonetheless, considering the time cost and limited hardware conditions, as Mish is more complex to compute, we adopt Mish only in the importance-net rather than in the whole network. Mish can be formulated as:

$$\mathrm{Mish}(x) = x \cdot \tanh\left(\ln\left(1 + e^{x}\right)\right) \tag{10}$$
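For reference, Mish is the product of the input and the hyperbolic tangent of its softplus, and it can be evaluated with the standard library alone. The sketch below uses a numerically stable softplus; the helper names are chosen for this example, and ReLU is included only for comparison.

```python
import math


def softplus(x):
    """Numerically stable ln(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x):
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


def relu(x):
    """ReLU, shown for comparison."""
    return max(0.0, x)


samples = [-1.0, 0.0, 20.0]
mish_values = [mish(x) for x in samples]
relu_values = [relu(x) for x in samples]
```

Unlike ReLU, Mish lets small negative inputs through with a small negative output and has no kink at zero, at the price of evaluating an exponential, a logarithm and a hyperbolic tangent per activation.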

After sigmoid activation, the value range of the output is $(0, 1)$. The importance map can be described as below:

where

represents the un-quantized output after the encoder,

indicates the weight of the importance-net,

is the spatial location of the pixel, and

,

and

are the bias, size of the kernel and stride, respectively. Unlike [26], we use the mean of

instead of the sum to define the rate loss:

where

is the number of the intermediate channel.
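The difference between a mean-based and a sum-based rate term can be seen on a toy importance map. The sketch below assumes the map is stored as nested lists indexed `[channel][row][column]` with values in $(0, 1)$; the function names and values are hypothetical.

```python
def rate_loss_mean(importance_map):
    """Rate term defined as the mean of all importance-map entries."""
    values = [v for channel in importance_map for row in channel for v in row]
    return sum(values) / len(values)


def rate_loss_sum(importance_map):
    """Sum-based alternative, shown only for comparison."""
    return sum(v for channel in importance_map for row in channel for v in row)


small_map = [[[0.25, 0.75], [0.5, 0.5]]]   # 1 channel, 2 x 2
large_map = small_map * 4                  # same statistics, 4 channels

mean_small, mean_large = rate_loss_mean(small_map), rate_loss_mean(large_map)
sum_small, sum_large = rate_loss_sum(small_map), rate_loss_sum(large_map)
```

The mean stays at 0.5 for both maps, while the sum grows from 2.0 to 8.0 with the number of entries, so a mean-based rate term keeps the same scale regardless of the size of the intermediate feature map.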