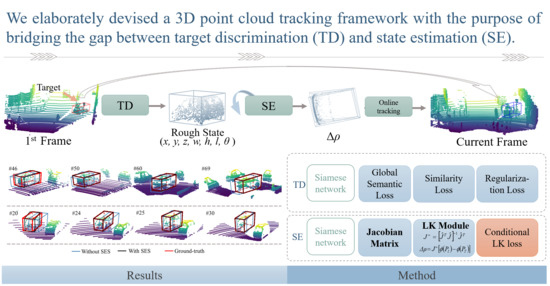

3.2. State Estimation Subnetwork

Our state estimation subnetwork (SES) is designed to learn the incremental warp parameters between the template, cropped from the first frame, and the candidate point clouds, so that it can accommodate the target's motion variations. We took inspiration from DeepLK [19] and extended it to the 3D point cloud tracking task. To describe the SES, we first briefly revisit the inverse compositional (IC) Lucas–Kanade (LK) algorithm [47] for 2D tracking.

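The IC formulation is efficient because it avoids recomputing the Jacobian on the warped source image at every iteration; instead, the gradients are evaluated once on the template. Given a template image $T$ and a source image $I$, IC-LK solves for the incremental warp parameters $\Delta\boldsymbol{\theta}$ on $T$ under a sum-of-squared-error criterion. Its objective function for one pixel $\mathbf{x}$ can therefore be formulated as

$$\min_{\Delta\boldsymbol{\theta}} \left\| T\big(W(\mathbf{x};\Delta\boldsymbol{\theta})\big) - I\big(W(\mathbf{x};\boldsymbol{\theta})\big) \right\|_2^2, \tag{1}$$
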
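where $\boldsymbol{\theta}$ are the currently known state parameters, $\Delta\boldsymbol{\theta}$ is the increment to be applied to them, $\mathbf{x}$ are the pixel coordinates, and $W$ is the warp function. More concretely, if one considers only location shift and scale, i.e., $\boldsymbol{\theta} = (u, v, s)^{\top}$, the warp function can be written as $W(\mathbf{x};\boldsymbol{\theta}) = (1 + s)\,\mathbf{x} + (u, v)^{\top}$. Using the first-order Taylor expansion at the identity warp $\Delta\boldsymbol{\theta} = \mathbf{0}$, Equation (1) can be rewritten as

$$\min_{\Delta\boldsymbol{\theta}} \left\| T\big(W(\mathbf{x};\mathbf{0})\big) + \nabla T\,\frac{\partial W(\mathbf{x};\mathbf{0})}{\partial \boldsymbol{\theta}^{\top}}\,\Delta\boldsymbol{\theta} - I\big(W(\mathbf{x};\boldsymbol{\theta})\big) \right\|_2^2, \tag{2}$$
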
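where $W(\mathbf{x};\mathbf{0}) = \mathbf{x}$ is the identity mapping and $\nabla T$ represents the image gradients. Let the Jacobian be $\mathbf{J} = \nabla T\,\frac{\partial W(\mathbf{x};\mathbf{0})}{\partial \boldsymbol{\theta}^{\top}}$. Stacking the residuals and the Jacobian rows over all template pixels and minimizing Equation (2) gives $\Delta\boldsymbol{\theta}$ in closed form:

$$\Delta\boldsymbol{\theta} = \mathbf{J}^{\dagger}\big[I\big(W(\mathbf{x};\boldsymbol{\theta})\big) - T(\mathbf{x})\big], \tag{3}$$

where $\mathbf{J}^{\dagger} = (\mathbf{J}^{\top}\mathbf{J})^{-1}\mathbf{J}^{\top}$. Because $\mathbf{J}$ depends only on the template, it is computed once and reused in every iteration.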
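
To make the linearization and the closed-form update concrete, the following NumPy sketch runs IC-LK on a toy 1D signal with a pure-translation warp $W(x; u) = x + u$, a simplification of the shift-and-scale warp above. The Gaussian template, the function names, and the test shifts are illustrative assumptions; the point is that $\nabla T$ and $\mathbf{J}^{\dagger}$ are computed once, outside the loop, and that the increment is composed inversely ($u \leftarrow u - \Delta u$).

```python
import numpy as np


def template(x):
    """Hypothetical 1D 'image': a Gaussian bump centred at 0."""
    return np.exp(-x**2 / (2.0 * 4.0**2))


def ic_lk_translation(T_vals, image_fn, x, u0=0.0, iters=50, tol=1e-10):
    """Estimate u such that image_fn(x + u) ≈ T(x) with inverse compositional LK.

    Warp: W(x; u) = x + u, so dW/du = 1 and J = ∇T (one row per sample).
    """
    grad_T = np.gradient(T_vals, x)      # ∇T, evaluated once on the template
    J = grad_T[:, None]                  # (n, 1) Jacobian stacked over samples
    J_pinv = np.linalg.pinv(J)           # (1, n), also precomputed once
    u = u0
    for _ in range(iters):
        residual = image_fn(x + u) - T_vals   # I(W(x; u)) - T(x)
        du = (J_pinv @ residual).item()       # Δu = J⁺ [I(W(x; u)) - T(x)]
        u -= du                               # W(x; u) ∘ W(x; Δu)^{-1}
        if abs(du) < tol:
            break
    return u


if __name__ == "__main__":
    x = np.linspace(-30.0, 30.0, 1201)
    image = lambda p: template(p - 3.0)       # source I(x) = T(x - 3)
    u_hat = ic_lk_translation(template(x), image, x)
    print(f"estimated shift: {u_hat:.6f}")
```

For a Gaussian template the first Gauss–Newton step already recovers most of the shift, and the remaining error shrinks rapidly in subsequent iterations.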

Compared with 2D visual tracking, 3D point cloud tracking has an unstructured data representation and a high-dimensional search space for the state parameters. Let $P_T$ denote the template point cloud and $P_S$ the source point cloud in the tracked frames, which is extracted by a bounding box with an inaccurate center and orientation. We fix the number of points in both $P_T$ and $P_S$ to $N$; when a cloud has fewer than $N$ points, we repeatedly sample from its existing points. In this work, we treat the deep network $\phi$ as a learnable “image” function: after $P_T$ and $P_S$ are transformed into the canonical coordinate system, $\phi$ maps each of them to a descriptor. In addition, we regard the rigid transformation $G$ between $P_T$ and $P_S$ as the “warp” function. In this way, we can apply the philosophy of IC-LK to the 3D point cloud tracking problem.

In practice, the target state is usually represented by a 3D bounding box parametrized by $\mathbf{b} = (x, y, z, w, l, h, \gamma)$ in the LiDAR system, as shown in Figure 2. Therein, $(x, y, z)$ is the target center coordinate, $(w, l, h)$ represents the target size, and $\gamma$ is the rotation angle around the y-axis. Because the target size remains almost unchanged in 3D space, it is sufficient to focus only on the state variations in the angle and along the x, y, and z axes. Consequently, the transformation $G$ is represented by four warp parameters $\boldsymbol{\theta} = (\alpha, t_x, t_y, t_z)^{\top}$, where $\alpha$ is the rotation about the y-axis and $(t_x, t_y, t_z)$ is the translation. More concretely, it can be simplified as follows:

$$G(\boldsymbol{\theta}) = \begin{bmatrix} \cos\alpha & 0 & \sin\alpha & t_x \\ 0 & 1 & 0 & t_y \\ -\sin\alpha & 0 & \cos\alpha & t_z \\ 0 & 0 & 0 & 1 \end{bmatrix}.$$
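
The four-parameter warp can be sketched in NumPy as follows: a rotation angle $\alpha$ about the y-axis plus a translation $(t_x, t_y, t_z)$, assembled into a $4 \times 4$ homogeneous matrix and applied to an $(N, 3)$ point cloud. The function names `warp_matrix` and `apply_warp` and the parameter order $(\alpha, t_x, t_y, t_z)$ are assumptions for illustration.

```python
import numpy as np


def warp_matrix(theta):
    """Build the 4x4 rigid transform G(θ) for θ = (alpha, t_x, t_y, t_z).

    alpha is a rotation about the y-axis and (t_x, t_y, t_z) a translation.
    """
    alpha, tx, ty, tz = theta
    c, s = np.cos(alpha), np.sin(alpha)
    return np.array([
        [c,   0.0, s,   tx],
        [0.0, 1.0, 0.0, ty],
        [-s,  0.0, c,   tz],
        [0.0, 0.0, 0.0, 1.0],
    ])


def apply_warp(G, points):
    """Apply G to an (N, 3) point cloud via homogeneous coordinates."""
    homo = np.hstack([points, np.ones((points.shape[0], 1))])  # (N, 4)
    return (homo @ G.T)[:, :3]


if __name__ == "__main__":
    G = warp_matrix((np.pi / 2, 1.0, 2.0, 3.0))
    print(apply_warp(G, np.array([[1.0, 0.0, 0.0]])))  # ≈ [[1. 2. 2.]]
```

Because the rotation is about the y-axis only, the y coordinate of every point is changed by the translation $t_y$ alone.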

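The state estimation problem in 3D tracking can now be transformed into finding $\boldsymbol{\theta}$ that satisfies $\phi(P_T) = \phi\big(G(\boldsymbol{\theta}) \cdot P_S\big)$, where $G(\boldsymbol{\theta}) \cdot P_S$ denotes the warp applied to the points of $P_S$ in homogeneous coordinates, with $G(\boldsymbol{\theta}) \in SE(3)$. Analogous to IC-LK in Equation (2), and noting that $P_S$ is already expressed in the canonical frame of its current (inaccurate) bounding box, the objective of state estimation can be written as

$$\min_{\Delta\boldsymbol{\theta}} \left\| \phi(P_T) + \frac{\partial \phi\big(G(\boldsymbol{\theta}) \cdot P_T\big)}{\partial \boldsymbol{\theta}^{\top}}\bigg|_{\boldsymbol{\theta}=\mathbf{0}} \Delta\boldsymbol{\theta} - \phi(P_S) \right\|_2^2.$$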

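Similar to Equation (2), we could solve for the incremental warp $\Delta\boldsymbol{\theta}$ with the Jacobian matrix $\mathbf{J} = \frac{\partial \phi(G(\boldsymbol{\theta}) \cdot P_T)}{\partial \boldsymbol{\theta}^{\top}}\big|_{\boldsymbol{\theta}=\mathbf{0}} \in \mathbb{R}^{K \times 4}$, where $K$ is the descriptor dimension. Unfortunately, this Jacobian matrix cannot be calculated in the classical image manner. The core obstacle is that the gradients along $x$, $y$, and $z$ cannot be computed on scattered point clouds, because the points lack connectivity or any other regular structure that convolution could exploit.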

We introduce two solutions to circumvent this problem. One direct solution is to approximate the Jacobian matrix by finite differences [48]. The $i$-th column of $\mathbf{J}$ can be computed as

$$\mathbf{J}_i = \frac{\phi\big(G_i \cdot P_T\big) - \phi(P_T)}{t_i}, \quad i = 1, \dots, 4,$$

where $t_i$ are infinitesimal perturbations of the warp parameters $\boldsymbol{\theta}$, and $G_i$ is a transformation involving only one of the warp parameters. (In other words, only the $i$-th warp parameter has a non-zero value $t_i$, i.e., $G_i = G(t_i\,\mathbf{e}_i)$ with $\mathbf{e}_i$ the $i$-th standard basis vector. Please refer to Appendix A for details.)
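
A minimal NumPy sketch of this finite-difference approximation follows. The helper names are hypothetical, the step size `t=1e-6` is an assumption, and a simple centroid stands in for the learned network $\phi$ so that the result can be checked by hand: the three translation columns should come out as the identity, and the rotation column should equal the derivative of the rotated centroid.

```python
import numpy as np


def warp_matrix(theta):
    """4x4 rigid transform for θ = (alpha, t_x, t_y, t_z), rotation about y."""
    alpha, tx, ty, tz = theta
    c, s = np.cos(alpha), np.sin(alpha)
    return np.array([[c, 0.0, s, tx], [0.0, 1.0, 0.0, ty],
                     [-s, 0.0, c, tz], [0.0, 0.0, 0.0, 1.0]])


def apply_warp(G, points):
    """Apply a 4x4 transform to an (N, 3) cloud via homogeneous coordinates."""
    homo = np.hstack([points, np.ones((len(points), 1))])
    return (homo @ G.T)[:, :3]


def finite_difference_jacobian(phi, P_T, t=1e-6):
    """Approximate J (K x 4) column by column: J_i = [phi(G_i·P_T) - phi(P_T)] / t_i.

    G_i perturbs only the i-th warp parameter (same step t for all i here).
    """
    base = phi(P_T)
    J = np.empty((base.shape[0], 4))
    for i in range(4):
        theta = np.zeros(4)
        theta[i] = t                     # only the i-th parameter is non-zero
        J[:, i] = (phi(apply_warp(warp_matrix(theta), P_T)) - base) / t
    return J


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    P_T = rng.uniform(-1.0, 1.0, size=(128, 3)) + np.array([0.5, 0.0, 2.0])
    centroid = lambda P: P.mean(axis=0)   # toy "descriptor" with K = 3
    print(np.round(finite_difference_jacobian(centroid, P_T), 4))
```

Each column costs one extra forward pass of $\phi$, so the approximation needs four additional passes over the template per frame.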

Alternatively, we treat the construction of $\mathbf{J}$ as a non-linear function $\mathcal{F}$ of the template descriptor, i.e., $\mathbf{J} = \mathcal{F}\big(\phi(P_T)\big)$, and learn it with a multi-layer perceptron consisting of three fully connected layers with ReLU activations (Figure 3). We report the comparison between the two solutions in Section 4.4.
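
The learned alternative can be sketched as a forward pass only. In the NumPy sketch below, the class name `JacobianMLP`, the hidden widths, the random initialization, and the choice to apply ReLU after the first two layers only are all assumptions; the essential idea is that three fully connected layers map a $K$-dimensional descriptor to $4K$ outputs, which are reshaped into a $K \times 4$ Jacobian.

```python
import numpy as np


class JacobianMLP:
    """Hypothetical 3-layer MLP mapping a K-dim descriptor to a K x 4 Jacobian.

    Widths and random weights are illustrative; in practice they would be
    learned end to end together with the rest of the SES.
    """

    def __init__(self, k, hidden=(256, 256), n_params=4, seed=0):
        rng = np.random.default_rng(seed)
        dims = [k, *hidden, k * n_params]
        self.weights = [rng.normal(0.0, np.sqrt(2.0 / d_in), size=(d_in, d_out))
                        for d_in, d_out in zip(dims[:-1], dims[1:])]
        self.biases = [np.zeros(d_out) for d_out in dims[1:]]
        self.k, self.n_params = k, n_params

    def __call__(self, descriptor):
        h = descriptor
        for idx, (W, b) in enumerate(zip(self.weights, self.biases)):
            h = h @ W + b
            if idx < len(self.weights) - 1:   # ReLU on the hidden layers only
                h = np.maximum(h, 0.0)
        return h.reshape(self.k, self.n_params)  # J = F(phi(P_T)), shape (K, 4)


if __name__ == "__main__":
    K = 128
    mlp = JacobianMLP(K)
    desc = np.random.default_rng(1).normal(size=K)  # stand-in for phi(P_T)
    print(mlp(desc).shape)  # (128, 4)
```

Unlike the finite-difference variant, this needs no extra forward passes of $\phi$, at the cost of having to learn $\mathcal{F}$.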

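Based on the above extension, we can solve for the incremental warp $\Delta\boldsymbol{\theta}$ of the 3D point cloud analogously to Equation (3):

$$\Delta\boldsymbol{\theta} = \mathbf{J}^{\dagger}\big[\phi(P_S) - \phi(P_T)\big],$$

where $\mathbf{J}^{\dagger}$ is the Moore–Penrose inverse of $\mathbf{J}$, which equals $(\mathbf{J}^{\top}\mathbf{J})^{-1}\mathbf{J}^{\top}$ when $\mathbf{J}$ has full column rank. Afterwards, the source point cloud cropped from the incoming frame can adjust its state by

$$\mathbf{b}_S \leftarrow \mathbf{b}_S \circ^{-1} \Delta\boldsymbol{\theta},$$

where $\circ^{-1}$ is the inverse compositional function and $\mathbf{b}_S$ is the state representation of the source point cloud $P_S$.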
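
Putting the pieces together, the following sketch runs the 3D IC-LK loop on a toy example: the Jacobian is approximated once from the template by finite differences, each iteration computes $\Delta\boldsymbol{\theta} = \mathbf{J}^{\dagger}[\phi(P_S) - \phi(P_T)]$, and the source is corrected by the inverse of the estimated warp. Everything here is an assumption for illustration: `make_phi` is a smooth random-feature stand-in for the trained network $\phi$ (not the SES backbone), the car-like point cloud and the ground-truth warp are synthetic, and converting the accumulated transform back into bounding-box parameters is omitted because it depends on coordinate conventions not specified here.

```python
import numpy as np


def warp_matrix(theta):
    """4x4 rigid transform for θ = (alpha, t_x, t_y, t_z); rotation about y."""
    alpha, tx, ty, tz = theta
    c, s = np.cos(alpha), np.sin(alpha)
    return np.array([[c, 0.0, s, tx], [0.0, 1.0, 0.0, ty],
                     [-s, 0.0, c, tz], [0.0, 0.0, 0.0, 1.0]])


def apply_warp(G, points):
    homo = np.hstack([points, np.ones((len(points), 1))])
    return (homo @ G.T)[:, :3]


def make_phi(k=32, seed=0):
    """Hypothetical smooth, permutation-invariant stand-in for the network phi."""
    rng = np.random.default_rng(seed)
    W, b = rng.normal(size=(3, k)), rng.normal(size=k)
    return lambda P: np.tanh(P @ W + b).mean(axis=0)   # (K,) descriptor


def fd_jacobian(phi, P_T, t=1e-6):
    base = phi(P_T)
    cols = []
    for i in range(4):
        theta = np.zeros(4)
        theta[i] = t                                    # perturb one parameter
        cols.append((phi(apply_warp(warp_matrix(theta), P_T)) - base) / t)
    return np.stack(cols, axis=1)                       # (K, 4)


def ic_lk_align(phi, P_T, P_S, iters=30, tol=1e-10):
    """Iterate Δθ = J⁺[phi(P_S) - phi(P_T)] and unwarp the source by G(Δθ)^-1.

    Returns the aligned source and the accumulated transform G_acc with
    P_S ≈ G_acc · P_T. J⁺ depends only on the template, so it is computed once.
    """
    J_pinv = np.linalg.pinv(fd_jacobian(phi, P_T))
    G_acc = np.eye(4)
    for _ in range(iters):
        d_theta = J_pinv @ (phi(P_S) - phi(P_T))
        G_d = warp_matrix(d_theta)
        P_S = apply_warp(np.linalg.inv(G_d), P_S)       # inverse compositional step
        G_acc = G_acc @ G_d
        if np.linalg.norm(d_theta) < tol:
            break
    return P_S, G_acc


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    # Car-like toy template in canonical coordinates (anisotropic on purpose).
    P_T = rng.uniform(-1.0, 1.0, size=(256, 3)) * np.array([0.9, 0.8, 2.0])
    G_true = warp_matrix((0.05, 0.10, -0.05, 0.08))
    aligned, G_est = ic_lk_align(make_phi(), P_T, apply_warp(G_true, P_T))
    print(np.abs(G_est - G_true).max())
```

Swapping `fd_jacobian` for a learned mapping, as in the MLP variant, would leave the rest of the loop unchanged.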

Network Architecture. Figure 3 summarizes the architecture of the state estimation subnetwork. Owing to the inherent complexity of state estimation, it is non-trivial to train a powerful estimator on the fly under the sole supervision of the first frame's point cloud. We hence train the SES offline to learn general properties for predicting the incremental warp. Since the warp is estimated between a template and a candidate, we naturally adopt a Siamese architecture to produce the incremental warp parameters. In particular, our network contains two branches sharing the same feature backbone, each of which consists of two blocks. As shown in Figure 3, Block-1 first generates the global descriptor. Then Block-2 consumes the aggregation of the global descriptor and the point-wise features to generate the final $K$-dimensional descriptor, from which the Jacobian matrix $\mathbf{J}$ can be calculated. Finally, the LK module jointly considers $\mathbf{J}$, $\phi(P_T)$, and $\phi(P_S)$ to predict $\Delta\boldsymbol{\theta}$. Notably, this module theoretically provides the fusion strategy between the two features produced by the Siamese network, namely, $\mathbf{J}^{\dagger}\big[\phi(P_S) - \phi(P_T)\big]$, with $\mathbf{J}^{\dagger}$ the pseudo-inverse of $\mathbf{J}$. Moreover, we adopt the conditional LK loss [19] to train this subnetwork end to end. It is formulated as

$$\mathcal{L}_{SES} = \frac{1}{M}\sum_{m=1}^{M} \ell\big(\Delta\boldsymbol{\theta}_m, \Delta\boldsymbol{\theta}_m^{gt}\big),$$

where $\Delta\boldsymbol{\theta}_m^{gt}$ is the ground-truth warp parameter, $\ell$ is the smooth $L_1$ function [49], and $M$ is the number of paired point clouds in a mini-batch. This loss can be back-propagated to update the network once the derivative of the batched matrix inverse is implemented.
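
For reference, the value of this loss can be computed as in the NumPy sketch below. The threshold `beta=1.0` and the choice to sum the element-wise smooth $L_1$ over the four warp parameters before averaging over the $M$ pairs are assumptions; during training the same expression would be evaluated inside an autodiff framework so that gradients flow through the pseudo-inverse.

```python
import numpy as np


def smooth_l1(x, beta=1.0):
    """Element-wise smooth L1: 0.5 x^2 / beta if |x| < beta, else |x| - 0.5 beta."""
    ax = np.abs(x)
    return np.where(ax < beta, 0.5 * x**2 / beta, ax - 0.5 * beta)


def conditional_lk_loss(pred, gt, beta=1.0):
    """Mean over the M pairs of the smooth-L1 error summed over the 4 warp params.

    pred, gt: arrays of shape (M, 4) holding Δθ_m and Δθ_m^gt.
    """
    return smooth_l1(pred - gt, beta).sum(axis=1).mean()


if __name__ == "__main__":
    pred = np.array([[0.0, 0.0, 0.0, 0.0], [0.1, 0.2, 0.0, 0.0]])
    gt = np.array([[0.5, 2.0, 0.0, 0.0], [0.1, 0.2, 0.0, 0.0]])
    print(conditional_lk_loss(pred, gt))  # (0.125 + 1.5 + 0.0) / 2 = 0.8125
```

The quadratic region keeps gradients small for nearly correct warps, while the linear region limits the influence of large errors.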

3.3. Target Discrimination Subnetwork

In the 3D search space, efficiently determining whether the target is present is critical for an agent to conduct state estimation. Because the SES lacks discrimination ability, in this section we present a target discrimination subnetwork (TDS) that forms a strong alliance with the SES. It aims to distinguish the best candidate from distractors, thereby providing a rough target state. We leverage the matching-function-based method [2] to track the target. Generally, its model can be written as

$$f(P_T, P_S) = g\big(\psi(P_T), \psi(P_S)\big),$$

where $f$ is the confidence score function, $\psi$ is the feature extractor, and $g$ is a similarity metric. Under this framework, the candidate with the highest score is selected as the target.
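
With cosine similarity as the metric $g$ (the choice stated for the TDS below), scoring and selection reduce to a few lines. In this NumPy sketch the descriptors are hypothetical placeholders for the outputs of the feature extractor, and the function names are illustrative.

```python
import numpy as np


def cosine_scores(template_desc, candidate_descs, eps=1e-12):
    """g(psi(P_T), psi(P_S)) with g = cosine similarity, for every candidate.

    template_desc: (D,) descriptor; candidate_descs: (C, D) descriptors.
    """
    t = template_desc / (np.linalg.norm(template_desc) + eps)
    c = candidate_descs / (np.linalg.norm(candidate_descs, axis=1, keepdims=True) + eps)
    return c @ t                                   # (C,) confidence scores


def select_target(template_desc, candidate_descs):
    """Return the index of the highest-scoring candidate and all scores."""
    scores = cosine_scores(template_desc, candidate_descs)
    return int(np.argmax(scores)), scores


if __name__ == "__main__":
    template = np.array([1.0, 0.0, 1.0])
    candidates = np.array([[0.0, 1.0, 0.0],     # orthogonal distractor
                           [2.0, 0.1, 2.0],     # nearly parallel to the template
                           [-1.0, 0.0, -1.0]])  # opposite direction
    best, scores = select_target(template, candidates)
    print(best, np.round(scores, 3))
```

Because cosine similarity ignores descriptor magnitude, the score depends only on the direction of each descriptor in the latent space.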

In this work, to equip the model with global semantic information, we incorporate the intermediate features generated by the first block into the point-wise features and then pass them to the second block, as shown in Figure 4. Consequently, our TDS can project 3D partial shapes into a more discriminative latent space, which allows an agent to distinguish the target from distractors more accurately.

Network Architecture. We trained the TDS offline from scratch on the annotated KITTI dataset. Built on a Siamese network, the TDS takes paired point clouds as inputs and directly produces their similarity scores. Specifically, its feature extractor also consists of two blocks, as in the SES. As can be seen in Figure 4, Block-1 generates the global descriptor, and Block-2 aggregates the global descriptor with the point-wise features to generate a more discriminative descriptor. For the similarity metric $g$, we conservatively use the hand-crafted cosine similarity. Finally, the similarity loss, the global semantic loss, and the regularizing completion loss are combined to train this subnetwork, i.e.,

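$$\mathcal{L}_{TDS} = \mathcal{L}_{mse}(\hat{s}, s) + \mathcal{L}_{global} + \lambda\,\mathcal{L}_{comp}\big(\hat{P}_T\big),$$

where $\mathcal{L}_{mse}$ is the mean square error loss between the predicted similarity score $\hat{s}$ and the ground-truth score $s$, $\mathcal{L}_{global}$ denotes the global semantic loss, $\lambda$ is the balance factor, and $\mathcal{L}_{comp}$ is the completion loss for regularization [2], in which $\hat{P}_T$ represents each template point cloud predicted via the shape completion network [2].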