Cotton Stand Counting from Unmanned Aerial System Imagery Using MobileNet and CenterNet Deep Learning Models

Abstract

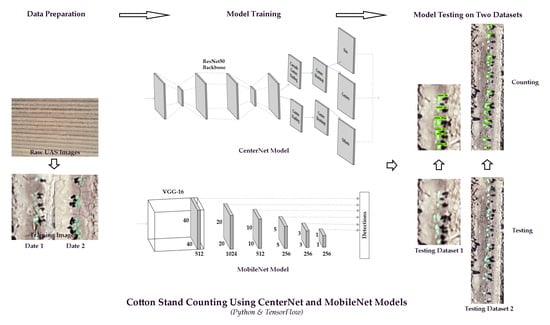

1. Introduction

2. Materials and Methods

2.1. Experimental Sites

2.2. UAS Image Acquisition

2.3. Training and Testing Images

2.4. MobileNet

2.5. CenterNet

2.6. Counting and Evaluations

3. Results

3.1. Model Validation

3.2. Model Evaluation in Stand Counting

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Number of Training Images | mAP (%) | AR (%) | F1-Score (%) |
|---|---|---|---|---|
| MobileNet | 400 | 67 | 39 | 63 |
| MobileNet | 900 | 86 | 72 | 81 |
| CenterNet | 400 | 71 | 48 | 75 |
| CenterNet | 900 | 79 | 73 | 87 |
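
As a reading aid for the F1-score column, the following is a minimal sketch of how precision, recall, and F1 are conventionally computed from detection counts at a single confidence threshold. The function name `detection_scores` and its arguments are hypothetical and are not taken from the article. The table's mAP and AR are averaged quantities, so this sketch is not expected to reproduce the tabulated values from those two columns.

```python
def detection_scores(true_positives, false_positives, false_negatives):
    """Return (precision, recall, f1) from raw detection counts.

    Hypothetical helper for illustration only; the counts would come from
    matching predicted boxes to labeled plants at one threshold.
    """
    predicted = true_positives + false_positives  # all boxes the model emitted
    actual = true_positives + false_negatives     # all labeled plants

    # Guard against empty denominators so the function never divides by zero.
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0

    # F1 is the harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    p, r, f1 = detection_scores(true_positives=6, false_positives=2, false_negatives=4)
    print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

Because F1 is a harmonic mean, it stays low whenever either precision or recall is low, which is why it is often reported alongside the averaged metrics.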

| Model | Testing Dataset | Number of Training Images | R² | RMSE | MAE | MAPE (%) |
|---|---|---|---|---|---|---|
| MobileNet | 1 | 400 | 0.86 | 0.89 | 0.54 | 0.26 |
| MobileNet | 1 | 900 | 0.96 | 0.64 | 0.33 | 0.11 |
| MobileNet | 2 | 400 | 0.48 | 7.81 | 7.48 | 7.83 |
| MobileNet | 2 | 900 | 0.87 | 3.66 | 6.22 | 5.61 |
| CenterNet | 1 | 400 | 0.89 | 0.58 | 0.25 | 0.10 |
| CenterNet | 1 | 900 | 0.98 | 0.37 | 0.27 | 0.07 |
| CenterNet | 2 | 400 | 0.60 | 6.08 | 8.03 | 6.57 |
| CenterNet | 2 | 900 | 0.86 | 3.94 | 5.39 | 4.73 |
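
The count-accuracy columns above (R², RMSE, MAE, MAPE) can be illustrated with a short sketch that compares observed and predicted plant counts. The function name `count_metrics` and the sample counts are hypothetical, not taken from the article. R² is computed here in its coefficient-of-determination form, which is an assumption: the article may instead report the squared correlation from a linear fit, and the two can differ.

```python
import math


def count_metrics(observed, predicted):
    """Return R2, RMSE, MAE, and MAPE (%) for paired plant counts.

    Hypothetical helper for illustration; assumes equal-length, non-empty
    inputs, no zero observed counts, and non-constant observed counts.
    """
    if len(observed) != len(predicted) or not observed:
        raise ValueError("need two non-empty sequences of equal length")

    n = len(observed)
    errors = [p - o for o, p in zip(observed, predicted)]
    mean_obs = sum(observed) / n

    ss_res = sum(e * e for e in errors)                  # residual sum of squares
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)  # total sum of squares

    r2 = 1.0 - ss_res / ss_tot            # assumption: 1 - SS_res / SS_tot
    rmse = math.sqrt(ss_res / n)          # root mean square error
    mae = sum(abs(e) for e in errors) / n # mean absolute error
    mape = 100.0 * sum(abs(e) / o for e, o in zip(errors, observed)) / n
    return {"r2": r2, "rmse": rmse, "mae": mae, "mape": mape}


if __name__ == "__main__":
    # Made-up counts per image, for demonstration only.
    observed = [10, 12, 8, 15]
    predicted = [9, 12, 9, 13]
    for name, value in count_metrics(observed, predicted).items():
        print(f"{name}: {value:.3f}")
```

RMSE and MAE are in plants per image, while MAPE is relative to the observed count, so the same absolute error weighs more heavily on images with few plants.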

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, Z.; Guo, W. Cotton Stand Counting from Unmanned Aerial System Imagery Using MobileNet and CenterNet Deep Learning Models. Remote Sens. 2021, 13, 2822. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13142822
