1. Introduction

Nowcasting convective precipitation, which refers to near-real-time prediction of rainfall intensity in a particular region, has long been a significant problem in weather forecasting because of its strong relation to agricultural and industrial production as well as daily life [1,2,3]. It can issue citywide rainfall alerts to avoid casualties, provide weather guidance for regional aviation to enhance flight safety, and predict road conditions to assist drivers [4]. Highly accurate and timely precipitation nowcasts enable early prevention of major catastrophes, so the core task is to improve prediction accuracy and to accelerate the prediction process [5,6]. Due to the inherent complexity of the atmosphere and the relevant dynamical processes, as well as a higher forecasting resolution requirement than other traditional forecasting tasks such as weekly average temperature prediction, precipitation nowcasting is quite challenging and has emerged as a hot research topic [7,8,9].

Methods for precipitation nowcasting can be divided into two categories [2]: methods based on numerical weather prediction (NWP) models and methods based on radar echo reflectivity extrapolation. NWP-based methods compute the prediction from large volumes of diverse meteorological data through a complex and meticulous simulation of the physical equations of the atmosphere [10]. However, the results are unsatisfactory if inappropriate initial states are set. For nowcasting purposes, NWP-based models are quite ineffective, especially when high resolution and large domains are needed. Moreover, NWP-based approaches do not take full advantage of the vast amount of existing historical observation data [11,12]. Since radar echo reflectivity maps can be converted to rainfall intensity maps through the Marshall–Palmer relationship, the Z (radar reflectivity)–R (precipitation intensity) relationship, and other methods [13], convective precipitation nowcasting can be accomplished by the faster and more accurate radar echo reflectivity extrapolation [9]. The radar echo reflectivity dataset in this paper is provided by the Guangdong Meteorological Bureau. Figure 1 shows an example of a radar echo reflectivity image acquired in South China, with the study area framed. This radar CAPPI (Constant Altitude Plan Position Indicator) reflectivity image is taken at an altitude of 3 km and covers a 300 km × 300 km area centered on Guangzhou City. It is obtained from seven S-band radars located at Guangzhou, Shenzhen, Shaoguan, etc. Radar echo reflectivity intensities are colored according to the class thresholds defined in Figure 1.

Traditional radar echo extrapolation methods include centroid tracking [13], tracking radar echoes by cross-correlation (TREC) [14], and the optical flow method [15,16,17]. The centroid tracking method is mainly suitable for tracking and short-term prediction of heavy rainfall with strong convection. TREC is one of the most classical radar echo tracking algorithms; it calculates the correlation coefficients of radar echoes at the previous few moments and obtains the echo displacement to predict future radar echo motion. Instead of computing the maximum correlation to obtain the motion vector as TREC does, the McGill Algorithm for Precipitation nowcasting using Lagrangian Extrapolation (MAPLE [18,19]) employs a variational method to minimize a cost function that defines the motion field, which then advects the radar echo images for nowcasting [20]. The major drawback of extrapolation-based nowcasting is that it is difficult to capture the growth and decay of the weather system and the displacement uncertainty [20]. To overcome this issue, blending techniques are applied to improve nowcasting systems, such as the Short-Term Ensemble Prediction System (STEPS [21]). In [20], a blending system is formed by synthesizing the wind information from the model forecast with the echo extrapolation motion field via a variational algorithm. The blending scheme performed especially well after a typhoon made landfall in Taiwan [20]. Moreover, nowcasting rainfall models based on the advection-diffusion equation with non-stationary motion vectors are proposed in [22] to obtain smoother rainfall predictions over the lead times and to increase skill scores. The motion vectors are updated at each time step by solving the two-dimensional (2-D) Burgers’ equations [22]. Optical flow-based methods have been proposed and are widely used by various observatories [17]. The well-known pysteps library [23] supplies many optical flow-based methods, such as extrapolation nowcasting using the Lucas–Kanade tracking approach and deterministic nowcasting with S-PROG (Spectral Prognosis) [21]. In the S-PROG nowcast method, the motion field is estimated using Lucas–Kanade optical flow and is then used to generate a deterministic nowcast with the S-PROG model, which applies a scale filtering approach to progressively remove the unpredictable spatial scales during the forecast [23]. Optical flow-based methods first estimate the movement of convective precipitation clouds from the observed radar echo maps and then predict the future radar echo maps using semi-Lagrangian advection [9]. However, two key assumptions limit their performance: (1) the total intensity remains constant; (2) the motion is very smooth and contains no rapid nonlinear changes [24,25]. In practice, the radar echo intensity may vary over time, and the motion is highly dynamic. Moreover, the radar echo extrapolation step is separated from the flow estimation step, so it is not easy to determine model parameters that yield good prediction performance. Furthermore, many optical flow-based methods do not make use of the abundant historical radar echo maps in the database and utilize only the given radar echo map sequence for prediction.
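The extrapolation pipeline described above (estimate motion from past echoes, then advect the latest echo forward) can be illustrated with the following minimal numpy sketch. It is not TREC, MAPLE, or pysteps itself. As simplifying assumptions, it estimates a single global motion vector by maximizing the correlation coefficient over integer shifts (TREC does this per box, and optical flow yields a dense field), uses a periodic boundary during the search, and advects with a constant vector by backward semi-Lagrangian bilinear interpolation. The function names `estimate_shift` and `advect` are hypothetical.

```python
import numpy as np


def estimate_shift(prev, curr, max_shift=5):
    """Global TREC-style motion estimate: the integer (dy, dx) that maximizes
    the correlation coefficient between `prev` shifted by (dy, dx) and `curr`.
    np.roll gives a periodic boundary, a simplification for this sketch."""
    best, best_r = (0, 0), -np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            shifted = np.roll(prev, (dy, dx), axis=(0, 1))
            r = np.corrcoef(shifted.ravel(), curr.ravel())[0, 1]
            if r > best_r:
                best, best_r = (dy, dx), r
    return best


def advect(field, dy, dx, steps):
    """Backward semi-Lagrangian advection with a constant motion vector:
    every output pixel takes the bilinearly interpolated value at its
    departure point (i - dy, j - dx); departure points off the grid give 0."""
    h, w = field.shape
    ii, jj = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    y, x = ii - dy, jj - dx
    y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
    wy, wx = y - y0, x - x0
    frames = []
    for _ in range(steps):
        out = np.zeros(field.shape)
        for oy, ox, wgt in ((0, 0, (1 - wy) * (1 - wx)), (0, 1, (1 - wy) * wx),
                            (1, 0, wy * (1 - wx)), (1, 1, wy * wx)):
            yy, xx = y0 + oy, x0 + ox
            valid = (yy >= 0) & (yy < h) & (xx >= 0) & (xx < w)
            out[valid] += wgt[valid] * field[yy[valid], xx[valid]]
        field = out  # the next step advects the previous forecast
        frames.append(field)
    return np.stack(frames)


# Toy example: a single echo pixel moves by (2, 3) pixels per frame.
prev = np.zeros((32, 32)); prev[10, 10] = 1.0
curr = np.zeros((32, 32)); curr[12, 13] = 1.0
dy, dx = estimate_shift(prev, curr)
nowcast = advect(curr, dy, dx, steps=2)  # two frames ahead
```

Because the advected field only moves, its intensity can never grow or decay, which is exactly the constant-intensity limitation noted above.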

With the improvement of computing power, machine learning algorithms have attracted great interest in radar echo extrapolation [26,27,28,29], which is essentially a spatiotemporal sequence forecasting problem [30,31]: sequences of past radar echo maps are the input, and future radar echo sequences are the output. Recurrent neural network (RNN) and especially long short-term memory (LSTM) encoder-decoder frameworks [32,33,34] have been proposed to capture sequential correlations and provide new solutions to the sequence-to-sequence prediction problem. Klein et al. [35] proposed a “dynamic convolutional layer” for extracting spatial features and forecasting rain and snow.

To capture the spatiotemporal dependency and predict radar echo image sequence, Shi et al. [

9] developed conventional LSTM and designed convolutional LSTM (ConvLSTM), which can capture the dynamic features among the image sequence. Additionally, they further proposed the trajectory gated recurrent unit (TrajGRU) model [

4], which is more flexible than ConvLSTM by the location-variant recurrent connection structure and outperforms on the grid-wise precipitation nowcasting task. Wang et al. [

36] presented a predictive recurrent neural network (PredRNN) and utilized a unified memory pool to memorize both spatial appearances and temporal variations. PredRNN++ [

37] further introduced a gradient unit module to capture the long-term memory dependence and achieved better performance than the previous TrajGRU and ConvLSTM methods. In addition, hybrid methods combine the effective information of observation data (radar echo data and other meteorological parameters) and numerical weather prediction (NWP) data to further enhance the prediction accuracy. A Unet-based model on the fusion of rainfall radar images and wind velocity produced by a weather forecast model is proposed in [

7] and improves the prediction for high precipitation rainfalls. Moreover, the dual-input dual-encoder network structures are also proposed to extract simulation-based and observation-based features for prediction [

38,

39]. The limitation of the existing deep learning models lies in the defect of the extracting ability of spatiotemporal characteristics. Moreover, detailed information loss in the long-term extrapolation often occurs, which leads to blurry prediction images [

40,

41,

42,

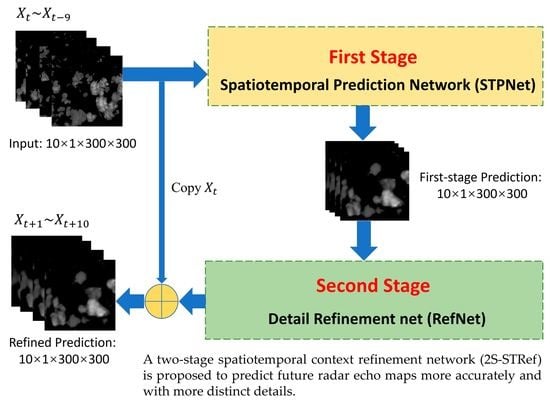

43]. To further enhance the prediction accuracy and preserve the sharp details of predicted radar echo maps, we propose a new model, the two-stage spatiotemporal context refinement network for precipitation nowcasting (2S-STRef), in this work. The proposed model generates first-stage prediction using a spatiotemporal predictive model, then it refines the first-stage results to obtain higher accuracy and more details. We use a real-world radar echo map dataset of South China to evaluate 2S-STRef, which outperforms the traditional optical flow method and two representative deep learning models (ConvLSTM as well as and PredRNN++) in both image and forecasting evaluation metrics.
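The defining change in ConvLSTM is that the input-to-state and state-to-state transitions of the LSTM become convolutions, so the hidden state keeps its spatial layout. The sketch below shows one cell step in numpy under stated assumptions: "same" zero padding, odd square kernels, and no peephole (Hadamard) terms, which the original ConvLSTM formulation includes. The helper names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_same(x, w):
    """'Same'-padded 2-D convolution (cross-correlation, as in deep-learning
    frameworks). x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k."""
    p = w.shape[-1] // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    win = sliding_window_view(xp, w.shape[-2:], axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("chwij,ocij->ohw", win, w)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def convlstm_step(x, h, c, wx, wh, b):
    """One ConvLSTM step without peephole terms.
    x: (C_in, H, W); h, c: (F, H, W); wx: (4F, C_in, k, k);
    wh: (4F, F, k, k); b: (4F, 1, 1). Gate order: input, forget, output, cell."""
    z = conv2d_same(x, wx) + conv2d_same(h, wh) + b  # input-to-state + state-to-state
    i, f, o, g = np.split(z, 4, axis=0)
    c_new = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
    h_new = sigmoid(o) * np.tanh(c_new)
    return h_new, c_new


rng = np.random.default_rng(0)
C_IN, F, KS, H, W = 1, 8, 3, 16, 16
wx = rng.normal(scale=0.1, size=(4 * F, C_IN, KS, KS))
wh = rng.normal(scale=0.1, size=(4 * F, F, KS, KS))
b = np.zeros((4 * F, 1, 1))
h = np.zeros((F, H, W))
c = np.zeros((F, H, W))
for frame in rng.random((5, C_IN, H, W)):  # toy 5-frame input sequence
    h, c = convlstm_step(frame, h, c, wx, wh, b)
```

Because every gate is computed by convolution with location-invariant filters, each pixel's update depends only on a fixed k × k neighbourhood; this is the limitation that the location-variant connections of TrajGRU address.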

The remainder of this paper is organized as follows. Section 2 introduces the problem statement and details the proposed 2S-STRef framework. Section 3 describes the dataset and the evaluation indices and presents the performance evaluation on real-world radar echo experiments. Section 4 concludes the paper.

2. Methods

Short-term precipitation prediction algorithms based on radar echo images first extrapolate a fixed-length sequence of future radar echo maps in a local region from the previously observed radar image sequence [44] and then obtain the precipitation prediction from the relationship between the echo reflectivity factor and rainfall intensity. In practical applications in China, weather radar echo maps are usually sampled every 6 or 12 min (5 min in some public datasets [23]), and forecasts are usually made for lead times from 5, 6, or 12 min up to 2 h [9,23]; in this work, 10 frames are predicted ahead (one frame every 12 min, i.e., up to 120 min). The reviewed spatiotemporal sequence prediction methods have a limited ability to extract spatiotemporal features, and fine-level information can be lost, which leads to unsatisfactory prediction accuracy. To achieve fine-grained spatiotemporal feature learning, this paper designs a two-stage spatiotemporal context refinement network.

The three main innovations of this work are as follows:

A new two-stage precipitation prediction framework is proposed. On top of a spatiotemporal sequence prediction model that captures the spatiotemporal sequence, a second stage is designed to refine its output.

An efficient and concise spatiotemporal sequence prediction model is constructed for the first stage; it learns spatiotemporal context information from past radar echo maps and outputs the predicted sequence of radar echo maps.

In the second stage, a new refinement network (RefNet) structure is proposed. The details of the output images are improved by multi-scale feature extraction and a fusion residual block. Instead of predicting the radar echo map directly, RefNet outputs the residual sequence for the last frame, which further improves the whole model’s ability to predict radar echo maps and enhances the details.

In this section, we formulate the precipitation nowcasting problem and present the two-stage prediction and refinement model.

2.1. Formulation of Prediction Problem

Weather radar is one of the best instruments for monitoring precipitation systems. The intensity of a radar echo is related to the size, shape, and state of the precipitation particles and to the number of particles per unit volume. Generally, the stronger the reflected signal, the stronger the precipitation. Therefore, the intensity and distribution of precipitation in a weather system can be judged from the radar echo map.

The rainfall rate (mm/h) can be calculated from the radar reflectivity using the Z–R relationship, an empirical power law of the form $Z = aR^{b}$, where Z is the radar reflectivity, R is the rain rate, and a and b are empirical constants.

Therefore, if we can predict the upcoming radar echo images from the previous frames, we can achieve precipitation nowcasting.
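As a concrete instance of the Z–R relationship, the sketch below converts reflectivity in dBZ (dBZ = 10 log10 Z) to rain rate by inverting Z = aR^b. The coefficients a = 200 and b = 1.6 are the classic Marshall–Palmer values and are an assumption here; the coefficients used for this dataset are not stated in this section.

```python
import numpy as np


def dbz_to_rain_rate(dbz, a=200.0, b=1.6):
    """Reflectivity (dBZ) -> rain rate R (mm/h) by inverting Z = a * R**b.
    Defaults are the Marshall-Palmer coefficients (an assumption here)."""
    z = 10.0 ** (np.asarray(dbz, dtype=float) / 10.0)  # dBZ -> Z in mm^6 m^-3
    return (z / a) ** (1.0 / b)


def rain_rate_to_dbz(rain, a=200.0, b=1.6):
    """Rain rate R (mm/h) -> reflectivity (dBZ); R must be positive."""
    return 10.0 * np.log10(a * np.asarray(rain, dtype=float) ** b)


rates = dbz_to_rain_rate([20.0, 30.0, 40.0, 50.0])  # rain rate for each dBZ level
```

With these coefficients, 1 mm/h corresponds to about 23 dBZ and 40 dBZ to roughly 11.5 mm/h; because the mapping is monotonic, predicting reflectivity accurately is equivalent to predicting rain rate accurately.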

In this work, the 2-D radar echo image at every timestamp is divided into tiled non-overlapping patches whose pixels are the measurements. Short-term precipitation nowcasting thus becomes a matter of capturing spatiotemporal features and extrapolating sequences of future radar echo images. The observation at time t can be represented as a tensor $X_t \in \mathbb{R}^{C \times H \times W}$, where $\mathbb{R}$ denotes the observed feature domain, $C$ is the number of channels of the feature maps, and $W$ and $H$ represent the width and height of the state and input tensors, respectively. $\hat{X}_t$ represents the predicted radar echo image at time t. The problem can therefore be described as Equation (1):

$$\hat{X}_{t+1},\ldots,\hat{X}_{t+K}=\underset{X_{t+1},\ldots,X_{t+K}}{\arg\max}\; p\left(X_{t+1},\ldots,X_{t+K}\mid X_{t-J+1},\ldots,X_{t}\right) \tag{1}$$

The main task is to predict the most likely length-K sequence of radar echo maps from the previous J observations, including the current one ($X_t$), and to make the predictions as close as possible to the real observations in the next time slots. In this paper, 10 frames are fed into the network and the next 10 frames are output, i.e., $J = K = 10$ in (1).
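The J-in, K-out formulation maps directly onto how training samples can be cut from a continuous radar sequence. The sketch below assumes a stride-1 sliding window (the sampling stride actually used is not stated) and also lists the lead times implied by the 12-min interval: output frame k corresponds to a lead time of 12k min, so the 10th frame is the 2 h forecast. The names are hypothetical.

```python
import numpy as np

J, K = 10, 10        # input and output frames, as in the text
INTERVAL_MIN = 12    # radar sampling interval in minutes


def make_samples(frames, j=J, k=K):
    """Cut a (T, H, W) sequence into (input, target) pairs with a stride-1
    sliding window: input = frames[s:s+j], target = frames[s+j:s+j+k]."""
    n = frames.shape[0] - j - k + 1
    if n < 1:
        raise ValueError("sequence shorter than j + k frames")
    inputs = np.stack([frames[s:s + j] for s in range(n)])
    targets = np.stack([frames[s + j:s + j + k] for s in range(n)])
    return inputs, targets


lead_times = INTERVAL_MIN * np.arange(1, K + 1)   # 12, 24, ..., 120 min
seq = np.random.default_rng(0).random((25, 4, 4))  # toy 25-frame sequence
inputs, targets = make_samples(seq)
```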

2.2. Network Structure

Figure 2 illustrates the overall architecture of 2S-STRef. The network framework is composed of two stages.

The first stage, named the spatiotemporal prediction network (STPNet), is an encoder-decoder structure based on a spatiotemporal recurrent neural network (ST-RNN), which is inspired by ConvLSTM [9] and TrajGRU [4] and has convolutional structures in both the input-to-state and state-to-state transitions. We feed the previous radar echo observation sequence into the encoder of STPNet to obtain n layers of RNN states and then use another n layers of RNNs to generate the future radar echo predictions based on the encoded states. This prediction is the first-stage, intermediate result.

The second stage proposed in this paper, named the detail refinement stage, acts as a deblurring network for the predicted radar echo images and also improves the extraction of spatial and temporal features for echo image details. We feed the first-stage prediction into the detail refinement stage to obtain a refined prediction, which is the final result, with improved radar echo image quality and higher precipitation prediction precision.

The two stages are introduced in detail in the following subsections.

2.2.1. First Stage: Spatiotemporal Prediction Net

Inspired by the models in [4,9,35], an end-to-end spatiotemporal prediction network (STPNet) based on the encoder-decoder framework is designed to compensate for the translation invariance of convolution when capturing spatiotemporal correlations. Because precipitation areas move and scale, the local correlation structure should change with the timestamp and spatial location. STPNet can effectively represent such location-variant connections; its structure is shown in the dotted box with a yellow background in Figure 2.

ST-RNN. Precipitation processes observed in radar echo maps naturally involve random rotation and dissipation. ConvLSTM applies location-invariant filters to the input in its convolution operations, which is therefore inefficient. We designed ST-RNN, which uses the current input and the state of the previous step to obtain a local neighborhood set for each location at each timestamp. A set of continuous flows is used to represent the discrete and non-differentiable location indices. The specific formula of ST-RNN is given in Equation (2):

In the formula, ‘*’ is the convolution operation and ‘∘’ is the Hadamard product. $\sigma$ is the sigmoid activation function. L is the number of links, and $U_t, V_t \in \mathbb{R}^{L \times H \times W}$ are the flow fields storing the local connections, whose generating network is $\gamma$. $\mathcal{W}^{l}$ refers to the weights for projecting the channels. The function $\mathrm{warp}(H_{t-1}, U_{t,l}, V_{t,l})$ is used to generate location information from $H_{t-1}$ by bilinear sampling [4]. We write $M = \mathrm{warp}(I, U, V)$, where $M, I \in \mathbb{R}^{C \times H \times W}$ and $U, V \in \mathbb{R}^{H \times W}$, which can be stated as Equation (3):

$$M_{c,i,j}=\sum_{m=1}^{H}\sum_{n=1}^{W} I_{c,m,n}\,\max\left(0,\,1-\left|i+V_{i,j}-m\right|\right)\max\left(0,\,1-\left|j+U_{i,j}-n\right|\right) \tag{3}$$

The connection topology is obtained from the parameters of the subnetwork $\gamma$, whose input is the concatenation of the current input $X_t$ and the previous state $H_{t-1}$. The subnetwork is a simple convolutional neural network and adds almost no computational cost.
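The warp in Equation (3) is ordinary bilinear sampling: only the four integer neighbours of the point (i + V_{i,j}, j + U_{i,j}) receive a non-zero kernel value. The numpy transcription below is a sketch with 0-based indices. In ST-RNN, U and V would be one link's slices of the flow fields produced by γ; here they are passed in directly, and the function name `warp` simply mirrors the text.

```python
import numpy as np


def warp(I, U, V):
    """Bilinear warp M = warp(I, U, V):
    M[c, i, j] = sum_{m,n} I[c, m, n] * max(0, 1 - |i + V[i,j] - m|)
                                      * max(0, 1 - |j + U[i,j] - n|).
    Only the four integer neighbours of (i + V, j + U) have a non-zero
    kernel value; neighbours outside the grid contribute 0."""
    C, H, W = I.shape
    ii, jj = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    y, x = ii + V, jj + U                          # sampling positions
    y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
    M = np.zeros_like(I, dtype=float)
    for dy in (0, 1):
        for dx in (0, 1):
            m, n = y0 + dy, x0 + dx
            wgt = np.maximum(0, 1 - np.abs(y - m)) * np.maximum(0, 1 - np.abs(x - n))
            valid = (m >= 0) & (m < H) & (n >= 0) & (n < W)
            M[:, valid] += wgt[valid] * I[:, m[valid], n[valid]]
    return M


rng = np.random.default_rng(1)
feat = rng.random((2, 5, 6))                   # stand-in for H_{t-1}
zero = np.zeros((5, 6))
shifted = warp(feat, zero, np.ones((5, 6)))    # V = 1: sample one row down
```

Because the kernel is piecewise linear in U and V, the warped output is differentiable with respect to the flow fields almost everywhere, which is what allows γ to be trained end to end.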

For our spatiotemporal sequence forecasting problem, ST-RNN is the key building block of the whole encoder-decoder network. This structure is similar to the predictor model in [4,9]. The encoder network (shown in Figure 3) compresses the recent radar echo observation sequence $X_{t-J+1},\ldots,X_t$ into n layers of ST-RNN states, and the decoder network (shown in Figure 4) unfolds these encoder states to generate the most likely length-K predictions $\hat{X}_{t+1},\ldots,\hat{X}_{t+K}$. Three-dimensional tensors are used as input and output elements to preserve spatial information.

Encoder. The encoder, shown in Figure 3, is formed by stacking Convolution, ST-RNN, Down Sampling, ST-RNN, Down Sampling, and ST-RNN layers. The input sequence of radar echo images first passes through the first convolution layer, which extracts the spatial features of each echo image and reduces the resolution; the output feature map size is 100 × 100. Then, the first ST-RNN layer extracts local spatiotemporal features of the echo images at a low scale and outputs its hidden state. At the same time, its output feature map is sent to the first downsampling layer to extract spatial features at a higher spatial scale, with a size of 50 × 50. The second ST-RNN layer extracts mesoscale spatial and temporal features and outputs its hidden state. The output of the second ST-RNN layer is passed to the second downsampling layer, whose output size is 25 × 25. This layer extracts spatial features at an even higher spatial scale and feeds the feature map to the last ST-RNN layer, which outputs the third hidden state. In this paper, three stacked ST-RNN layers are used [4]. Too few ST-RNN layers lack the representational power for spatiotemporal features, which degrades prediction accuracy, whereas too many layers make training more difficult and the network prone to overfitting.

Decoder. The decoder, shown in Figure 4, mirrors the encoder and consists of ST-RNN, Up Sampling, ST-RNN, Up Sampling, ST-RNN, Deconvolution, and Convolution layers. The order of the decoder network is reversed, so that the high-level states capturing the global spatiotemporal representation guide the update of the low-level states. When the decoder is initialized, its three ST-RNN layers receive the corresponding hidden states from the encoder. First, the third (highest-level) encoder hidden state is sent to the top-level ST-RNN, whose output is passed to the first upsampling layer to fill in details at a high scale; the output feature map size is now 50 × 50. Then, the middle ST-RNN layer makes the mesoscale prediction using the second encoder hidden state. Its output is sent to the second upsampling layer to fill in mesoscale details, giving a 100 × 100 feature map. The lowest-level ST-RNN layer receives the first encoder hidden state and the mesoscale feature map and makes the small-scale prediction. Finally, the deconvolution layer combines the features and fills in small-scale image details, and the first-stage radar echo prediction sequence is output.

Table 1 and Table 2 show the detailed structure settings of the encoder and decoder of our spatiotemporal RNN model. The kernel is a weight matrix that slides over the input data and computes a dot product with each sub-region of the input. The stride is the step size of the kernel as it slides over the image. L is the number of links in the state-to-state transition.
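The feature-map sizes quoted for the encoder (100 × 100 → 50 × 50 → 25 × 25) and decoder (25 × 25 → 50 × 50 → 100 × 100) follow from the standard output-size formulas for strided convolution and transposed convolution with dilation 1, the same formulas documented for PyTorch's `Conv2d` and `ConvTranspose2d`. The kernel, stride, and padding values in the example are hypothetical choices that reproduce those sizes, not the settings listed in Tables 1 and 2.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel, stride, padding, output_padding=0):
    """Spatial output size of a transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


# Hypothetical settings: 3x3 stride-2 downsampling, 4x4 stride-2 upsampling.
down = [100]
for _ in range(2):
    down.append(conv_out(down[-1], kernel=3, stride=2, padding=1))
up = [25]
for _ in range(2):
    up.append(deconv_out(up[-1], kernel=4, stride=2, padding=1))
```

With these choices each encoder step halves the resolution and each decoder step exactly doubles it, so the decoder's feature maps line up with the encoder's at every scale.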

2.2.2. Second Stage: Detail Refinement Net

Although the images predicted by the first-stage STPNet already have high prediction accuracy, the extrapolated radar echo images still appear blurred and lack details [9,45,46], as shown in Figure 5b. Therefore, the second-stage network (RefNet) is proposed to further extract spatiotemporal features and refine the predicted radar echo sequences.

The overall architecture of the proposed RefNet is shown in Figure 6. The details of the predicted radar echo images are improved by multi-scale feature extraction and fusion residual blocks. An encoder-decoder network implements high-frequency enhancement and serves as a feature selector that focuses on locations with fine textures. Meanwhile, multi-level skip connections between features at different scales enable feature sharing and reuse. Both local and global residual learning are integrated to preserve low-level features and reduce the difficulty of training. Different hierarchical features are combined to generate finer features, which favor the reconstruction of high-resolution images. The widely used stride r = 2 is chosen in the downsampling and upsampling layers. Finally, a 1 × 1 convolution layer at the end of RefNet outputs the residual sequence for the last frame. This operation further improves the whole model’s ability to predict the radar echo map sequence and enhances the details.

Three subnets are defined to build RefNet: RefNet-Basic (purple arrow in Figure 6), RefNet-Att (brown arrow in Figure 6), and RefNet-Down (red arrow in Figure 6). The blue arrow denotes the convolution operation. Among these modules, RefNet-Basic, shown in Figure 7a, is the basic building block of the other two. The RefNet-Basic module is composed of 3-D convolution layers of different depths. It generates and combines different hierarchical features that are useful for low-level feature learning [47]. Low-level features are edges, textures, and contours in images, whereas high-level features carry semantic information. Different hierarchical high-level features are fused to help reconstruct low-level features. Residual connections are employed for both local and global feature extraction, which makes multi-scale feature information flow more efficiently through the network and mitigates the difficulty of network training.

Intuitively, high-resolution feature maps contain more high-frequency details than low-resolution feature maps [48,49]. In each RefNet-Basic module, multiple local features are extracted in the encoding process and then reused in the decoding process. The fusion operation merges multi-level feature maps from different phases into the decoded feature maps. The fusion operation in Figure 6 and Figure 7 is performed using a shortcut connection and element-wise addition.

As shown in Figure 7b, RefNet-Att is a RefNet-Basic module followed by a channel-wise attention operation that preserves discriminative features and details to the greatest extent. In this work, the SE (squeeze-and-excitation) operation [50] is incorporated as an attention mechanism to learn spatiotemporal feature importance and produce importance weights for the input feature map sequences. As shown in Figure 7c, RefNet-Down is a RefNet-Basic module followed by a max-pooling operation that compresses the input feature map. The input feature maps are progressively downsampled into small-scale abstractions through successive RefNet-Down modules; specifically, RefNet-Down with stride r = 2 is used as the downsampling layer. In the expansive part, deconvolution layers with stride r = 2 upsample the obtained abstractions back to the previous resolution.
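The two RefNet operators named above can be stated compactly. The numpy sketch below shows an SE block (squeeze by global averaging, excitation by two fully connected layers with ReLU and sigmoid, then per-channel rescaling) applied to a (C, T, H, W) feature map, followed by 2 × 2 max pooling with stride r = 2. Averaging over the time axis as well as space and the reduction ratio of 4 are assumptions, and the random weights are stand-ins for learned parameters; the names are hypothetical.

```python
import numpy as np


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-excitation on a (C, T, H, W) feature map.
    Squeeze: mean over T, H, W -> (C,). Excite: FC + ReLU, FC + sigmoid.
    Scale: multiply each channel by its weight in (0, 1)."""
    s = x.mean(axis=(1, 2, 3))
    z = np.maximum(0.0, w1 @ s + b1)
    a = 1.0 / (1.0 + np.exp(-(w2 @ z + b2)))
    return x * a[:, None, None, None], a


def max_pool2x2(x):
    """2 x 2 spatial max pooling with stride 2 on (C, T, H, W); H, W even."""
    c, t, h, w = x.shape
    return x.reshape(c, t, h // 2, 2, w // 2, 2).max(axis=(3, 5))


rng = np.random.default_rng(0)
C, REDUCTION = 16, 4                       # hypothetical channel count and ratio
w1, b1 = rng.normal(scale=0.1, size=(C // REDUCTION, C)), np.zeros(C // REDUCTION)
w2, b2 = rng.normal(scale=0.1, size=(C, C // REDUCTION)), np.zeros(C)
feats = rng.random((C, 10, 8, 8))          # (channels, frames, height, width)
scaled, weights = se_block(feats, w1, b1, w2, b2)
pooled = max_pool2x2(scaled)
```

The SE weights rescale whole channels, so they re-rank features without changing their spatial layout; the pooling then halves H and W, matching the stride-2 downsampling described above.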

Figure 8 presents an example of radar echo images predicted by the first-stage STPNet alone and by the whole 2S-STRef method. Figure 5b and Figure 8 show that the proposed 2S-STRef method, which integrates the second-stage RefNet, produces sharper predicted radar echo images with more details than the first-stage STPNet alone.

2.3. Loss Function

The frequencies of different rainfall intensities are highly imbalanced, so a weighted loss is used to alleviate this problem. As defined in Equation (5), we assign different weights to different radar echo reflectivities Z (corresponding to different rainfall intensities):

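The weighted loss function we designed is shown in Equation (6):

$$\mathcal{L}=\frac{1}{N}\sum_{n=1}^{N}\sum_{i}\sum_{j} w_{n,i,j}\left(x_{n,i,j}-\hat{x}_{n,i,j}\right)^{2}+\frac{1}{N}\sum_{n=1}^{N}\sum_{i}\sum_{j} w_{n,i,j}\left|x_{n,i,j}-\hat{x}_{n,i,j}\right| \tag{6}$$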

where $N$ is the number of images in the predicted sequence, $w_{n,i,j}$ is the weight of the $(i, j)$th pixel in the $n$-th frame, and $x_{n,i,j}$ and $\hat{x}_{n,i,j}$ are the values of the $(i, j)$th pixel of the $n$-th ground-truth radar echo image and the $n$-th predicted image, respectively. The weighted squared-error term is denoted B(Balanced)-MSE and the weighted absolute-error term B-MAE. Larger weights are assigned to higher radar echo reflectivities in the calculation of MSE and MAE to enhance the prediction performance for heavy precipitation; in this way, the lack of heavy-rain samples is compensated. Our main goal is to learn a network that makes the predicted radar echo image $\hat{X}$ as close as possible to the ground-truth image $X$. The SSIM (structural similarity) loss is combined with this loss function to further enhance the details of the output images [51].
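A numpy sketch of the balanced losses above follows. Equation (5)'s thresholds and weights are not reproduced in this section, so the dBZ thresholds and weights below are hypothetical placeholders. Weights are looked up from the ground-truth reflectivity, and each weighted sum is divided by the number of frames N. The SSIM term is a simplified single-window (global) SSIM rather than the usual locally windowed version, and simply summing the three terms mirrors the early-stopping criterion in Section 2.4 but is otherwise an assumption.

```python
import numpy as np

# Hypothetical dBZ thresholds and weights standing in for Equation (5).
THRESHOLDS = np.array([20.0, 30.0, 40.0, 50.0])
WEIGHTS = np.array([1.0, 2.0, 5.0, 10.0, 30.0])


def pixel_weights(truth):
    """w[n, i, j] looked up from the ground-truth reflectivity bin."""
    return WEIGHTS[np.digitize(truth, THRESHOLDS)]


def b_mse(truth, pred):
    """Balanced MSE for (N, H, W) sequences: weighted squared error / N."""
    return float((pixel_weights(truth) * (truth - pred) ** 2).sum() / truth.shape[0])


def b_mae(truth, pred):
    """Balanced MAE: weighted absolute error / N."""
    return float((pixel_weights(truth) * np.abs(truth - pred)).sum() / truth.shape[0])


def global_ssim(x, y, data_range=70.0):
    """Single-window SSIM over the whole arrays (standard SSIM averages
    this statistic over local windows); data_range is an assumed dBZ span."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    cov = ((x - mx) * (y - my)).mean()
    return float((2 * mx * my + c1) * (2 * cov + c2)
                 / ((mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2)))


rng = np.random.default_rng(0)
truth = rng.uniform(0.0, 60.0, size=(10, 16, 16))  # toy dBZ sequence, N = 10
pred = truth + rng.normal(scale=2.0, size=truth.shape)
total = b_mse(truth, pred) + b_mae(truth, pred) + (1.0 - global_ssim(truth, pred))
```

With these placeholder weights, a 1 dBZ error on a 45 dBZ pixel costs ten times as much as the same error on a 10 dBZ pixel, which is the mechanism by which the balanced losses emphasize heavy precipitation.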

2.4. Implementation

All models are optimized using the Adam optimizer with a learning rate of 10^−4 and are trained with early stopping on the sum of the SSIM, B-MSE, and B-MAE losses. For ROVER, the mean of the last two flow fields is used to initialize the motion field [9]. The training batch size of the RNN models is set to 4. For the STPNet and ConvLSTM models, a 3-layer encoding-forecasting structure is used, and the numbers of filters of the RNNs are set to 64, 192, and 192. Kernel sizes of 5 × 5, 5 × 5, and 3 × 3 are used in the ConvLSTM model. For the STPNet model, the numbers of links are set to 13, 13, and 9.

The implementation details of the proposed RefNet are shown in Table 3. A 3-layer encoding-forecasting structure is used, with 64, 128, and 256 hidden states. All convolutional layers use 3 × 3 kernels, except for the output operations, which use 1 × 1 kernels. Zero padding is applied around the boundaries of the radar echo images before convolution to keep the size of the feature maps unchanged. All training data are randomly rotated by 30° and flipped horizontally. The model is trained with the Adam optimizer [52] with β1 = 0.9 and β2 = 0.999. The minibatch size is 4. The learning rate is initialized to 1 × 10^−4 and multiplied by 0.7 every 10 epochs.
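Read as a step decay, the learning-rate schedule above is lr = 10^−4 × 0.7^⌊epoch/10⌋. A minimal sketch, assuming epochs are counted from 0 (the function name is hypothetical):

```python
def learning_rate(epoch, base_lr=1e-4, gamma=0.7, step=10):
    """Step decay: multiply the learning rate by `gamma` every `step` epochs."""
    return base_lr * gamma ** (epoch // step)


schedule = [learning_rate(e) for e in range(30)]  # epochs 0..29
```

In PyTorch, the same setup corresponds to `torch.optim.Adam(model.parameters(), lr=1e-4, betas=(0.9, 0.999))` combined with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.7)`, calling `scheduler.step()` once per epoch.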

We implemented the proposed method in the PyTorch 1.7 framework and trained it on an NVIDIA RTX 3090 GPU with CUDA 11.0. The default weight initialization of PyTorch 1.7 was used.