1. Introduction

Unlike conventional RGB images, hyperspectral images (HSIs) characterize each pixel of the observed materials with a unique spectral signature composed of dozens or even hundreds of components corresponding to different wavelengths [1]. This provides much finer knowledge of the scene, making HSIs valuable tools for computer vision tasks such as object categorization [2,3], recognition [4] and restoration [5]. However, the additional information also strains the storage capacity of HSI sensors and the available transmission bandwidth. Therefore, effective compression technology is vital for HSI processing tasks.

Ideally, compressed HSIs would preserve all information without distortion. However, given the restricted storage capacity and transmission bandwidth of practical applications, a compression technique with a high compression ratio is needed. Since current lossless HSI compression algorithms usually achieve compression ratios of only about three or four [6], lossy compression with an acceptable rate-distortion tradeoff is becoming an increasingly favorable choice.

As a classical lossy compression method, Transform Coding (TC) has been widely used for HSI compression with reasonable complexity. It first maps pixels from the high-dimensional pixel space into a compact latent space with a decorrelating transform that exploits spatial and spectral correlation, and then quantizes and codes each latent separately [7]. Depending on the decorrelating transform used, TC methods can be divided into linear and nonlinear transform algorithms.
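To make the transform, quantize and code pipeline concrete, here is a minimal NumPy sketch. It assumes an orthonormal DCT-II as the decorrelating transform, a uniform scalar quantizer with step size `step`, and the empirical entropy of each latent as a stand-in for the rate of an ideal entropy coder. The random-walk toy data and the function names (`dct_matrix`, `rate_bits_per_latent`, `transform_code`) are hypothetical illustrations, not part of any cited codec.

```python
import numpy as np

def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix; each row is one basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] /= np.sqrt(2.0)
    return c

def rate_bits_per_latent(q: np.ndarray) -> float:
    """Average ideal code length when each latent (column) is coded with its own histogram."""
    total = 0.0
    for j in range(q.shape[1]):
        _, counts = np.unique(q[:, j], return_counts=True)
        p = counts / counts.sum()
        total += float(-(p * np.log2(p)).sum())
    return total / q.shape[1]

def transform_code(x: np.ndarray, t: np.ndarray, step: float):
    """Analysis transform -> uniform quantization -> rate estimate -> synthesis transform."""
    y = x @ t.T                    # decorrelating analysis transform
    q = np.round(y / step)         # scalar quantization of each latent separately
    rate = rate_bits_per_latent(q)
    x_hat = (q * step) @ t         # dequantize and invert the orthonormal transform
    mse = float(np.mean((x - x_hat) ** 2))
    return rate, mse

rng = np.random.default_rng(0)
n = 16
# Strongly correlated toy "spectra": random walks along the band axis.
x = np.cumsum(rng.normal(size=(5000, n)), axis=1)

rate_dct, mse_dct = transform_code(x, dct_matrix(n), step=1.0)
rate_raw, mse_raw = transform_code(x, np.eye(n), step=1.0)  # no decorrelation
print(f"DCT: {rate_dct:.2f} bits/sample, MSE {mse_dct:.3f}")
print(f"raw: {rate_raw:.2f} bits/sample, MSE {mse_raw:.3f}")
```

Because both transforms are orthonormal and share the same quantizer step, the per-sample error is bounded identically in both cases, while decorrelation concentrates the energy in a few coefficients and lowers the estimated rate.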

Representative linear transform coding-based image compression techniques include the Joint Photographic Experts Group (JPEG) 2000 standard [8], which removes high-frequency components from images via the discrete wavelet transform, and set partitioning methods, which order the significant coefficients using tree or block splitting, such as set partitioning in hierarchical trees (SPIHT) [9] and embedded zero block coding (EZBC) [10]. To meet the requirements of HSI compression, two common strategies have been proposed. First, the transforms are designed directly in 3D form to match the three-dimensional structure of HSIs, as in the 3D discrete cosine transform (3D-DCT) [11] and the 3D discrete wavelet transform (3D-DWT) [12]. However, not all methods benefit from a direct 3D transform. For example, JP3D (Part 10 of JPEG 2000) [13] is designed for 3D image compression, yet it does not work well for HSIs because its 3D transform is isotropic, whereas the spectral correlation in HSIs is much stronger than the spatial correlation [14]. Second, since HSIs can be viewed as an anisotropic combination of 1D spectra and 2D space, a 1D transform in the spectral dimension (such as the Karhunen–Loève Transform (KLT), DCT or DWT) combined with a 2D spatial transform has become a popular and effective solution [15–18].
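The separable strategy (1D spectral transform plus 2D spatial transform) can be sketched as follows, assuming a spectral KLT computed from the sample covariance of the pixel spectra and an orthonormal 2D DCT applied to each resulting component image. The synthetic cube (three smooth Gaussian-shaped "endmember" spectra mixed with random abundances plus small noise) and all function names are hypothetical; real HSI codecs add quantization, rate allocation and entropy coding on top of this.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix; rows are basis vectors."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] /= np.sqrt(2.0)
    return c

def spectral_klt(cube):
    """cube: (H, W, B). Returns KLT coefficients (H, W, B), basis (B, B), mean spectrum, eigenvalues."""
    h, w, b = cube.shape
    pixels = cube.reshape(-1, b)
    mean = pixels.mean(axis=0)
    centered = pixels - mean
    cov = centered.T @ centered / centered.shape[0]
    eigvals, eigvecs = np.linalg.eigh(cov)       # ascending eigenvalues
    order = np.argsort(eigvals)[::-1]            # sort by decreasing variance
    eigvals, basis = eigvals[order], eigvecs[:, order]
    coeffs = (centered @ basis).reshape(h, w, b)
    return coeffs, basis, mean, eigvals

def spatial_dct2(coeffs):
    """Separable 2D DCT of every spectral component image: Dh @ C_k @ Dw^T."""
    h, w, _ = coeffs.shape
    dh, dw = dct_matrix(h), dct_matrix(w)
    return np.einsum("ij,jlk,ml->imk", dh, coeffs, dw)

def inverse_spatial_dct2(spatial):
    """Inverse of spatial_dct2: Dh^T @ S_k @ Dw."""
    h, w, _ = spatial.shape
    dh, dw = dct_matrix(h), dct_matrix(w)
    return np.einsum("ji,jmk,ml->ilk", dh, spatial, dw)

rng = np.random.default_rng(0)
H, W, B = 16, 16, 32
bands = np.arange(B)
endmembers = np.stack([np.exp(-0.5 * ((bands - c) / 4.0) ** 2) for c in (6, 16, 26)])
abundances = rng.uniform(size=(H * W, 3))
cube = (abundances @ endmembers + 0.01 * rng.normal(size=(H * W, B))).reshape(H, W, B)

coeffs, basis, mean, eigvals = spectral_klt(cube)
energy_top3 = eigvals[:3].sum() / eigvals.sum()   # energy compaction along the spectrum
spatial = spatial_dct2(coeffs)
print(f"variance in first 3 spectral components: {energy_top3:.4f}")
```

The spectral KLT is data-dependent (its basis must be signaled to the decoder), while the spatial DCT is fixed; this mirrors the anisotropy argument above, with the adaptive transform spent on the strongly correlated spectral axis.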

However, these linear transforms often implicitly or explicitly assume that the data source follows a joint Gaussian distribution. Although this assumption allows a simple closed-form solution, it may degrade the performance of subsequent entropy coding and rate allocation and thus lead to suboptimal compression, for two main reasons. First, researchers have shown that when real-world HSIs are represented with separable spatial-spectral bases, the marginal distributions of individual coefficients show greater kurtosis and heavier tails than a Gaussian distribution with the same variance [19]. At the same time, the joint distributions of different spatial coefficients at the same location show variance dependencies. These results indicate that the HSI source is non-Gaussian, so linear transforms are not optimal for compressing hyperspectral data. Second, under the Gaussian assumption on the data source, the latent representation produced by a linear transform is also Gaussian. This introduces errors into the entropy modeling of the latent representation and ultimately causes mismatched rate estimation. To address these problems, nonlinear transform coding combined with a non-Gaussian prior should be considered for HSI compression, because nonlinear transforms have a more powerful representation capability than traditional linear transforms and a non-Gaussian prior may enable more accurate entropy modeling.
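To illustrate what "greater kurtosis and heavier tails than a Gaussian with the same variance" means, the sketch below compares sample excess kurtosis and tail mass for a unit-variance Gaussian and a unit-variance Laplacian. The Laplacian is only an assumed stand-in for a heavy-tailed transform coefficient; it is not the HSI data analyzed in [19].

```python
import numpy as np

def excess_kurtosis(x):
    """Fourth standardized moment minus 3 (zero for a Gaussian)."""
    x = np.asarray(x, dtype=float)
    c = x - x.mean()
    m2 = np.mean(c ** 2)
    m4 = np.mean(c ** 4)
    return m4 / m2 ** 2 - 3.0

rng = np.random.default_rng(0)
n = 200_000
gaussian = rng.normal(0.0, 1.0, n)
# Laplace variance is 2 * scale**2, so this scale gives unit variance.
laplacian = rng.laplace(0.0, 1.0 / np.sqrt(2.0), n)

k_gauss = excess_kurtosis(gaussian)            # close to 0
k_lap = excess_kurtosis(laplacian)             # close to 3 in theory
tail_gauss = np.mean(np.abs(gaussian) > 3.0)   # about 0.0027 in theory
tail_lap = np.mean(np.abs(laplacian) > 3.0)    # about 0.014 in theory
print(k_gauss, k_lap, tail_gauss, tail_lap)
```

Both sources have the same variance, so a Gaussian model fitted by variance alone would underestimate how often large coefficients occur, which is exactly the kind of mismatch that inflates the rate of rare, large values.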

Fortunately, artificial neural networks (ANNs) provide a typical framework for nonlinear transforms: they implement transforms by approximating nonlinear functions and can map pixels into a more compact space than traditional linear transforms. ANN-based methods have achieved excellent results in natural image compression [20–34]. The autoencoder [35] is one of the representative ANN frameworks for implementing such nonlinear transform coding [22,36,37]. Moreover, incorporating variational Bayesian theory allows autoencoder-based compression methods to be interpreted in information-theoretic terms [38].
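For reference, the rate-distortion objective commonly used to train autoencoder-based codecs can be written as below. This is the generic formulation from the learned image compression literature, written with assumed symbols (analysis transform $g_a$, synthesis transform $g_s$, Lagrange multiplier $\lambda$, distortion measure $d$); it is not necessarily the exact loss used in this paper.

```latex
\begin{aligned}
\boldsymbol{y} &= g_a(\boldsymbol{x};\boldsymbol{\phi}), \qquad
\hat{\boldsymbol{y}} = \operatorname{round}(\boldsymbol{y}), \qquad
\hat{\boldsymbol{x}} = g_s(\hat{\boldsymbol{y}};\boldsymbol{\theta}),\\
\mathcal{L}(\boldsymbol{\phi},\boldsymbol{\theta})
&= \underbrace{\mathbb{E}_{\boldsymbol{x}\sim p_{\boldsymbol{x}}}\!\left[-\log_2 p_{\hat{\boldsymbol{y}}}(\hat{\boldsymbol{y}})\right]}_{\text{rate } R}
+ \lambda\,\underbrace{\mathbb{E}_{\boldsymbol{x}\sim p_{\boldsymbol{x}}}\!\left[d(\boldsymbol{x},\hat{\boldsymbol{x}})\right]}_{\text{distortion } D},\\
\tilde{\boldsymbol{y}} &= \boldsymbol{y} + \boldsymbol{u}, \quad
\boldsymbol{u}\sim\mathcal{U}\!\left(-\tfrac{1}{2},\tfrac{1}{2}\right)
\quad \text{(differentiable proxy for rounding during training).}
\end{aligned}
```

With $d$ chosen as squared error, this loss matches, up to constants, the negative evidence lower bound of a variational autoencoder with a Gaussian decoder likelihood, which is the information-theoretic interpretation mentioned above.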

Furthermore, since the latent representation obtained from the nonlinear transform is compressed by entropy coding under an entropy model, the capacity of the entropy model also needs to be improved. Earlier works usually used a fully factorized density [21] to estimate the probability distribution of the latents. More advanced methods have improved the accuracy of the entropy model. In [20], the entropy model is implemented as either a fixed model or a more complex context-based model. To model the relationships among latents, a conditional probability model [39] was proposed, which resembles the recurrent network idea in [40]. To reduce the time complexity, a hyperprior [30] linked to the concept of side information is employed, which improves the accuracy of the entropy model at the cost of a small number of additional bits. Although the hyperprior-based method is flexible and efficient in image compression tasks, the existing hyperprior still assumes that each latent follows a Gaussian distribution, which may not be appropriate in many real cases. This is because the latent representation exhibits non-Gaussian behavior after a nonlinear transform, regardless of whether the data source is Gaussian or non-Gaussian.
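The benefit of side information can be illustrated with a small sketch in the spirit of scale-hyperprior models. It assumes zero-mean Gaussian conditionals convolved with unit-width uniform noise, so the probability of an integer latent is the Gaussian mass on $[\hat{y}-\tfrac12, \hat{y}+\tfrac12]$. The "hyperprior" here is idealized: it hands the decoder the true local scale and the bits needed to transmit that scale are not counted. The two-region toy data and function name are hypothetical.

```python
import math
import random

def gaussian_bits(y_hat: int, sigma: float) -> float:
    """-log2 P(y_hat) for a zero-mean Gaussian integrated over [y_hat - 1/2, y_hat + 1/2]."""
    s = sigma * math.sqrt(2.0)
    a = (abs(y_hat) - 0.5) / s   # erfc form (using symmetry) avoids cancellation in the tails
    b = (abs(y_hat) + 0.5) / s
    p = 0.5 * (math.erfc(a) - math.erfc(b))
    return -math.log2(p)

rng = random.Random(0)
# Hypothetical latents whose scale varies across the image: smooth vs. textured regions.
scales = [0.3] * 2000 + [4.0] * 2000
latents = [round(rng.gauss(0.0, s)) for s in scales]

# Fully factorized model: one shared scale for every latent.
global_sigma = math.sqrt(sum(s * s for s in scales) / len(scales))
bits_factorized = sum(gaussian_bits(y, global_sigma) for y in latents) / len(latents)

# Idealized hyperprior: each latent is coded with its own (transmitted) scale.
bits_hyperprior = sum(gaussian_bits(y, s) for y, s in zip(latents, scales)) / len(latents)
print(f"factorized: {bits_factorized:.2f} bits/latent, hyperprior: {bits_hyperprior:.2f}")
```

The gap between the two numbers is the budget available for side information; in practice the scales are predicted from a compressed hyper-latent, so the real saving is smaller than in this idealized setting.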

Building on existing ANN-based image compression methods, establishing a nonlinear transform for HSIs becomes feasible. Because most ANN-based compression works focus on natural (RGB) images [41], the characteristics of HSIs must be taken into account to obtain optimal compression results. First, the network architecture should be designed to match the anisotropic structure of hyperspectral cubes. Second, proposing a rational hyperprior that learns an accurate entropy model of the compressed HSIs for entropy coding is another key factor in obtaining optimal rate-distortion performance.
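The SS-Net itself is not specified in this section, so the sketch below is only a hypothetical illustration of one way an analysis layer can respect the anisotropy of HSI cubes: a band-mixing 1×1 operation along the spectral axis, followed by a small depthwise 2D kernel over the spatial axes with 2× downsampling, and then generalized divisive normalization (GDN), a nonlinearity widely used in learned image compression. The weights are random rather than trained, and every function name is an assumption.

```python
import numpy as np

def spectral_mix(x, w):
    """Hypothetical spectral step: 1x1 convolution mixing bands at each pixel.
    x: (H, W, C_in), w: (C_in, C_out)."""
    return x @ w

def spatial_conv3x3(x, k, stride=1):
    """Hypothetical spatial step: depthwise 3x3 cross-correlation with zero padding.
    x: (H, W, C), k: (3, 3, C)."""
    h, w, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros(x.shape, dtype=float)
    for di in range(3):
        for dj in range(3):
            out += xp[di:di + h, dj:dj + w, :] * k[di, dj, :]
    return out[::stride, ::stride, :]

def gdn(x, beta, gamma):
    """GDN across channels: x_i / sqrt(beta_i + sum_j gamma_ij * x_j**2)."""
    return x / np.sqrt(beta + (x ** 2) @ gamma.T)

def ss_block(x, w, k, beta, gamma):
    """Spectral mixing, then spatial filtering with 2x downsampling, then GDN."""
    return gdn(spatial_conv3x3(spectral_mix(x, w), k, stride=2), beta, gamma)

rng = np.random.default_rng(0)
H, W, B, C = 32, 32, 64, 16
x = rng.normal(size=(H, W, B))
w = rng.normal(scale=1 / np.sqrt(B), size=(B, C))
k = rng.normal(scale=1 / 3, size=(3, 3, C))
beta = np.ones(C)
gamma = np.full((C, C), 0.1)
y = ss_block(x, w, k, beta, gamma)
print(y.shape)  # (16, 16, 16)
```

Separating the spectral and spatial operations lets each axis get its own receptive field and parameter budget, instead of the isotropic 3D kernel that the JP3D example above shows can be a poor fit.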

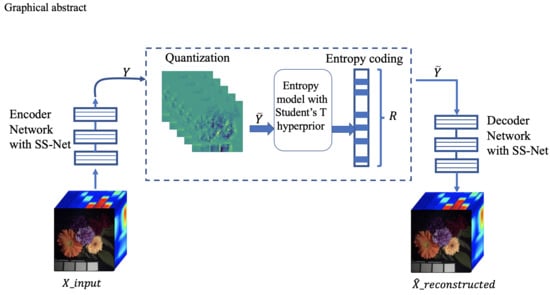

Based on the above analysis, this paper explores a specific end-to-end learning-based framework for the HSI compression task. The contributions of this research are as follows.

(1) A spatial and spectral network (SS-Net) is developed and embedded into a comprehensive ANN-based compression architecture, both to realize the nonlinear transform and to account for the anisotropic characteristic of HSIs. The proposed architecture applies cascades of convolutional neural networks to the anisotropic HSI cubes and can thus offer a more powerful representation capability than traditional linear transform codecs.

(2) A Student’s T hyperprior that merges the statistics of the latents and the side information concept into a unified neural network is proposed to learn an accurate entropy model for entropy coding; it not only increases the flexibility of the entropy model but also greatly improves the efficiency of entropy coding.

(3) The experimental results show that the proposed compression framework can outperform the commonly used linear transform coding methods for HSI compression in terms of rate-distortion performance. To the best of our knowledge, the present method is the first ANN-based method with joint rate-distortion optimization developed for the HSI compression task.
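Why a heavy-tailed prior can lower the rate is easiest to see by comparing the code length of a single quantized latent under a Gaussian and under a Student's t distribution. The sketch assumes zero-mean, unit-scale densities integrated over the quantization bin $[\hat{y}-\tfrac12, \hat{y}+\tfrac12]$; the paper's exact parameterization of its Student’s T hyperprior (how location, scale and degrees of freedom are predicted) is not given here. SciPy's `scipy.stats.t.cdf` would normally be used for the bin mass; composite Simpson integration is used instead only to keep the example dependency-free.

```python
import math

def student_t_pdf(x, nu, scale):
    """Density of a zero-mean Student's t with nu degrees of freedom and given scale."""
    log_norm = (math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)
                - 0.5 * math.log(nu * math.pi) - math.log(scale))
    z = x / scale
    return math.exp(log_norm - (nu + 1) / 2 * math.log1p(z * z / nu))

def interval_mass(pdf, lo, hi, n=64):
    """Composite Simpson's rule for the integral of pdf over [lo, hi]; n must be even."""
    h = (hi - lo) / n
    total = pdf(lo) + pdf(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * pdf(lo + i * h)
    return total * h / 3

def bits_student_t(y_hat, nu, scale):
    p = interval_mass(lambda v: student_t_pdf(v, nu, scale), y_hat - 0.5, y_hat + 0.5)
    return -math.log2(p)

def bits_gaussian(y_hat, sigma):
    s = sigma * math.sqrt(2.0)
    p = 0.5 * (math.erfc((abs(y_hat) - 0.5) / s) - math.erfc((abs(y_hat) + 0.5) / s))
    return -math.log2(p)

# A typical (zero) latent and an outlier, both with unit scale; nu = 3 is an arbitrary choice.
bits_gauss_zero, bits_t_zero = bits_gaussian(0, 1.0), bits_student_t(0, 3, 1.0)
bits_gauss_outlier, bits_t_outlier = bits_gaussian(8, 1.0), bits_student_t(8, 3, 1.0)
print(f"y=0: Gaussian {bits_gauss_zero:.2f} bits, t {bits_t_zero:.2f} bits")
print(f"y=8: Gaussian {bits_gauss_outlier:.2f} bits, t {bits_t_outlier:.2f} bits")
```

The Gaussian is slightly cheaper for the most common value, but it charges tens of bits for a large latent that the Student's t codes cheaply, so heavy-tailed latents favor the t prior. As the degree of freedom grows, the Student's t approaches the Gaussian, which is why that parameter controls the flexibility of the entropy model.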

The remainder of the paper is organized as follows. Section 2 provides a comprehensive review of the related works. Section 3 presents the proposed HSI compression model and network architecture. Section 4 specifies the experimental setup, presents the results of the proposed method visually and quantitatively, and compares them with those of widely used HSI codecs; the strengths and weaknesses of the proposed method are then assessed on two natural HSI datasets and one remote sensing HSI dataset using three distortion metrics. In Section 5, the conclusions of this paper and future work are discussed.

5. Conclusions

We propose an end-to-end network architecture for the hyperspectral compression task that not only accounts for the particular characteristics of HSIs but also embeds an accurate entropy model. First, an SS-Net is designed to match the anisotropic characteristic of HSIs and capture a more powerful latent representation. Then, a Student’s T hyperprior is proposed to reduce the mismatch between the entropy model and the latent representation, since the latents exhibit striking non-Gaussian characteristics and an inaccurate or mismatched prior on the latent representation can lead to inexact rate estimation. The results show that our method achieves better rate-distortion performance than state-of-the-art linear transform coding methods, which suffer from visual artifacts at low bit rates.

Our study verifies the potential of ANNs for the HSI compression task. The excellent rate-distortion performance on the low-resolution remote sensing HSI dataset can facilitate storage and transmission for low-resolution HSI tasks (e.g., HSI fusion). To some extent, our HSI compression method may reduce the impact of compression on the accuracy of other HSI tasks, as our Student’s T prior-based models perform favorably compared with other compression methods. Although the choice of the degree of freedom increases the flexibility of the entropy model, it also makes selecting the best value difficult; in our experiments, we fix it. In addition, our model may not match the performance of carefully optimized traditional compression methods due to domain shift between the training set and the testing set. Therefore, constructing a model that can quickly adapt to different HSI compression tasks and achieve optimal rate-distortion performance is left for future work.