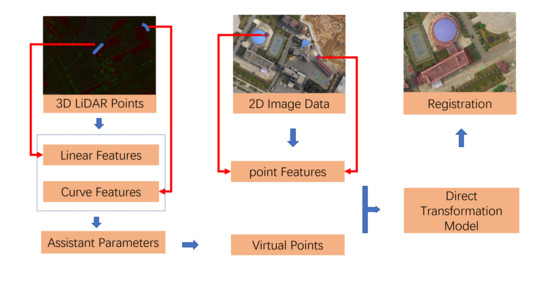

As mentioned in the introduction, primitive-based registration starts from the extraction of salient features, which include point, linear, and planar features, as Figure 2 shows. A LiDAR point cloud has semi-discrete random characteristics, so each of the three types of registration primitives exhibits specific properties on LiDAR point clouds, as shown in Table 2. Point registration primitives are affected by the random characteristics of the LiDAR point cloud, with error offsets along all three coordinate axes. Line and patch features are usually generated or fitted from multiple points, so the influence of the discrete error can be greatly reduced [49]. However, the three types of registration primitives have different mathematical expressions, and the expression complexity listed in Table 2 indicates how difficult each feature is to express mathematically. The simpler the expression of the registration primitives, the simpler the registration model and the smaller the error of the registration process.

2.1. Building Edges Extraction and Feature Selection

Building outlines have distinguishable features, including linear features and curve features, and many methods can be used to extract building outlines from LiDAR data. To eliminate the semi-random discrete characteristics of point cloud data and obtain high-precision linear registration primitives, patch intersection can be regarded as the most reliable approach [50]. In other words, linear features supported by wall points should be the first choice for the registration primitives on the L side, as Figure 3 shows. However, the general method of tracking the plane profile of buildings from airborne LiDAR data is to convert the point cloud into a depth image, segment the depth image with an image segmentation algorithm, and finally track the building boundary with the scanning line method and the neighborhood searching method [51]. The problem with this process is that the tracked edge is only the rough boundary of the discrete point set, so its accuracy is low. Some researchers have also studied methods for extracting the contour directly from the discrete point set. For example, Sampath et al. [52] proposed an edge tracking algorithm based on planar discrete points. The algorithm takes the edge length ratio as the constraint condition, which reduces the influence of point density and improves adaptability to slender features and uneven point distributions, but an unsuitable threshold can cause the edge to contract excessively. The alpha shapes algorithm was first proposed by Edelsbrunner et al. [53]. Later, many researchers improved it and applied it to airborne LiDAR data processing, because the algorithm is theoretically well founded, efficient, and able to handle complex building contours (e.g., curved building outlines). However, the alpha shapes algorithm is not suitable for unevenly distributed data, and selecting its parameters is difficult. To deal with these problems, this paper proposes a double threshold alpha shapes algorithm to extract the contour of the point set. The initial contour is then simplified with a least squares simplification algorithm to generate candidate linear registration primitives that satisfy the requirements. Finally, the linear features with wall points, including straight line features and curve features, are selected as the registration primitives. Figure 4 illustrates the framework of the proposed linear extraction method.

2.2. Contour Extraction Based on Double Threshold Alpha Shapes Algorithm

Restoring the original shape of a discrete (2D or 3D) point set is a fundamental and difficult problem. A series of algorithms have been proposed to solve it, and the alpha shapes algorithm [54] is one of the best. The alpha shapes algorithm is a deterministic algorithm with a strict mathematical definition. For any finite point set S, the shape δS obtained by the alpha shapes algorithm is definite. In addition, the user can control the shape δS of set S by adjusting the single parameter α of the algorithm. Besides its formal definition, the alpha shapes algorithm can also be described intuitively with geometric figures. As shown in Figure 5a, the yellow points constitute a point set S, and a circle C with a radius of α rolls around the point set S as closely as possible. During the rolling process, the circle C cannot completely contain any point in the point set. Finally, the points that the circle C touches are connected in order to obtain the shape, which is called the α-shape of the point set S. For any point set S, there are two special α-shapes: (1) When α approaches infinity, the circle C degenerates into a straight line on the plane, and the point set S always lies on the same side of it. Therefore, the α-shape is equivalent to the convex hull of the point set S (as shown in Figure 5b); (2) When α approaches 0, the circle C can roll into the point set, so each point in set S is an independent individual and the shape of the point set is the points themselves (as shown in Figure 5c).

Considering the excellent performance of the alpha shapes algorithm, many researchers have proposed using it to extract building contours directly from airborne LiDAR data [54]. However, in practical applications, some problems still need to be solved: (1) The alpha shapes algorithm is mainly suitable for point sets with relatively uniform density. This follows from the nature of the algorithm, because the fineness of the point set shape is completely determined by the parameter α. In Figure 6a,b, the left half of the point set is denser, while the right half is relatively sparse. Figure 6a shows the result of the alpha shapes algorithm with a larger α value. The shape shown in Figure 6b is manually vectorized according to the distribution of the point set, and it is quite different from the shape automatically extracted by the alpha shapes algorithm. A single α value therefore cannot adapt to point sets with varying point densities, which is exactly the characteristic of LiDAR point clouds. (2) The alpha shapes algorithm is not very effective when processing concave point sets. If the value of α is large, the concave corners are easily dulled (Figure 6c); if the value of α is small, it is easy to obtain a broken point set shape (Figure 6d). Lach S. R. et al. [55] pointed out that α should be set to one to two times the average point spacing, so that the resulting shape is relatively complete and not too broken.
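The rule of thumb above can be illustrated with a minimal sketch. It assumes that the "average point spacing" is estimated as the mean nearest-neighbour distance, which is only one possible estimator; the function names and the default factor of 1.5 are hypothetical and not taken from the paper.

```python
import math

def mean_nearest_neighbor_spacing(points):
    """Average distance from each point to its nearest neighbour (brute force, O(n^2))."""
    total = 0.0
    for i, p in enumerate(points):
        total += min(math.dist(p, q) for j, q in enumerate(points) if j != i)
    return total / len(points)

def alpha_from_spacing(points, factor=1.5):
    """Choose alpha as `factor` times the average spacing (the text suggests 1 to 2)."""
    if not 1.0 <= factor <= 2.0:
        raise ValueError("factor is expected to lie in [1, 2]")
    return factor * mean_nearest_neighbor_spacing(points)

# Example: a regular 3 x 3 grid with unit spacing.
grid = [(x, y) for x in range(3) for y in range(3)]
alpha = alpha_from_spacing(grid, factor=1.5)
```

For point clouds whose density varies strongly, a single global estimate of this kind is exactly what the text identifies as insufficient, which motivates the two thresholds introduced next.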

In response to the above problems, this paper proposes a dual threshold alpha shapes algorithm. The main idea is as follows. (1) According to the judgment criterion of alpha shapes, set two thresholds α1 and α2 (α1 = 2.5α2), and obtain the two qualified line segment (LS) sets of the point set S, one for each threshold. Select a line segment pq from the edge set obtained with α1, where points p and q are its two endpoints. In the undirected graph formed by the point set S and the edge set obtained with α2, the points p and q are not always adjacent, unlike in the edge set obtained with α1. However, starting from point p and passing through several nodes, it is always possible to reach point q and generate a path; the path with the smallest length is recorded. (2) Select the final path between p and q from the line segment pq and the recorded shortest path according to a path selection mechanism. The same operation is performed on the next edge, and all edges are processed iteratively to obtain a set of paths. Finally, all the paths are connected in turn to obtain the high-precision point set shape. The dual threshold alpha shapes algorithm consists of the following two steps, which are described in detail below.

- (1) Obtaining dual threshold α-shape

Let DT(S) be the Delaunay triangulation of the point set S, and consider the two α-shapes obtained by the alpha shapes algorithm when the parameter is set to α1 and α2, respectively. It has been proved in the literature that the α-shape under any threshold is a sub-shape of DT(S), that is, every edge of an α-shape is also an edge of DT(S). Therefore, the process of obtaining an α-shape is as follows: first, use the point-by-point insertion algorithm to construct the Delaunay triangulation DT(S) of the point set S (see [52] for the detailed steps of the algorithm), and then apply the alpha shapes criterion to each edge in DT(S) in turn, as shown in Figure 7. Let pq be an edge in DT(S) (points p and q are adjacent boundary points), and let C be a circle that passes through p and q and has a radius of α (the coordinates of the circle center are given by (1) and (2)). If there are no other vertices in the circle C, then the edge pq belongs to the α-shape.

where:

| p: | Coordinates of point p; |

| q: | Coordinates of point q; |

| c: | Coordinates of point c, the center of circle C; |

| α: | Radius of circle C. |
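The empty-circle test of step (1) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The circle centers are computed from elementary chord geometry (each center lies on the perpendicular bisector of pq, at distance sqrt(α² − |pq|²/4) from its midpoint); whether this matches the exact form of (1) and (2) is an assumption. To stay dependency-free, the sketch tests every pair of points instead of only the Delaunay edges; for points in general position this accepts the same edges, because any pair that admits an empty circle is a Delaunay edge, but it is much slower. The function names and the tolerance `eps` are hypothetical.

```python
import math
from itertools import combinations

def circle_centers(p, q, alpha):
    """Centers of the two radius-alpha circles through p and q ([] if none exist)."""
    (x1, y1), (x2, y2) = p, q
    dx, dy = x2 - x1, y2 - y1
    d = math.hypot(dx, dy)
    if d == 0 or d > 2 * alpha:
        return []                        # the chord is longer than the diameter
    mx, my = (x1 + x2) / 2, (y1 + y2) / 2
    h = math.sqrt(alpha * alpha - (d / 2) ** 2)
    ux, uy = -dy / d, dx / d             # unit normal to pq
    return [(mx + h * ux, my + h * uy), (mx - h * ux, my - h * uy)]

def alpha_shape_edges(points, alpha, eps=1e-9):
    """Index pairs (i, j) whose edge passes the empty-circle test for this alpha."""
    edges = []
    for i, j in combinations(range(len(points)), 2):
        for cx, cy in circle_centers(points[i], points[j], alpha):
            empty = all(
                math.hypot(px - cx, py - cy) >= alpha - eps
                for k, (px, py) in enumerate(points)
                if k not in (i, j)
            )
            if empty:                    # one empty circle is enough
                edges.append((i, j))
                break
    return edges

# Example: four corners of a unit square plus its center point.
pts = [(0, 0), (1, 0), (1, 1), (0, 1), (0.5, 0.5)]
coarse = alpha_shape_edges(pts, alpha=2.0)   # the four sides of the square
fine = alpha_shape_edges(pts, alpha=0.3)     # alpha too small: no edges at all
```

Running the function twice, once with α1 and once with α2, yields the two edge sets used by the dual threshold scheme.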

- (2) Optimization of boundary path

Consider the undirected graph formed by the point set S and the edges of the α-shape obtained with the threshold α2. Select any line segment of the α-shape obtained with α1, with the two endpoints p and q. Search all paths in the undirected graph with p as the starting point and q as the end point, calculate the length of each path, and record the path with the smallest length as the boundary path of point p and point q.

To ensure that the boundary path from point p to point q is the real boundary of the building, three criteria are set in this paper to determine the final path L from the candidate paths. The judgment criteria are as follows:

- ✧

As

Figure 8a shows, if the length of

is more than 5 times that of

, then discard

and keep

;

- ✧

As

Figure 8b shows, if the two adjacent edges of

are close to perpendicular (more than 60 degrees), and all the distances from the endpoints of

to any adjacent edge of

are small, discard

and keep

;

- ✧

As

Figure 8c shows, if the two adjacent sides of

and

are close to parallel, and the distance from the end point on

to

is less than a certain threshold (such as half the average point spacing), then

is discarded and

is retained.

Repeat the above procedure until all the edges of the α-shape obtained with α1 have been processed; the paths obtained in step (2) are then connected in turn to obtain the shape of the point set S.
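The shortest-path search used in step (2) can be sketched with Dijkstra's algorithm. This is a minimal illustration, assuming the graph is given as a list of index pairs into the point list and that edge weights are Euclidean lengths; the function name and the return convention are hypothetical, and the three selection criteria are not implemented here.

```python
import heapq
import math

def shortest_path(points, edges, start, goal):
    """Return (length, [vertex indices]) of the shortest path, or (inf, []) if none."""
    adj = {}
    for i, j in edges:                          # build an undirected adjacency list
        w = math.dist(points[i], points[j])
        adj.setdefault(i, []).append((j, w))
        adj.setdefault(j, []).append((i, w))
    best = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == goal:                           # walk back through the predecessors
            path = [u]
            while path[-1] != start:
                path.append(prev[path[-1]])
            return d, path[::-1]
        if d > best.get(u, math.inf):
            continue                            # stale queue entry
        for v, w in adj.get(u, []):
            nd = d + w
            if nd < best.get(v, math.inf):
                best[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    return math.inf, []

# Example: two routes from vertex 0 to vertex 4; the direct one is shorter.
pts = [(0, 0), (1, 0), (1, 1), (2, 1), (2, 0)]
graph = [(0, 1), (1, 2), (2, 3), (3, 4), (1, 4)]
length, path = shortest_path(pts, graph, 0, 4)
```

The returned length can be compared directly with the length of the straight segment pq, which is the quantity the first criterion relies on.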

2.3. Straight Linear Feature Simplification Based on Least Square Algorithm

The initial boundary edges of the building obtained by the dual threshold alpha shapes algorithm are very rough and generally need to be simplified first. The Douglas-Peucker algorithm [56] is a classic vector compression algorithm that is used by many geographic information systems (GISs). The algorithm uses the perpendicular distance from each vertex to the line joining the two ends of the element as the simplification criterion. If the maximum distance is less than the threshold, the two ends of the line directly replace the element being simplified. Otherwise, the point with the maximum offset divides the element into two new elements, and the operation is repeated recursively on each of them, as Figure 9a shows.
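For reference, the recursive procedure just described can be written as a short sketch. The function names and the tolerance parameter `tol` are hypothetical; the polyline is assumed to be an open list of 2D points.

```python
import math

def perpendicular_distance(pt, a, b):
    """Distance from pt to the line through a and b (to a itself if a == b)."""
    (x, y), (x1, y1), (x2, y2) = pt, a, b
    dx, dy = x2 - x1, y2 - y1
    length = math.hypot(dx, dy)
    if length == 0:
        return math.hypot(x - x1, y - y1)
    return abs(dy * (x - x1) - dx * (y - y1)) / length

def douglas_peucker(polyline, tol):
    """Simplify an open polyline; vertices closer than tol to the chord are dropped."""
    if len(polyline) < 3:
        return list(polyline)
    a, b = polyline[0], polyline[-1]
    idx, dmax = max(
        ((i, perpendicular_distance(polyline[i], a, b))
         for i in range(1, len(polyline) - 1)),
        key=lambda item: item[1],
    )
    if dmax < tol:
        return [a, b]                        # the chord replaces the whole element
    left = douglas_peucker(polyline[: idx + 1], tol)
    right = douglas_peucker(polyline[idx:], tol)
    return left[:-1] + right                 # the split vertex appears only once

# Example: a noisy horizontal edge followed by a vertical edge.
outline = [(0, 0), (1, 0.05), (2, -0.05), (3, 0), (3, 1), (3, 2)]
simplified = douglas_peucker(outline, tol=0.1)
```

Note that the retained vertices are always original vertices of the contour, so noise in the end points is carried into the result.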

This paper proposes a simplification algorithm for linear features based on the least squares method, as Figure 9b shows. The algorithm requires two parameters, namely a distance threshold and a length threshold Len. The detailed steps of the algorithm are as follows:

- (1) Select three consecutive vertices A, B, and C of the polygon in order, fit a straight line L to them with the least squares method, and calculate the distances from A, B, and C to L. If any of the distances is greater than the distance threshold, go to step (4); otherwise, let U = {A, B, C} and go to step (2);

- (2) Let p and q be the two ends of set U, and grow U outward from p and q in both directions. Each new vertex encountered during the growth is tested: if its distance to the line L is less than the distance threshold, add it to the set U and use it as the new starting point to continue the growth; otherwise, stop the growth in that direction. Continue until the growth has stopped in both directions;

- (3) Determine the length of the set U. If the length of U is greater than the threshold Len, keep the two ends of the set and discard the middle vertices;

- (4) If there are three consecutive vertices remaining to be judged, go to step (1); otherwise, check the length threshold Len. If Len is greater than 2~3 times the average point spacing, reduce the length threshold to Len = 0.8 Len and go to step (1).
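The seed-and-grow idea of steps (1) to (3) can be sketched as below. This is a simplified illustration under several assumptions that are not from the paper: the contour is treated as an open polyline processed from its first vertex, so growth is only forward; the line is fitted by orthogonal least squares and is not refitted during growth; the "length of U" is taken as the distance between its two ends; and the threshold-reduction loop of step (4) is omitted. The names `dist_tol` and `min_len` stand in for the distance threshold and Len.

```python
import math

def fit_line(pts):
    """Orthogonal least-squares line: returns (centroid, unit direction vector)."""
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    sxx = sum((x - cx) ** 2 for x, _ in pts)
    syy = sum((y - cy) ** 2 for _, y in pts)
    sxy = sum((x - cx) * (y - cy) for x, y in pts)
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)   # angle of the principal axis
    return (cx, cy), (math.cos(theta), math.sin(theta))

def distance_to_line(pt, line):
    (cx, cy), (ux, uy) = line
    return abs((pt[0] - cx) * uy - (pt[1] - cy) * ux)

def simplify_polyline(vertices, dist_tol, min_len):
    """One forward pass of seed-and-grow simplification over an open polyline."""
    n = len(vertices)
    if n < 3:
        return list(vertices)
    out = [vertices[0]]
    i = 0
    while i < n - 1:
        j = i + 2                                    # seed: vertices i, i+1, i+2
        if j < n:
            line = fit_line(vertices[i:j + 1])
            if all(distance_to_line(v, line) <= dist_tol for v in vertices[i:j + 1]):
                # Grow while the next vertex stays close to the fitted line.
                while j + 1 < n and distance_to_line(vertices[j + 1], line) <= dist_tol:
                    j += 1
                if math.dist(vertices[i], vertices[j]) > min_len:
                    out.append(vertices[j])          # keep both ends, drop the middle
                    i = j
                    continue
        out.append(vertices[i + 1])                  # no valid run: keep the vertex
        i += 1
    return out

# Example: a noisy horizontal edge followed by a vertical edge.
contour = [(0, 0), (1, 0.02), (2, -0.02), (3, 0.01), (4, 0), (4, 1), (4, 2), (4, 3)]
result = simplify_polyline(contour, dist_tol=0.1, min_len=1.5)
```

Unlike Douglas-Peucker, the decision to merge vertices here depends on a line fitted to several points, which is what makes the approach less sensitive to the noise of individual boundary points.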

In Figure 9, the black lines are the building contours extracted by the double threshold alpha shapes algorithm. Nodes A, B, C, …, I are the boundary key points of the building, and the blue line is the intermediate result of the optimization process. In Figure 9a, the blue line is the final result after optimization. In Figure 9b, the red line is the line fitted by the least squares method.

2.4. Curve Feature Simplification Based on Least Square Algorithm

Most non-rectangular building boundaries are circular or arc-shaped, and even an elliptical arc can be regarded as a piecewise combination of circular arcs with different radii. Therefore, any building boundary curve segment can be regarded as a circular arc segment, defined by sweeping a radius R through an angle θ, as shown in Figure 10. In addition, for any arc segment, as long as the number of boundary points on the arc segment satisfies N0 > 3, the arc segment C can be fitted by the least squares method to obtain the center o, the radius R, and the arc segment angle θ (Figure 10). For any arc, the height along the roof boundary is constant, and it can be obtained directly during feature extraction and recorded as Z0. Any arc can then be expressed as in (3):

Though most commercial and residential buildings have rectangular outlines, arc-shaped outlines are not uncommon. In this paper, arc-shaped outlines are treated as arcs of circles. For a given arc, the associated radius and central angle are denoted by R and θ, respectively, as shown in Figure 10. Denote the coordinates of the circle center by (X0, Y0); then any arc can be expressed as follows:

where:

X1, Y1 and X2, Y2: The coordinates of the two distinct end points of the arc;

Z0: The constant height value of the arc, which can usually be obtained directly from the building edge points.

The three parameters X0, Y0, and R can be calculated using the least squares method. Any point on the arc segment can then be expressed via (4):

where:

| (X0, Y0, Z0): | The center coordinate of the space circle where the arc is located; |

| R: | The radius of the circle where the arc is located; |

| θ: | The polar coordinate angle of the current point in the transformed coordinate system. |
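The least squares fit of the circle parameters can be sketched as follows. The paper does not state which least squares formulation is used; this sketch swaps in the algebraic (Kåsa) fit, which reduces to a small linear system, and should be read as one possible choice. The parametric form used in `arc_point`, namely (X0 + R cos θ, Y0 + R sin θ, Z0), is inferred from the symbol definitions above and is an assumption about (4). Function names are hypothetical.

```python
import math

def fit_circle(points):
    """Algebraic least-squares circle fit; returns ((x0, y0), r)."""
    n = len(points)
    if n < 3:
        raise ValueError("need at least three points")
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    suu = svv = suv = suz = svz = szz = 0.0
    for x, y in points:
        u, v = x - mx, y - my            # centering improves conditioning
        z = u * u + v * v
        suu += u * u
        svv += v * v
        suv += u * v
        suz += u * z
        svz += v * z
        szz += z
    det = suu * svv - suv * suv
    if abs(det) < 1e-12:
        raise ValueError("points are (nearly) collinear")
    uc = (suz * svv - svz * suv) / (2.0 * det)
    vc = (svz * suu - suz * suv) / (2.0 * det)
    r = math.sqrt(uc * uc + vc * vc + szz / n)
    return (mx + uc, my + vc), r

def arc_point(center, r, theta, z0):
    """Point on the arc at polar angle theta, at the constant roof height z0."""
    return (center[0] + r * math.cos(theta), center[1] + r * math.sin(theta), z0)

# Example: boundary points sampled from a circle centered at (2, -1) with radius 3.
samples = [(2 + 3 * math.cos(t), -1 + 3 * math.sin(t)) for t in (0.0, 0.4, 0.8, 1.2, 1.6)]
center, radius = fit_circle(samples)
p = arc_point(center, radius, 0.0, z0=10.0)
```

The algebraic fit is known to be biased toward smaller radii when the arc is short and noisy, so a geometric (iterative) refinement may be preferable for short roof arcs.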

2.5. The Selection of the Linear Registration Primitives

In most cases, a laser beam hits a building at a scanning angle, except when the building is at the nadir of the scanner. This means that some wall facets can be reached by the laser beam while others cannot, and there is usually a ditch between the wall and the roof edge, as shown in Figure 11. We term wall facets exposed to the laser beam positives and the others negatives. Consequently, not all building outlines are accurate enough to meet the registration requirement. To solve this problem, only the linear features formed by positive facets are selected as candidate registration primitives. To determine whether a facet is positive or negative, the following strategy is employed: a plane equation is fitted to the given facet so that the normal vector of the plane is obtained, and the fitted plane is then moved outward along the normal vector by a small distance ds, which is determined according to the density of the point cloud. A small cuboid is thus formed (Figure 11). If the total number of points in the cuboid is larger than a given threshold N, then the line segment formed by projecting the facet onto the ground (xy-plane) is selected as one of the registration primitives.

where:

| The volume of ith cuboid; |

| : | The ith linear feature segment associated with the ith cuboid; |

| The threshold of the total number of points per unit area; |

| : | The average height of the linear feature; |

| ds: | The distance that the facet moves outwardly along the facet normal vector. |
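The positive/negative test can be illustrated with a minimal sketch. It assumes that the wall plane is vertical and has already been fitted, so that it is described by the two end points of the outline segment in the xy-plane and a unit outward normal; the cuboid is taken as the slab between the plane and its copy shifted outward by ds, bounded by the segment length and a height range. These simplifications, the function names, and the way the threshold N is passed in are assumptions, not the paper's formulation.

```python
import math

def count_points_in_cuboid(points, a, b, outward_normal, ds, z_min, z_max):
    """Count 3D points inside the slab of thickness ds in front of segment a-b."""
    ax, ay = a
    bx, by = b
    length = math.hypot(bx - ax, by - ay)
    tx, ty = (bx - ax) / length, (by - ay) / length   # unit vector along the edge
    nx, ny = outward_normal                           # unit normal of the wall plane
    count = 0
    for x, y, z in points:
        s = (x - ax) * tx + (y - ay) * ty             # position along the edge
        d = (x - ax) * nx + (y - ay) * ny             # offset from the wall plane
        if 0.0 <= s <= length and 0.0 <= d <= ds and z_min <= z <= z_max:
            count += 1
    return count

def is_positive_facet(points, a, b, outward_normal, ds, z_min, z_max, n_threshold):
    """A facet is treated as positive if the cuboid holds more than n_threshold points."""
    inside = count_points_in_cuboid(points, a, b, outward_normal, ds, z_min, z_max)
    return inside > n_threshold

# Example: wall returns just outside the south edge of a roof, none on the north side.
wall = [(x, -0.1, z) for x in range(1, 10) for z in range(1, 6)]
roof = [(x, 2.0, 6.0) for x in range(0, 11)]
cloud = wall + roof
south = is_positive_facet(cloud, (0, 0), (10, 0), (0, -1), 0.5, 0.0, 6.0, 20)
north = is_positive_facet(cloud, (0, 0), (10, 0), (0, 1), 0.5, 0.0, 6.0, 20)
```

In practice ds and the point-count threshold would both be tied to the local point density, as the text indicates.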

The abovementioned idea can be shown more intuitively by Figure 11, which gives an outline segment of a building: the vertical view of the building, where VL and VR are the two outline segments formed by projecting the building onto the xy-plane, and its horizontal view from a specific angle. VL is selected as a candidate registration primitive in this paper.

The linear registration primitives extracted by the above method are equivalent to the intersection of the roof surface and the wall surface, so the extraction accuracy is high and the influence of the semi-random nature of the point cloud is largely avoided.