Forest Road Detection Using LiDAR Data and Hybrid Classification

Abstract

1. Introduction

1.1. Background

1.2. Brief Review of the State-of-Art

- (i) To explore the potential of LiDAR data as the only source of information for identifying and outlining paved and unpaved forest roads in a rural landscape.

- (ii) To demonstrate whether integrating several classification approaches (object-based and pixel-based) in a single decision tree, created using the available information, improves the accuracy of an automatic pixel-based classification using Random Forest (RF).

- (iii) To assess the extent to which the accuracy of detected forest roads is affected by several factors, such as point density, slope, penetrability and road surface.

2. Study Area and Data

3. Methodology

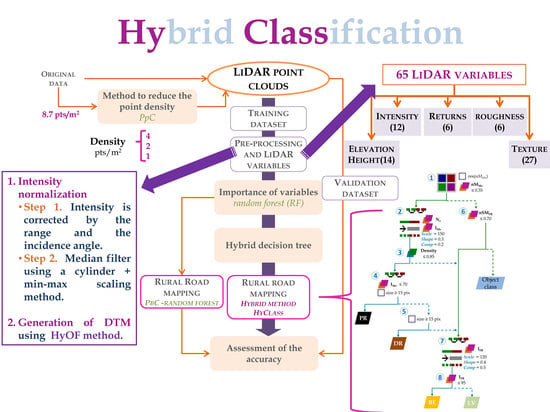

3.1. LiDAR Data Processing

3.1.1. Reduction of Point-Cloud Density

3.1.2. Intensity Normalization

3.1.3. LiDAR Variables

3.2. Road Detection

3.2.1. Importance of Variables

3.2.2. Design of Hybrid Classification

3.3. Strategies for Assessing and Analyzing the Results

3.3.1. Quality Measures and Positional Accuracy

3.3.2. Sensitivity Analysis

4. Results and Discussion

4.1. Analysis of Importance of Variables

4.2. Overall Assessment of Road Extraction

4.3. Effect of Factors on the Quality Measures

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| CCF | Canopy Cover Fraction |

| DR | Dirt road |

| FN | False negative |

| FP | False positive |

| TN | True negative |

| TP | True positive |

| OA | Overall accuracy |

| OPA | Overall positional accuracy |

| PR | Paved road |

| PS | Point spacing |

| RF | Random Forest |

Appendix A. R Code for Calculating the Road Centerlines

- # 1. Copyright statement comment ---------------------------------------

- # Copyright 2020

- # 2. Author comment ----------------------------------------------------

- # Sandra Bujan

- # 3. File description comment ------------------------------------------

- # Code to calculate the road centerlines

- # 4. source() and library() statements ---------------------------------

- library (rgdal)

- library (sp)

- library (raster)

- library (geosphere)

- # 5. function definitions ----------------------------------------------

- # Code obtained from

- CreateSegment <- function (coords, from, to) {

- distance <- 0

- coordsOut <- c()

- biggerThanFrom <- FALSE

- for (i in 1:(nrow (coords) - 1)) {

- d <- sqrt ((coords[i, 1] - coords[i + 1, 1])^2 + (coords[i, 2] - coords[i + 1, 2])^2)

- distance <- distance + d

- if (!biggerThanFrom && (distance > from)) {

- w <- 1 - (distance - from)/d

- x <- coords[i, 1] + w * (coords[i + 1, 1] - coords[i, 1])

- y <- coords[i, 2] + w * (coords[i + 1, 2] - coords[i, 2])

- coordsOut <- rbind (coordsOut, c(x, y))

- biggerThanFrom <- TRUE

- }

- if (biggerThanFrom) {

- if (distance > to) {

- w <- 1 - (distance - to)/d

- x <- coords[i, 1] + w * (coords[i + 1, 1] - coords[i, 1])

- y <- coords[i, 2] + w * (coords[i + 1, 2] - coords[i, 2])

- coordsOut <- rbind (coordsOut, c(x, y))

- break

- }

- coordsOut <- rbind (coordsOut,

- c(coords[i + 1, 1],

- coords[i + 1, 2]))

- }

- }

- return (coordsOut)

- }

- CreateSegments <- function (coords, length = 0, n.parts = 0) {

- stopifnot ((length > 0 || n.parts > 0))

- # calculate total length line

- total_length <- 0

- for (i in 1:(nrow (coords) - 1)) {

- d <- sqrt((coords[i, 1] - coords[i + 1, 1])^2 + (coords[i, 2] - coords[i + 1, 2])^2)

- total_length <- total_length + d

- }

- # calculate stationing of segments

- if (length > 0) {

- stationing <- c(seq(from = 0, to = total_length, by = length), total_length)

- } else {

- stationing <- c(seq(from = 0, to = total_length, length.out = n.parts),

- total_length)

- }

- # calculate segments and store them in a list

- newlines <- list ()

- for (i in 1:(length (stationing) - 1)) {

- newlines[[i]] <- CreateSegment (coords, stationing[i], stationing[i + 1])

- }

- return (newlines)

- }

- segmentSpatialLines <- function (sl, length = 0, n.parts = 0, merge.last = FALSE) {

- stopifnot ((length > 0 || n.parts > 0))

- id <- 0

- newlines <- list()

- sl <- as (sl, "SpatialLines")

- for (lines in sl@lines) {

- for (line in lines@Lines) {

- crds <- line@coords

- # create segments

- segments <- CreateSegments (coords = crds, length, n.parts)

- if (merge.last && length (segments) > 1) {

- # in case there is only one segment, merging would result into error

- l <- length (segments)

- segments[[l - 1]] <- rbind (segments[[l - 1]], segments[[l]])

- segments <- segments[1:(l - 1)]

- }

- # transform segments to lineslist for SpatialLines object

- for (segment in segments) {

- newlines <- c(newlines, Lines (list(Line(unlist(segment))), ID = as.character(id)))

- id <- id + 1

- }

- }

- }

- # transform SpatialLines object to SpatialLinesDataFrame object

- newlines.df <- as.data.frame (matrix (data = c(1:length (newlines)),

- nrow = length (newlines),

- ncol = 1))

- newlines <- SpatialLinesDataFrame (SpatialLines (newlines),

- data = newlines.df,

- match.ID = FALSE)

- return (newlines)

- }

- # 6. Code to calculate the road centerlines --------------------------------------------------

- # Set the user parameters----

- InputDir <- "H:/ForestRoad/Data" # Set the data directory

- OutputDir <- "H:/ForestRoad/Centerlines" # Set the output directory

- CoodSystem <- "+init=epsg:25829" # Set the coordinate system of the data

- FileLS <- "Left_side.shp" # Set the name of left side shapefile

- FileRS <- "Right_side.shp" # Set the name of right side shapefile

- # 6.1 Load road sides from shapefiles----

- LeftSide <- rgdal::readOGR (file.path (InputDir,FileLS))

- RightSide <- rgdal::readOGR (file.path (InputDir,FileRS))

- # 6.2 Divide the right side in segments and transform to points----

- RightSide <- segmentSpatialLines (RightSide,

- length = 0.5,

- merge.last = TRUE)

- RightSide <- sp::remove.duplicates (as (RightSide, "SpatialPointsDataFrame"))

- # 6.3 Calculate the mirror point from the previous points----

- ## 6.3.1 Coordinate transformation----

- raster::crs (RightSide) <- raster::crs (LeftSide)

- LeftSide <- sp::spTransform (LeftSide,

- CRS ("+init=epsg:4326"))

- RightSide <- sp::spTransform (RightSide,

- CRS ("+init=epsg:4326"))

- ## 6.3.2 Mirror points----

- MirrorPoints <- geosphere::dist2Line (RightSide,

- LeftSide)

- MirrorPointsSpatial <- sp::SpatialPointsDataFrame (coords = MirrorPoints[,c(2:3)],

- data = as.data.frame (MirrorPoints),

- proj4string = CRS ("+init=epsg:4326"))

- MirrorPointsSpatial <- sp::spTransform (MirrorPointsSpatial,

- CRS (CoodSystem))

- # 6.4 Calculate middle points between mirror and right points----

- MPoints.df <- data.frame (matrix (data=NA, ncol = 2,nrow=0))

- for (i in c(1:nrow (MirrorPoints))){

- MPoints.df[i,] <- geosphere::midPoint (RightSide@coords[i,],

- MirrorPoints[i,c(2:3)])

- }

- ## 6.4.1 Transform to spatialpoints data frame and save spatial points----

- MPoints <- sp::SpatialPointsDataFrame (coords = MPoints.df,

- data = MPoints.df,

- proj4string = CRS ("+init=epsg:4326"))

- MPoints <- sp::spTransform (MPoints, CRS (CoodSystem))

- rgdal::writeOGR (MPoints,

- dsn = OutputDir,

- layer = "MPoints",

- driver = "ESRI Shapefile",

- overwrite_layer = TRUE)
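
As a dependency-free illustration of the idea behind steps 6.2 to 6.4, the sketch below works on planar toy coordinates: each right-side point is projected onto the nearest point of the left-side polyline (its mirror point), and the centerline point is the midpoint of the pair. The helper names (`nearest_on_segment`, `mirror_point`, `centerline_points`) and the toy coordinates are hypothetical and are not part of the appendix code, which relies on `geosphere::dist2Line` and `geosphere::midPoint` with geographic coordinates instead.

```r
# Project point p onto segment a-b (planar coordinates) and return the closest point.
nearest_on_segment <- function(p, a, b) {
  ab <- b - a
  len2 <- sum(ab^2)
  if (len2 == 0) return(a)              # degenerate segment: both ends coincide
  t <- sum((p - a) * ab) / len2         # relative position along the segment
  t <- max(0, min(1, t))                # clamp to the segment ends
  a + t * ab
}

# Closest point on a polyline (two-column matrix: x, y) to point p.
mirror_point <- function(p, line) {
  best <- NULL
  best_d <- Inf
  for (i in seq_len(nrow(line) - 1)) {
    q <- nearest_on_segment(p, line[i, ], line[i + 1, ])
    d <- sqrt(sum((p - q)^2))
    if (d < best_d) {
      best_d <- d
      best <- q
    }
  }
  best
}

# Midpoints between each right-side point and its mirror point on the left side.
centerline_points <- function(right_pts, left_line) {
  mid <- t(apply(right_pts, 1, function(p) (p + mirror_point(p, left_line)) / 2))
  colnames(mid) <- c("x", "y")
  mid
}

# Toy example (assumed data): two parallel road edges 4 m apart.
left_line <- rbind(c(0, 4), c(10, 4))
right_pts <- rbind(c(1, 0), c(5, 0), c(9, 0))
centerline <- centerline_points(right_pts, left_line)
print(centerline)
```

With real data, the planar projection is only reasonable in a projected coordinate system such as the EPSG:25829 system set in `CoodSystem`.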

Appendix B. Decision Trees with Reduced Point Clouds

References

- Alberdi, I.; Vallejo, R.; Álvarez-González, J.G.; Condés, S.; González-Ferreiro, E.; Guerrero, S.; Hernández, L.; Martínez-Jauregui, M.; Montes, F.; Oliveira, N.; et al. The multiobjective Spanish National Forest Inventory. For. Syst. 2017, 26, e04S.

- Gucinski, H.; Furniss, M.; Ziemer, R.; Brookes, M. Forest Roads: A Synthesis of Scientific Information; General Technical Report PNW-GTR-509; US Department of Agriculture, Forest Service, Pacific Northwest Research Station: Portland, OR, USA, 2001.

- Prendes, C.; Buján, S.; Ordoñez, C.; Canga, E. Large scale semi-automatic detection of forest roads from low density LiDAR data on steep terrain in Northern Spain. iForest 2019, 12, 366–374.

- Lugo, A.E.; Gucinski, H. Function, effects, and management of forest roads. For. Ecol. Manag. 2000, 133, 249–262.

- Sherba, J.; Blesius, L.; Davis, J. Object-Based Classification of Abandoned Logging Roads under Heavy Canopy Using LiDAR. Remote Sens. 2014, 6, 4043–4060.

- Tejenaki, S.A.K.; Ebadi, H.; Mohammadzadeh, A. A new hierarchical method for automatic road centerline extraction in urban areas using LIDAR data. Adv. Space Res. 2019, 64, 1792–1806.

- Zhang, Z.; Zhang, X.; Sun, Y.; Zhang, P. Road Centerline Extraction from Very-High-Resolution Aerial Image and LiDAR Data Based on Road Connectivity. Remote Sens. 2018, 10, 1284.

- Abdi, E.; Sisakht, S.; Goushbor, L.; Soufi, H. Accuracy assessment of GPS and surveying technique in forest road mapping. Ann. For. Res. 2012, 55, 309–317.

- Triglav-Čekada, M.; Crosilla, F.; Kosmatin-Fras, M. Theoretical LiDAR point density for topographic mapping in the largest scales. Geod. Vestn. 2010, 54, 403–416.

- Rieger, W.; Kerschner, M.; Reiter, T.; Rottensteiner, F. Roads and buildings from laser scanner data within a forest enterprise. Int. Arch. Photogramm. Remote Sens. 1999, 32, W14.

- Xu, Y.; Xie, Z.; Feng, Y.; Chen, Z. Road Extraction from High-Resolution Remote Sensing Imagery Using Deep Learning. Remote Sens. 2018, 10, 1461.

- Kearney, S.P.; Coops, N.C.; Sethi, S.; Stenhouse, G.B. Maintaining accurate, current, rural road network data: An extraction and updating routine using RapidEye, participatory GIS and deep learning. Int. J. Appl. Earth Obs. Geoinf. 2020, 87, 102031.

- Roman, A.; Ursu, T.; Fǎrcaş, S.; Lǎzǎrescu, V.; Opreanu, C. An integrated airborne laser scanning approach to forest management and cultural heritage issues: A case study at Porolissum, Romania. Ann. For. Res. 2017, 60, 127–143.

- Dal Poz, A.P.; Gallis, R.A.B.; da Silva, J.F.C.; Martins, É.F.O. Object-Space Road Extraction in Rural Areas Using Stereoscopic Aerial Images. IEEE Geosci. Remote Sens. Lett. 2012, 9, 654–658.

- Quackenbush, L.; Im, I.; Zuo, Y. Remote Sensing of Natural Resources. In Remote Sensing of Natural Resources; chapter Road extraction: A review of LiDAR-focused studies; Wang, G., Weng, Q., Eds.; CRC Press: Boca Raton, FL, USA, 2013; pp. 155–169.

- García, M.; Riaño, D.; Chuvieco, E.; Danson, F. Estimating biomass carbon stocks for a Mediterranean forest in central Spain using LiDAR height and intensity data. Remote Sens. Environ. 2010, 114, 816–830.

- Ruiz, L.Á.; Recio, J.A.; Crespo-Peremarch, P.; Sapena, M. An object-based approach for mapping forest structural types based on low-density LiDAR and multispectral imagery. Geocarto Int. 2018, 33, 443–457.

- Wang, W.; Yang, N.; Zhang, Y.; Wang, F.; Cao, T.; Eklund, P. A review of road extraction from remote sensing images. J. Traffic Transp. Eng. 2016, 3, 271–282.

- Ferraz, A.; Mallet, C.; Chehata, N. Large-scale road detection in forested mountainous areas using airborne topographic lidar data. ISPRS J. Photogramm. Remote Sens. 2016, 112, 23–36.

- Azizi, Z.; Najafi, A.; Sadeghian, S. Forest Road Detection Using LiDAR Data. J. For. Res. 2014, 25, 975–980.

- Liu, D.; Xia, F. Assessing object-based classification: Advantages and limitations. Remote Sens. Lett. 2010, 1, 187–194.

- Pu, R.; Landry, S.; Yu, Q. Object-based urban detailed land cover classification with high spatial resolution IKONOS imagery. Int. J. Remote Sens. 2011, 32, 3285–3308.

- Boyaci, D.; Erdoǧan, M.; Yildiz, F. Pixel- versus object-based classification of forest and agricultural areas from multiresolution satellite images. Turk. J. Electr. Eng. Comput. Sci. 2017, 25, 365–375.

- Sturari, M.; Frontoni, E.; Pierdicca, R.; Mancini, A.; Malinverni, E.S.; Tassetti, A.N.; Zingaretti, P. Integrating elevation data and multispectral high-resolution images for an improved hybrid Land Use/Land Cover mapping. Eur. J. Remote Sens. 2017, 50, 1–17.

- Chen, Y.; Zhou, Y.; Ge, Y.; An, R.; Chen, Y. Enhancing Land Cover Mapping through Integration of Pixel-Based and Object-Based Classifications from Remotely Sensed Imagery. Remote Sens. 2018, 10, 77.

- Aguirre-Gutiérrez, J.; Seijmonsbergen, A.C.; Duivenvoorden, J.F. Optimizing land cover classification accuracy for change detection, a combined pixel-based and object-based approach in a mountainous area in Mexico. Appl. Geogr. 2012, 34, 29–37.

- Malinverni, E.S.; Tassetti, A.N.; Mancini, A.; Zingaretti, P.; Frontoni, E.; Bernardini, A. Hybrid object-based approach for land use/land cover mapping using high spatial resolution imagery. Int. J. Geogr. Inf. Sci. 2011, 25, 1025–1043.

- Cánovas-García, F.; Alonso-Sarría, F. A local approach to optimize the scale parameter in multiresolution segmentation for multispectral imagery. Geocarto Int. 2015, 30, 937–961.

- Definiens. Definiens. Developer 7. Reference Book, document version 7.0.2.936 ed.; Definiens AG: München, Germany, 2007; p. 197.

- González-Ferreiro, E.; Miranda, D.; Barreiro-Fernández, L.; Buján, S.; García-Gutierrez, J.; Diéguez-Aranda, U. Modelling stand biomass fractions in Galician Eucalyptus globulus plantations by use of different LiDAR pulse densities. For. Syst. 2013, 22, 510–525.

- Ruiz, L.A.; Hermosilla, T.; Mauro, F.; Godino, M. Analysis of the influence of plot size and LiDAR density on forest structure attribute estimates. Forests 2014, 5, 936–951.

- Singh, K.K.; Chen, G.; McCarter, J.B.; Meentemeyer, R.K. Effects of LiDAR point density and landscape context on estimates of urban forest biomass. ISPRS J. Photogramm. Remote Sens. 2015, 101, 310–322.

- Buján, S.; González-Ferreiro, E.M.; Cordero, M.; Miranda, D. PpC: A new method to reduce the density of lidar data. Does it affect the DEM accuracy? Photogramm. Rec. 2019, 34, 304–329.

- Kashani, A.G.; Olsen, M.J.; Parrish, C.E.; Wilson, N. A review of LIDAR radiometric processing: From Ad Hoc intensity correction to rigorous radiometric calibration. Sensors 2015, 15, 28099–28128.

- Song, J.; Han, S.; Yuand, K.; Kim, Y. Assessing the possibility of land-cover classification using LiDAR intensity data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2002, 34, 259–262.

- Donoghue, D.N.; Watt, P.J.; Cox, N.J.; Wilson, J. Remote sensing of species mixtures in conifer plantations using LiDAR height and intensity data. Remote Sens. Environ. 2007, 110, 509–522.

- Höfle, B.; Pfeifer, N. Correction of laser scanning intensity data: Data and model-driven approaches. ISPRS J. Photogramm. Remote Sens. 2007, 62, 415–433.

- Buján, S.; González-Ferreiro, E.; Barreiro-Fernández, L.; Santé, I.; Corbelle, E.; Miranda, D. Classification of rural landscapes from low-density lidar data: Is it theoretically possible? Int. J. Remote Sens. 2013, 34, 5666–5689.

- Coren, F.; Sterzai, P. Radiometric correction in laser scanning. Int. J. Remote Sens. 2006, 27, 3097–3104.

- Habib, A.F.; Kersting, A.P.; Shaker, A.; Yan, W.Y. Geometric calibration and radiometric correction of LiDAR data and their impact on the quality of derived products. Sensors 2011, 11, 9069–9097.

- Han, J. Data Mining: Concepts and Techniques, 3rd ed.; Elsevier: Amsterdam, The Netherlands, 2012; p. 703.

- R Development Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2017.

- Buján, S.; Cordero, M.; Miranda, D. Hybrid Overlap Filter for LiDAR Point Clouds Using Free Software. Remote Sens. 2020, 12, 1051.

- Nychka, D.; Furrer, R.; Paige, J.; Sain, S. Fields: Tools for Spatial Data; University Corporation for Atmospheric Research: Boulder, CO, USA, 2017.

- Hijmans, R.J. Raster: Geographic Data Analysis and Modeling; R Package Version 2.4-15; 2019.

- Salleh, M.R.M.; Ismail, Z.; Rahman, M.Z.A. Accuracy assessment of Lidar-Derived Digital Terrain Model (DTM) with different slope and canopy cover in Tropical Forest Region. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 2, 183–189.

- Roussel, J.R.; Auty, D. LidR: Airborne LiDAR Data Manipulation and Visualization for Forestry Applications, R package version 3.0.3; 2020.

- Im, J.; Jensen, J.; Hodgson, M. Object-based land cover classification using high-posting-density LiDAR data. GISci. Remote Sens. 2008, 45, 209–228.

- Höfle, B.; Hollaus, M.; Hagenauer, J. Urban vegetation detection using radiometrically calibrated small-footprint full-waveform airborne LiDAR data. ISPRS J. Photogramm. Remote Sens. 2012, 67, 134–147.

- Teo, T.A.; Huang, C.H. Object-based land cover classification using airborne LiDAR and different spectral images. Terr. Atmos. Ocean. Sci. 2016, 27, 491–504.

- Alonso-Benito, A.; Arroyo, L.A.; Arbelo, M.; Hernández-Leal, P. Fusion of WorldView-2 and LiDAR Data to Map Fuel Types in the Canary Islands. Remote Sens. 2016, 8, 669.

- Antonarakis, A.; Richards, K.; Brasington, J. Object-based land cover classification using airborne LiDAR. Remote Sens. Environ. 2008, 112, 2988–2998.

- Guo, B.; Huang, X.; Zhang, F.; Sohn, G. Classification of airborne laser scanning data using JointBoost. ISPRS J. Photogramm. Remote Sens. 2015, 100, 71–83.

- Zhou, W. An Object-Based Approach for Urban Land Cover Classification: Integrating LiDAR Height and Intensity Data. IEEE Geosci. Remote Sens. Lett. 2013, 10, 928–931.

- Matikainen, L.; Karila, K.; Hyyppä, J.; Litkey, P.; Puttonen, E.; Ahokas, E. Object-based analysis of multispectral airborne laser scanner data for land cover classification and map updating. ISPRS J. Photogramm. Remote Sens. 2017, 128, 298–313.

- Guan, H.; Li, J.; Chapman, M.; Deng, F.; Ji, Z.; Yang, X. Integration of orthoimagery and lidar data for object-based urban thematic mapping using random forests. Int. J. Remote Sens. 2013, 34, 5166–5186.

- Arefi, H.; Hahn, M.; Lindenberger, J. LiDAR data classification with remote sensing tools. In Proceedings of the ISPRS Commission IV Joint Workshop: Challenges in Geospatial Analysis, Integration and Visualization II, Stuttgart, Germany, 8–9 September 2003; pp. 131–136.

- Kim, H.B.; Sohn, G. Point-based Classification of Power Line Corridor Scene Using Random Forests. Photogramm. Eng. Remote Sens. 2013, 79, 821–833.

- Li, N.; Pfeifer, N.; Liu, C. Tensor-Based Sparse Representation Classification for Urban Airborne LiDAR Points. Remote Sens. 2017, 9, 1216.

- Kim, H. 3D Classification of Power Line Scene Using Airborne LiDAR Data. Ph.D. Thesis, York University, Toronto, ON, Canada, 2015.

- Im, J.; Jensen, J.; Tullis, J. Object-based change detection using correlation image analysis and image segmentation. Int. J. Remote Sens. 2008, 29, 399–423.

- Sasaki, T.; Imanishi, J.; Ioki, K.; Morimoto, Y.; Kitada, K. Object-based classification of land cover and tree species by integrating airborne LiDAR and high spatial resolution imagery data. Landsc. Ecol. Eng. 2012, 8, 157–171.

- Buján, S.; González-Ferreiro, E.; Reyes-Bueno, F.; Barreiro-Fernández, L.; Crecente, R.; Miranda, D. Land Use Classification from LiDAR Data and Ortho-Images in a Rural Area. Photogramm. Rec. 2012, 27, 401–422.

- Guo, Q.; Li, W.; Yu, H.; Alvarez, O. Effects of Topographic Variability and Lidar Sampling Density on Several DEM Interpolation Methods. Photogramm. Eng. Remote Sens. 2010, 76, 1–12.

- Samadzadegan, F.; Bigdeli, B.; Ramzi, P. A Multiple Classifier System for Classification of LIDAR Remote Sensing Data Using Multi-class SVM. In Multiple Classifier Systems; El Gayar, N., Kittler, J., Roli, F., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 254–263.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Liaw, A.; Wiener, M. Classification and Regression by randomForest. R News 2002, 2/3, 18–22.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Belgiu, M.; Drǎguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Ghosh, A.; Fassnacht, F.E.; Joshi, P.; Koch, B. A framework for mapping tree species combining hyperspectral and LiDAR data: Role of selected classifiers and sensor across three spatial scales. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 49–63.

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random Forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300.

- O’Connell, J.; Bradter, U.; Benton, T.G. Wide-area mapping of small-scale features in agricultural landscapes using airborne remote sensing. ISPRS J. Photogramm. Remote Sens. 2015, 109, 165–177.

- Strobl, C.; Boulesteix, A.L.; Zeileis, A.; Hothorn, T. Bias in random forest variable importance measures: Illustrations, sources and a solution. BMC Bioinform. 2007, 8, 25.

- Baatz, M.; Schäpe, A. Multiresolution Segmentation: An optimization approach for high quality multi-scale image segmentation. In Angewandte Geographische Informations-Verarbeitung; Strobl, J., Blaschke, T., Griesebner, G., Eds.; Wichmann: Heidelberg, Germany, 2000; Volume XII, pp. 1–12.

- Möller, M.; Lymburner, L.; Volk, M. The comparison index: A tool for assessing the accuracy of image segmentation. Int. J. Appl. Earth Obs. Geoinf. 2007, 9, 311–321.

- Maxwell, A.; Warner, T.; Strager, M.; Conley, J.; Sharp, A. Assessing machine-learning algorithms and image- and lidar-derived variables for GEOBIA classification of mining and mine reclamation. Int. J. Remote Sens. 2015, 36, 954–978.

- Foody, G. Status of land cover classification accuracy assessment. Remote Sens. Environ. 2002, 80, 185–201.

- Olofsson, P.; Foody, G.M.; Herold, M.; Stehman, S.V.; Woodcock, C.E.; Wulder, M.A. Good practices for estimating area and assessing accuracy of land change. Remote Sens. Environ. 2014, 148, 42–57.

- Dengsheng, L.; Hetrick, S.; Moran, E. Land cover classification in a complex urban-rural landscape with QuickBird imagery. Photogramm. Eng. Remote Sens. 2010, 76, 1159–1168.

- Pontius, R.G.; Millones, M. Death to Kappa: Birth of quantity disagreement and allocation disagreement for accuracy assessment. Int. J. Remote Sens. 2011, 32, 4407–4429.

- Wiedemann, C.; Heipke, C.; Mayer, H.; Jamet, O. Empirical Evaluation Of Automatically Extracted Road Axes. In Empirical Evaluation Techniques in Computer Vision; Bowyer, K., Philips, P., Eds.; IEEE Computer Society Press: Los Alamitos, CA, USA, 1998; pp. 172–187.

- Wiedemann, C. External Evaluation of Road Networks. Int. Arch. Photogramm. Remote Sens. 2003, 34, 93–98.

- Gao, L.; Song, W.; Dai, J.; Chen, Y. Road Extraction from High-Resolution Remote Sensing Imagery Using Refined Deep Residual Convolutional Neural Network. Remote Sens. 2019, 11, 552.

- Senthilnath, J.; Varia, N.; Dokania, A.; Anand, G.; Benediktsson, J.A. Deep TEC: Deep Transfer Learning with Ensemble Classifier for Road Extraction from UAV Imagery. Remote Sens. 2020, 12, 245.

- Shan, B.; Fang, Y. A Cross Entropy Based Deep Neural Network Model for Road Extraction from Satellite Images. Entropy 2020, 22, 535.

- Goodchild, M.F.; Hunter, G.J. A simple positional accuracy measure for linear features. Int. J. Geogr. Inf. Sci. 1997, 11, 299–306.

- Alonso, M.; Malpica, J. Satellite imagery classification with LiDAR data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, XXXVIII, 730–741.

- Dinis, J.; Navarro, A.; Soares, F.; Santos, T.; Freire, S.; Fonseca, A.; Afonso, N.; Tenedório, J. Hierarchical object-based classification of dense urban areas by integrating high spatial resolution satellite images and LiDAR elevation data. In Proceedings of the GEOBIA 2010: Geographic Object-Based Image Analysis, Ghent, Belgium, 29 June–2 July 2010; Volume 38, pp. 1–6.

- Teo, T.A.; Wu, H.M. Analysis of land cover classification using multi-wavelength LiDAR system. Appl. Sci. 2017, 7, 663.

- Beck, S.J.; Olsen, M.J.; Sessions, J.; Wing, M.G. Automated Extraction of Forest Road Network Geometry from Aerial LiDAR. Eur. J. For. Eng. 2015, 1, 21–33.

- Craven, M.; Wing, M.G. Applying airborne LiDAR for forested road geomatics. Scand. J. For. Res. 2014, 29, 174–182.

- White, R.A.; Dietterick, B.C.; Mastin, T.; Strohman, R. Forest Roads Mapped Using LiDAR in Steep Forested Terrain. Remote Sens. 2010, 2, 1120–1141.

- Craven, M.B. Assessment of Airborne Light Detection and Ranging (LiDAR) for Use in Common Forest Engineering Geomatic Applications. Master’s Thesis, Oregon State University, Corvallis, OR, USA, 2011.

- Doucette, P.; Grodecki, J.; Clelland, R.; Hsu, A.; Nolting, J.; Malitz, S.; Kavanagh, C.; Barton, S.; Tang, M. Evaluating automated road extraction in different operational modes. In Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XV; International Society for Optics and Photonics: Orlando, FL, USA, 2009; Volume XV, pp. 1–12.

- Sánchez, J.M.; Rivera, F.F.; Domínguez, J.C.C.; Vilariño, D.L.; Pena, T.F. Automatic Extraction of Road Points from Airborne LiDAR Based on Bidirectional Skewness Balancing. Remote Sens. 2020, 12, 2025.

- Zahidi, I.; Yusuf, B.; Hamedianfar, A.; Shafri, H.Z.M.; Mohamed, T.A. Object-based classification of QuickBird image and low point density LIDAR for tropical trees and shrubs mapping. Eur. J. Remote Sens. 2015, 48, 423–446.

- David, N.; Mallet, C.; Pons, T.; Chauve, A.; Bretar, F. Pathway detection and geometrical description from ALS data in forested montaneous area. In Proceedings of the Laser Scanning 2009, IAPRS, Paris, France, 1–2 September 2009; Volume XXXVIII, Part 3/W8. pp. 242–247.

- Wu, H.; Li, Z.L. Scale issues in remote sensing: A review on analysis, processing and modeling. Sensors 2009, 9, 1768–1793.

| Group | Metrics | Attributes | Description | Resol. | Ref. |

|---|---|---|---|---|---|

| E-H | nSM | Z, R | Normalized surface model from the maximum value of the first returns. | r | [48] |

| nSM | Z, R | Normalized surface model from the minimum value of the non-first returns. | r | [49] | |

| nM | Z | Normalized model of medium-sized vegetation from the maximum value of the returns which Z ≤ 4 m. | r | [50] | |

| RDB | Z | Relative density bins show the ratio between the non-groun points by height intervals and the total non-ground points in the voxel (height intervals: RDB: 0.5 m < Z ≤ 1 m; RDB: 1 m < Z ≤ 2 m; RDB: 2 m < Z ≤ 3 m; RDB: 3 m < Z ≤ 4 m; RDB: 4 m < Z ≤ 6 m; RDB: 6 m < Z ≤ 8 m; RDB: 8 m < Z ≤ 12 m; RDB: Z > 12 m). | r | [51] | |

| RD | Z | Accumulated relative density is the ratio between the non-ground points and total points in the voxel. | r | [51] | |

| Q | Z | Height percentiles show the values below which a given percentages (1%, 5%, 10%, 25%, 50%, 75%, 90%) of observations falls (minimum height threshold = 0.5 m). | r | [17] | |

| CS | Z | Coefficient of skewness measures the data asymmetry compared to a normal distribution. CS is the ratio between the third moment about the mean divided by the second moment about the mean. | r | [52] | |

| CK | Z | Coefficient of kurtosis measures the data peakedness. CK is the ratio between the fourth moment about the mean and the second moment about the mean. | r | [52] | |

| I | I | I, R | Intensity image is calculated from the 68.3% quantile of normalized intensity values of the first returns. | r | [52,53] |

| I | I, R | Intensity image is calculated from the 68.3% quantile of corrected intensity values of the non-first returns. | r | [54] | |

| I | I | Intensity image is calculated from the 68.3% quantile of corrected intensity values of the returns which Z ≤ 4 m. | r | — | |

| I | I, R | Difference of intensity between returns is the difference between the maximum intensity value of first returns and the minimum intensity value of non-first returns. If non-first returns do not exist, the minimum intensity value of first returns is considered. | r | [52] | |

| I | I | Standard deviation of the intensity. | r | [55] | |

| I | I | Coefficient of variation is the ratio of the standard deviation and mean using the LiDAR intensity data. | r | — | |

| IQ | I | Intensity percentiles show the values below which a given percentages (10%, 25%, 50%, 75%, 90%) of observations falls (minimum height threshold = 0). | r | [55] | |

| DWRS | I | Density weighted reflection sum is the sum of the corrected intensity of all returns multiplied by a correction factor. The correction factor is the mean point density of the whole dataset divided by the density at each voxel. | r | [16] | |

| Re | SM | Z, R | Difference of elevation between returns is the difference between the maximum elevation value of first returns and the minimum elevation value of non-first returns. If non-first returns do not exist, the minimum elevation value of first returns is considered. | r | [53,56] |

| nSM | Z, R | Normalized difference of height between returns is the difference between the maximum height value of first returns and the minimum height value of non-first returns, divided by the sum of these two values. If non-first returns do not exist, the minimum height value of first returns is considered. | r | [56,57] | |

| PNT | Z | The penetrability is the ratio of the number of points with Z ≤ 2 m to the total number of points in each voxel/cell. | r | [58,59] | |

| RR | R | Return ratio is the percentage of first and intermediate returns in each voxel/cell. | r | [49,50] | |

| TR | Z, R | Terrain return is the percentage of single and last returns in each voxel/cell. | r | [53,60] | |

| VR | Z, R | Vegetation return is the percentage of first and intermediate returns and points with Z > 12 m in each voxel/cell. | r | [53,60] | |

| Ro | SD | Z | Standard deviation of the elevation. | r | [61] |

| SD | Z | Standard deviation of the height. Only points with Z > 0.5 m are considered. | r | [50,62] | |

| P | Z | Slope image is calculated using nSM as input data. | r | [63] | |

| CV | Z | Coefficient of variation is the ratio of the standard deviation to the mean of the LiDAR-derived elevation data. | r | [64] | |

| N | Z | Normal angle is calculated from the 68.3% quantile of angles (obtained for each point) between the normal vector to (see Footnote ) and the vertical axis. | r | [56,58] | |

| D | Z | Vertical distance from each point to . The maximum vertical distance of points in the voxel is the cell value. | r | [59] | |

| RSM | Z | Root mean square of D. | r | [60] | |

| T | I, I, I, I, I, I, I, I | I | Texture metrics of the I, SM and nSM rasters, respectively, computed with the grey-level co-occurrence matrix (GLCM). The calculated measures are mean, variance, contrast, dissimilarity, homogeneity, entropy, second moment and correlation, respectively. The textures are calculated using all directions. | r | [55,56] |

| Z, Z, Z, Z, Z, Z, Z, Z | SM | r | [50,65] | ||

| Z, Z, Z, Z, Z, Z, Z, Z | nSM | r | [61,62] |
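
Several variables in the table above are defined only in words. As a rough sketch, and assuming the conventional moment-based definitions, they can be written for the $n$ points of a voxel/cell as follows. The symbols $n$, $z_i$, $\bar{z}$, $m_k$, $s$, $n_{Z \le 2\,\mathrm{m}}$, $h^{\max}_{\mathrm{first}}$ and $h^{\min}_{\mathrm{non\text{-}first}}$ are notation introduced here for illustration and are not taken from the original. CK is assumed to take the usual form in which the fourth moment is divided by the squared second moment.

$$
\begin{aligned}
m_k &= \frac{1}{n}\sum_{i=1}^{n}\left(z_i-\bar{z}\right)^{k}, &
\mathrm{CK} &= \frac{m_4}{m_2^{2}},\\[4pt]
\mathrm{CV} &= \frac{s}{\bar{z}}, &
\mathrm{PNT} &= \frac{n_{Z \le 2\,\mathrm{m}}}{n},\\[4pt]
\mathrm{nSM\ difference} &= \frac{h^{\max}_{\mathrm{first}}-h^{\min}_{\mathrm{non\text{-}first}}}{h^{\max}_{\mathrm{first}}+h^{\min}_{\mathrm{non\text{-}first}}}
\end{aligned}
$$

Here $s$ is the standard deviation of the values in the cell. For the intensity-based CV, the same expression applies with intensity values in place of elevations.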

| Equation | Description | Ref. |
|---|---|---|
| | The ratio of the records correctly extracted to the total number of relevant records within the ground-truth data, i.e., the percentage of the reference roads which could be extracted. Optimum value = 1. | [3,12,20,81,82,83,84,85] |
| | The ratio of the number of relevant records extracted to the total number of relevant and irrelevant records retrieved, i.e., the percentage of correctly extracted road data. Optimum value = 1. | [3,12,20,81,82,83,84] |
| | The ratio of the overlapping area of extracted records and ground-truth records to the total area. It measures the goodness of the final result by combining completeness and correctness into a single measure. Optimum value = 1. | [3,12,20,81,82,83,84,85] |
| | The harmonic mean of completeness and correctness. | [83,84,85] |
| | The number of gaps per kilometer of reference data. It indicates the fragmentation of the extraction results. Optimum value = 0. | [81] |
| | The average gap length per gap in the study area. It helps in analyzing the fragmentation of the extracted output. Optimum value = 0. | [81,84] |
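
The measures described in the table above are usually expressed through the counts of true positives (TP), false positives (FP) and false negatives (FN). The forms below are the standard ones from the road-extraction literature and are offered only as an assumed sketch, not as the original equations. The symbols $n_{\mathrm{gaps}}$ (number of gaps), $L_{\mathrm{ref}}$ (reference road length in km) and $\ell_g$ (length of gap $g$) are notation introduced here for illustration.

$$
\begin{aligned}
\text{Completeness} &= \frac{TP}{TP+FN}, &
\text{Correctness} &= \frac{TP}{TP+FP},\\[4pt]
\text{Quality} &= \frac{TP}{TP+FP+FN}, &
F_1 &= \frac{2\cdot\text{Completeness}\cdot\text{Correctness}}{\text{Completeness}+\text{Correctness}},\\[4pt]
\text{Gaps per km} &= \frac{n_{\mathrm{gaps}}}{L_{\mathrm{ref}}}, &
\text{Mean gap length} &= \frac{1}{n_{\mathrm{gaps}}}\sum_{g=1}^{n_{\mathrm{gaps}}}\ell_g
\end{aligned}
$$

As a purely hypothetical worked example (these numbers are not results from the study), take $TP = 80$, $FN = 20$ and $FP = 10$:

$$
\text{Completeness} = \frac{80}{100} = 0.80,\quad
\text{Correctness} = \frac{80}{90} \approx 0.889,\quad
\text{Quality} = \frac{80}{110} \approx 0.727,\quad
F_1 = \frac{160}{190} \approx 0.842
$$

Quality can never exceed either completeness or correctness, because its denominator counts both kinds of error.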

| Roads Totally or Partially Included Below Canopy Cover | 8 points/m², PR | 8 points/m², DR | 4 points/m², PR | 4 points/m², DR | 2 points/m², PR | 2 points/m², DR | 1 point/m², PR | 1 point/m², DR |
|---|---|---|---|---|---|---|---|---|
| Yes | 91% | 78% | 91% | 75% | 91% | 61% | 79% | 37% |
| No | 95% | 85% | 93% | 84% | 94% | 71% | 79% | 59% |

| Measure | Statistic | Factor: Penetrability | Factor: Slope | Factor: Road Surface | Factor: Point Density |
|---|---|---|---|---|---|
| Completeness | Df | 4 | 2 | 1 | 3 |
| | F value | 5.394 | 85.222 | 7.632 | 5.068 |
| | Pr (>F) | *** | *** | ** | ** |
| Correctness | Df | 4 | 2 | 1 | 3 |
| | F value | 0.890 | 24.296 | 17.776 | 4.656 |
| | Pr (>F) | 0.4734 | *** | *** | ** |
| Quality | Df | 4 | 2 | 1 | 3 |
| | F value | 4.279 | 62.663 | 27.715 | 4.328 |
| | Pr (>F) | ** | *** | *** | ** |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Buján, S.; Guerra-Hernández, J.; González-Ferreiro, E.; Miranda, D. Forest Road Detection Using LiDAR Data and Hybrid Classification. Remote Sens. 2021, 13, 393. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13030393
