1. Introduction

Since the 1960s, satellite technology has developed rapidly, and remote sensing technology has been widely used, for example, in environmental monitoring and geological exploration, map navigation, precision agriculture, and national defense security [

1]. Remote sensing data is collected by satellite sensors with different imaging modes. The image information contained in these different data has both redundant parts and complementary parts in space. Remote sensing images can obtain visible light images that we are familiar with and also multispectral and hyperspectral images with more abundant spectral information. All objects on Earth emit or reflect externally in the form of electromagnetic waves and absorb energy internally. Because of the essential differences between objects, the electromagnetic characteristics they exhibit are also different. Remote sensing images provide information about these objects and provide a snapshot of different aspects of objects on the Earth’s surface. The combination of different vision technologies and remote sensing technologies is more conducive for us to accomplish high-level vision tasks.

Limited by different satellite sensors, remote sensing imaging technology can acquire only panchromatic (PAN) images with high spatial resolution and multispectral (MS) images with high spectral resolution. For example, although Earth observation satellites such as QuickBird, GeoEye, Ikonos, and WorldView-3 can capture two different types of remote sensing images, satellite sensors cannot acquire MS images with high spatial resolution due to the contradiction between spectrum and space, which cannot solve the current research problems. This problem has led to the rapid development of multisource information fusion technology. Therefore, a large number of studies are currently devoted to the fusion of MS images and PAN images. The fusion technology of MS images and PAN images studied in this paper extracts rich spectral information and spatial information from MS images and PAN images, respectively, and fuses different image information together to generate composite images with hyperspectral resolution and spatial resolution. This kind of fusion algorithm has become an important preprocessing step for remote sensing feature detection and various land problem analyses, providing high-quality analysis data for later complex problems.

To date, remote sensing image fusion algorithms can be roughly divided into component replacement (CS) [

1,

2,

3,

4], multi-resolution analysis (MRA) [

5,

6,

7,

8,

9,

10,

11,

12], model-based optimization (MBO) [

13,

14,

15,

16,

17,

18,

19,

20,

21], and machine learning methods [

22,

23,

24,

25,

26,

27,

28,

29,

30,

31,

32,

33,

34,

35,

36,

37,

38,

39,

40,

41,

42,

43,

44,

45]. At present, the method of CS is the earliest and most mature fusion algorithm, where the main idea is to use the quantitative computing advantage of the color space model to linearly separate and replace the spectral and spatial information of the image, and then recombine the replaced image information to obtain the target fusion result. Intensity-hue saturation (IHS) [

1], principal component analysis (PCA) [

2], Gram–Schmidt (GS) [

3], and partial substitution (PRACS) [

4] all apply the idea of component replacement. In practical applications, although this kind of algorithm can improve the resolution of MS images simply and effectively, it is usually accompanied by serious spectral distortion.

The MRA method has also been successfully applied in many aspects of remote sensing image fusion. The fusion method can be divided into three steps. First, the source image is decomposed into multiple scales by using pyramid or wavelet transform. Second, each layer of the source image is fused, and finally, the fusion result is obtained by inverse transformation. Common MRA methods include Laplacian pyramid decomposition [

5,

6,

7] and wavelet transform [

8,

9,

10,

11,

12]. Although these methods may affect the clarity of the image, they have good spectral effects.

The MBO method establishes the relationship model between low-resolution (LR) multispectral (LRMS) images, PAN images, and high-resolution (HR) multispectral images (HRMS) and combines the prior characteristics of HRMS images to construct the objective function to reconstruct the fused image. Some classic prior models include the Gauss–Markov random field model [

13,

14], variational model [

15,

16,

17], sparsity regularization [

18,

19,

20,

21]. Such methods can achieve great improvements in gradient information extraction.

With the rapid development of the field of artificial intelligence, deep learning technology has achieved great success in the field of vision. Convolutional neural network (CNN) has shown remarkable results in the field of deep learning. In the field of computer vision, CNN has been successfully used in a large number of fields such as detection, segmentation, object recognition, and image. CNN is an input-to-output mapping, which can learn numerious mapping relations between input and output. Its characteristic is that end-to-end training can effectively learn the mapping relations between LRMS and HRMS images. Training is data driven and does not require manual setting of weight parameters. Due to the complex spatial structure of remote sensing images and the local similarity between geographic information, the contortion invariance and local weight sharing of CNN have unique advantages in dealing with this problem. Its layout is closer to the actual biological neural network, and weight sharing reduces the complexity of the network, especially the feature that the image of multidimensional input vector can be directly input into the network, which avoids the complexity of data reconstruction in the process of feature extraction and classification. The CNN technique can retain the spectral information of the image to a great extent while maintaining good spatial information. The idea of this kind of method is inspired by super-resolution. Inspired by a deep convolutional network for image super-resolution (SRCNN) [

22], Masi et al. [

23] proposed pan-sharpening by convolutional neural networks (PNNs) of a three-layer network. This is one of the early applications of convolutional neural networks in remote sensing. With the continuous deepening of deep learning networks, the fusion results obtained by the complex and simple network structure can no longer meet the demand for images. Convolutional neural network has been widely used in remote sensing image fusion and its structure has become more and more complex. Wei et al. [

24] proposed a deep residual network (DRPNN) to extract more abundant image information. Yang et al. [

25] proposed a deep network architecture for pan-sharpening (PanNet), a residual structure of high-pass domain training, on the basis of the previous deep learning network and better retained the spectral information by means of spectral mapping. By learning the high-frequency components of images, a correlation mapping relationship was obtained, and better fusion results were obtained.

PanNet also has certain limitations. First, PanNet performs feature extraction by directly superimposing PAN and MS images, resulting in the network’s inability to fully utilize the different features of PAN and MS images and its insufficient utilization of different spatial information and spectral information. Second, PanNet only uses a simple residual structure, which cannot fully extract image features of different scales and lacks the ability to recover details. Finally, the network directly outputs the fusion result through a single-layer convolutional layer, failing to make full use of all the features extracted by the network, which affects the final fusion effect.

In response to the above problems, we consider using a multiscale perception dense coding convolutional neural network (MDECNN) to improve the learning ability and reconstruction ability of the model. The problem of gradient disappearance caused by a large number of network layers is avoided by means of skip connections. The different features of MS and PAN images are extracted by dual-stream network input. At the same time, multiscale blocks are designed to extract features from PAN images with richer spatial information. Two different multiscale feature extraction blocks are used to enhance the features of the network, and then the spectral and spatial features of the image are reconstructed with complete detailed information through dense coding blocks. Finally, the fusion image reconstruction is completed through a three-layer super-resolution network.

The main contributions of this paper are as follows:

In view of the limitations of image spatial information acquisition, a single multiscale feature extraction block is used for feature extraction of PAN images with high spatial resolution, which enriches the spatial information of network extraction.

We propose a feature extraction block composed of two multiscale blocks with different receptive fields to enhance the image details in the training network and reduce the loss of details in the network training process.

We design a dense coding structure block to reconstruct the spectral and spatial features of the image and improve the spectral quality and detail recovery capabilities of the fused image.

We propose a comprehensive spectral loss, adding spatial constraints on the basis of common L2 loss, reducing the loss of edge information during training, and enhancing the spatial quality of fused images.

The rest of this paper is arranged as follows: In

Section 2, the background of image blending and related work are introduced, and the CNN-based pan-sharpening approach is briefly reviewed; in

Section 3, we describe our proposed multiscale dense network structure in detail; in

Section 4 we present the experimental results and compare them with other methods; in

Section 5, we discuss the structure of multiscale dense networks; and finally, I

Section 6, we provide conclusions.

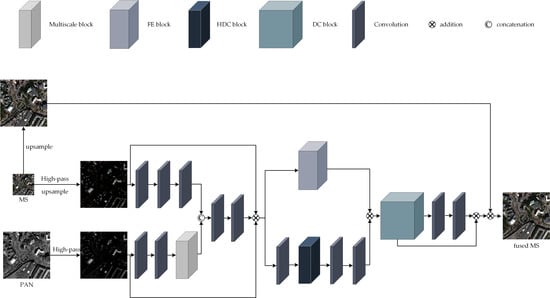

3. The Proposed Network

In this chapter, we introduce the proposed MDECNN, as shown in

Figure 2. The dual-stream network is used to extract the remote sensing image information contained in the MS

image and PAN

image, and the fused image

is obtained after feature processing and image reconstruction of the fusion network as:

where H denotes the high-pass information,

denotes the convolution operation, and

denotes the multiscale feature extraction. Finally, we concatenate

and

to from the fusion features as follows:

where

refers to the concatenation operation. Then, the output

is obtained through the feature enhancement module and the output

is obtained through the dense coding structure as:

where

denotes the feature enhancement operation and

denotes the dense coded operation. The final prediction

is as follows:

We attempt to use deep neural networks to learn the map between the input MS, PAN, and output

. We us

to represent the reference target and use Loss regularization as the loss function of the training to measure the magnitude of the error between

and

as follows:

where the function

is the matrix norm, especially

is the square of Frobenius norm.

To obtain more spatial features, a multiscale feature extraction module was designed to extract the feature information of the PAN image. The feature images extracted from the dual-stream network are superimposed into the trunk network, and the features of different receptive fields of the image are obtained by using the parallel dilated convolution block. After the two-layer convolution, the feature is enhanced by skip connection with the feature enhancement module. Then, a dense coding structure similar to the U-Net structure, is sent for feature fusion and reconstruction. Finally, the fusion image is obtained by enhancing the spectral information of the image by spectral mapping. The weight parameters of the whole network are obtained by learning many nonlinear relationships between simulated data and do not need to be set manually. The details of our proposed network architecture are described below.

3.1. Multiscale Feature Extraction Block

The depth and width of the network have a significant influence on the image fusion results. With a deeper network structure, the network can learn richer feature information and context-dependent mapping. However, with the deepening of the network structure, gradient explosion, gradient disappearance, training difficulties, and other problems often occur. To solve relevant problems, He et al. [

47] proposed a residual network structure, the ResNet network structure. By means of skip connection, the training process is optimized, while the network depth is guaranteed. In terms of the width of the network, Szegedy, C. et al. [

48] proposed an inception structure, which fully expands the width of the network and enables the network to obtain more characteristic information.

Inspired by GoogLeNet, a multiscale feature extraction block was designed to extract the rich spatial features contained in PAN images.

Figure 3 shows the multiscale blocks we designed for feature extraction of PAN images.

The convolution kernels with sizes of , , , and are used for feature extraction of PAN images after two convolution layers. The size of the first three convolution kernels to extract the characteristics of the different sizes of receptive field, using the convolution for dimension reduction of character figure, across the channel characteristics of integration and model simplification, by the multiscale feature extraction piece, we can get rich images in PAN image information.

3.2. Feature Enhancement (FE) Block

The feature enhancement module is shown in

Figure 4.

Remote sensing images contain a large number of buildings, vegetation, mountains, water, and other large-scale objects and contain relatively small-scale target objects such as vehicles, ships, and roads. The traditional convolutional neural network selects the convolution kernel of fixed size, and the receptive field is relatively small, so the context information of the image is not sufficiently learned. To solve this problem, in this paper, the feature enhancement block is proposed. As shown in

Figure 4, we select three sensory fields of

,

, and

and do not use an activation function to retain image information when passing through the first convolutional layer. After the first convolutional layer, we use a

convolutional layer to enlarge the feature sensory fields and obtain more contextual information.

To enhance each feature detail in the remote sensing image, a dilated convolution block, as shown in

Figure 5, is designed in the trunk network to extract the multiscale details of the image, and then the features extracted by a skip connection and parallel feature extraction block are stacked to achieve the effect of feature enhancement.

We follow the experimental setting of Fu et al. [

30] and set the dilation rate of the dilated convolution block to 1, 2, 3, and 4. The magnitude of the receptive field of the convolution kernel of the dilated convolution is

, where

d represents the dilation rate, and

k represents the size of the convolution kernel. In the parameter setting, the size of the standard convolution kernel and the dilated convolution kernel are both

, the activation function is ReLU, and the number of filters is 64. In addition, after the dilated convolution of each layer, a convolutional layer with the same dilation rate is added to further expand the receptive field. Finally, a convolution layer of size

is used for dimensionality reduction to reduce computing consumption.

Although the dilated convolution can increase the receptive field of the convolution kernel by expanding the dilation coefficient, it has the problem of “meshing” [

49]. In remote sensing images, there are a large number of buildings, vegetation, vehicles, and other objects. These feature-rich objects tend to gather in a large number in the same area, so that there is a strong similarity in the spectrum and spatial structure. Therefore, the use of dilated convolution will lead to the loss of local information from remote sensing images.

To solve this problem, the feature enhancement method, mentioned above, is used to extract multiscale features through parallel feature extraction blocks and to fuse and enhance the features extracted by dilated convolution blocks in the trunk network to improve the robustness of feature extraction in various complex remote sensing images. In addition, through such a feature extraction method, we can extract more perfect spectral information and spatial information and reduce the feature loss in the fusion process.

3.3. Dense Coding (DC) Structure

Considering the abundance of remote sensing image characteristics, a common network structure would not be able to fully extract the deep image characteristics, easily causing information loss in the process of convolution, therefore, we designed a dense coding block to fully image the deep character extraction and avoid common coding in the network layer useful information leakage problems. As shown in

Figure 6, in the dense coding network, the feature mapping obtained at each layer is cascaded with the input at the next layer, and the information of the middle layer is retained to the greatest extent by adding channels. At the same time, the feature multiplexing of dense connections does not introduce redundant parameters and does not increase the computing consumption.

There are three advantages to using a block-intensive architecture which are the following: (1) it can hold as much information as possible; (2) this architecture can improve the information flow and gradient flow in the network, making the network easy to train; and (3) intensive contact has a regularized effect, which reduces the over adaptation of tasks [

50].

In the decoding stage, to avoid information loss caused by channel plummeting, a U-Net decoding structure similar to the structure of the encoder is used, which is reduced to the number of channels equivalent to the encoder each time to facilitate the full extraction and fusion of features. Its structure is shown in

Figure 7.

In the encoding and decoding process, the convolution kernel size is set as and the number of channels is 64, so the reduction of channels at each layer in the decoding process is 64. Through the dense coding structure proposed by us, the deep features of remote sensing images can be fully extracted, and the feature details can be fully recovered in the subsequent image reconstruction process. With the deepening of the network depth, spectral information is often seriously lost. Inspired by PanNet, the spectral mapping method is used to enhance the spectral details of the image in the final image reconstruction part of the network to ensure the spectral quality of the fused image.

3.4. Loss Function

In addition to the network architecture, the loss function is another important factor affecting the fusion image quality. The loss function optimizes the parameters by minimizing the loss between the reconstructed image and the corresponding ground live HR image. Thus, we give a set of training sets

, where

and

represent the PAN image and LRMS image, respectively, and

is the corresponding HRMS image. In the existing remote sensing image fusion literature, most of the loss functions used are

L2 norm, i.e., root mean square error (MSE). By minimizing the prediction error between the output data

and the standard image, the nonlinear mapping relationship between the input image and the output image is learned. The

L2 loss function is defined as follows:

where

N is the number of small batch samples and

i is the

ith image. We select the Adam optimizer to carry out back propagation and optimize the allocation of all parameters in the iterative network.

Sharpening the image using

L2 losses smooths the image and penalizes larger outliers but is less sensitive to smaller outliers, meaning that the learning process slows significantly as the output approaches the target. To make further improvements, additional small outliers are processed, and image edge information is retained. The

L1 norm provides the better effect, with the more pronounced smooth

L1 loss defined as follows:

Selecting the

L2 norm alone will cause the image to be too smooth and the edge information will be lost. The use of

L1 norm alone will lead to insufficient training convergence and serious spectral noise. In view of this problem, we have designed a mixed loss function that uses the combination of

L2 loss and smooth

L1 loss. The loss of spectral information is constrained by

L2 loss and smooth

L1 is used as the spatial loss constraint. The mixed loss is defined as follows:

Through experience, the value of is set to 0.3.

4. Experimental Analysis

In this section, we will demonstrate the superiority of the proposed method through experimental results on multiple datasets. By comparing and evaluating the training and test results of the models with different network parameters, the best model is selected for the experiment. Finally, the visual and objective indicators of our best model are compared with several other existing methods to prove the superior performance of the proposed method.

4.1. Dataset and Model Training

To evaluate the performance of our proposed dense coding network based on multiscale perception, we conducted model training and testing on datasets collected by three different satellite sensors, GeoEye-1, Quickbird, and WorldView-3. The band number and spatial resolution of different satellite sensors are shown in

Table 1. For the convenience of training, the input images of each dataset are uniformly set as

image patch, and the size of each training batch is 4. The train set is used for network training, while the test set is used to evaluate network performance. The spatial resolution of the train set and the test set are shown in

Table 2.

The maximum number of training sessions is set to 350,000. For the Adam optimizer, we set the learning rate to 0.001 and the exponential decay factor to 0.9. We set the weight attenuation to . We use the proposed comprehensive loss as a loss function to minimize the prediction error of the model, and the training time of the overall program is approximately 26 h 54 min.

The network is implemented in the TensorFlow deep learning framework and trained on an NVIDIA Tesla V100-SXM2-32GB, and the results are presented with ENVI Classic 5.3.

To facilitate visual observation, the red, green, and blue bands of the multispectral image are used as the imaging bands of the RGB image to form color images. However, in the calculation of objective indicators, other bands of the image will not be ignored.

4.2. Compare Algorithms and Evaluation Methods

For the three experimental datasets, we choose several typical representative methods of pan-sharpening as comparison methods. These include four CS-based methods, i.e., GS [

3], PRACS [

4], IHS [

1], and HPF [

29]; two MRA-based methods, i.e., DWT [

6] and GLP [

8]; one model-based method, i.e., SIRF [

13]; and two methods based on the CNN, i.e., PanNet [

25] and PSGan [

51].

In real application scenes of remote sensing images, HRMS images are often lacking. Therefore, in the comparison algorithm, we use the following two kinds of experiments for comparison: one is the simulation experiment with HRMS images as a reference, and the other is the real experiment without HRMS images. The evaluation criteria of the reference images are as follows: the spectral angle mapper (SAM), the relative average spectral error (RASE), the root mean squared error (RMSE), the universal image quality index (QAVE), the relative dimensionless global error in synthesis (ERGAS), the correlation coefficient (CC), and the structural similarity (SSIM). The other assessments are based on the quality with no reference index (QNR) and the spectral and spatial components ( and ).

4.3. Simulated Experiments

4.3.1. Experiment with WorldView-3 Dataset with Eight Bands

Figure 8 shows a set of fusion results on WorldView-3 satellite data; the data are 8-band data.

Figure 8a,b show the HRMS and PAN images with resolution, respectively.

Figure 8c–i are seven non-deep learning pan-sharpening methods, and

Figure 8j–l are deep learning methods.

Figure 8 shows that seven methods of non-deep learning are accompanied by relatively obvious spectral deviation. Among these methods, DWT and SIRF exhibit obvious spectral distortion, while the edge details of the image are blurred. The IHS fusion image shows partial detail loss in some spectral distortion areas and fuzzy artefacts in road vehicle areas. The HPF, GS, GLP, and PRACS methods show good performance in the overall spatial structure, but they are distorted and blurred in both spectrum and detail. For the fusion method of deep learning, the image texture information performs well, but in terms of spectral information, the fusion method of PSGan shows obvious changes in partial regional spectra, while other differences are not obvious. To further distinguish the image quality, we use the objective evaluation index mentioned before for further comparison. The results are shown in

Table 3.

As shown in

Table 3, from the perspective of the reference index of WorldView-3 dataset, the pan-sharpening method of deep learning is obviously better than the fusion method of non-deep learning. Among these methods, GLP is superior to other non-deep learning methods in overall effect, and the spectral information of fusion results obtained by HPF and GLP is superior to that obtained by other non-deep learning methods. GLP and PRACS are more complete in preserving spatial information than those of the non-deep learning methods. The results obtained by the PRACS, HPF, and GLP methods showed no significant difference in image quality. In the pan-sharpening method of deep learning, the effectiveness of the network structure directly affects the fusion effect. Therefore, the method proposed in this paper is obviously superior to the existing fusion methods, which proves the effectiveness of the method proposed in this paper.

4.3.2. Experiment with QuickBird Dataset

Figure 9 shows a set of fusion results on QuickBird satellite data with a dataset of 4-band images.

Figure 9a,b show HRMS and PAN images with resolution,

Figure 9c–i represent seven non-deep learning pan-sharpening methods, and

Figure 9j–l represent deep learning methods.

In

Figure 9, the non-deep learning method obviously has spectral distortion. From

Figure 9c–i, the traditional fusion method more or less exhibits the whole spectrum distortion phenomenon. Among the methods, DWT, his, and SIRF present the most severe spectral distortion. GLP and GS present obvious edge blurring in the spectral distortion area, and the PRACS method presents artefacts in the image edge. The deep learning method has good fidelity in both spectral information and spatial information, among which the method proposed by us is the most similar to the original image in both spectral information and spatial information.

Table 4 below objectively analyses each method in terms of index values.

As shown in

Table 4, the QuickBird experimental assessment results show that the performance of the pan-sharpening method, which is deep learning on the 4-band dataset and is significantly better than the traditional method. In terms of the experimental results of these data, HPF has achieved an overall better performance in traditional methods. Although the HPF method and GLP method are not significantly different in other indicators, the HPF method is obviously superior to the GLP method in maintaining spectral information. PanNet and PSGan have good performance in the deep learning method, but the method proposed in this paper is the best among all the existing methods in terms of all the indicators.

4.3.3. Experiment with GeoEye-1 Dataset

In this section, experiments were performed using a 4-band dataset from GeoEye-1, and the image size is

.

Figure 10 shows the experimental results of a set of images.

Figure 10a,b show HRMS and PAN images, respectively.

Figure 10c–i represent seven non-deep learning pan-sharpening methods, while

Figure 10j–l represent deep learning methods.

Figure 10 shows that obvious spectral distortion occurs in the DWT, GS, IHS, and SIRF methods, and blurring or loss of edge details occurs in all seven traditional methods. The PRACS method retains good spectral information, but the spatial structure is too smooth, the edge information is severely lost, and there are many artefacts. Compared with GLP and HPF methods, the overall effect is better. In the deep learning method, the PSGan method exhibits spectral distortion in local areas, and the overall effect of deep learning is better than traditional methods. The image from our proposed method is the closest to the original image. The index values shown in

Table 5 objectively show the comparison of various methods.

Combined with

Table 5, the experimental evaluation indexes of GeoEye-1, QuickBird, and WorldView-3 are roughly the same, which proves the robustness of the network structure proposed by us. Through the above experimental results, the numerical values clearly support the proposed solution, thus, indicating that the proposed solution achieves a significant performance improvement on the same satellite or different satellite, 8-band or 4-band datasets.

4.4. Experiment with GeoEye-1 Real Dataset

Figure 11 shows the pan-sharpening results of the GeoEye-1 image size dataset under real data from unreferenced images.

Figure 11a,b show the MS and PAN images, respectively.

Figure 11c–l show the DWT, GLP, GS, HPF, IHS, PRACS, SIRF, PanNet, PSGan, and our fusion results of the proposed method.

By observing the fusion images, DWT, IHS, and SIRF all can be found to have obvious spectral distortion, and the edge information of SIRF appears fuzzy. Although the overall spatial structure information is well preserved in the GS and GLP methods, local information is lost. The merged image in the PRACS method is too smooth, resulting in severe loss of edge details. PanNet, PSGan, and our proposed method have the best overall performance, but spectral distortion appears in some regions of PSGan.

Table 6 shows that the fusion method proposed by us is the most effective on the real dataset without reference images.

6. Conclusions

In this paper, we propose a deep learning-based method to solve the pan-sharpening problem by combining convolutional neural network technology and domain-specific knowledge. On the basis of the existing pan-sharpening solutions, multiscale feature blocks are designed to process PAN images separately to extract richer and more complete spatial information, feature enhancement blocks and dense coding networks are used to learn more accurate mapping relationships, and comprehensive loss functions are designed to constrain image loss. Better fusion images can be obtained with full consideration of different spectral and spatial characteristics. In remote sensing images, regional spatial structure, land cover and development characteristics are diverse. Because the method proposed in this paper is more sensitive to multiscale features in theory, MDECNN can achieve better results in different types of remote sensing images in areas with different sizes of seeding sites, diverse structures in densely built areas, and different urban greening proportions. It is significant for remote sensing image fusion of complex image information. At the same time, in some remote sensing images with relatively single image features, the improvement of fusion effect of the proposed method is relatively limited, which reflects the limitations of multiscale feature image fusion. The experimental results of three kinds of satellite datasets show that the proposed method can perform better than the existing methods in the pan-sharpening of a wide range of satellite data, which proves the potential value of our network for different tasks. Next, we will take the loss function with the constraint of objective indexes as the starting point to further improve the network performance on the premise of ensuring the spectrum and space quality.