1. Introduction

While close-range geomatics techniques are useful for the survey and investigation of civil engineering constructions, such as buildings, bridges and water towers [1], satellite remote sensing is traditionally suited to studies of geographic areas, e.g., urban growth effects [2,3], glacier inventory [4,5], desertification [6,7], grassland monitoring [8,9], burned area detection [10,11], seismic damage assessment [12,13] and land deformation monitoring, such as landslides [14], land subsidence [15] and coastal changes [16]. However, a detailed investigation of the Earth’s surface and land cover can be performed using Very High Resolution (VHR) satellite images, characterised by a panchromatic (PAN) pixel size equal to or less than 1 m. Generally, VHR sensors carried on a satellite can also capture multispectral (MS) images, which have a lower resolution than PAN [17,18]. In fact, typical spectral imaging systems supply either multiband images of high spatial resolution with a small number of spectral bands or multiband images of high spectral resolution with a lower spatial resolution [19]. Since MS bands are required for many applications, it is desirable to increase the geometric resolution of MS images. This is possible through pan-sharpening, which combines the small pixel size of a PAN image with the radiometric information of MS images at a lower spatial resolution [18,20,21,22,23]. Pan-sharpening is usually applied to images from the same sensor but can also be used for data supplied by different sensors [24,25].
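As a concrete illustration of how pan-sharpening combines the two data types, the following NumPy sketch implements one classical method, the Brovey transform, in its common mean-intensity form: each MS band, resampled to the PAN grid, is multiplied by the ratio between PAN and an intensity image computed as the mean of the bands. The nearest-neighbour resampling, the integer scale factor of 4 (assumed here as the ratio between 2 m MS and 0.5 m PAN pixels of Pléiades products) and the function names are illustrative assumptions; this is not necessarily the variant used in this work.

```python
import numpy as np


def upsample_nearest(band, factor):
    """Nearest-neighbour resampling onto the PAN grid (integer factor assumed)."""
    return np.kron(band, np.ones((factor, factor)))


def brovey(ms_bands, pan, factor=4, eps=1e-12):
    """Brovey-type fusion: F_k = MS_k * PAN / I, with I the mean of the MS bands."""
    up = np.stack([upsample_nearest(np.asarray(b, dtype=float), factor) for b in ms_bands])
    intensity = up.mean(axis=0)
    # Guard against division by zero in dark pixels.
    ratio = np.asarray(pan, dtype=float) / np.maximum(intensity, eps)
    return up * ratio  # the ratio image is broadcast over the band axis


# Usage with two toy 2 x 2 MS bands and an 8 x 8 PAN image.
ms = [np.array([[10.0, 20.0], [30.0, 40.0]]),
      np.array([[30.0, 20.0], [10.0, 0.0]])]
pan = np.full((8, 8), 40.0)
fused = brovey(ms, pan)
```

Apart from the resampling, every step is a per-pixel operation, so the same computation can be expressed as a chain of raster-calculator expressions.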

In the framework of the multi-representation of geographical data [26], pan-sharpened images are the most detailed layer of information acquired from space. Their field of use is very large: at least three macro-areas can be distinguished, namely visualisation, classification and feature extraction.

The first includes the production of orthophotos: replacing the grey-level panchromatic image with a Red-Green-Blue (RGB) true-colour composition of the corresponding pan-sharpened multispectral bands gives the user a better view of the scene. In fact, most of the high-resolution imagery in Google Earth Maps is DigitalGlobe QuickBird imagery, pan-sharpened to roughly 65 cm (65 cm panchromatic at nadir, 2.62 m multispectral at nadir) [27].

Supervised classification algorithms applied to pan-sharpened images produce more detailed thematic maps than those obtained from the original images. However, pan-sharpened products cannot be considered equivalent to real sensor products: the procedure introduces distortions of the radiometric values, which affect the classification accuracy. Nevertheless, the benefit of the enhanced geometric resolution is generally greater than the loss of radiometric fidelity. Pan-sharpened images are very advantageous to support land cover classification [28], and they are often integrated with other data, e.g., SAR images [29], to perform a better investigation of the considered area, for instance to detect environmental hazards [30].

The injection of PAN image details into multispectral images enables the user to perform geospatial feature extraction, which has been the subject of extensive research in recent decades. In 2006, Mohammadzadeh et al. [31] proposed an approach based on fuzzy logic and mathematical morphology to extract main road centrelines from pan-sharpened IKONOS images: the results were encouraging, as the extracted road centrelines had an average error of 0.504 pixels and a root-mean-square error of 0.036 pixels. More recently (2020), Phinzi et al. [32] applied Machine Learning (ML) algorithms to a Système Pour l’Observation de la Terre (SPOT-7) image to extract gullies. They compared three commonly used ML algorithms, namely Linear Discriminant Analysis (LDA), Support Vector Machine (SVM) and Random Forest (RF); the pan-sharpened product from the SPOT-7 multispectral image successfully discriminated gullies, with an overall accuracy above 95%.

Several pan-sharpening methods are described in the literature, and the most frequently used ones are implemented in remote sensing software. Most of them consist of steps that can easily be executed with typical Map Algebra operations and raster processing tools available in GIS software. Map Algebra, a framework coined by Dana Tomlin [33], includes operations and functions that produce a new raster layer from one or more raster layers (“maps”) of similar dimensions. Depending on the spatial neighbourhood they consider, Map Algebra operations and functions are divided into four groups: local, focal, global and zonal. Local ones work on individual pixels; focal ones work on pixels and their neighbours; global ones work on the entire layer; zonal ones work on areas of pixels sharing the same value [34]. Map Algebra supports basic mathematical operations such as addition, subtraction, multiplication and division, as well as statistical operations such as minimum, maximum, average and median. Many GIS packages implement Map Algebra concepts: e.g., ESRI ArcGIS exposes them through Python, MapInfo through MapBasic [35], and GRASS GIS implements them in the C programming language. Finally, Map Algebra operators and functions are available as individual algorithms in GIS software, but they can also be combined into a procedure or script to perform complex tasks [34].
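The four groups can be illustrated on small NumPy arrays standing in for raster layers. The sketch below is hypothetical (function names, a 3 × 3 window, edge replication at the borders) and only shows which neighbourhood each group reads; it does not reproduce the behaviour of any specific GIS package.

```python
import numpy as np


def local_sum(a, b):
    """Local: each output cell depends only on the same cell of the inputs."""
    return a + b


def focal_mean(a, size=3):
    """Focal: mean over an odd size x size window centred on each cell (edges replicated)."""
    pad = size // 2
    padded = np.pad(np.asarray(a, dtype=float), pad, mode="edge")
    rows, cols = a.shape
    out = np.zeros((rows, cols))
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + rows, dx:dx + cols]
    return out / (size * size)


def global_max(a):
    """Global: one value computed from the whole layer, written to every cell."""
    return np.full(a.shape, a.max())


def zonal_mean(values, zones):
    """Zonal: mean of `values` within each zone, written back to the zone's cells."""
    _, inverse = np.unique(zones.ravel(), return_inverse=True)
    sums = np.bincount(inverse, weights=values.ravel().astype(float))
    counts = np.bincount(inverse)
    return (sums / counts)[inverse].reshape(values.shape)


layer = np.array([[1, 2], [3, 4]])
zones = np.array([[0, 0], [1, 1]])
```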

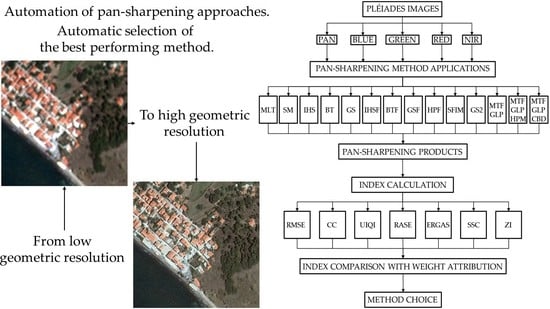

In this paper, we focus on the possibility of automating the pan-sharpening of VHR satellite images, e.g., Pléiades images, using the raster utilities available in Quantum GIS (QGIS) [36], a free and open-source GIS software. In particular, the graphical modeller, a simple and easy-to-use interface, is employed to combine different phases and algorithms into a single process, making pan-sharpening easier to apply. Experiments to test the performance of the automatic procedure are carried out on Pléiades imagery of Lesbos, a Greek island located in the north-eastern Aegean Sea. The remainder of this paper is organised as follows.

Section 2 describes the 14 pan-sharpening methods and the 7 quality indices chosen for this work: Correlation Coefficient (CC), Universal Image Quality Index (UIQI), Root-Mean-Square Error (RMSE), Relative Average Spectral Error (RASE), Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS), Spatial Correlation Coefficient (SCC) and Zhou Index (ZI).
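For reference, the five spectral indices can be sketched in NumPy using their widely used textbook forms. This is a minimal sketch under stated assumptions, not the exact implementation used in this work. The reference and fused bands are assumed to be co-registered arrays on the same grid. UIQI is computed once over the whole band rather than over sliding windows. The ERGAS ratio h/l defaults to 0.25, which assumes 0.5 m PAN and 2 m MS pixels for Pléiades.

```python
import numpy as np


def cc(ref, fused):
    """Pearson correlation coefficient between two bands."""
    return float(np.corrcoef(np.ravel(ref), np.ravel(fused))[0, 1])


def rmse(ref, fused):
    """Root-mean-square error between two bands."""
    diff = np.asarray(ref, dtype=float) - np.asarray(fused, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def uiqi(ref, fused):
    """Wang-Bovik universal image quality index, computed globally (no sliding window)."""
    x = np.asarray(ref, dtype=float).ravel()
    y = np.asarray(fused, dtype=float).ravel()
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    return float(4 * cov * mx * my / ((x.var() + y.var()) * (mx ** 2 + my ** 2)))


def rase(refs, fuseds):
    """RASE = 100 / M * sqrt(mean of RMSE_k^2), M = mean radiance of the reference bands."""
    m = np.mean([np.mean(r) for r in refs])
    mse = np.mean([rmse(r, f) ** 2 for r, f in zip(refs, fuseds)])
    return float(100.0 / m * np.sqrt(mse))


def ergas(refs, fuseds, ratio=0.25):
    """ERGAS = 100 * (h / l) * sqrt(mean of (RMSE_k / mu_k)^2); h / l = PAN / MS pixel size."""
    terms = [(rmse(r, f) / np.mean(r)) ** 2 for r, f in zip(refs, fuseds)]
    return float(100.0 * ratio * np.sqrt(np.mean(terms)))


ref = np.array([[1.0, 2.0], [3.0, 4.0]])
shifted = ref + 1.0  # a fused band with a constant radiometric bias
```

CC and UIQI equal 1 for a perfect match, whereas RMSE, RASE and ERGAS equal 0. These opposite directions must be taken into account when the indices are used to rank methods.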

Section 3 explains the experimental procedure: first, a very brief description of the main characteristics of the Pléiades images used for this study is supplied; then, the implementation of the fusion techniques in the QGIS graphical modeller is illustrated; finally, the procedure steps are explained.

Section 4 presents and discusses the results of automating the application of the pan-sharpening methods. It highlights how calculating and comparing quality indices supports the choice of the best fused products for the user’s purposes; in particular, a multi-criteria analysis based on assigning a weight to each quality index is proposed as a methodological tool.

Section 5 summarises the proposed approach and discusses its efficiency in light of the results obtained.

4. Results and Discussion

Since there are four initial multispectral images and 14 methods are implemented, our procedure generates 56 (4 × 14) pan-sharpened images. The products obtained with each method are quantitatively evaluated using the quality indices described in Section 2.2. The results of these metrics are shown in Tables 1–14, one for each method.

Table 15 compares all the methods; for an easier synoptic view, only the mean value of each index is reported.

The results highlight some significant aspects, as reported below.

Although the spectral indices do not all produce the same ranking, underlying trends are evident. For example, the correlation between each pan-sharpened image and the corresponding original one is high in many cases, testifying to the effectiveness of the analysed methods. The lowest values are usually obtained for the blue band, as a consequence of the limited overlap between the spectral response of the PAN sensor and that of the blue sensor (Figure 1). In addition, all spectral indices identify the same best performing method (MTF–GLP–CBD); however, they do not identify the same method as the weakest (e.g., BT for CC and MLT for ERGAS).

On the other hand, the spatial indices provide a ranking that differs in some respects from that given by the spectral indices. In fact, MTF–GLP–CBD, the best performing method in terms of spectral fidelity, supplies poor results in terms of spatial fidelity. As reported in other studies [44,93], improving the spatial quality of an image tends to degrade its spectral quality, and vice versa.
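The spatial indices compare the high-frequency content of the fused bands with that of PAN. Below is a minimal sketch of a spatial correlation coefficient in that spirit: both images are high-pass filtered with a 3 × 3 Laplacian kernel and then correlated. The kernel, the border handling and the global (rather than windowed) correlation are assumptions. The exact definitions of SCC and ZI used in this work are those of Section 2.2.

```python
import numpy as np

LAPLACIAN = np.array([[-1.0, -1.0, -1.0],
                      [-1.0, 8.0, -1.0],
                      [-1.0, -1.0, -1.0]])


def high_pass(img, kernel=LAPLACIAN):
    """3 x 3 focal filter with edge replication. The kernel is symmetric, so
    correlation and convolution coincide; it sums to zero, so constant offsets vanish."""
    img = np.asarray(img, dtype=float)
    padded = np.pad(img, 1, mode="edge")
    rows, cols = img.shape
    out = np.zeros((rows, cols))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + rows, dx:dx + cols]
    return out


def scc(pan, fused_band):
    """Correlation between the high-pass filtered PAN and the high-pass filtered fused band."""
    return float(np.corrcoef(high_pass(pan).ravel(), high_pass(fused_band).ravel())[0, 1])


rng = np.random.default_rng(0)
pan = rng.random((16, 16))
```

A fused band that differs from PAN only by a gain and an offset scores 1. Spectral distortions that preserve edges are therefore invisible to this index, which is why it complements the spectral indices rather than replacing them.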

In light of the above considerations, a compromise must be sought between spectral preservation and spatial enhancement to obtain good pan-sharpened products.

To better compare the results, we decided to assign a score to each method. As a first step, the methods are ranked according to each indicator and assigned a score from 1 to 14. The scores of the spectral indicators are then averaged, and so are those of the spatial indicators.

Figure 5 shows the results obtained by each method taking into account the mean spectral indicator and the mean spatial indicator.

As a last step, an overall ranking can be obtained by assigning weights to the Mean Spectral Indicator and the Mean Spatial Indicator. For example, the ranking obtained by using the same weight (0.5) for both indicators is shown in Table 16.
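The scoring scheme just described can be sketched as follows. The following assumptions are hypothetical:
- For each index, rank 1 is assigned to the best method, so lower scores are better.
- Ties keep the input order.
- SCC and ZI are treated as the spatial indices and the other five as spectral ones.
- The index values for the three methods A, B and C are invented, not taken from Tables 1–15.

```python
SPECTRAL = ["CC", "UIQI", "RMSE", "RASE", "ERGAS"]
SPATIAL = ["SCC", "ZI"]
HIGHER_IS_BETTER = {"CC": True, "UIQI": True, "RMSE": False, "RASE": False,
                    "ERGAS": False, "SCC": True, "ZI": True}


def ranks(values, higher_is_better):
    """Score 1 for the best method, 2 for the next, ...; ties keep input order."""
    ordered = sorted(values, key=values.get, reverse=higher_is_better)
    return {method: position + 1 for position, method in enumerate(ordered)}


def overall_ranking(table, w_spectral=0.5, w_spatial=0.5):
    """Average the per-index scores within each group, then combine them with weights."""
    methods = list(table)
    score = {idx: ranks({m: table[m][idx] for m in methods}, HIGHER_IS_BETTER[idx])
             for idx in SPECTRAL + SPATIAL}
    combined = {}
    for m in methods:
        mean_spectral = sum(score[idx][m] for idx in SPECTRAL) / len(SPECTRAL)
        mean_spatial = sum(score[idx][m] for idx in SPATIAL) / len(SPATIAL)
        combined[m] = w_spectral * mean_spectral + w_spatial * mean_spatial
    return sorted(combined.items(), key=lambda item: item[1])  # lowest score first


# Hypothetical index values (not results of this work).
TABLE = {
    "A": {"CC": 0.95, "UIQI": 0.94, "RMSE": 5.0, "RASE": 4.0, "ERGAS": 2.0, "SCC": 0.80, "ZI": 0.85},
    "B": {"CC": 0.90, "UIQI": 0.90, "RMSE": 7.0, "RASE": 6.0, "ERGAS": 3.0, "SCC": 0.95, "ZI": 0.96},
    "C": {"CC": 0.85, "UIQI": 0.88, "RMSE": 9.0, "RASE": 8.0, "ERGAS": 4.0, "SCC": 0.90, "ZI": 0.90},
}
```

With equal weights, method B, which is strongest spatially, comes first (score 1.5). Shifting the weights to 0.8 and 0.2 in favour of spectral fidelity moves method A to the top. This mirrors the spectral/spatial trade-off discussed above.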

To give an idea of the quality of the derived images,

Figure 6 shows the zoom of the RGB composition of the initial multispectral images as well as the zoom of the RGB composition of the multispectral pan-sharpened images obtained from each method.

Visual comparison of these results makes both the increase in geometric resolution and the reliability of the obtained colours evident. In some cases, e.g., for the images resulting from GSF, the colours are correctly preserved and the details injected from the panchromatic data are impressive. This confirms the high performance attributed to this method by our multi-criteria classification. In other cases, however, e.g., for the images derived from BTF or HPF, the fidelity to the original colour gradations is lower. Finally, the SFIM-derived images show a loss of spatial detail, which is not adequately injected into the multispectral products.

To verify the correctness of the algorithms implemented in the graphical modeller, the authors also executed all the automated GIS operations manually. In particular, for each method, the difference between each multispectral pan-sharpened image obtained automatically and the corresponding one obtained manually was calculated. The residuals were zero in every case: the two products coincide exactly. The proposed approach is therefore validated and can be used as a valuable tool to address a critical aspect of pan-sharpening. In fact, according to [44], there is no single method or processing chain for image fusion: to obtain the best results, a good understanding of the principles of fusion and, especially, good knowledge of the data characteristics are essential. Since each method can perform differently in different situations, it is preferable to apply several algorithms and compare the results. This approach is demanding and time-consuming, so the automation of pan-sharpening methods using basic GIS functions proposed in this work enables the user to achieve the best results quickly, easily and effectively.
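The check on automated versus manual products amounts to a per-pixel difference. Below is a minimal NumPy sketch; the function name is hypothetical, and both products are assumed to be loaded as arrays on the same grid.

```python
import numpy as np


def max_residual(automated, manual):
    """Largest absolute per-pixel difference between two products on the same grid."""
    automated = np.asarray(automated, dtype=float)
    manual = np.asarray(manual, dtype=float)
    if automated.shape != manual.shape:
        raise ValueError("products must have the same shape")
    return float(np.max(np.abs(automated - manual)))


band = np.arange(12.0).reshape(3, 4)
```

A result of exactly 0.0 for every band corresponds to the "perfectly coincident" outcome reported above.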

5. Conclusions

This work analyses the possibility of applying pan-sharpening methods to Pléiades images by means of basic GIS functions, without using dedicated pan-sharpening tools. The free and open-source GIS software QGIS was chosen for all the applications. In total, 14 methods were automatically applied and compared using quality indices and multi-criteria analysis.

The results demonstrate that transferring the higher geometric resolution of panchromatic data to multispectral data does not require dedicated tools, because it can be implemented using filters and Map Algebra functions. QGIS supplies the Raster calculator, a simple but powerful tool that supports operations fundamental to applying pan-sharpening methods, e.g., direct and reverse transformations between RGB and IHS space, synthetic image production and covariance estimation. This tool is also useful to calculate indices for a quantitative evaluation of the quality of the resulting pan-sharpened images. The whole process can be automated with the graphical modeller, which reduces the user’s task to selecting the input data.
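The direct and reverse IHS transformations mentioned above are linear, so they reduce to per-pixel matrix products that can be evaluated band by band. The sketch below assumes one common linear IHS model; the matrix is an assumption, not taken from this work. It replaces the intensity with PAN, applies the inverse transformation, and checks the result against the well-known additive shortcut F_k = MS_k + (PAN - I).

```python
import numpy as np

# One common linear IHS model (assumed here for illustration).
S2 = np.sqrt(2.0)
IHS_FORWARD = np.array([[1 / 3, 1 / 3, 1 / 3],
                        [-S2 / 6, -S2 / 6, 2 * S2 / 6],
                        [1 / S2, -1 / S2, 0.0]])
IHS_INVERSE = np.linalg.inv(IHS_FORWARD)


def ihs_fusion(rgb_up, pan):
    """Forward IHS, substitute I with PAN, inverse IHS. rgb_up: (3, rows, cols) on the PAN grid."""
    pixels = rgb_up.reshape(3, -1).astype(float)   # 3 x N matrix of pixel vectors
    ihs = IHS_FORWARD @ pixels                       # direct transformation
    ihs[0] = np.asarray(pan, dtype=float).ravel()    # intensity replaced by PAN
    return (IHS_INVERSE @ ihs).reshape(rgb_up.shape) # reverse transformation


def fast_ihs(rgb_up, pan):
    """Additive shortcut: F_k = MS_k + (PAN - I), with I the mean of the three bands."""
    rgb_up = rgb_up.astype(float)
    return rgb_up + (np.asarray(pan, dtype=float) - rgb_up.mean(axis=0))


rng = np.random.default_rng(1)
rgb = rng.random((3, 5, 5))
pan = rng.random((5, 5))
```

The two functions agree because the first column of the inverse matrix is (1, 1, 1). Substituting PAN for I therefore adds the same detail image PAN - I to every band, which is why the shortcut needs only raster-calculator arithmetic.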

Since the best performing method cannot be determined in absolute terms but depends on the characteristics of the scene, several algorithms must be compared each time to select the one that provides suitable results for a given purpose. When the quality indices are evaluated by means of multi-criteria analysis, weights can be introduced according to the user’s needs, e.g., prioritising spatial requirements over spectral ones or vice versa. In other words, our proposal aids the user not only by executing the pan-sharpening methods automatically but also by supporting the choice of the best fused products. For this reason, both the calculation of quality indices and the comparison of their values are necessary.

Future developments of this work will focus on integrating other pan-sharpening methods to increase the number of options available to the user. In addition, we will mainly focus on facilitating the choice of the best performing method, also by supplying other results automatically, e.g., feature extraction in sample areas, to be compared with objects of known shape for accuracy estimation.