MBES Seabed Sediment Classification Based on a Decision Fusion Method Using Deep Learning Model

Abstract

1. Introduction

- (1) Limited field samples and the inevitable noise in acoustic images are obstacles to high-accuracy seabed sediment classification. Can we find a classification framework that performs well with small samples?

- (2) Although deep learning has proven effective for seabed sediment classification, it may erase small but useful features and cause misclassification. In fact, any classifier, regardless of its architecture, has a limited ability to mine effective features, and its predictions carry uncertainty. Can we design an architecture that takes advantage of the complementarity between deep and shallow learning classifiers?

- (1)

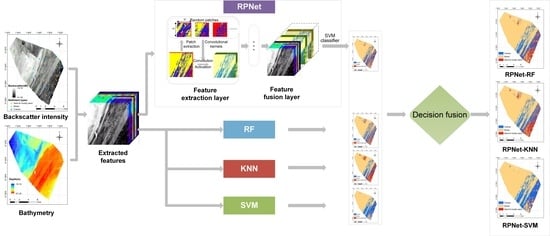

- After feature extraction, we employ the RPNet algorithm for seabed sediment classification, which only needs a small number of samples during the training stage. The results are compared with several traditional machine learning methods (random forest, K-nearest neighbor, support vector machine and deep belief network) to verify the efficiency and effectiveness of RPNet. This algorithm may be a promising way to reduce the impact of few samples and noise on classification accuracy.

- (2)

- In order to take advantage of the complementarity between RPNet and other shallow architectures to alleviate the problem of over-smoothness and misclassification, we propose a deep and shallow learning decision fusion model based on voting strategies and fuzzy membership rules, which combines the seabed sediment classification results of RPNet and several traditional shallow learning classifiers. Then, a benchmark comparison is provided by the single classifier to evaluate the performance of our proposed decision fusion strategy.
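
The idea of combining classifier outputs by voting, with membership degrees used to settle disagreements, can be sketched as follows. This is only an illustrative sketch, not the authors' fusion rule: the function name `fuse_decisions`, the strict-majority threshold and the summed-membership tie-break are all assumptions made for the example.

```python
import numpy as np


def fuse_decisions(labels, memberships):
    """Fuse per-classifier predictions sample by sample (hypothetical rule).

    labels:      (n_classifiers, n_samples) integer class labels
    memberships: (n_classifiers, n_samples, n_classes) membership degrees
                 in [0, 1], e.g. predicted class probabilities
    """
    labels = np.asarray(labels)
    memberships = np.asarray(memberships, dtype=float)
    n_clf, n_samples, n_classes = memberships.shape

    # Voting step: count the votes each class receives for every sample.
    votes = np.zeros((n_samples, n_classes), dtype=int)
    for clf_labels in labels:
        votes[np.arange(n_samples), clf_labels] += 1

    fused = votes.argmax(axis=1)
    # Assumption: a vote only counts as decisive with a strict majority.
    has_majority = votes.max(axis=1) > n_clf / 2

    # Membership step: without a majority, pick the class whose summed
    # membership degree over all classifiers is largest.
    summed = memberships.sum(axis=0)  # shape (n_samples, n_classes)
    fused[~has_majority] = summed[~has_majority].argmax(axis=1)
    return fused


# Three classifiers, three samples, three sediment classes (toy values).
example_labels = [[0, 1, 0],
                  [0, 2, 1],
                  [1, 2, 2]]
example_memberships = [
    [[0.8, 0.1, 0.1], [0.2, 0.6, 0.2], [0.5, 0.3, 0.2]],
    [[0.7, 0.2, 0.1], [0.1, 0.2, 0.7], [0.05, 0.9, 0.05]],
    [[0.3, 0.6, 0.1], [0.1, 0.1, 0.8], [0.2, 0.2, 0.6]],
]
print(fuse_decisions(example_labels, example_memberships))  # [0 2 1]
```

In the toy data the first two samples are decided by majority vote, while the third, on which all three classifiers disagree, falls back to the membership sums.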

2. Study Sites and Experimental Data

2.1. Study Sites

2.2. Experimental Data

3. Methods

3.1. Feature Extraction

3.2. RPNet Framework

3.2.1. Input Layer

3.2.2. Feature Extraction Layer

3.2.3. Feature Fusion Layer and SVM Classifier

3.3. Decision Fusion Method Based on Multi Classifiers

4. Experiments and Results

4.1. Parameter Setting of RPNet

4.2. Classification Results of RPNet

4.3. Decision Fusion Results

5. Discussion

5.1. Effect of Sample Size on Classification Performance

5.2. Distribution of Topographic Features for Different Sediment Types

5.3. Other Considerations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Halpern, B.S.; Frazier, M.; Potapenko, J.; Casey, K.S.; Koenig, K.; Longo, C.; Lowndes, J.S.; Rockwood, R.C.; Selig, E.R.; Selkoe, K.A.; et al. Spatial and temporal changes in cumulative human impacts on the world’s ocean. Nat. Commun. 2015, 6, 7615.

- Madricardo, F.; Foglini, F.; Campiani, E.; Grande, V.; Catenacci, E.; Petrizzo, A.; Kruss, A.; Toso, C.; Trincardi, F. Assessing the human footprint on the sea-floor of coastal systems: The case of the Venice Lagoon, Italy. Sci. Rep. 2019, 9, 6615.

- Kostylev, V.E.; Todd, B.J.; Fader, G.; Courtney, R.C.; Cameron, G.; Pickrill, R.A. Benthic habitat mapping on the Scotian Shelf based on multibeam bathymetry, surficial geology and sea floor photographs. Mar. Ecol. Prog. Ser. 2001, 219, 121–137.

- Zhi, H.; Siwabessy, J.; Nichol, S.L.; Brooke, B.P. Predictive mapping of seabed substrata using high-resolution multibeam sonar data: A case study from a shelf with complex geomorphology. Mar. Geol. 2014, 357, 37–52.

- Ward, S.L.; Neill, S.P.; Van Landeghem, K.J.J.; Scourse, J.D. Classifying seabed sediment type using simulated tidal-induced bed shear stress. Mar. Geol. 2015, 367, 94–104.

- Diesing, M.; Mitchell, P.J.; O’Keeffe, E.; Gavazzi, G.O.A.M.; Bas, T.L. Limitations of Predicting Substrate Classes on a Sedimentary Complex but Morphologically Simple Seabed. Remote Sens. 2020, 12, 3398.

- Zelada Leon, A.; Huvenne, V.A.I.; Benoist, N.M.A.; Ferguson, M.; Bett, B.J.; Wynn, R.B. Assessing the Repeatability of Automated Seafloor Classification Algorithms, with Application in Marine Protected Area Monitoring. Remote Sens. 2020, 12, 1572.

- Strong, J.A.; Clements, A.; Lillis, H.; Galparsoro, I.; Bildstein, T.; Pesch, R. A review of the influence of marine habitat classification schemes on mapping studies: Inherent assumptions, influence on end products, and suggestions for future developments. ICES J. Mar. Sci. 2019, 76, 10–22.

- Cui, X.; Yang, F.; Wang, X.; Ai, B.; Luo, Y.; Ma, D. Deep learning model for seabed sediment classification based on fuzzy ranking feature optimization. Mar. Geol. 2021, 432, 106390.

- Kruss, A.; Madricardo, F.; Sigovini, M.; Ferrarin, C.; Gavazzi, G.M. Assessment of submerged aquatic vegetation abundance using multibeam sonar in very shallow and dynamic environment. In Proceedings of the 2015 IEEE/OES Acoustics in Underwater Geosciences Symposium (RIO Acoustics), Rio de Janeiro, Brazil, 29–31 July 2015; pp. 1–7.

- Khomsin; Mukhtasor; Pratomo, D.G.; Suntoyo. The Development of Seabed Sediment Mapping Methods: The Opportunity Application in the Coastal Waters. IOP Conf. Ser. Earth Environ. Sci. 2021, 731, 012039.

- Manik, H.M.; Nishimori, Y.; Nishiyama, Y.; Hazama, T.; Kasai, A.; Firdaus, R.; Elson, L.; Yaodi, A. Developing signal processing of echo sounder for measuring acoustic backscatter. In Proceedings of the 3rd International Conference on Marine Science (ICMS), Bogor City, Indonesia, 4 September 2019.

- Luo, X.; Qin, X.; Wu, Z.; Yang, F.; Wang, M.; Shang, J. Sediment Classification of Small-Size Seabed Acoustic Images Using Convolutional Neural Networks. IEEE Access 2019, 7, 98331–98339.

- Snellen, M.; Gaida, T.C.; Koop, L.; Alevizos, E.; Simons, D.G. Performance of Multibeam Echosounder Backscatter-Based Classification for Monitoring Sediment Distributions Using Multitemporal Large-Scale Ocean Data Sets. IEEE J. Ocean. Eng. 2019, 44, 142–155.

- Montereale Gavazzi, G.; Kapasakali, D.A.; Kerchof, F.; Deleu, S.; Degraer, S.; Van Lancker, V. Subtidal Natural Hard Substrate Quantitative Habitat Mapping: Interlinking Underwater Acoustics and Optical Imagery with Machine Learning. Remote Sens. 2021, 13, 4608.

- Innangi, S.; Barra, M.; Martino, G.D.; Parnum, I.M.; Tonielli, R.; Mazzola, S. Reson SeaBat 8125 backscatter data as a tool for seabed characterization (Central Mediterranean, Southern Italy): Results from different processing approaches. Appl. Acoust. 2015, 87, 109–122.

- Brown, C.J.; Blondel, P. Developments in the application of multibeam sonar backscatter for seafloor habitat mapping. Appl. Acoust. 2009, 70, 1242–1247.

- Ji, X.; Yang, B.; Tang, Q. Seabed sediment classification using multibeam backscatter data based on the selecting optimal random forest model. Appl. Acoust. 2020, 167, 107387.

- Gaida, T.C.; Mohammadloo, T.H.; Snellen, M.; Simons, D.G. Mapping the Seabed and Shallow Subsurface with Multi-Frequency Multibeam Echosounders. Remote Sens. 2020, 12, 52.

- Lucieer, V.; Hill, N.A.; Barrett, N.S.; Nichol, S. Do marine substrates ‘look’ and ‘sound’ the same? Supervised classification of multibeam acoustic data using autonomous underwater vehicle images. Estuar. Coast. Shelf Sci. 2013, 117, 94–106.

- Ji, X.; Yang, B.; Tang, Q. Acoustic Seabed Classification Based on Multibeam Echosounder Backscatter Data Using the PSO-BP-AdaBoost Algorithm: A Case Study From Jiaozhou Bay, China. IEEE J. Ocean. Eng. 2021, 46, 509–519.

- Pillay, T.; Cawthra, H.C.; Lombard, A.T. Characterisation of seafloor substrate using advanced processing of multibeam bathymetry, backscatter, and sidescan sonar in Table Bay, South Africa. Mar. Geol. 2020, 429, 106332.

- Hasan, R.C.; Ierodiaconou, D.; Laurenson, L. Combining angular response classification and backscatter imagery segmentation for benthic biological habitat mapping. Estuar. Coast. Shelf Sci. 2012, 97, 1–9.

- Ahmed, K.I.; Demšar, U. Improving seabed classification from Multi-Beam Echo Sounder (MBES) backscatter data with visual data mining. J. Coast. Conserv. 2013, 17, 559–577.

- Diesing, M.; Mitchell, P.; Stephens, D. Image-based seabed classification: What can we learn from terrestrial remote sensing? ICES J. Mar. Sci. 2016, 73, 2425–2441.

- Samsudin, S.A.; Hasan, R.C. Assessment of Multibeam Backscatter Texture Analysis for Seafloor Sediment Classification. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2017, XLII-4/W5, 177–183.

- Pillay, T.; Cawthra, H.C.; Lombard, A.T.; Sink, K. Benthic habitat mapping from a machine learning perspective on the Cape St Francis inner shelf, Eastern Cape, South Africa. Mar. Geol. 2021, 440, 106595.

- Zhang, C.; Pan, X.; Li, H.; Gardiner, A.; Sargent, I.; Hare, J.; Atkinson, P.M. A hybrid MLP-CNN classifier for very fine resolution remotely sensed image classification. ISPRS J. Photogramm. Remote Sens. 2018, 140, 133–144.

- Zhu, Z.; Cui, X.; Zhang, K.; Ai, B.; Shi, B.; Yang, F. DNN-based seabed classification using differently weighted MBES multifeatures. Mar. Geol. 2021, 438, 106519.

- Chen, Y.S.; Lin, Z.H.; Zhao, X.; Wang, G.; Gu, Y.F. Deep Learning-Based Classification of Hyperspectral Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2094–2107.

- Chen, Y.S.; Zhao, X.; Jia, X.P. Spectral-Spatial Classification of Hyperspectral Data Based on Deep Belief Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2381–2392.

- Xu, Y.; Du, B.; Zhang, F.; Zhang, L. Hyperspectral image classification via a random patches network. ISPRS J. Photogramm. Remote Sens. 2018, 142, 344–357.

- Arriaga, R.I.; Vempala, S. An algorithmic theory of learning: Robust concepts and random projection. Mach. Learn. 2006, 63, 161–182.

- Zhao, W.Z.; Du, S.H. Learning multiscale and deep representations for classifying remotely sensed imagery. ISPRS J. Photogramm. Remote Sens. 2016, 113, 155–165.

- Preston, J. Automated acoustic seabed classification of multibeam images of Stanton Banks. Appl. Acoust. 2009, 70, 1277–1287.

- Li, W.; Prasad, S.; Fowler, J.E. Decision Fusion in Kernel-Induced Spaces for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2014, 52, 3399–3411.

- Hu, Y.B.; Zhang, J.; Ma, Y.; An, J.B.; Ren, G.B.; Li, X.M. Hyperspectral Coastal Wetland Classification Based on a Multiobject Convolutional Neural Network Model and Decision Fusion. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1110–1114.

- Southern North Sea MPA. Available online: http://jncc.defra.gov.uk/page-7243 (accessed on 23 February 2019).

- Robinson, K.A.; Karen, A. Seabed Habitats of the Southern Irish Sea. In Seafloor Geomorphology as Benthic Habitat, 2nd ed.; Peter, T.H., Elaine, K.B., Eds.; Elsevier: Amsterdam, The Netherlands, 2012; Volume 3, pp. 523–537.

- Robinson, K.A.; Darbyshire, T.; Landeghem, K.V.; Lindenbaum, C.; O’Beirn, F. Habitat Mapping for Conservation and Management of the Southern Irish Sea (HABMAP): I: Seabed Surveys, 3rd ed.; National Museum Wales: Cardiff, Wales, 2009.

- Pearce, B.; Tappin, D.R.; Dove, D.; Pinnion, J. Benthos supported by the tunnel-valleys of the southern North Sea. In Seafloor Geomorphology as Benthic Habitat, 2nd ed.; Peter, T.H., Elaine, K.B., Eds.; Elsevier: Amsterdam, The Netherlands, 2012; Volume 3, pp. 597–612.

- Holler, P.; Markert, E.; Bartholomä, A.; Capperucci, R.; Hass, H.C.; Kröncke, I.; Mielck, F.; Reimers, H.C. Tools to evaluate seafloor integrity: Comparison of multi-device acoustic seafloor classifications for benthic macrofauna-driven patterns in the German Bight, southern North Sea. Geo-Mar. Lett. 2017, 37, 93–109.

- Tappin, D.R.; Chadwick, R.A.; Jackson, A.A.; Wingfield, R.; Smith, N. The Geology of Cardigan Bay and the Bristol Channel; British Geological Survey, UK Offshore Regional Report; HM Stationery Office: London, UK, 1994.

- Mackie, A.; Rees, E.; Wilson, J.G. The south-west Irish Sea survey (SWISS) of benthic biodiversity. In Marine Biodiversity in Ireland and Adjacent Waters, Proceedings of the Conference, Belfast, Northern Ireland, April 2001; MAGNI Publication 8; Ulster Museum: Belfast, UK; pp. 26–27.

- Long, D. BGS Detailed Explanation of Seabed Sediment Modified Folk Classification; British Geological Survey: Nottingham, UK, 2006.

- Connor, D.W.; Gilliland, P.M.; Golding, N.; Robinson, P.; Todd, D.; Verling, E. UKSeaMap: The Mapping of Seabed and Water Column Features of UK Seas, 3rd ed.; Joint Nature Conservation Committee: Peterborough, UK, 2006.

- Folk, R.L.; Andrews, P.B.; Lewis, D.W. Detrital sedimentary rock classification and nomenclature for use in New Zealand. N. Z. J. Geol. Geophys. 1970, 13, 937–968.

- Lacharité, M.; Brown, C.J.; Gazzola, V. Multisource multibeam backscatter data: Developing a strategy for the production of benthic habitat maps using semi-automated seafloor classification methods. Mar. Geophys. Res. 2018, 39, 307–322.

- Zhang, K.; Li, Q.; Zhu, H.; Yang, F.; Wu, Z. Acoustic Deep-Sea Seafloor Characterization Accounting for Heterogeneity Effect. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3034–3042.

- Cui, X.; Liu, H.; Fan, M.; Ai, B.; Ma, D.; Yang, F. Seafloor habitat mapping using multibeam bathymetric and backscatter intensity multi-features SVM classification framework. Appl. Acoust. 2021, 174, 107728.

- Qiu, B.W.; Fan, Z.L.; Zhong, M.; Tang, Z.H.; Chen, C.C. A new approach for crop identification with wavelet variance and JM distance. Environ. Monit. Assess. 2014, 186, 7929–7940.

- Hasan, R.; Ierodiaconou, D.; Monk, J. Evaluation of Four Supervised Learning Methods for Benthic Habitat Mapping Using Backscatter from Multi-Beam Sonar. Remote Sens. 2012, 4, 3427–3443.

- Diesing, M.; Stephens, D. A multi-model ensemble approach to seabed mapping. J. Sea Res. 2015, 100, 62–69.

- Eleftherakis, D.; Amiri-Simkooei, A.; Snellen, M.; Simons, D.G. Improving riverbed sediment classification using backscatter and depth residual features of multi-beam echo-sounder systems. J. Acoust. Soc. Am. 2012, 131, 3710–3725.

- Moustier, C.D.; Matsumoto, H. Seafloor acoustic remote sensing with multibeam echo-sounders and bathymetric sidescan sonar systems. Mar. Geophys. Res. 1993, 15, 27–42.

- Ismail, K.; Huvenne, V.A.I.; Masson, D.G. Objective automated classification technique for marine landscape mapping in submarine canyons. Mar. Geol. 2015, 362, 17–32.

- Stephens, D.; Diesing, M. A Comparison of Supervised Classification Methods for the Prediction of Substrate Type Using Multibeam Acoustic and Legacy Grain-Size Data. PLoS ONE 2014, 9.

- Wilson, M.F.J.; O’Connell, B.; Brown, C.; Guinan, J.C.; Grehan, A.J. Multiscale Terrain Analysis of Multibeam Bathymetry Data for Habitat Mapping on the Continental Slope. Mar. Geod. 2007, 30, 3–35.

- Caywood, M.S.; Willmore, B.; Tolhurst, D.J. Independent components of color natural scenes resemble V1 neurons in their spatial and color tuning. J. Neurophysiol. 2004, 91, 2859–2873.

- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 270–279.

- Cohen, J.A. A Coefficient of Agreement for Nominal Scales. Educ. Psychol. Meas. 1960, 20, 37–46.

- Chinchor, N.; Sundheim, B. MUC-5 evaluation metrics. In Proceedings of the 5th Conference on Message Understanding, Baltimore, MD, USA, 25–27 August 1993; pp. 69–78.

- Wang, F.; Yu, J.; Liu, Z.; Kong, M.; Wu, Y. Study on offshore seabed sediment classification based on particle size parameters using XGBoost algorithm. Comput. Geosci. 2021, 149, 104713.

- Lark, R.M.; Marchant, B.P.; Dove, D.; Green, S.L.; Stewart, H.; Diesing, M. Combining observations with acoustic swath bathymetry and backscatter to map seabed sediment texture classes: The empirical best linear unbiased predictor. Sediment. Geol. 2015, 328, 17–32.

- Ierodiaconou, D.; Schimel, A.; Kennedy, D.; Monk, J.; Gaylard, G.; Young, M.; Diesing, M.; Rattray, A. Combining pixel and object based image analysis of ultra-high resolution multibeam bathymetry and backscatter for habitat mapping in shallow marine waters. Mar. Geophys. Res. 2018, 39, 271–288.

| Study Sites | Class Name | Training | Test |
|---|---|---|---|
| S1 | Sand and muddy sand | 103 | 41 |
| | Mixed sediments | 370 | 170 |
| | Coarse sediments | 157 | 59 |
| | Total | 630 | 270 |
| S2 | Sand and muddy sand | 279 | 117 |
| | Mixed sediments | 219 | 105 |
| | Coarse sediments | 510 | 210 |
| | Total | 1008 | 432 |

| Data | Variable Description | Layers |
|---|---|---|
| Backscatter intensity | A function of the absorption and scattering of water and seabed interface, the angle of incidence and the seafloor topography [55]. | Backscatter (1,2) * |
| Texture | Grayscale distribution of pixels and surrounding neighborhoods based on gray level co-occurrence matrix. | Mean (1,2); correlation (2) |
| Bathymetry | Depth (negative elevation) of the grid. | Bathymetry (1,2) |
| Mean depth | The mean of all cell values in the focal neighborhood of water depth value. | Mean depth (1) |
| Aspect | The downslope direction of the maximum rate of change in value from each cell to its neighbors. Description of the orientation of slope. | Aspect (1,2) |
| Slope | The maximum rate of change in depth between each cell and its analysis neighborhood (degrees from horizontal) [56]. | Slope (1,2) |
| Curvature | Seabed curvature defined as the derivative of the rate of change in the seabed. | Maximum curvature (1); minimum curvature (1) |
| BPI | The vertical difference between a cell and the mean of the local neighborhood. Broad BPI and fine BPI were calculated by 25/250 m and 3/25 m radii, respectively [57]. | Broad BPI (2); fine BPI (2) |
| Roughness | The difference between the minimum and maximum bathymetry of a cell and its 8 neighbors [58]. | Roughness (2) |
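
The roughness and BPI definitions in the table above translate fairly directly into array operations. The following is a minimal sketch under stated assumptions, not the processing chain used in the study: the helper names `_windows`, `roughness` and `bpi` are hypothetical, radii are given in grid cells rather than metres, the BPI neighborhood is taken as an annulus between an inner and an outer radius, and grid edges are handled by edge padding.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _windows(grid, radius):
    """All (2r+1) x (2r+1) neighborhoods of an edge-padded grid."""
    padded = np.pad(grid, radius, mode="edge")
    size = 2 * radius + 1
    return sliding_window_view(padded, (size, size))


def roughness(bathy):
    """Max minus min bathymetry over each cell and its 8 neighbors."""
    w = _windows(np.asarray(bathy, dtype=float), 1)
    return w.max(axis=(-2, -1)) - w.min(axis=(-2, -1))


def bpi(bathy, inner, outer):
    """Cell value minus the mean of an annular neighborhood.

    inner/outer are radii in grid cells (an assumption of this sketch).
    """
    bathy = np.asarray(bathy, dtype=float)
    yy, xx = np.mgrid[-outer:outer + 1, -outer:outer + 1]
    dist = np.hypot(yy, xx)
    ring = (dist >= inner) & (dist <= outer)  # annulus mask
    w = _windows(bathy, outer)
    return bathy - w[..., ring].mean(axis=-1)


# Toy grid: flat seabed at -10 m with a single 2 m high bump.
depth = np.full((5, 5), -10.0)
depth[2, 2] = -8.0
print(roughness(depth)[2, 2])   # 2.0
print(bpi(depth, 1, 2)[2, 2])   # 2.0
```

A positive BPI marks a cell that stands above its surroundings (the bump), while cells next to the bump get slightly negative values because the bump raises their neighborhood mean.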

| Ground Truth | Predicted: Sand and Muddy Sand | Predicted: Mixed Sediments | Predicted: Coarse Sediments | PA (%) | OA (%) | Kappa Coefficient | F1 Score |
|---|---|---|---|---|---|---|---|
| Sand and muddy sand | 38 | 0 | 3 | 92.68 | 94.07 | 0.890 | 0.941 |
| Mixed sediments | 2 | 164 | 4 | 96.47 | | | |
| Coarse sediments | 3 | 4 | 52 | 88.13 | | | |
| UA (%) | 88.37 | 97.62 | 88.14 | -- | | | |

| Ground Truth | Predicted: Sand and Muddy Sand | Predicted: Mixed Sediments | Predicted: Coarse Sediments | PA (%) | OA (%) | Kappa Coefficient | F1 Score |
|---|---|---|---|---|---|---|---|
| Sand and muddy sand | 115 | 0 | 2 | 98.29 | 94.91 | 0.919 | 0.949 |
| Mixed sediments | 1 | 96 | 8 | 91.43 | | | |
| Coarse sediments | 0 | 11 | 199 | 94.76 | | | |
| UA (%) | 99.14 | 89.72 | 95.22 | -- | | | |
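
The producer's accuracy (PA), user's accuracy (UA), overall accuracy (OA) and kappa coefficient in the two confusion matrices above follow from the cell counts alone. The sketch below recomputes them with the standard formulas; the function name `accuracy_metrics` is an assumption for illustration, and the F1 score is left out because the averaging scheme behind the reported value is not stated here.

```python
import numpy as np


def accuracy_metrics(cm):
    """PA, UA, OA and Cohen's kappa from a confusion matrix.

    Rows are ground-truth classes, columns are predicted classes.
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    diag = np.diag(cm)
    row_sums = cm.sum(axis=1)  # samples per ground-truth class
    col_sums = cm.sum(axis=0)  # samples per predicted class

    pa = diag / row_sums                 # producer's accuracy
    ua = diag / col_sums                 # user's accuracy
    oa = diag.sum() / total              # overall accuracy
    pe = (row_sums * col_sums).sum() / total**2  # chance agreement
    kappa = (oa - pe) / (1.0 - pe)
    return pa, ua, oa, kappa


# Cell counts copied from the two confusion matrices above.
first_matrix = [[38, 0, 3], [2, 164, 4], [3, 4, 52]]
second_matrix = [[115, 0, 2], [1, 96, 8], [0, 11, 199]]

for cm in (first_matrix, second_matrix):
    pa, ua, oa, kappa = accuracy_metrics(cm)
    print(np.round(pa * 100, 2), np.round(ua * 100, 2),
          round(oa * 100, 2), round(kappa, 3))
```

The recomputed values agree with the tabulated ones, except that 52/59 rounds to 88.14% where the first matrix lists a PA of 88.13% for coarse sediments, which looks like truncation rather than rounding.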

| | | RPNet | RF | RPNet-RF | Variation | KNN | RPNet-KNN | Variation | SVM | RPNet-SVM | Variation |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Producer’s accuracy | Class1 * | 92.68 | 65.85 | 97.56 | 31.71 | 70.37 | 95.12 | 24.75 | 12.20 | 12.20 | 0.00 |
| | Class2 | 96.47 | 91.18 | 98.82 | 7.64 | 88.24 | 98.23 | 9.99 | 87.06 | 97.06 | 10.00 |
| | Class3 | 88.13 | 83.05 | 91.53 | 8.48 | 79.66 | 88.14 | 8.48 | 77.97 | 79.67 | 1.70 |
| Overall accuracy | OA (%) | 94.07 | 85.56 | 97.04 | 11.48 | 83.70 | 95.56 | 11.86 | 73.70 | 80.37 | 6.67 |
| | Kappa | 0.890 | 0.727 | 0.944 | 0.217 | 0.701 | 0.916 | 0.215 | 0.498 | 0.606 | 0.108 |
| | F1 score | 0.941 | 0.854 | 0.970 | 0.116 | 0.840 | 0.955 | 0.115 | 0.712 | 0.765 | 0.053 |

| | | RPNet | RF | RPNet-RF | Variation | KNN | RPNet-KNN | Variation | SVM | RPNet-SVM | Variation |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Producer’s accuracy | Class1 * | 98.29 | 93.16 | 96.58 | 3.42 | 84.62 | 90.60 | 5.98 | 41.88 | 44.44 | 2.56 |
| | Class2 | 91.43 | 86.67 | 89.52 | 2.85 | 80.00 | 87.62 | 7.62 | 30.48 | 31.43 | 0.95 |
| | Class3 | 94.76 | 90.95 | 99.05 | 8.10 | 92.38 | 99.05 | 6.67 | 99.52 | 100.00 | 0.48 |
| Overall accuracy | OA (%) | 94.91 | 90.51 | 96.06 | 5.55 | 87.26 | 93.98 | 6.72 | 67.13 | 68.29 | 1.16 |
| | Kappa | 0.919 | 0.849 | 0.937 | 0.088 | 0.794 | 0.903 | 0.109 | 0.410 | 0.432 | 0.022 |
| | F1 score | 0.949 | 0.905 | 0.960 | 0.055 | 0.872 | 0.940 | 0.068 | 0.635 | 0.649 | 0.014 |
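
The Variation columns appear to be the fused result minus the result of the corresponding single shallow classifier. As a quick worked check under that reading, the snippet below recomputes the OA variation from the overall-accuracy row of the table directly above; the dictionary `oa_pairs` and the function `variation` are illustrative names only.

```python
# (single classifier OA, fused OA) in percent, copied from the OA row above.
oa_pairs = {
    "RF": (90.51, 96.06),
    "KNN": (87.26, 93.98),
    "SVM": (67.13, 68.29),
}


def variation(single, fused):
    """Gain of the fused classifier over the single one, in percentage points."""
    return round(fused - single, 2)


oa_variation = {name: variation(*pair) for name, pair in oa_pairs.items()}
print(oa_variation)  # {'RF': 5.55, 'KNN': 6.72, 'SVM': 1.16}
```

Under this reading, fusion with RPNet lifts every shallow classifier, with the smallest gain for the SVM.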

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wan, J.; Qin, Z.; Cui, X.; Yang, F.; Yasir, M.; Ma, B.; Liu, X. MBES Seabed Sediment Classification Based on a Decision Fusion Method Using Deep Learning Model. Remote Sens. 2022, 14, 3708. https://0-doi-org.brum.beds.ac.uk/10.3390/rs14153708
