Self-Supervised Encoders Are Better Transfer Learners in Remote Sensing Applications

Abstract

1. Introduction

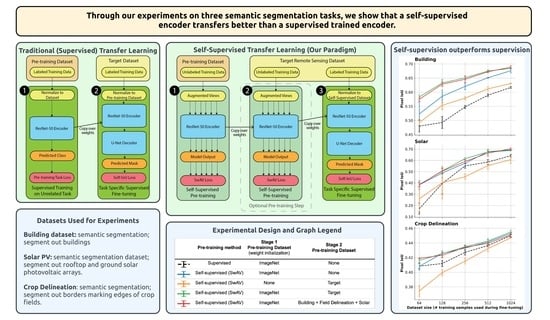

- We further demonstrate that the model pre-trained through self-supervision on ImageNet outperforms the model pre-trained using the supervised ImageNet classification task on three distinct remote sensing tasks, suggesting that practitioners should apply transfer learning using self-supervised models as a standard practice.

- We evaluate the performance improvement of a model pre-trained using SwAV on ImageNet, along with the extra improvement possible through further pre-training, with different amounts of labeled training data available for fine-tuning. We found that the self-supervised encoder is most beneficial in data-limited scenarios; as more labeled data become available for the target task, the performance gap between encoders decreases.

- We show that, when using the SwAV model with pre-trained weights from ImageNet, further pre-training of SwAV on the target dataset does provide additional accuracy improvements, although often these improvements are minor. Our experiments suggest that a larger unlabeled target dataset yields larger improvements during this additional pre-training step.
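The data-limited comparison described in the second contribution requires fine-tuning each encoder on labeled training sets of several sizes. The sketch below shows one way such subsets could be drawn. It assumes nested subsets (each smaller set is contained in every larger one) and a fixed random seed, so that every encoder sees the same labeled samples at a given size. The function name, the subset sizes, and the nesting choice are illustrative assumptions, not details taken from the paper.

```python
import random


def nested_subsets(sample_ids, sizes, seed=0):
    """Draw nested random subsets of labeled samples (hypothetical helper).

    Returns a dict mapping each requested size to a list of sample ids.
    Every smaller subset is a prefix of every larger one, so results at
    different sizes differ only in how much labeled data was added.
    """
    shuffled = list(sample_ids)
    random.Random(seed).shuffle(shuffled)  # seeded, so the draw is repeatable
    subsets = {}
    for size in sorted(sizes):
        if size > len(shuffled):
            raise ValueError(f"requested {size} samples, only {len(shuffled)} available")
        subsets[size] = shuffled[:size]
    return subsets


# Illustrative sizes only; the paper's actual training-set sizes may differ.
subsets = nested_subsets(range(1000), sizes=[64, 256, 1000], seed=1)
for size, ids in subsets.items():
    print(size, len(ids))
```

Reusing the same `seed` for every encoder keeps the comparison paired: any difference in accuracy at a given size then comes from the pre-training method rather than from which samples were drawn.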

2. Materials and Methods

2.1. Experimental Design

2.2. Datasets

2.2.1. Building Segmentation

2.2.2. Solar Photovoltaic Array Detection

2.2.3. Field Delineation

3. Results

3.1. Representations from Self-Supervision on ImageNet Transfer Better to New Tasks Than Supervised Representations

3.2. Further Self-Supervised Pre-Training Is of Limited Benefit

3.3. The Number of Prototypes Used in Additional Pre-Training Should Match the Original Model’s

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. More Details on SwAV

Appendix B. Initializing a Model for Transfer Learning

Appendix C. Further Details on Applying SwAV

| Experiment # | Pre-Training Method | Stage 1 Pre-Training Dataset (via Weight Initialization) | Stage 2 Pre-Training Dataset |
|---|---|---|---|
| 1 | Supervised | ImageNet | None |
| 2 | Self-supervised (SwAV) | ImageNet | None |
| 3 | Self-supervised (SwAV) | None | Target |
| 4 | Self-supervised (SwAV) | ImageNet | Target |
| 5 | Self-supervised (SwAV) | ImageNet | Building + Field Delineation + Solar |
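The five pre-training configurations in the table above can be written down as a small configuration structure, which makes the two-stage design explicit. This is a minimal sketch: the class name, field names, and step descriptions are hypothetical, and the final fine-tuning step is inferred from the contributions listed in the Introduction rather than from the table itself.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PretrainConfig:
    """One row of the experiment table (field names are illustrative)."""
    experiment: int
    method: str                     # "supervised" or "swav"
    stage1_dataset: Optional[str]   # source of the initial weights, if any
    stage2_dataset: Optional[str]   # data for further pre-training, if any


EXPERIMENTS = [
    PretrainConfig(1, "supervised", "ImageNet", None),
    PretrainConfig(2, "swav", "ImageNet", None),
    PretrainConfig(3, "swav", None, "target"),
    PretrainConfig(4, "swav", "ImageNet", "target"),
    PretrainConfig(5, "swav", "ImageNet", "building + field delineation + solar"),
]


def pipeline_steps(cfg: PretrainConfig) -> List[str]:
    """List the training stages implied by a configuration, in order."""
    steps = []
    if cfg.stage1_dataset is not None:
        steps.append(f"initialize encoder from {cfg.method} weights ({cfg.stage1_dataset})")
    else:
        steps.append("initialize encoder without pre-trained weights")
    if cfg.stage2_dataset is not None:
        steps.append(f"continue {cfg.method} pre-training on {cfg.stage2_dataset} data")
    steps.append("fine-tune on the labeled target task")
    return steps


for cfg in EXPERIMENTS:
    print(cfg.experiment, " -> ".join(pipeline_steps(cfg)))
```

Written this way, Experiments 1 and 2 differ only in `method`, Experiments 2 and 4 differ only in whether a second pre-training stage is run, and Experiments 4 and 5 differ only in the data used for that stage.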

| Dataset | Train Set Size | Validation Set Size | Image Size | Task Type |
|---|---|---|---|---|
| Inria Aerial Image Labeling | 75,020 | 1024 | 5000 × 5000 (retiled to 224 × 224) | Semantic segmentation (pixelwise labeling) |
| Solar Photovoltaic Array Detection | 28,245 | 1024 | 5000 × 5000 (retiled to 224 × 224) | Semantic segmentation (scarce object detection) |
| Farm Parcel Delineation | 1572 | 198 | 224 × 224 | Semantic segmentation (boundaries delineation) |
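The table above notes that the 5000 × 5000 source images are retiled to 224 × 224. As an illustration of the arithmetic involved, the sketch below computes tile bounding boxes for a non-overlapping grid. The table does not specify the tiling scheme, so the stride, the absence of padding, and the discarding of the leftover border are all assumptions, and the function names are hypothetical.

```python
def tile_origins(image_size, tile_size, stride=None):
    """Start offsets of tiles along one axis; a partial tile at the edge is dropped."""
    stride = stride or tile_size  # default: non-overlapping tiles
    if tile_size > image_size:
        raise ValueError("tile is larger than the image")
    return list(range(0, image_size - tile_size + 1, stride))


def tile_boxes(height, width, tile=224, stride=None):
    """Return (top, left, bottom, right) boxes covering the image in row-major order."""
    return [
        (top, left, top + tile, left + tile)
        for top in tile_origins(height, tile, stride)
        for left in tile_origins(width, tile, stride)
    ]


boxes = tile_boxes(5000, 5000)
print(len(boxes))   # 484 tiles: 22 per axis, since 22 * 224 = 4928 <= 5000
print(boxes[-1])    # (4704, 4704, 4928, 4928); a 72-pixel border is left unused
```

Under these assumptions each source image yields 484 tiles. A different stride or padding scheme would change that count, so the figure should not be read as the paper's actual tiling.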

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Calhoun, Z.D.; Lahrichi, S.; Ren, S.; Malof, J.M.; Bradbury, K. Self-Supervised Encoders Are Better Transfer Learners in Remote Sensing Applications. Remote Sens. 2022, 14, 5500. https://0-doi-org.brum.beds.ac.uk/10.3390/rs14215500