Scale-Invariant Multi-Level Context Aggregation Network for Weakly Supervised Building Extraction

Abstract

1. Introduction

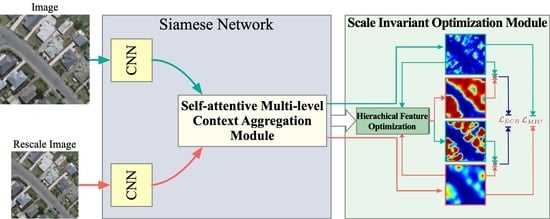

- A self-attentive method that effectively generates and aggregates multi-level CAMs is proposed to produce fine-structured CAMs;

- A scale-invariant optimization method that incorporates multi-scale supervision is proposed to improve the completeness of CAMs;

- A Siamese network that integrates the above two improvements with purpose-designed losses is introduced to narrow the supervision gap between fully and weakly supervised building extraction.

2. Related Works

2.1. Building Extraction

2.2. Weakly Supervised Semantic Segmentation

3. Scale-Invariant Multi-Level Context Aggregation Network

3.1. Prerequisites

3.2. Overall Network Architecture

3.3. Self-Attentive Multi-Level Context Aggregation Module

3.4. Scale-Invariant Optimization Module for Improving Integrity of CAMs

in Figure 4. The parameters of SIOM are learned by comparing optimized and unoptimized CAMs at two scales. The gradient propagation path of this module is detached from the classification network so that it does not interfere with learning the classification representation. By hierarchically optimizing non-local and local information, SIOM largely alleviates the problems of poorly delineated building edges and incomplete building regions, which improves the quality of the CAMs used for building extraction.
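As a rough illustration of what comparing CAMs at two scales can look like, the sketch below pools a full-scale CAM down to half resolution and measures its mean absolute difference from a CAM obtained at half scale. The L1 form, the 2x2 average pooling, and the names `avg_pool2x` and `scale_consistency_l1` are assumptions made for this example; they are not the loss defined in Section 3.5, and the gradient detachment described above is a property of the training framework that a NumPy sketch cannot show.

```python
import numpy as np

def avg_pool2x(cam: np.ndarray) -> np.ndarray:
    """Downsample an (H, W) map by 2 with non-overlapping 2x2 average pooling."""
    h, w = cam.shape
    assert h % 2 == 0 and w % 2 == 0, "sketch assumes even height and width"
    return cam.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def scale_consistency_l1(cam_full: np.ndarray, cam_half: np.ndarray) -> float:
    """Mean absolute difference between the pooled full-scale CAM and the half-scale CAM."""
    return float(np.abs(avg_pool2x(cam_full) - cam_half).mean())

# Toy 4x4 CAM (hypothetical values) and a matching half-scale CAM.
cam_full = np.array([[0.0, 0.2, 0.8, 1.0],
                     [0.0, 0.2, 0.8, 1.0],
                     [0.0, 0.0, 0.4, 0.4],
                     [0.0, 0.0, 0.4, 0.4]])
cam_half = avg_pool2x(cam_full)

loss_same = scale_consistency_l1(cam_full, cam_half)           # consistent across scales
loss_shifted = scale_consistency_l1(cam_full, cam_half + 0.1)  # half-scale CAM uniformly offset
print(loss_same, loss_shifted)
```

A penalty of this kind is zero only when the two scales agree, which is the intuition behind supervising one scale with the other.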

3.5. The Design of Loss Function

4. Experiments

4.1. Experimental Datasets

4.2. Experimental Setup

4.2.1. Methods for Comparison

- CAM-GAP: Zhou et al. [16] propose CAM-GAP for discriminative localization by adding a global average pooling layer to the network. It builds a generic localizable deep representation that can be applied to weakly supervised semantic segmentation (WSSS);

- GradCAM++: Chattopadhay et al. [23] propose GradCAM++, a gradient-based method that does not change the network structure. It aims to provide visual explanations for CNN-based models and can also be used for WSSS. It can be regarded as a generalization of CAM;

- WILDCAT: Durand et al. [26] introduce WILDCAT to simultaneously align image regions for spatial invariance and learn strongly localized features for WSSS. It uses a single generic training scheme for classification, object localization, and semantic segmentation;

- SPN: The superpixel pooling network (SPN), proposed by Kwak et al. [25], uses a superpixel segmentation of the input image as a pooling layout that works with low-level features for learning and inference in semantic segmentation. The network architecture decouples the semantic segmentation task into classification and segmentation, allowing the network to learn a class-agnostic shape prior from noisy annotations. It achieves outstanding performance on the challenging PASCAL VOC 2012 segmentation benchmark;

- WSF-Net: WSF-Net [56] is proposed for binary segmentation in remote sensing images and can handle class imbalance through a balanced binary training strategy. It introduces a feature fusion strategy to adapt to the characteristics of objects in remote sensing images. It achieves promising performance for water and cloud extraction;

- MSG-SR-Net: MSG-SR-Net is proposed by Yan et al. [29] for weakly supervised building extraction from high-resolution images. It integrates two modules, i.e., multiscale generation and superpixel refinement, to generate high-quality CAMs so as to provide reliable pixel-level training samples for subsequent semantic segmentation. It achieves excellent performance for building extraction;

- SEAM: The self-supervised equivariant attention mechanism (SEAM) is proposed by Wang et al. [24] for WSSS. It embeds self-supervision into the weakly supervised learning framework through equivariant regularization, which forces CAMs generated from variously transformed images to be consistent. It achieves state-of-the-art performance on the PASCAL VOC 2012 dataset;

- Ours–SIOM: The proposed method using only SIOM. The CAM is generated by GradCAM++ at the last convolutional layer;

- Ours–SMCAM: The proposed method using only SMCAM, including mutual learning between different scales ();

- Ours: The proposed network with SMCAM and SIOM.
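Most of the methods above build on a class activation map. As a minimal sketch of the CAM-GAP idea in [16], the map for a class can be computed as the sum of the last convolutional feature maps weighted by that class's weights in the linear layer following global average pooling. The function name `class_activation_map`, the toy arrays, and the ReLU plus max-normalization post-processing are assumptions made for illustration; this is not the implementation used in the experiments.

```python
import numpy as np

def class_activation_map(features: np.ndarray,
                         fc_weights: np.ndarray,
                         class_idx: int) -> np.ndarray:
    """Weighted sum of feature maps for one class.

    features:   (K, H, W) activations of the last convolutional layer.
    fc_weights: (C, K) weights of the linear layer that follows global average pooling.
    Returns an (H, W) map, clipped at zero and scaled to [0, 1] (assumed post-processing).
    """
    cam = np.tensordot(fc_weights[class_idx], features, axes=1)  # contract over K -> (H, W)
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy example with K = 2 feature maps of size 2x2 and C = 2 classes (hypothetical values).
features = np.array([[[1.0, 0.0],
                      [0.0, 0.0]],
                     [[0.0, 0.0],
                      [0.0, 2.0]]])
fc_weights = np.array([[1.0, 0.5],    # class 0 responds to both feature maps
                       [-1.0, 0.0]])  # class 1 is suppressed by the first feature map

cam_class0 = class_activation_map(features, fc_weights, 0)
cam_class1 = class_activation_map(features, fc_weights, 1)
print(cam_class0)
print(cam_class1)
```

Thresholding such a map is a common way to obtain pseudo-labels for training a segmentation network in WSSS pipelines.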

4.2.2. Evaluation Criteria

4.2.3. Implementation Details

4.3. Comparison of CAM Results

4.3.1. Results of Chicago Dataset

4.3.2. Results of WHU Dataset

4.4. Comparison of Building Extraction Results

5. Discussion

5.1. The Influence of Auxiliary Branches for Classification Network

5.2. Comparison of Different Fusion Strategies in SMCAM

5.3. Performance of Hierarchical Feature Optimization in SIOM

5.4. Effect of Scale Setting

5.5. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Maktav, D.; Erbek, F.; Jürgens, C. Remote Sensing of Urban Areas. Int. J. Remote Sens. 2005, 26, 655–659.

2. Tomás, L.; Fonseca, L.; Almeida, C.; Leonardi, F.; Pereira, M. Urban Population Estimation based on Residential Buildings Volume Using IKONOS-2 Images and Lidar Data. Int. J. Remote Sens. 2016, 37, 1–28.

3. Li, J.; Huang, X.; Tu, L.; Zhang, T.; Wang, L. A Review of Building Detection from Very High Resolution Optical Remote Sensing Images. GISci. Remote Sens. 2022, 59, 1199–1225.

4. Guo, H.; Shi, Q.; Marinoni, A.; Du, B.; Zhang, L. Deep Building Footprint Update Network: A Semi-supervised Method for Updating Existing Building Footprint from Bi-temporal Remote Sensing Images. Remote Sens. Environ. 2021, 264, 112589.

5. Haq, M.A. CNN Based Automated Weed Detection System Using UAV Imagery. Comput. Syst. Sci. Eng. 2022, 42, 837–849.

6. Kim, J.; Lee, M.; Han, H.; Kim, D.; Bae, Y.; Kim, H.S. Case Study: Development of the CNN Model Considering Teleconnection for Spatial Downscaling of Precipitation in a Climate Change Scenario. Sustainability 2022, 14, 4719.

7. Haq, M.A.; Khan, M.A.R.; Talal, A. Development of PCCNN-based network intrusion detection system for EDGE computing. Comput. Mater. Contin. 2022, 71, 1769–1788.

8. Haq, M.A.; Khan, M.A.R. DNNBoT: Deep neural network-based botnet detection and classification. Comput. Mater. Contin. 2022, 71, 1729–1750.

9. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

10. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017, arXiv:1706.05587.

11. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

12. Schuegraf, P.; Bittner, K. Automatic Building Footprint Extraction from Multi-resolution Remote Sensing Images Using a Hybrid FCN. ISPRS Int. J. Geo-Inf. 2019, 8, 191.

13. Feng, W.; Sui, H.; Hua, L.; Xu, C.; Ma, G.; Huang, W. Building Extraction from VHR Remote Sensing Imagery by Combining an Improved Deep Convolutional Encoder-decoder Architecture and Historical Land Use Vector Map. Int. J. Remote Sens. 2020, 41, 6595–6617.

14. Shao, Z.; Tang, P.; Wang, Z.; Saleem, N.; Yam, S.; Sommai, C. BRRNet: A Fully Convolutional Neural Network for Automatic Building Extraction from High-resolution Remote Sensing Images. Remote Sens. 2020, 12, 1050.

15. Ma, J.; Wu, L.; Tang, X.; Liu, F.; Zhang, X.; Jiao, L. Building Extraction of Aerial Images by a Global and Multi-scale Encoder-decoder Network. Remote Sens. 2020, 12, 2350.

16. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

17. Saleh, F.S.; Aliakbarian, M.S.; Salzmann, M.; Petersson, L.; Álvarez, J.M.; Gould, S. Incorporating Network Built-in Priors in Weakly-Supervised Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1382–1396.

18. Wei, Y.; Ji, S. Scribble-based Weakly Supervised Deep Learning for Road Surface Extraction from Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–12.

19. Ahn, J.; Kwak, S. Learning Pixel-Level Semantic Affinity with Image-Level Supervision for Weakly Supervised Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

20. Cao, Y.; Huang, X. A Coarse-to-fine Weakly Supervised Learning Method for Green Plastic Cover Segmentation Using High-resolution Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2022, 188, 157–176.

21. Zeng, Y.; Zhuge, Y.; Lu, H.; Zhang, L. Joint Learning of Saliency Detection and Weakly Supervised Semantic Segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

22. Du, Y.; Fu, Z.; Liu, Q.; Wang, Y. Weakly Supervised Semantic Segmentation by Pixel-to-Prototype Contrast. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 4320–4329.

23. Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Generalized Gradient-based Visual Explanations for Deep Convolutional Networks. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847.

24. Wang, Y.; Zhang, J.; Kan, M.; Shan, S.; Chen, X. Self-supervised Equivariant Attention Mechanism for Weakly Supervised Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

25. Kwak, S.; Hong, S.; Han, B. Weakly Supervised Semantic Segmentation Using Superpixel Pooling Network. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

26. Durand, T.; Mordan, T.; Thome, N.; Cord, M. WILDCAT: Weakly Supervised Learning of Deep Convnets for Image Classification, Pointwise Localization and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 642–651.

27. Chan, L.; Hosseini, M.S.; Plataniotis, K.N. A Comprehensive Analysis of Weakly-supervised Semantic Segmentation in Different Image Domains. Int. J. Comput. Vis. 2021, 129, 361–384.

28. Chen, J.; He, F.; Zhang, Y.; Sun, G.; Deng, M. SPMF-Net: Weakly Supervised Building Segmentation by Combining Superpixel Pooling and Multi-scale Feature Fusion. Remote Sens. 2020, 12, 1049.

29. Yan, X.; Shen, L.; Wang, J.; Deng, X.; Li, Z. MSG-SR-Net: A Weakly Supervised Network Integrating Multiscale Generation and Superpixel Refinement for Building Extraction from High-Resolution Remotely Sensed Imageries. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 15, 1012–1023.

30. Li, Z.; Zhang, X.; Xiao, P.; Zheng, Z. On the Effectiveness of Weakly Supervised Semantic Segmentation for Building Extraction from High-resolution Remote Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3266–3281.

31. Yan, X.; Shen, L.; Wang, J.; Wang, Y.; Li, Z.; Xu, Z. PANet: Pixelwise Affinity Network for Weakly Supervised Building Extraction From High-Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

32. Fang, F.; Zheng, D.; Li, S.; Liu, Y.; Zeng, L.; Zhang, J.; Wan, B. Improved Pseudomasks Generation for Weakly Supervised Building Extraction from High-Resolution Remote Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1629–1642.

33. Wang, J.; Shen, L.; Qiao, W.; Dai, Y.; Li, Z. Deep Feature Fusion with Integration of Residual Connection and Attention Model for Classification of VHR Remote Sensing Images. Remote Sens. 2019, 11, 1617.

34. Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction from an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2019, 57, 574–586.

35. Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Convolutional Neural Networks for Large-scale Remote-sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2016, 55, 645–657.

36. Maltezos, E.; Doulamis, A.; Doulamis, N.; Ioannidis, C. Building Extraction from LiDAR Data Applying Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2018, 16, 155–159.

37. Zhang, Z.; Wang, Y. JointNet: A Common Neural Network for Road and Building Extraction. Remote Sens. 2019, 11, 696.

38. Hosseinpour, H.; Samadzadegan, F.; Javan, F.D. CMGFNet: A Deep Cross-modal Gated Fusion Network for Building Extraction from Very High-resolution Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2022, 184, 96–115.

39. Li, Q.; Mou, L.; Hua, Y.; Shi, Y.; Zhu, X.X. CrossGeoNet: A Framework for Building Footprint Generation of Label-Scarce Geographical Regions. Int. J. Appl. Earth Obs. Geoinf. 2022, 111, 102824.

40. Yuan, W.; Wang, J.; Xu, W. Shift Pooling PSPNet: Rethinking PSPNet for Building Extraction in Remote Sensing Images from Entire Local Feature Pooling. Remote Sens. 2022, 14, 4889.

41. Chen, M.; Wu, J.; Liu, L.; Zhao, W.; Tian, F.; Shen, Q.; Zhao, B.; Du, R. DR-Net: An Improved Network for Building Extraction from High Resolution Remote Sensing Image. Remote Sens. 2021, 13, 294.

42. Guo, H.; Du, B.; Zhang, L.; Su, X. A Coarse-to-fine Boundary Refinement Network for Building Footprint Extraction from Remote Sensing Imagery. ISPRS J. Photogramm. Remote Sens. 2022, 183, 240–252.

43. Shu, Q.; Pan, J.; Zhang, Z.; Wang, M. MTCNet: Multitask Consistency Network with Single Temporal Supervision for Semi-supervised Building Change Detection. Int. J. Appl. Earth Obs. Geoinf. 2022, 115, 103110.

44. Xia, L.; Zhang, X.; Zhang, J.; Yang, H.; Chen, T. Building Extraction from Very-high-resolution Remote Sensing Images Using Semi-supervised Semantic Edge Detection. Remote Sens. 2021, 13, 2187.

45. Zheng, Y.; Yang, M.; Wang, M.; Qian, X.; Yang, R.; Zhang, X.; Dong, W. Semi-supervised Adversarial Semantic Segmentation Network Using Transformer and Multiscale Convolution for High-resolution Remote Sensing Imagery. Remote Sens. 2022, 14, 1786.

46. Li, Q.; Shi, Y.; Zhu, X.X. Semi-supervised Building Footprint Generation with Feature and Output Consistency Training. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

47. Meng, Y.; Chen, S.; Liu, Y.; Li, L.; Zhang, Z.; Ke, T.; Hu, X. Unsupervised Building Extraction from Multimodal Aerial Data Based on Accurate Vegetation Removal and Image Feature Consistency Constraint. Remote Sens. 2022, 14, 1912.

48. Chen, H.; Cheng, L.; Zhuang, Q.; Zhang, K.; Li, N.; Liu, L.; Duan, Z. Structure-aware Weakly Supervised Network for Building Extraction from Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

49. Wei, Y.; Feng, J.; Liang, X.; Cheng, M.M.; Zhao, Y.; Yan, S. Object Region Mining with Adversarial Erasing: A Simple Classification to Semantic Segmentation Approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1568–1576.

50. Lee, J.; Kim, E.; Lee, S.; Lee, J.; Yoon, S. FickleNet: Weakly and Semi-supervised Semantic Image Segmentation Using Stochastic Inference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5267–5276.

51. Jiang, P.T.; Yang, Y.; Hou, Q.; Wei, Y. L2G: A Simple Local-to-global Knowledge Transfer Framework for Weakly Supervised Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 16886–16896.

52. Kolesnikov, A.; Lampert, C.H. Seed, Expand and Constrain: Three Principles for Weakly-supervised Image Segmentation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 695–711.

53. Huang, Z.; Wang, X.; Wang, J.; Liu, W.; Wang, J. Weakly-supervised Semantic Segmentation Network with Deep Seeded Region Growing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7014–7023.

54. Ahn, J.; Cho, S.; Kwak, S. Weakly Supervised Learning of Instance Segmentation with Inter-pixel Relations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2209–2218.

55. Chang, Y.T.; Wang, Q.; Hung, W.C.; Piramuthu, R.; Tsai, Y.H.; Yang, M.H. Weakly-supervised Semantic Segmentation via Sub-category Exploration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8991–9000.

56. Fu, K.; Lu, W.; Diao, W.; Yan, M.; Sun, H.; Zhang, Y.; Sun, X. WSF-NET: Weakly Supervised Feature-fusion Network for Binary Segmentation in Remote Sensing Image. Remote Sens. 2018, 10, 1970.

57. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

58. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

59. Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

60. Su, H.; Jampani, V.; Sun, D.; Gallo, O.; Learned-Miller, E.; Kautz, J. Pixel-adaptive Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11166–11175.

61. Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can Semantic Labeling Methods Generalize to Any City? The Inria Aerial Image Labeling Benchmark. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium, Fort Worth, TX, USA, 23–28 July 2017; pp. 3226–3229.

62. Maaten, L.; Hinton, G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

63. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC Superpixels Compared to State-of-the-art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

| Method | WHU IoU (%) | WHU F1 (%) | WHU OA (%) | Chicago IoU (%) | Chicago F1 (%) | Chicago OA (%) |
|---|---|---|---|---|---|---|
| CAM-GAP | 41.85 | 59.01 | 84.56 | 36.17 | 53.13 | 73.72 |
| GradCAM++ | 42.73 | 59.88 | 84.13 | 35.74 | 52.66 | 73.29 |
| WILDCAT | 37.11 | 54.13 | 85.51 | 33.61 | 50.31 | 71.22 |
| SEAM | 51.83 | 68.27 | 90.97 | 46.82 | 63.78 | 79.56 |
| SPN | 40.79 | 57.94 | 85.31 | 44.76 | 61.84 | 75.93 |
| MSG-SR-Net | 53.76 | 69.93 | 91.90 | 51.25 | 67.77 | 80.98 |
| WSF-Net | 46.58 | 63.56 | 87.77 | 45.27 | 62.33 | 79.04 |
| Ours-SIOM | 51.91 | 68.34 | 91.78 | 47.48 | 64.39 | 80.38 |
| Ours-SMCAM | 54.38 | 70.45 | 92.21 | 51.22 | 67.74 | 80.01 |
| Ours | 58.04 | 73.45 | 93.29 | 52.68 | 69.01 | 81.53 |
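For readers who want to reproduce numbers of this kind, the sketch below computes IoU, F1, and overall accuracy (OA) for the building class from binary masks, assuming the standard confusion-matrix definitions (the section on evaluation criteria is not reproduced here, so these definitions are an assumption). The function name `binary_metrics` and the toy masks are hypothetical. Under these definitions F1 = 2·IoU / (1 + IoU), and the IoU and F1 columns above are consistent with that identity: an IoU of 58.04 gives an F1 of about 73.45.

```python
def binary_metrics(pred, truth):
    """Return (IoU, F1, OA) for the positive class from flat 0/1 sequences."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1      # building predicted, building in reference
        elif p and not t:
            fp += 1      # building predicted, background in reference
        elif t:
            fn += 1      # background predicted, building in reference
        else:
            tn += 1      # background in both
    total = tp + fp + fn + tn
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0
    oa = (tp + tn) / total if total else 0.0
    return iou, f1, oa

# Toy masks (hypothetical): 8 pixels, flattened.
pred = [1, 1, 1, 0, 0, 0, 0, 0]
truth = [1, 1, 0, 1, 0, 0, 0, 0]
iou, f1, oa = binary_metrics(pred, truth)
print(iou, f1, oa)

# F1 implied by the IoU of the last row of the table above.
f1_from_table_iou = 2 * 0.5804 / (1 + 0.5804)
print(round(100 * f1_from_table_iou, 2))
```

Because F1 is a monotonic function of IoU under these definitions, the two columns always rank methods identically; OA additionally rewards correctly labeled background.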

| Method | WHU IoU (%) | WHU F1 (%) | WHU OA (%) | Chicago IoU (%) | Chicago F1 (%) | Chicago OA (%) |
|---|---|---|---|---|---|---|
| CAM | 45.11 | 62.17 | 88.99 | 40.12 | 57.27 | 75.33 |
| GradCAM++ | 42.19 | 59.34 | 87.62 | 38.30 | 55.39 | 74.49 |
| WILDCAT | 30.28 | 46.49 | 64.33 | 28.38 | 44.21 | 67.11 |
| SEAM | 55.44 | 71.33 | 91.94 | 51.27 | 67.79 | 81.56 |
| SPN | 43.25 | 60.38 | 86.60 | 50.40 | 67.02 | 82.35 |
| MSG-SR-Net | 57.07 | 72.67 | 92.34 | 57.68 | 73.16 | 83.28 |
| WSF-Net | 49.60 | 66.31 | 91.03 | 50.15 | 66.80 | 80.24 |
| Ours | 63.39 | 77.59 | 94.57 | 58.87 | 74.11 | 84.36 |

| Position | Subnetwork 1 (I) Training Acc. | Subnetwork 1 (I) Testing Acc. | Subnetwork 2 () Training Acc. | Subnetwork 2 () Testing Acc. |
|---|---|---|---|---|
| Branch 1 | 0.971 | 0.980 | 0.975 | 0.972 |
| Branch 2 | 0.990 | 0.993 | 0.991 | 0.989 |
| Branch 3 | 0.992 | 0.995 | 0.995 | 0.995 |
| Branch 4 | 0.993 | 0.994 | 0.992 | 0.995 |
| Trunk | 0.990 | 0.992 | 0.992 | 0.989 |

| Method | IoU (%) | F1 (%) | OA (%) |
|---|---|---|---|
| Last Conv. | 48.63 | 65.44 | 90.01 |
| Addition | 51.73 | 68.19 | 92.07 |
| Attention | 54.38 | 70.45 | 92.21 |
| Last Conv. + SIOM (Ours-SIOM) | 51.91 | 68.34 | 91.78 |
| Addition + SIOM | 56.48 | 72.19 | 92.98 |
| Attention + SIOM (Ours) | 58.04 | 73.45 | 93.29 |

| Method | IoU (%) | F1 (%) | OA (%) |
|---|---|---|---|
| GradCAM++ (4 levels) | 49.75 | 66.44 | 90.01 |
| SEAM (SMCAM + PCM) | 55.17 | 71.11 | 92.07 |
| SIOM (Stage 1) | 55.69 | 71.54 | 92.61 |
| SIOM (Stage 1 + 2) | 56.12 | 71.90 | 93.11 |
| SIOM (Stage 1 + 2 + 3) | 58.04 | 73.45 | 93.29 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, J.; Yan, X.; Shen, L.; Lan, T.; Gong, X.; Li, Z. Scale-Invariant Multi-Level Context Aggregation Network for Weakly Supervised Building Extraction. Remote Sens. 2023, 15, 1432. https://0-doi-org.brum.beds.ac.uk/10.3390/rs15051432
